Hardware evolution - from CPU to GPU

The current move toward blockchain technology and deep learning could shift the focus of the microprocessor industry from general application performance to neural network performance. Computer processors were not originally designed for such complex tasks.

x86 processors were designed for general workloads such as internet browsing, mobile apps, and video streaming, which are based on integer operations and tend to be sequential in nature. In contrast, deep learning workloads are based on floating-point (non-integer) operations and are highly parallel.
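To make the contrast concrete, here is a minimal Python sketch (not from the original post; every function name is hypothetical). `integer_chain` stands in for a sequential integer workload: each step needs the previous result. `dense_layer` is one fully connected neural-net layer, y = W·x + b: each output is an independent floating-point dot product, so all outputs could be computed at once. `dense_layer_parallel` hands each output to a separate worker only to show that the work splits cleanly. It is an illustration under these assumptions, not a benchmark.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


def integer_chain(seed: int, steps: int) -> int:
    """Sequential integer work: every step needs the previous result."""
    x = seed
    for _ in range(steps):
        # Step n+1 cannot start until step n has finished,
        # so this loop cannot be split across many cores.
        x = (x * 31 + 7) % 1_000_003
    return x


def neuron(weights: list[list[float]], x: list[float], bias: list[float], i: int) -> float:
    """One output of a dense layer: a floating-point dot product plus a bias."""
    return sum(w * xi for w, xi in zip(weights[i], x)) + bias[i]


def dense_layer(weights: list[list[float]], x: list[float], bias: list[float]) -> list[float]:
    """Floating-point layer y = W.x + b, computed one neuron after another."""
    return [neuron(weights, x, bias, i) for i in range(len(weights))]


def dense_layer_parallel(weights: list[list[float]], x: list[float], bias: list[float],
                         workers: int = 4) -> list[float]:
    """Same layer, with each neuron dispatched to a separate worker thread.

    No neuron depends on another, so they can run in any order or all at once.
    CPython threads will not speed up this pure-Python math (the GIL serializes it);
    the point is only that the work divides cleanly, which is what GPUs exploit.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, regardless of completion order.
        return list(pool.map(lambda i: neuron(weights, x, bias, i), range(len(weights))))


W = [[0.5, -1.0], [2.0, 0.25]]
x = [1.0, 2.0]
b = [0.1, -0.1]

print(integer_chain(1, 3))            # 36742
print(dense_layer(W, x, b))           # approximately [-1.4, 2.4]
print(dense_layer_parallel(W, x, b))  # same values as dense_layer
```

Real networks have layers with thousands of neurons and millions of weights, which is why hardware that runs many floating-point multiply-adds side by side pays off.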

Hence deep learning demands different processor designs, specifically ones with high floating-point performance. Today, the processors with the highest floating-point throughput are Graphics Processing Units (GPUs).

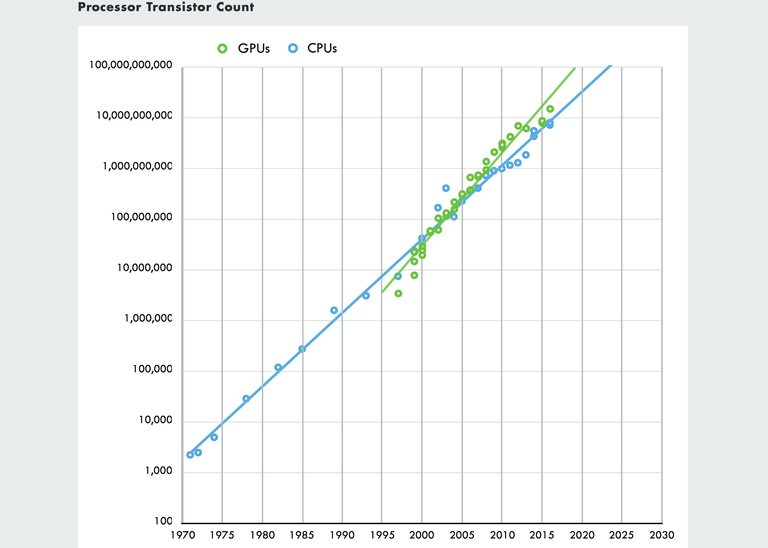

As shown above, since the mid-2000s GPUs have outstripped Central Processing Units (CPUs) in transistor count, a rough measure of chip complexity and potential performance. NVIDIA's Pascal P100 GPU, for example, incorporates 15 billion transistors, almost double that of Intel's "Knights Landing" Xeon Phi processor.

Because GPUs are designed for floating-point-intensive graphics workloads, most of their transistors are devoted to floating-point operations, whereas CPUs serve a variety of workloads and can devote only a portion of their transistor budget to floating-point operations.

Deep learning is a distinctive enough workload to justify a new architecture and the daunting cost of ongoing chip development.

Moving forward, deep learning could eventually reside on-chip as an acceleration block, similar to the way graphics is handled today.

It's crazy to think that the gaming industry created the GPUs which have taken us out of the last "AI winter". Just a bunch of dorks playing video games. Now those same GPUs are powering the machine learning revolution. :P Thank you for the post!

Yes, it's funny how technology adapts. It will be interesting to watch how the whole new craze for mining coins with GPUs is going to benefit AI and deep learning capabilities.

AMD and nVidia have already begun to develop graphics cards for crypto mining, without monitor connectors and recently even with increased durability for non-stop mining. The next step might be the MPU, the mining processor unit. Or maybe something more general that benefits AI even more than current GPUs. What are your thoughts on that?

Looks like AMD and Nvidia are best placed to push tech into the next phase. I've read that both AMD and Nvidia are looking to release bare-bones GPUs designed specifically for cryptocurrency mining. These stripped-down GPUs will have lower specs than their gaming counterparts, yet be ASIC-engineered to meet the demands of currency mining.

I am not sure if that is a good thing. This whole mining business creates a lot of demand for energy and rare metals. On the other hand, we waste resources on worse things, so here at least we are pushing decentralized business and great blogging platforms that pay us.

Congratulations @evolutionlabs! You have completed some achievement on Steemit and have been rewarded with new badge(s).
