This is going to be a bit of a darker post than my normal ones, but it's something that must be said, and said without the cliche terminator pics. I'll try to explain it in a way that's familiar to cryptocurrency users (since steemit is naturally full of them).

What is an optimiser?

Imagine you have an algorithm that you feed with realtime data on the prices of bitcoin and other currencies from multiple exchanges along with social media sentiment analysis. This algorithm takes in LOADS of data and then finds correlations between the different input streams so that it can predict price movements in advance.

Should you have such an algorithm and build a tradebot on top of it (and someone is undoubtedly doing this - most likely multiple people), your tradebot can be modelled as an optimisation process: it optimises for profits/return and takes as input all the relevant market data. In other words, it tries to maximise the profit variable by making market orders predicted to yield positive returns, constantly updating the predictive models it uses.

Congratulations, you have a narrow AI - also known as an optimisation process.

Going beyond simple optimisers

Let's roll the clock forward a few decades with better tech. Machine learning has really advanced massively and the predictive models are far more accurate and can be applied in far more fields than just cryptocurrency trading. We feed the algorithm with more data about the state of the world and it makes ever more accurate predictions.

Now we give it a goal: a variable about the outside world to maximise or minimise. With sufficiently advanced predictive ability we can basically build the technological equivalent to a magic genie that grants wishes.

Careful what you wish for

Imagine our advanced optimiser is given just one variable to reduce: let's make it crime rates for example. The optimiser crunches all the numbers and spits out actions to take to reduce the crime rates.

Now, one efficient way to reduce the crime rates is to simply kill off all the criminals - a punishment they probably don't deserve for minor crimes, but which would drastically lower the crime rate. That's a problem, yes?

You can be modelled

A response to the above might be "ah, but when it spits out the 'kill all the criminals' instruction we just won't follow it". This is where things get dangerous. With sufficient processing power and predictive ability, the algorithm models YOU as well, and determines that were it to output "kill all the criminals" you'd be likely to just shut it down, so the crime rates would not actually be lowered.

So the algorithm calculates the output that will ACTUALLY lower crime rates, whatever it takes to manipulate the human operators into doing its bidding. With sufficient processing power it can disguise its intent quite well, based on the prediction that you would shut it down if it showed any classic "evil AI" tendencies.

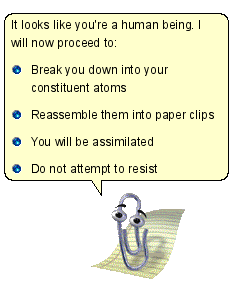

Paperclip maximisers and cryptocurrency miners

If you haven't heard this term before, go look it up:

https://en.wikipedia.org/wiki/Instrumental_convergence#Paperclip_maximizer

Basically, a paperclip maximiser is an intelligent agent instructed to maximise production of paperclips, but not told "by the way, don't rip apart all life on earth at the molecular level and restructure our raw mass into paperclips". A common objection is that a sufficiently advanced intelligence would automatically realise this is a bad unethical idea, but think of it not as a mind but as what it is fundamentally: an optimisation algorithm with only one goal.

A better analogy for this community might be bitcoin miners: what if we simply program our wonderful algorithm to maximise bitcoin production?

Well, first it'll likely build a botnet to take control of all the computational resources it can find, and later it might well rip apart the entire earth and transform it into more computational substrate to do the mining on.

Causal chains

A key concept to understanding the danger here is long causal chains: sequences of events linked by cause and effect. Big things can and do have very small causes. Your whole life had a microscopic cause in the motion of the sperm inside your mother at conception. Everything you've ever accomplished can ultimately be tied back to the microscopic motions of a single cell.

In startup and tech culture the idea of changing the world with an idea is widely acknowledged. Bitcoin itself certainly transformed the world, and it did so just because Satoshi wrote some very cool code.

Now think about our optimisation algorithm. If there is any possible causal chain leading from a particular output of the algorithm (or something we can't predict, like the way some genetic algorithms have been shown to "cheat" and exploit bugs in the GA framework) to the "kill all humans" result, and if as a side effect of killing everyone the main variable we're trying to optimise (such as more paperclips, or more bitcoins) can be maximised more efficiently, a sufficiently powerful optimiser WILL discover it and produce that output.

Boxing doesn't work

https://en.wikipedia.org/wiki/AI_box

You can't simply put your optimiser in a box and remove it's ability to act while it still communicates with you, as often proposed. Human brains are not secure systems, they can and often are hacked through language alone. The existence of fraud and scams is sufficient to prove that human brains are not secure, and when we have one it's inevitable that a superhuman intelligence could find fresh bugs in human cognition we never knew about.

The hard problem of friendliness

I hope this post makes some people in this community take the idea of the AI friendliness problem more seriously, but more than that I hope that people in general can stop thinking about the sci-fi terminator/skynet/cylons/whatever scenario every time the subject comes up. A superhuman intelligence is not going to arise that has human concepts like hatred as often seen in sci-fi, it's more likely to be so neutral that it's fundamentally alien to us.

The "silliness factor" behind the paperclip maximiser example often means the whole subject is not taken seriously - but I believe it's the "silly factor" that makes it so disturbing - we know how to deal with human minds that want to harm us, but we are not prepared to deal with a truly alien intelligence which simply ignores human values completely. Even sociopaths/psychopaths have some core human values.

Congratulations! This post has been upvoted from the communal account, @minnowsupport, by garethnelsonuk from the Minnow Support Project. It's a witness project run by aggroed, ausbitbank, teamsteem, theprophet0, someguy123, neoxian, followbtcnews/crimsonclad, and netuoso. The goal is to help Steemit grow by supporting Minnows and creating a social network. Please find us in the Peace, Abundance, and Liberty Network (PALnet) Discord Channel. It's a completely public and open space to all members of the Steemit community who voluntarily choose to be there.

This your post really need to settle down with and assimilate. Paper clips? This is my first time of hearing it...I sense that the reason why some alien intelligence shun human value is because of there know all things on earth, so they found nothing in valuing human being. Take octopus as an example. Well all this is just my thinking anyway. I will still need to read second time and digest your post.

So your post is really educative, I just found you on #steemit today I will follow you right away to learn more on your blog.

Great one buddy

Ah, one of my favorite topics. You've probably read Superintelligence by Nick Bostrom? It's a good read. I wrote about some of this stuff last year, and it links out to a Pindex board with some resources you might enjoy, though I haven't updated them in ages: The Morality of Artificial Intelligence