The Best AI Trend Is Yet To Come

A complete overview of all AI trends (we know of) coming at you

Title Image by Author, License for background held through Envato Elements

AI has made incredible progress over the last decade, and better tools and models are being developed every day. From GPU acceleration to progress in Natural Language Processing, we have seen accelerators and enablers take shape and attract huge amounts of investment in the recent past. DeepMind showed us again just this week that things thought to be impossible for another decade can become reality in no time.

Ranging from Smart Robots to Neuromorphic Hardware, we will have a look at the top 13 AI trends that will be on everyone's mind from now until 2025.

I am in no way affiliated with any of the following companies. All of the following technologies are listed in the Innovation Trigger category by Gartner.

1. Digital Ethics

However, the risks are also great, as the allure of using sensitive information can be strong enough to blind us to the downsides. Governments and companies need to address several ethical questions and find ways to capitalize on information while designing best practices that respect people’s privacy and maintain trust. The worst-case scenario in my mind is a public that fundamentally distrusts AI and stalls its development.

Think about weapons, fake media, social media bots, and deepfakes, and we haven’t even started with what state actors might be up to behind the scenes.

Screenshot from Google

2. Knowledge Graphs

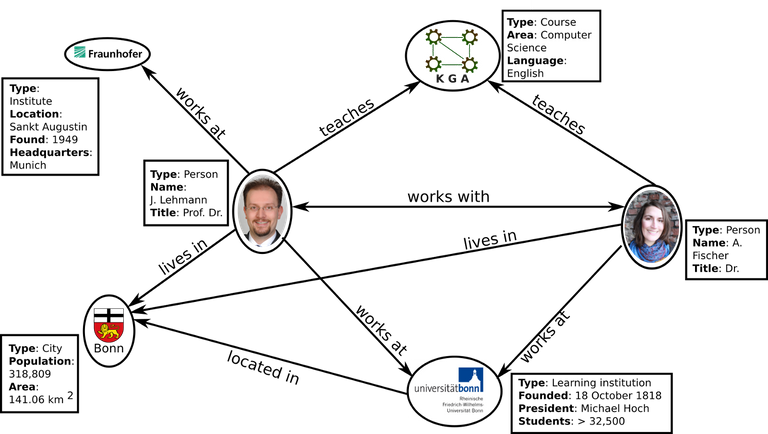

Knowledge Graphs have been around for over 20 years. The basic idea is that of taxonomies (ontologies, more precisely): classifying and grouping information. Knowledge Graphs are the more conservative approach to intelligent systems, built on clear rules and highly interpretable. Combinations of new Deep Learning-based methodologies and graph databases have contributed to the modern hype around these structures.

Example of a Knowledge Graph. Source: GitHub

They are often used in areas where transfer learning is needed or where understanding context is crucial. Google is probably the best-known user: its knowledge graphs power many services, such as the search engine and voice assistants.
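To make the idea a bit more concrete, here is a minimal sketch of a knowledge graph stored as subject-predicate-object triples. The `KnowledgeGraph` class, the predicate names (`is_a`, `developed_by`), and the example facts are my own illustrative assumptions; real systems typically sit on a graph database and a proper ontology language rather than a Python dictionary.

```python
from collections import defaultdict


class KnowledgeGraph:
    """A tiny in-memory knowledge graph of (subject, predicate, object) triples."""

    def __init__(self):
        # Index layout: subject -> predicate -> set of objects
        self._index = defaultdict(lambda: defaultdict(set))

    def add(self, subject, predicate, obj):
        self._index[subject][predicate].add(obj)

    def objects(self, subject, predicate):
        """Return all objects directly linked to `subject` via `predicate`."""
        # .get() avoids creating empty entries when looking up unknown subjects
        return set(self._index.get(subject, {}).get(predicate, set()))

    def is_a(self, entity, category):
        """Follow 'is_a' edges transitively to check category membership."""
        seen, stack = set(), [entity]
        while stack:
            node = stack.pop()
            if node == category:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(self.objects(node, "is_a"))
        return False


# Hypothetical example facts
kg = KnowledgeGraph()
kg.add("Siri", "is_a", "voice assistant")
kg.add("voice assistant", "is_a", "intelligent application")
kg.add("Siri", "developed_by", "Apple")

print(kg.is_a("Siri", "intelligent application"))
print(kg.objects("Siri", "developed_by"))
```

The transitive `is_a` lookup is where the interpretability comes from: every answer can be traced back to explicit edges that a human wrote down or reviewed.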

3. Intelligent Applications

Intelligent apps, aka I-apps (I wonder why Apple doesn’t hold that patent ;)), are apps that use intelligence in any form. This includes Artificial Intelligence, Big Data, and everything you can sell under the umbrella term AI. The big promise here is applications that get to know their users and learn how to serve them better through continuous use.

The best-known applications of this type are Cortana, Siri, and the other assistants; Ada, the healthcare app; and AI Dungeon, a multiplayer text adventure game that uses artificial intelligence to generate unlimited content. I asked AI Dungeon what it thinks about our stories so far, and this was its answer.

Example from the I-app AI Dungeon. Yes, it really wrote that.

4. Deep Neural Network ASICs

Many years ago, AI models were mainly trained on CPUs. The real breakthrough came after heavily optimized and parallelized code started running on GPUs. Google decided this trend should not end there and came up with TPUs (Tensor Processing Units), specialized chips tuned for the computations common in its AI framework TensorFlow.

An ASIC (application-specific integrated circuit) is a chip customized for a particular use. Loosely speaking, a GPU can be thought of as a chip optimized for graphical calculations, aka parallel matrix multiplications. The trend here is to design ASICs that are highly specific to a particular purpose. We could develop one that handles exactly the requirements of a Tesla car or one perfectly adapted for face recognition.

5. Data Labeling and Annotation Services

To validate systems and allow them to learn, we first need to know ourselves what is right. Just having data is often not enough. In most cases, we also need labels describing the data. As in the image below, a machine would first need to be told that the subject is wearing a mask before it could ever learn to distinguish mask wearers from non-mask wearers.

Image of the author testing a Mask Detection AI

This is where data labeling and annotation services come in.

Step 1: Upload thousands of photos.

Step 2: Qualified users will tell you what is in the image. Low-skilled workers for simple labels such as mask vs. no mask; doctors for labels such as the type of cancer shown in a radiology image.
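Since several people usually label the same photo, the answers need to be merged somehow. Below is a minimal sketch of one common approach, a majority vote with an agreement threshold. The function name `aggregate_labels`, the 0.6 threshold, and the file names are illustrative assumptions, not how any particular labeling service works.

```python
from collections import Counter


def aggregate_labels(annotations, min_agreement=0.6):
    """Majority vote per item; items with too little agreement go to review.

    annotations: dict mapping item id -> list of labels from different annotators
    Returns (accepted, needs_review).
    """
    accepted, needs_review = {}, []
    for item_id, labels in annotations.items():
        if not labels:
            # Nobody labeled this item yet
            needs_review.append(item_id)
            continue
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            accepted[item_id] = label
        else:
            needs_review.append(item_id)
    return accepted, needs_review


# Hypothetical answers from three annotators (two for the last photo)
annotations = {
    "photo_001.jpg": ["mask", "mask", "mask"],
    "photo_002.jpg": ["no_mask", "no_mask", "mask"],
    "photo_003.jpg": ["mask", "no_mask"],
}
accepted, needs_review = aggregate_labels(annotations)
print(accepted)
print(needs_review)
```

Items that land in `needs_review` are the ones you would send to more annotators, or to an expert, before using them for training.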

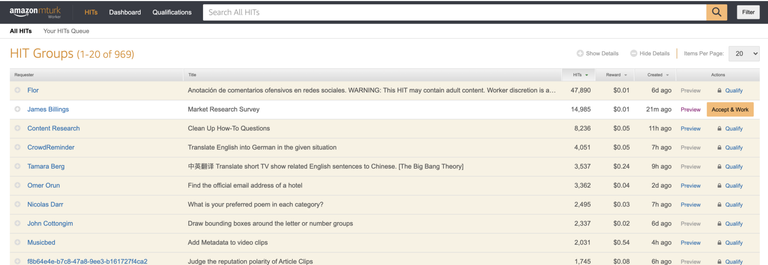

As you might imagine, this could become a pretty huge business in the coming years. Amazon’s Mechanical Turk is probably the best known. It allows you to earn money by labeling data or answering surveys.

Amazon’s Mechanical Turk Dashboard Screenshot.

6. Smart Robots

Humanity's last dream, or its first? Since I was a little boy, I have dreamed about humanoid robots doing mundane tasks for me: cooking, cleaning, and assembling Ikea furniture.

According to Yahoo Finance, the market for Smart Robots is expected to exceed $23 billion by 2025. While it is still a long road to the types of robots I imagined as a child, current developments are interesting, to say the least. Industrial smart robots work the assembly lines for many products, from cars to furniture. Their usage is not expected to reach a plateau soon.

The most fascinating current inventions are not toys for children but for adults. Sex robots have been in the news for many years, and apparently, they are making money. Personally, I would not spend $5,000+ on creepy dolls like these, but then again, who am I to judge?

7. AI Developer and Teaching Kits

AI kits is an umbrella term for instructions, examples, and software development kits that help developers and students understand and implement AI solutions. Such kits are currently in their infancy, and we can expect them to become more common in the next few years. Their goal is to bring developers and students to a level of knowledge where they can use AI and contribute to its adoption productively.

Current examples of developer kits include the Atlas 200 DK AI from Huawei and Intel's AI on the PC Development Kit.

On the teaching front, the Magician Lite from Dobot tops my list of things I never needed but always wanted ;). Be sure to have a look.

8. AI Governance

AI Governance is the process of evaluating and monitoring algorithms. This may include things such as bias, ROI, risk, effectiveness, and any other metrics that we come up with in the future.

The main issue here is time. At the time of development, AI developers have to make assumptions based on data available to them right now. But what happens 5 years later after a possible black swan event such as COVID? Do your flight recommendation engine's base assumptions still hold?

The result is missed opportunities and bad decisions based on ancient assumptions. This is why we need AI governance plans. In short, such a plan should include at least:

- AI model definition: What is the purpose of the AI?

- AI model management: What can each model do, and what department is using it for what?

- Data governance: How is the data transformed? In which countries can you use it? May it be duplicated, and for which purposes?
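The three points above could be captured in something as simple as a register with one record per model. The sketch below is only an illustration under my own assumptions: the `ModelRecord` class, its field names, and the yearly review interval are hypothetical and not taken from any governance standard.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional, Set


@dataclass
class ModelRecord:
    """One entry in a hypothetical AI governance register."""
    name: str
    purpose: str      # AI model definition: what is the model for?
    department: str   # AI model management: who uses it?
    allowed_countries: Set[str] = field(default_factory=set)  # data governance
    last_reviewed: Optional[date] = None
    review_interval_days: int = 365  # assumed policy, not a standard

    def review_overdue(self, today: date) -> bool:
        """True if the model's assumptions have not been re-checked in time."""
        if self.last_reviewed is None:
            return True
        return (today - self.last_reviewed).days > self.review_interval_days

    def may_use_in(self, country: str) -> bool:
        return country in self.allowed_countries


# Hypothetical entry for the flight recommendation engine mentioned earlier
record = ModelRecord(
    name="flight-recommender",
    purpose="Recommend flights to returning customers",
    department="Sales",
    allowed_countries={"DE", "CH"},
    last_reviewed=date(2019, 6, 1),
)

print(record.review_overdue(date(2020, 12, 1)))
print(record.may_use_in("US"))
```

The point of the `review_overdue` check is the time problem described above: a model that was reviewed before a black swan event should be flagged automatically rather than trusted by default.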

For further reading, I can recommend Getting started with data governance by TDWI.

9. Augmented Intelligence

Augmented Intelligence refers to using AI to enhance the intelligence and productivity of humans. Instead of replacing workers, it strives to develop tools that help them become more efficient and effective.

Current examples include portfolio management software that enables financial planners to offer their customers custom-tailored solutions. Or assisting healthcare professionals in choosing the right drug for the right patient.

“Decision support and AI augmentation will surpass all other types of AI initiatives” — Gartner

While that is most certainly a question of which solution belongs in which category, it is definitely a strong statement.

10. Neuromorphic Hardware

Neuromorphic hardware refers to specialized computing hardware that was designed with neural networks in mind from the beginning. Dedicated processing units emulate the neurons inside the hardware and are interconnected in a web-like manner for fast information exchange.

So what's the difference? Currently, most processors implement the von Neumann architecture. This architecture has proven to be ideal for most tasks we were interested in over the last couple of decades. AI is different in terms of computation; it is heavily parallelized and requires decentralized memory accesses. This is where neuromorphic hardware sees an opening for more efficiency and speed. There is a huge variety of such architectures for different purposes and no one-size-fits-all approach.
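To give a feeling for what "emulating a neuron" can mean, here is a software sketch of a leaky integrate-and-fire neuron, one common spiking neuron model. This is purely my own illustration: the function `simulate_lif` and its parameter values are assumptions, and it says nothing about how any specific neuromorphic chip is built.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    input_current: sequence of input values, one per time step
    Returns a list with 1 where the neuron spiked and 0 elsewhere.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # The membrane potential decays ("leaks") and integrates the new input
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # fire a spike ...
            potential = reset  # ... and start over
        else:
            spikes.append(0)
    return spikes


strong_input = simulate_lif([0.3] * 10)
weak_input = simulate_lif([0.05] * 10)
print(strong_input)
print(weak_input)
```

The neuron only communicates when its potential crosses the threshold, so a weak input produces no activity at all. That event-driven behavior is one reason such designs are hoped to be more efficient than pushing every value through a central processor.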

11. Responsible AI

I personally realized how important this is only after reading Weapons of Math Destruction. When building systems, the developers themselves often barely understand what happens when “normal” people use them. In her book, the author gives the example of schools that used an automated teacher evaluation system. The issue was that no one understood the score it gave to teachers. This resulted in good teachers in bad neighborhoods being fired based on results outside their control. When they objected, no one was around to explain the results, which were deeply statistical in nature. This is only one of many areas where we should proceed with care, and it clearly highlights why we should monitor what our AI models are used for. The following video from Microsoft highlights the key components to think about.

12. Small Data

We have all heard of Big Data and how you can improve a model by simply giving it more quality data to train on. Currently, we mostly see that an inferior model can easily outperform a better model by simply using more data. But what if we don’t have more data? Or we have a lovely use case, but there is not enough data, and we need to gather and label everything from scratch?

For example, we might want to automatically transcribe handwritten notes and drawings from mechanics. Probably no dataset exists that would allow us to train the entire system on the real deal. So what can we do? This is where transfer learning comes in. We could first train our model on drawings and handwritten notes in general. Once the model has learned how to interpret words and drawings in general, we would show it the real deal, our Small Data from mechanics.

More and more papers are coming out that piece together how and in what order such a system should be trained. Transfer learning surely is here to stay.
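The pretrain-then-fine-tune idea can be shown with a toy example. In the sketch below, a two-parameter linear model stands in for a real network: it is first trained on a large "general" dataset and then fine-tuned on three "small data" points from a related task. All numbers, dataset names, and the helper functions `train` and `mse` are made up for illustration; real transfer learning reuses the weights of a deep network, not a straight line.

```python
def train(data, w=0.0, b=0.0, lr=0.01, epochs=20):
    """Fit y = w*x + b with plain gradient descent on the mean squared error."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(data, w, b):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Large "general" dataset (hypothetical): y = 5x + 20
general = [(i / 10, 5.0 * (i / 10) + 20.0) for i in range(100)]
# Small target dataset from a related task (hypothetical): y = 5.2x + 19
small = [(x, 5.2 * x + 19.0) for x in (1.0, 3.0, 5.0)]
held_out = [(x, 5.2 * x + 19.0) for x in (2.0, 4.0, 6.0, 8.0)]

# Step 1: pretrain on the general data with a generous training budget
pre_w, pre_b = train(general, epochs=2000)

# Step 2: fine-tune on the small data, starting from the pretrained weights
fine_tuned = train(small, w=pre_w, b=pre_b)

# Baseline: same small data and same budget, but starting from scratch
scratch = train(small)

print("fine-tuned error:  ", mse(held_out, *fine_tuned))
print("from-scratch error:", mse(held_out, *scratch))
```

With the same three data points and the same 20 training steps, the model that starts from the pretrained weights ends up much closer to the target than the one that starts from zero, which is the whole promise of Small Data approaches.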

13. AI Marketplaces

Sharing is caring; AI developers know this and openly share knowledge through thousands of publications every year. While sharing knowledge is great, sharing the entire model is even better. The basic concept is that after training a model on your data, you upload it to a website, and then users can pay to use it. I have dedicated an entire video to this topic and think this trend is too huge to be ignored, have a look at

Conclusion

The world is moving faster than ever. We are in the most exciting epoch of technological progress, and AI is a big part of it. We got to know the most exciting trends that should help AI deliver on its promise and accelerate progress across all industries applying it. While we won’t see most of these trends in full action until 2025, we can expect some of them to become an integral part of our lives.

If you enjoyed this article, I would be excited to connect on Twitter or LinkedIn.

Make sure to check out my YouTube channel, where I will be publishing new videos every week.
