One of the biggest pieces of news this week was, of course, that OpenAI released GPT-4 to ChatGPT Plus subscribers with limited capacity. At launch, the cap was 100 requests every 4 hours, but it has been lowered over the course of the week. At the time of writing, you can only send 25 requests every 3 hours.

GPT-4 gives answers with fewer factual errors than its predecessor, GPT-3.5, and it shows improvements across many tasks. Alongside the model's release on ChatGPT, OpenAI also published a 99-page technical report on GPT-4. However, many researchers in the AI community have criticized the report as a fluff piece masquerading as research, because it deliberately leaves out details about the model's architecture (including model size), hardware, training compute, dataset construction, training method, and similar.

There is also a 32K-token version, currently available only to researchers. 32K tokens is roughly 50 pages of text. In the GPT-4 demo, the presenter pasted the entire discord.py documentation into GPT-4, which then used that documentation to fix errors in the code it had written. GPT-4 can also understand images, but this ability is likewise limited to researchers for now.
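Where does "roughly 50 pages" come from? Here is a quick back-of-the-envelope sketch. It assumes the "32K" window is 32,768 tokens, that one token is about 0.75 English words (a common rule of thumb, not an exact figure), and that a single-spaced page holds about 500 words. All three numbers are assumptions, so treat the result as a ballpark.

```python
# Back-of-the-envelope conversion from tokens to pages.
# Assumptions (rules of thumb, not exact figures):
#   - the "32K" context window is 32,768 tokens
#   - one token is roughly 0.75 English words
#   - one single-spaced page holds roughly 500 words

CONTEXT_TOKENS = 32_768
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500


def tokens_to_pages(tokens: int,
                    words_per_token: float = WORDS_PER_TOKEN,
                    words_per_page: int = WORDS_PER_PAGE) -> float:
    """Estimate how many pages of English text fit in `tokens` tokens."""
    words = tokens * words_per_token  # tokens -> approximate word count
    return words / words_per_page     # words -> approximate page count


if __name__ == "__main__":
    pages = tokens_to_pages(CONTEXT_TOKENS)
    print(f"{CONTEXT_TOKENS} tokens ≈ {pages:.0f} pages")
```

With these assumptions, 32,768 tokens works out to about 24,600 words, or just under 50 pages. Code and non-English text usually take more tokens per word, so the real page count can be lower.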

While all the excitement around GPT-4 was going on, another piece of news flew under the radar. Stanford's Alpaca 7B is a fine-tuned model based on Meta's LLaMA 7B. So far, that doesn't sound particularly newsworthy; it is just another model.

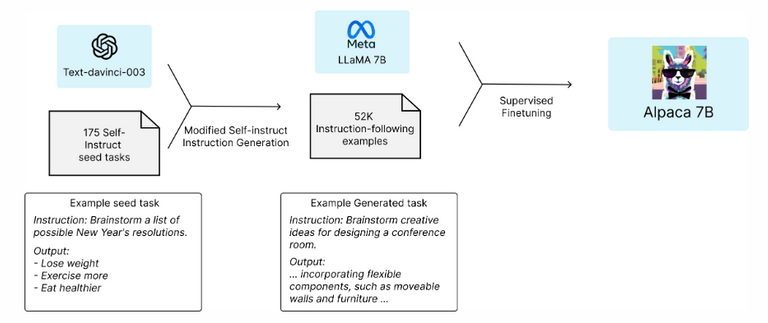

What is newsworthy is how they trained it. They started from the 175 seed tasks of Self-Instruct and used text-davinci-003 (a GPT-3.5 model) to generate 52K instruction-following examples. They then used this data to fine-tune LLaMA 7B. The whole thing cost less than $600.
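To make "instruction-following examples" a bit more concrete, here is a minimal sketch of how such records could be turned into prompt/target pairs for supervised fine-tuning. It assumes each record has `instruction`, `input` and `output` fields, and the template wording is modeled on the prompt template published in the Stanford Alpaca repository; treat the exact wording and the helper name `build_example` as assumptions for illustration, not as the project's actual training code.

```python
# Sketch: turning instruction-following records into fine-tuning text.
# Assumption: records look like {"instruction": ..., "input": ..., "output": ...}
# and the template wording is modeled on the Alpaca repository's prompt.

PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def build_example(record: dict) -> tuple[str, str]:
    """Return (prompt, target) for one hypothetical instruction record."""
    # Records with an empty "input" use the shorter template.
    template = PROMPT_WITH_INPUT if record.get("input") else PROMPT_NO_INPUT
    prompt = template.format(instruction=record["instruction"],
                             input=record.get("input", ""))
    return prompt, record["output"]


if __name__ == "__main__":
    # Hypothetical record, not taken from the real 52K dataset.
    sample = {"instruction": "Translate to French.",
              "input": "Good morning",
              "output": "Bonjour"}
    prompt, target = build_example(sample)
    print(prompt + " " + target)
```

During fine-tuning, the model sees the prompt and learns to produce the target after it; repeating that over 52K generated examples is what teaches the base model to follow instructions.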

What does this mean? It means AI development is moving faster than predicted. A research paper published last year predicted that model costs would only fall into this sub-$1,000 range by 2028. Just a year later, we are fine-tuning and creating new models for less than $600.

Oh, did I forget to mention that you can run it locally on your own computer? For the record, it still has some issues, but it was released only recently and it is open source, so in my humble opinion it is going to improve in the coming weeks.

And here is a quick rundown of other news:

Google is starting to give access to its PaLM API. PaLM is Google's large language model and, with 540B parameters, one of the largest LLMs ever built. For comparison, GPT-3 has 175B parameters. AI tools are also coming to Google Workspace, although for now they are only available to some business partners.

Microsoft confirmed that Bing AI had been running on GPT-4 all along, as many suspected. And just like Google, Microsoft is integrating GPT-4 into Office 365. If you haven't watched Microsoft's presentation yet, I highly recommend it.

This is what I meant when I said it is not over yet. Next week is Nvidia's GPU Technology Conference, GTC for short, and it is going to be about AI and the metaverse. I expect a ton of announcements at GTC, which should give us plenty of insight into what is waiting for us in the next couple of months.

Anyway, be sure to be nice to AI. You wouldn't want to get taken out when our AI overlords take over the world.