Image Source (Generated by an older version of OpenAI’s DALL-E at huggingface.co)

Ever since I read “The Age of Spiritual Machines” by Ray Kurzweil in 2000, I’ve been thinking seriously about the implications of super-intelligent artificial beings that could hypothetically exist inside of computing machines. Ray framed his prediction of such beings in the context of exponential technological growth. He even showed well-researched graphs of this growth not only in computers, but in all technologies, particularly biotechnology and nanotechnology, which would usher in an era of human augmentation, with machinery making people smarter and longer-lived. This melding of man and machine would culminate in Super AI beings so hyper-intelligent that their “thoughts” would be unintelligibly fast and complex to a present-day human. He called the time at which this would occur a singularity, even The Singularity.

The reference to black holes is obvious to anyone who has studied them. In the physical universe, they are objects so massive that they collapse in on themselves, and at their center the physical laws that hold everywhere else in the universe seem to break down. This point in space is referred to as a singularity. Its gravity distorts space-time to such a degree that not even light can escape. The boundary around the black hole beyond which escape becomes impossible is called the Event Horizon. Once you go over that precipice, you ain’t coming back.

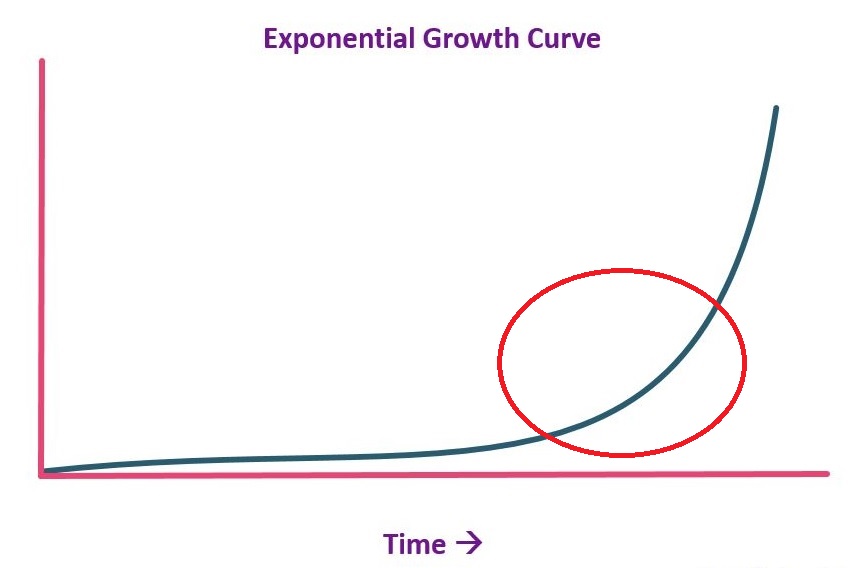

With all of the recent developments in AI, I’m seeing an acceleration in the technology that’s hard to keep track of. This is what we call the hip of the curve in exponential growth terms.

As you can see from this example of an exponential growth curve, viewed from our time reference (the frame of reference in time from which humans view its progression), progress on the left-hand side of the curve looks relatively flat and linear, with incremental progress being made in a predictable and consistent manner and very little noticeable acceleration. This is what we’ve been dealing with in computing tech up until this time in history. The area circled in red, however, is where things get interesting. The growth is itself accelerating and eventually begins to go vertical. This is the hip of the curve. In the last portion, the curve climbs so steeply that it looks like a vertical line. Strictly speaking, an exponential curve never becomes truly vertical, but from this frame of reference, for all intents and purposes, it is. This last portion of the curve is the singularity. I believe that for Artificial Intelligence technology, we’ve reached the hip of this curve, and we’re not too far out from the singularity. Maybe just a couple of years now.
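To put rough numbers on why the left side of an exponential curve looks flat, here is a minimal sketch in Python. It assumes a purely hypothetical quantity that doubles at every step; the 20 steps and the name `doubling_series` are my own illustration, not measurements of any real technology.

```python
def doubling_series(steps):
    """Return [1, 2, 4, ...]: a hypothetical quantity that doubles each step."""
    return [2 ** n for n in range(steps + 1)]


values = doubling_series(20)

# Progress made during each individual step (difference between neighbors).
gains = [later - earlier for earlier, later in zip(values, values[1:])]

first_half_gain = sum(gains[:10])   # total progress over steps 1-10
second_half_gain = sum(gains[10:])  # total progress over steps 11-20
last_step_gain = gains[-1]          # progress in the final step alone
all_prior_gain = sum(gains[:-1])    # progress in every step before it

print(first_half_gain)                  # 1023
print(second_half_gain)                 # 1047552
print(last_step_gain > all_prior_gain)  # True
```

Under this assumption, the first ten steps account for about a tenth of a percent of the total progress, and the final step alone adds more than all nineteen steps before it combined. That is why the early part looks like a flat line when drawn on the same chart as the end.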

In the black hole metaphor, this hip in the curve is the Event Horizon, the boundary past which we accelerate towards the singularity and cannot return. I believe that we have crossed it. The hardware to run all of these bots has been around for over half a decade, and now that the software is catching up, advancements in AI are happening on a monthly basis. OpenAI released GPT-3 in 2020, but it was only last November that GPT-3.5 reached the public through ChatGPT. By March (released symbolically on March 14, Pi Day), we had GPT-4. From the public’s point of view, we went from the equivalent of idiot-savant to super genius bookworm in less than a year. This is just one type of AI (the large language model, or LLM) within one company. A multitude of other LLMs are currently being worked on at other companies using similar hardware and software.

OpenAI and others also have image-generating AI like DALL-E that can generate photorealistic images from scratch. I haven’t paid as much attention to this because I am not a visual arts oriented person, but the outputs I’ve seen from these image generators are as impressive as the LLM chat bots. A year ago they looked little better than children’s scribblings and distorted dream-like images, but today, if you don’t look very closely, you’ll mistake them for photographs, and they keep getting better. If this trend continues, pretty soon even experts in detecting artificial images won’t be able to tell the difference between these outputs and photographs. Also, if you compress the images and reduce the resolution to a sufficient degree, they are already indistinguishable from photos taken using point-and-shoot film cameras from the old days. I actually tested this with a picture of a tiger my daughter took with an Instax camera. We couldn’t tell the difference between a low-res output from DALL-E and her photograph.

These LLMs in particular are so good that even their creators are beginning to be afraid of them. A video I stumbled across last night got me thinking about this stuff again, and the hosts crack a joke about the fact that the creators are just now seeing the dangers that these AI bots might present in the near future (duh!). These men are experts in the latest of what the tech industry has to offer, and you can see from the look on the face of the gentleman on the left, as the other podcaster fills him in, that they are just blown away by what GPT-4 can now do. Needless to say, I am too, and so are most others who take any time to look into it.

The rate of change is enough to make your head spin. We’re at the point where humans can still understand what’s going on if they pay full attention to it, and can even compete with the bots intellectually, but just barely, and only if they’re dialed into this topic or are actual experts in a field of study. This is only the beginning. At some point the rate of change will not only be beyond our ability to understand as it’s happening, we won’t even be able to see it any more. It will look like a vertical line from our frame of reference, in other words, instantaneous. This will be the singularity, the point at which this stuff disappears from view into the black hole of technological acceleration and non-augmented humans will be helpless to see or understand it. This eventuality is likely years away, but in my opinion it is now inevitable. We can’t switch it off and stop it at this point, no matter how much Elon Musk wants us to. Honestly, the point of no return may have been passed decades ago. Just ask Ted Kaczynski.

I woke up this morning thinking about what this means to people who work in various intellectual disciplines. My wife is a CPA with a Master’s in Business Administration. I have a Bachelor of Science in Biotechnology and also have an MBA. Currently I’m an entrepreneur and run a small business. None of these qualifications will protect us from being rendered obsolete by these AI robots. Everything we can do, they can do better. This has already happened, and it’s just a matter of time before businesses that don’t use these technologies begin to be outcompeted by businesses that do. What does one do in this scenario? Is it an “if you can’t beat ‘em, join ‘em” scenario? Even if we join them, how do we ensure we do it in a way that keeps us from being outcompeted by another version of AI that comes out six months from now? Also, how do we keep this tech from spiraling out of our control and enslaving us?

This is why I’m calling the present moment the Event Horizon towards the singularity. Nobody can really look into the future here and know what’s going to happen with these technologies when they’re fully commercialized and exploited to their full potential. We have no frame of reference for it. We have no precedent. We can only envision the theoretical, generalize, and maybe have some vague notion of the economics of it. We cannot know what it will actually look like. For the first time in my life since I was a very small child, I can say only one thing with 100% certainty: I have no idea what the world is going to be like even a year from now. I wish you all luck on this near-future journey. It’s going to be an interesting and bumpy ride.