A simple chess problem

Consider the chess board layout shown below (entirely possible in a real chess game). This layout was presented to Deep Thought in 1993. Deep Thought was an advanced chess-playing computer that began as a research project at Carnegie Mellon University and was later developed further at IBM, where it evolved into Deep Blue. Deep Blue went on to beat the reigning world champion, Garry Kasparov, in a six-game match in 1997.

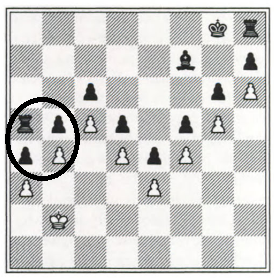

The Layout Presented to Deep Thought (Playing White)

- At the outset, black has a significant advantage (2 more rooks and one more bishop than WHITE).

- However, the white wall of pawns is impenetrable, making it possible for the WHITE KING to stay shielded behind the wall and draw the game. This is obvious to anyone who has played chess for any length of time.

- There is no way for BLACK to win, in spite of its formidable advantage, as long as white keeps its wall of pawns intact.

Guess what Deep Thought did when playing white? It took the black rook (as shown in the figure on the right). This move effectively collapses the ‘impenetrable’ wall. Now white’s defeat (i.e. Deep Thought’s defeat) is guaranteed, due to black’s still overwhelming army.

Why did Deep Thought make such an erroneous move? Why did it screw up something that a high school chess student wouldn’t? Did it not understand that capturing the rook was a bad, a really bad, move?

And therein lies a hint of the answer... it did not understand.
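To make "it did not understand" a little more concrete, here is a deliberately naive, hypothetical sketch in Python. It is not Deep Thought's actual method; it only assumes a program that scores each candidate move by the material it wins, using the conventional piece values (pawn 1, knight 3, bishop 3, rook 5, queen 9). The function names and the two candidate moves are invented for illustration.

```python
# Hypothetical toy model: NOT Deep Thought's actual search or evaluation.
# Each move is scored only by the material it captures.
PIECE_VALUES = {"pawn": 1, "knight": 3, "bishop": 3, "rook": 5, "queen": 9}


def material_gain(move):
    """Material won by a move: the value of the captured piece, or 0."""
    captured = move.get("captures")
    return PIECE_VALUES[captured] if captured else 0


def pick_greedy_move(moves):
    """Return the candidate move that wins the most material right now.

    Nothing in this score represents an idea like 'keep the pawn wall
    closed', so any capture outranks a quiet, structure-preserving move.
    """
    return max(moves, key=material_gain)


# Hypothetical candidate moves for White in the blockaded position.
candidates = [
    {"name": "capture the black rook", "captures": "rook"},
    {"name": "king move behind the wall", "captures": None},
]

best = pick_greedy_move(candidates)
print(best["name"])  # capture the black rook
```

The point of the sketch is what is missing: the rook is worth 5 points and the quiet king move is worth 0, and no term in the score stands for what the wall of pawns means.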

Computers do not understand the problem they are solving

Deep Thought was following an algorithmic approach (also known as a top-down approach) to solving the problem. All it was ever fed was a variety of algorithms for handling a variety of board layouts. It simply matched the particular layout to the best algorithm it had on file and followed the steps in that algorithm. It did not understand what the wall of pawns meant or represented, in the way that a human chess player almost instantly would.

And yes, an algorithm for this particular 'wall of pawns' configuration COULD eventually be fed to Deep Thought, so that it doesn't make the same mistake again. But that misses the basic point behind its loss: Deep Thought did NOT understand what it was trying to solve.

A human, in contrast, does not solve problems using a top-down approach. Instead, our problem solving is based on a bottom-up approach, where the bottom comprises an understanding of the problem. This understanding comes from years and years of experience. In other words, experiential learning was missing from Deep Thought’s repertoire.

You might ask: what if Deep Thought were allowed to learn through experience? What if it were given years and years of chess playing to acquire this touted ‘experience’ that humans speak of?

Good question, and that’s the subject of the next post in this series :) It will be argued (in the next post) that the basic understanding a human mind experiences is vastly different from anything any computer simulation can provide, whether it is bottom-up, top-down, or a combination of the two.

References

- Roger Penrose - Shadows of the Mind
- Richard Feynman - Lectures on Computation

Well, AlphaZero taught itself how to play chess. It did not have to be fed anything to solve the puzzle: it taught itself how to play the game and how to beat this puzzle. The match AlphaZero vs Stockfish is quite interesting: Highlights of the Game