While I appreciate your attempt at neutrality, I think you underrepresented the importance of a broad definition of security. The narrow academic definition might as well be a straw man, and results derived from it are of limited use.

I've definitely miscommunicated then because all I'm saying is that both kinds of approaches have strengths and the strongest advocates of each are speaking past one another :)

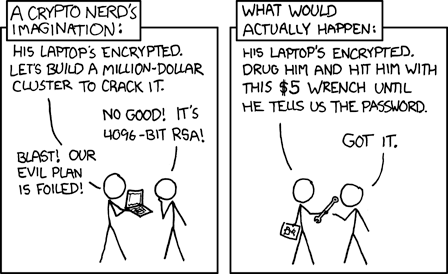

There is a lot of value to be had in both approaches, and I think we will all have a better world if we try to tackle these problems from both sides rather than insist that the other approach "isn't real security". I'll use a couple of real-world examples to illustrate and then go back to the xkcd well because it's just that good...

There are some spectacular examples of security protocols failing any rigorous analysis, and hopefully you'll grant that the people working on them weren't intending them to be insecure. An industry favorite is WEP for securing your connection to your wireless router. It is trivially broken, and once it is, everyone's web traffic is free to be inspected. The motivation was right (many devices can connect, no mutual trust, encryption!), the primitives were right (stream cipher), but there could not have been a formal analysis of the design because the keyspace was tiiiiny. There's a similar problem with the DVD encryption technology whose name escapes me. These were solutions to industry problems that looked Good Enough. The problem in both cases is that once a hack is found, the vulnerability is already deployed everywhere and it's trivial to share the exploit.

Now the reverse case...

All the TLS, encrypted email, and password generators in the world aren't saving grandmas from Nigerian princes. They don't protect us from centralizing on our email for "Forgot password?" processes. TLS specifically is the bane of many web companies, and dealing with it is one of the first big optimizations for their Edge network: offloading it and operating on clear requests inside the firewall.

So in my mind here's how we could work together better. I'll use an example from my work, Netflix. We want secure communication between clients and servers because we share sensitive data over the internet. We also want a strong notion of device and user identity, and we want to make sure that it is not transferable between users or devices, because there is an incentive for malicious clients to do this. TLS doesn't solve all those problems, it's expensive to do a bunch of handshaking all the time, and it restricts what transports we can use. So a few years ago we worked on a protocol of our own that establishes identity in a non-transferable way, encrypts communications, and has extension points for us to evolve the protocol as we need. We have a great security team who worked through all of our business requirements and used industry best practices to build it. On the first day that the core protocol was open-sourced, an external expert pointed out a timing attack. Obviously it was addressed, but it probably could have been found preemptively if a formal process and analysis/proof had been done. On the flip side, a strong notion of identity was a critical feature for us that is unaddressed in every public standard that I know of, and it's not our core product, it's a business requirement. It doesn't behoove us to overinvest in a formal process when we can get "pretty good" and address all of our practical concerns.
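For anyone who hasn't run into timing attacks before, here's a minimal sketch in Python of the general idea. To be clear, this is a generic illustration and not the actual flaw or code from the protocol described above; the function names (`naive_equal`, `sign`, `verify`) are made up for the example. The classic version of the bug is comparing a secret value, such as a message authentication tag, with a loop that exits on the first mismatch, so the response time hints at how many leading bytes an attacker guessed correctly. The standard library's `hmac.compare_digest` is designed to avoid leaking that.

```python
import hashlib
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison. Its running time depends on how many
    leading bytes match, which is the kind of leak a timing attack uses."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # returns sooner the earlier the mismatch is
    return True


def sign(key: bytes, message: bytes) -> bytes:
    """Hypothetical helper: HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it with hmac.compare_digest,
    which is designed not to reveal where the inputs differ."""
    expected = sign(key, message)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"example-key"  # placeholder value, for illustration only
    tag = sign(key, b"hello")
    print(verify(key, b"hello", tag))
    print(verify(key, b"tampered", tag))
```

Both comparisons give the same yes/no answers; the difference is only in what their running time gives away, which is exactly the kind of detail that "looks fine" in review and that a formal analysis is more likely to flag.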

This has probably been too long, so I'll try to summarize. Formal process fills in the little cracks and details where hackers play. Business requirements and use-case-driven design flesh out a big picture, which may identify concerns or things un(der)addressed by a formal security hypothesis. If we work together with each hand washing the other instead of slapping the other, we'll have a bright future.