EDIT 2024-06-04: Fixed issue with flux implementation, thanks to tcbrindle for catching this.

EDIT 2024-06-24: Removed extra allocation from ranges libraries implementations. Thanks again to tcbrindle for suggesting this. This only affected the 'every other item' test.

C++ ranges are awesome as they often make the code simpler and easier to reason about. But how they compare against each other? To check that I did a micro-benchmark of c++ ranges for one particular use case I encountered in practice.

The scenario I tested was as follows:

- I have an input data set that needs to be filtered based on non-trivial lambda.

- Only a subset of this data is needed.

- Then an index is added to the elements.

- The last step is to reverse the sequence.

The repo with source code is here https://github.com/serpent7776/cpp-ranges-bench

It's basically filter+take+enumerate+reverse, but there are two tricky things here:

- The non-triviality of the lambda being used for filtering. In the example code, such a lambda is often a trivial function. What happens when the cost of invoking such lambda is not not negligible?

- Filter+reverse is a tricky combination. Filtering yields a result set of unknown size, which means that subsequent reverse cannot be done easily in the general case.

The ranges library needs to take both of these situations into account, otherwise it might introduce noticeable overhead.

Let's check it out.

The code uses catch2 to test for correctness and performance.

Catch2 is a unit testing framework for C++, but it also provides basic micro-benchmarking features.

Unfortunately, it doesn't provide any way to visualise the results, so I created a script and submitted PR adding plotting capabilities to Catch.

Tested implementations

I have reviewed the following implementations:

- clike - a simple C-like implementation. This still uses c++

vectorand its methods, but it's just a for loop with if statements. It collects the result in a vector and when it's done, it reverses it in-place. - algorithms - Manual implementation using STL algorithms. Instead of operating on the elements themselves, it allocates an extra vector with indices and operates on that. It only copies the elements in the last step.

- boost_adaptors - Uses boost::adaptors. It provides very minimal feature set, but it's surprisingly capable. Due to the limitations of adaptors library, it allocates an extra vector of filtered elements and operates on that.

- rangesv3 - Uses ranges-v3 - range library for C++14/17/20. Very similar to the implementation using std::ranges.

- stdranges - Uses C++23 ranges. This is the shortest and cleanest implementation. The compiler I was using didn't have

toimplemented, so I used equivalent function fromrangesv3library. This may have affected the results. - fluxranges - Uses flux, a C++20 library for sequence-oriented programming. A bit more noisy compared to other range implementations.

I used the following versions of the libraries:

- ranges-v3 0.12

- fluxranges faf2e0f8b9f616001890a87b613f47313443f8e5

- g++ stdlib, g++ (GCC) 13.2.1 20230801

All compiled in C++23 mode with the-O2flag.

Test cases

I have generated some random data using the faker python library. The data is a set of usernames with an id and a set of connected names. I will search for usernames that match some criteria which involves looking through the connected names. This should be enough to consider the filter criteria as non-trivial.

To do the comparisons I have generated 10 000 rows of data.

The test cases I will check are:

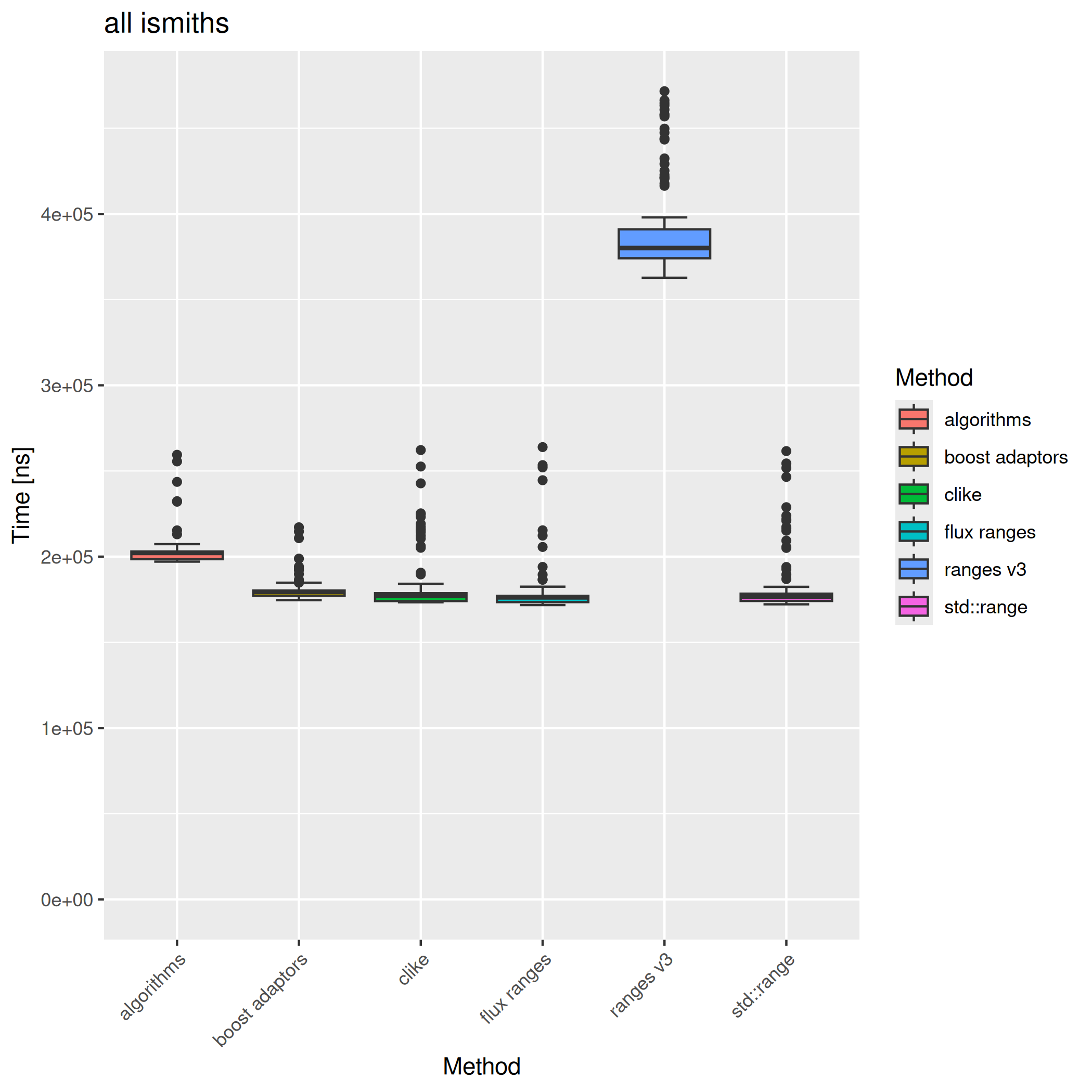

- all ismiths - select all items that have "ismith" as connection. There are 15 such entries in total.

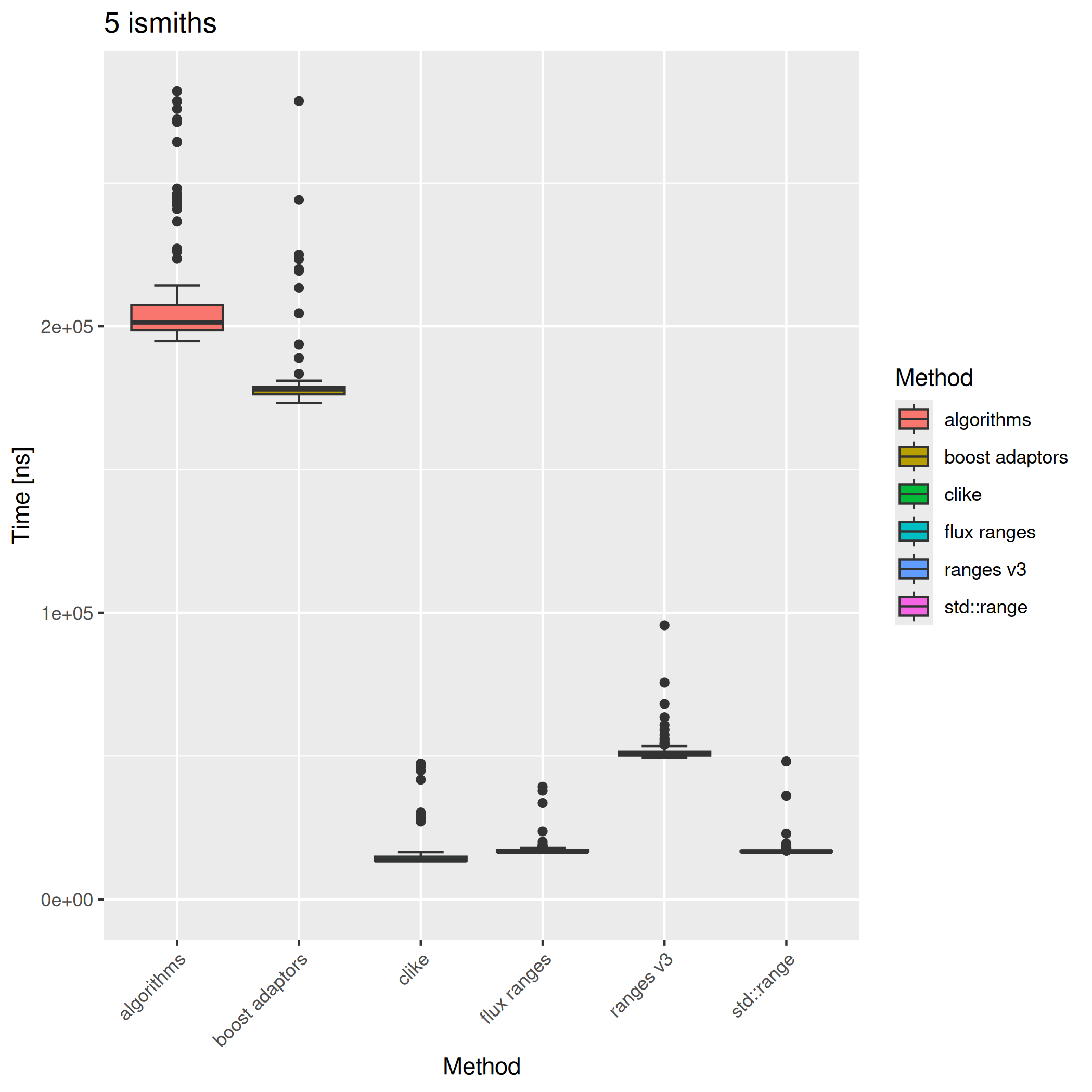

- 5 ismiths - select at most 5 items that have "ismith" as connection.

- empty result set - searches for an item that doesn't exist, yielding no results.

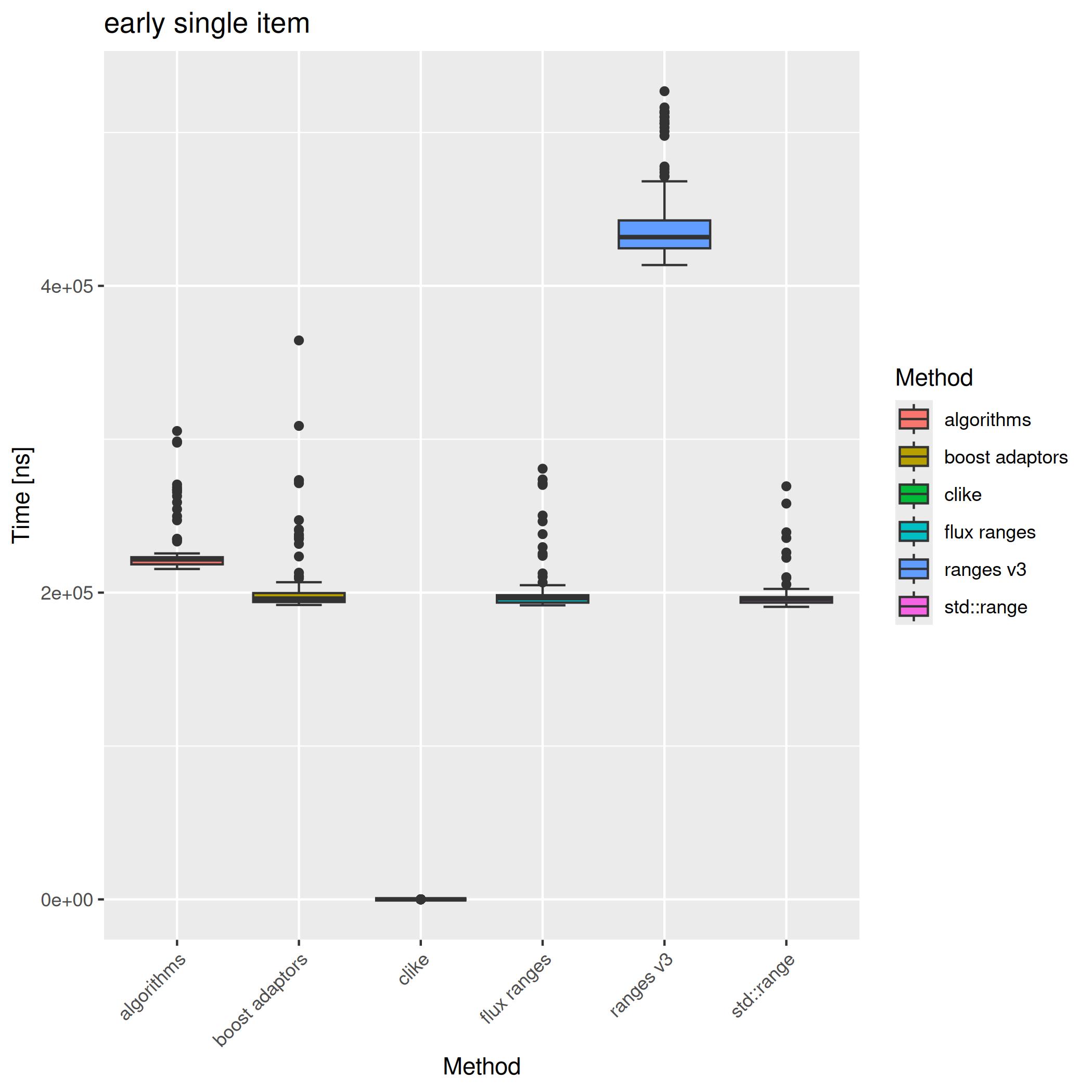

- early single item - searches for all items that have "henry79" as connection. There's only one such item, early in the sequence.

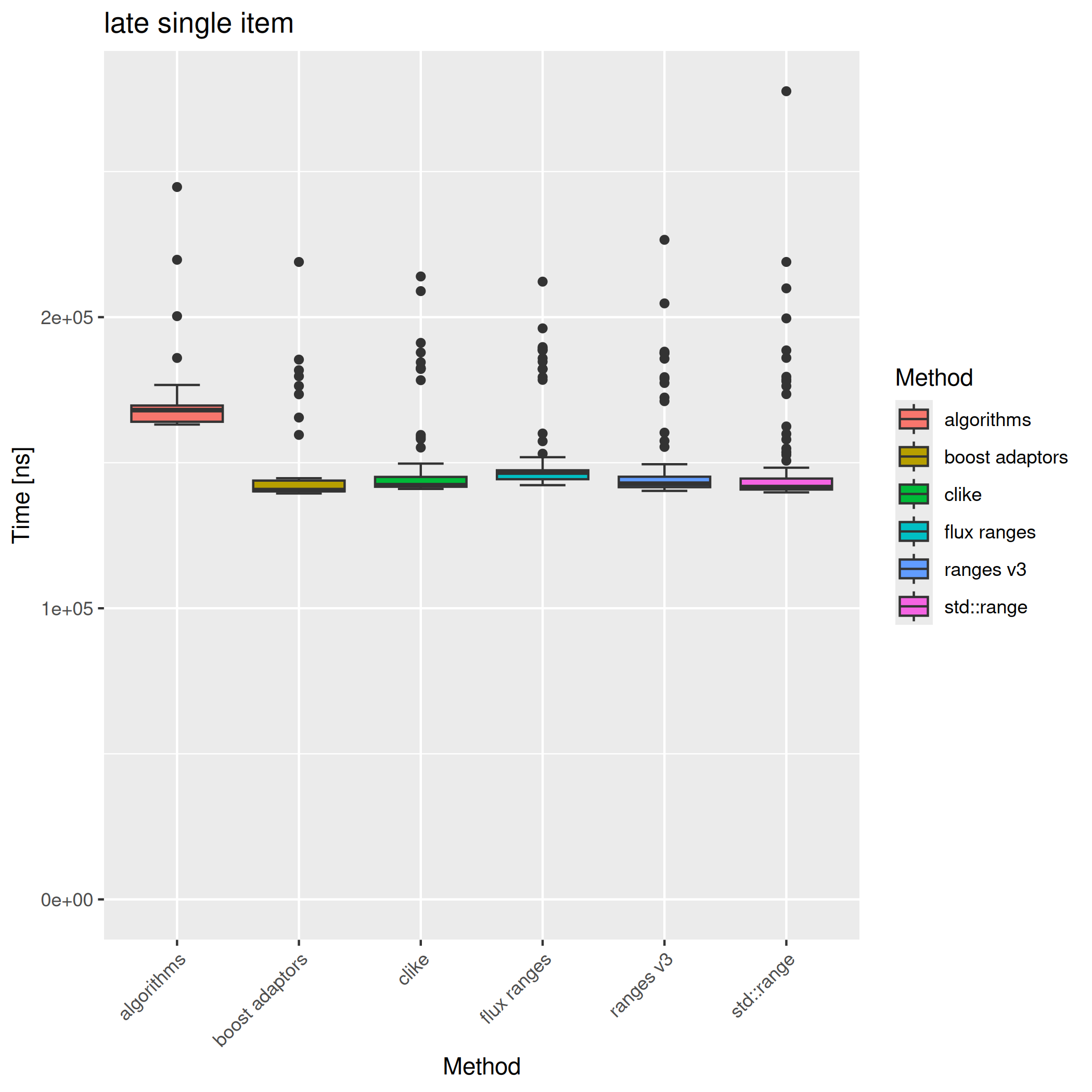

- late single item - search for all items that have "emilymclaughlin" as connection. There's only one such item, late in the sequence.

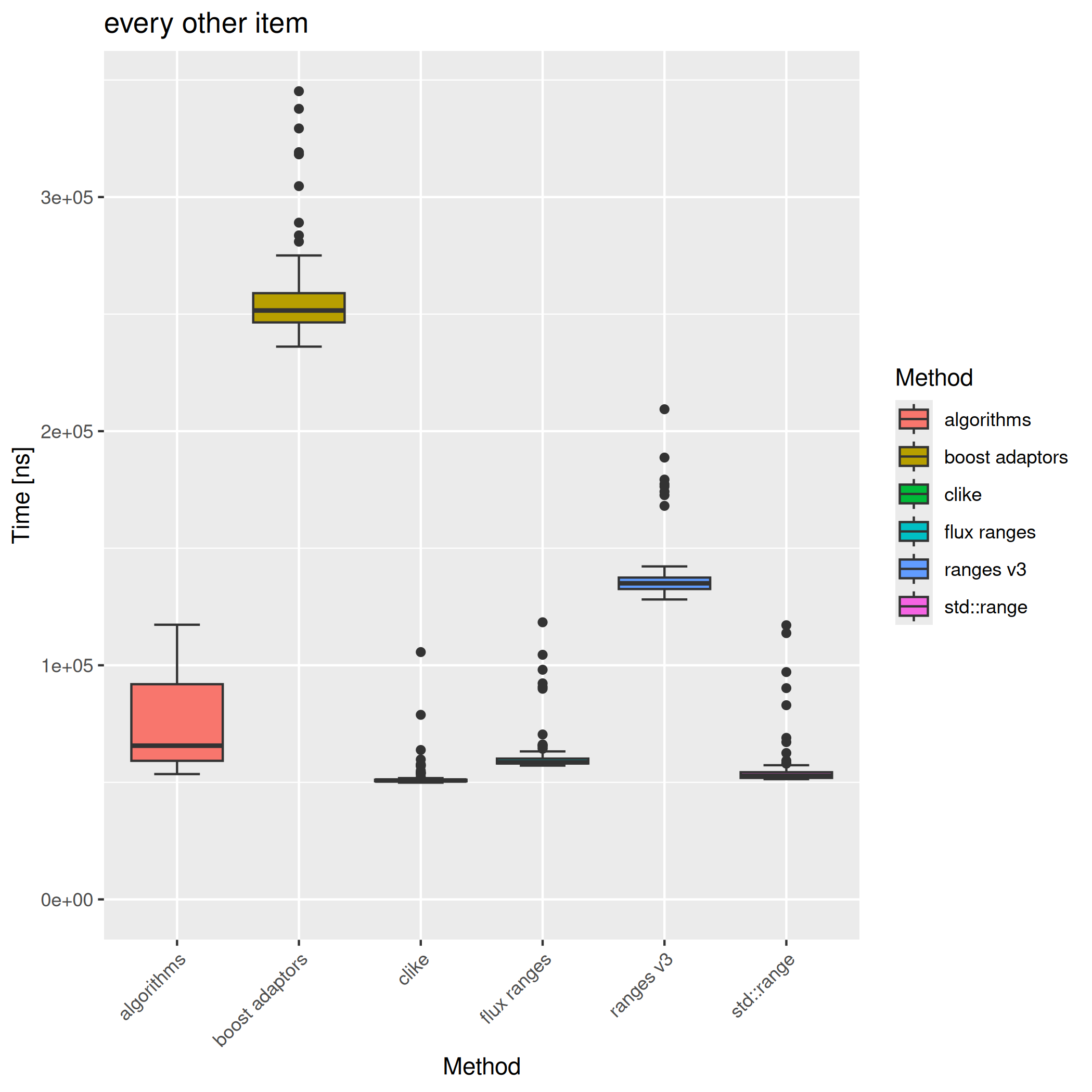

- every other item - accept every other item, while also performing some operation on the input data.

Results

- Flux and std::ranges are very similar with very good results. The only exceptions are 'early single item' and 'every other item' cases.

- Ranges-v3 was generally on par with other solutions. There are two cases where it's slower: 'all ismiths' and 'early single item'.

- boost adaptors are overall quite good. In 'all ismiths', 'empty result set' and 'late single item' are on par with fastest implementation and just slightly slower in other cases.

- C-like solution was always on par or better than other solutions.

Some more details

Let's try to see some more details. I will look at the 'early single item' test. This case is interesting, because there are big differences between the implementations.

First, let's run the test case for a few more times. I'll make the test cases run 1000 times by adding this code to each implementation section.

auto _ = GENERATE(range(1, 1000));

This uses Catch2 data generator to execute the section multiple times, each time with a different value. In this case I'm not interested in the value itself, I'm only doing this to run the section again.

Now let's run perf stat on it. To collect the data I'll will only run this single test case and only one implementation at a time, ignoring the benchmarks. I do this by invoking the compiled binary with the test-case name, the -c option with a section name that specifies which implementation to use and the --skip-benchmarks option so that no benchmarks are run. You can read more about Catch2 command line usage.

perf stat is invoked with -o stats --append, which appends the perf data to stats file. Read more about perf stat usage.

: > stats # truncate stats file

for t in clike algorithms boost_adaptors rangesv3 stdranges fluxranges; do

perf stat -o stats --append ./read "early single item" -c $t --skip-benchmarks ;

done

I will then convert the stats file with a perf-stat-to-sc script I wrote. It will create a sc-im spreadsheet, which will make it easier to compare the results.

| command | context_switches | cpu_migrations | page_faults | cycles | instructions | insn_per_cycle | branches | branch_misses | backend_bound | bad_speculation | frontend_bound | retiring | real | user | sys |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| clike | 0 | 0 | 1,417 | 9,763,699,969 | 27,310,955,366 | 27,310,955,366 | 6,611,235,612 | 84,103,168 | 7.2 | 22.2 | 21.2 | 49.4 | 3.573872222 | 3.437020000 | 0.119355000 |

| rangesv3 | 0 | 0 | 1,399 | 11,634,561,888 | 29,211,691,985 | 29,211,691,985 | 7,103,602,542 | 123,617,995 | 8.1 | 22.9 | 22.8 | 46.2 | 4.132017782 | 4.001041000 | 0.116310000 |

| boost_adaptors | 0 | 0 | 1,394 | 10,715,252,768 | 28,262,420,566 | 28,262,420,566 | 6,857,638,043 | 104,769,454 | 7.0 | 24.6 | 21.7 | 46.7 | 3.909084680 | 3.807669000 | 0.083028000 |

| fluxranges | 0 | 0 | 1,394 | 10,775,098,223 | 28,262,194,528 | 28,262,194,528 | 6,857,615,031 | 104,014,045 | 7.6 | 25.1 | 21.4 | 46.0 | 3.965913088 | 3.814496000 | 0.132746000 |

| stdranges | 0 | 0 | 1,413 | 10,731,793,760 | 28,251,095,766 | 28,251,095,766 | 6,857,354,712 | 104,070,967 | 7.6 | 23.0 | 21.7 | 47.7 | 3.955925504 | 3.844894000 | 0.092972000 |

| algorithms | 0 | 0 | 7,623 | 10,706,979,451 | 28,341,604,071 | 28,341,604,071 | 6,867,444,605 | 104,582,547 | 6.9 | 25.5 | 21.5 | 46.1 | 3.911286798 | 3.801366000 | 0.089797000 |

The first thing to notice is the difference in the number of page faults. Most implementations had about 1400 faults. The exceptions are custom algorithms with ~7600 faults. The differences in cycles and instructions are quite small. It's a bit bigger for rangesv3 and smaller for C-like though. The number of branches is also similar, slightly higher for rangesv3 and slightly lower for C-like. There are bigger differences in branch misses. The C-like implementation has the least number of misses. Rangesv3 have the most misses.

But this collects stats from the entire program run. The issue is that most of the time is spent on reading and parsing the input file, which is the same in all cases. I want to collect stats only for the single implementation function. For this I can use valgrind's cachegrind with additional code instrumentation.

I will add CACHEGRIND_START_INSTRUMENTATION and CACHEGRIND_STOP_INSTRUMENTATION just before and after the implementation function I'm interested in and then run the binary with --instr-at-start=no which will disable instrumentation at startup and will wait for the first CACHEGRIND_START_INSTRUMENTATION to enable it.

Read more about cachegrind usage.

SECTION("clike") {

auto _ = GENERATE(range(1, 1000));

CACHEGRIND_START_INSTRUMENTATION;

const std::vector<Out> found = clike(data, accept, max_items);

CACHEGRIND_STOP_INSTRUMENTATION;

REQUIRE(found == expected);

}

valgrind --tool=cachegrind --instr-at-start=no ./read 'early single item' -c fluxranges

This is the data I got:

| implementation | instructions executed |

|---|---|

| clike | 128 871 |

| algorithms | 274 757 967 |

| boost_adaptors | 274 757 967 |

| rangesv3 | 549 464 985 |

| stdranges | 274 757 967 |

| fluxranges | 274 757 967 |

It's a bit strange that all the results except for clike and rangesv3 are the same.

But this confirms that C-like executes far fever instructions than other implementations and rangesv3, the most.

Conclusions

Ranges are really cool. They often make the code easier to read and reason about. But they do not always fit all the use cases. Sometimes, in some very specific cases, a for loop might give more control that is needed to get some better results. In general though, it's usually better to start with a ranges, as they will be good enough in most cases.

I have not looked into the code yet, but you've managed to confirm what I already assumed, that I should stay away from all the "modern solutions". I suspect the clike version is also the most readable :o)

I think that I like std::ranges the most, because it's obvious what is going on just by looking at it. The C-like version is pretty clean too, but it's quite a bit more complex.