Amplification vs Safety

Amplification is a means of empowering individuals within a society. The concepts presented in some of my previous blog posts all have one thing in common: individual empowerment. To empower the individual is to promote self-liberation. For a person to strive toward freedom, they must also embrace the increased responsibility which comes as a consequence of being free. Amplification can mean intelligence amplification, but it can also include amplifying an individual's capacity for compassion, empathy, morality, and most importantly the ability to make wise decisions.

In the absence of these amplifications, a human's ability to express compassion, for example, does not scale. The reason is that there is a limit to the number of perspectives any one person can take into account when making a decision. I may not be able to feel compassion for 100 people, or 1 million people, but I can program a bot which collects data and responds in a way which amplifies my compassion. This is only possible on the basis of models, of course.

If the model the bot follows is an accurate model of the world, and if the bot is designed to treat all humans with equal compassion, then when designing this bot I only have to be capable of feeling compassion for one person in order for the bot to express compassion for an unlimited number of people. In essence, the bot would simply create an internal model of how to treat a person, using the way one person is treated as its example.
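The idea of designing for one person and applying it to everyone can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the `Person` type, its `need` field, and both function names are hypothetical, and a real bot would need a far richer model of each person.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    name: str
    need: str  # hypothetical: the one thing this person most needs right now


def compassionate_response(person: Person) -> str:
    """How the designer would treat ONE person (the single worked example)."""
    return f"{person.name}: offering help with {person.need}"


def respond_to_all(people):
    """Apply the same single-person rule, unchanged, to every person."""
    return [compassionate_response(p) for p in people]


crowd = [Person("Ana", "food"), Person("Bo", "shelter")]
for line in respond_to_all(crowd):
    print(line)
```

The designer only ever reasons about one `Person`; the scaling comes entirely from the bot repeating that rule, which is also why an inaccurate rule would be repeated just as faithfully.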

The way intelligent agents work

By Utkarshraj Atmaram (Author) [Public domain], via Wikimedia Commons

If we look at the example in the descriptive diagram above, we can see that intelligent agents (whether human or machine) rely on taking information in from the environment (perception). These agents use their perceptions to create an internal model of reality. The human being creates a mental model of reality which is not going to be very accurate, because it is limited by the human capacity to build accurate mental models. The average human may or may not be good at logic or abstract thinking, so we cannot assume every human can do this well. The machine creates a purely mathematical model, and machines are extremely good at logic and detail. Machines can build models that the human brain cannot (relying on big data, with high detail and precision).
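A minimal sketch of that perceive, model, act loop might look like the following. The `Agent` class, the running-average model, and the umbrella decision are my own illustrative assumptions, not something taken from the diagram.

```python
class Agent:
    """Toy agent: perceives numbers, keeps a running average as its model."""

    def __init__(self):
        self.estimate = 0.0  # internal model: believed chance of rain
        self.count = 0       # how many perceptions have been folded in

    def perceive(self, observation: float) -> None:
        # Update the internal model incrementally with each new perception.
        self.count += 1
        self.estimate += (observation - self.estimate) / self.count

    def act(self, threshold: float = 0.5) -> str:
        # Decisions are made from the model, never from reality directly.
        return "carry umbrella" if self.estimate > threshold else "leave umbrella"


agent = Agent()
for reading in [0.8, 0.6, 0.7]:  # hypothetical sensor readings
    agent.perceive(reading)
print(agent.act())
```

The point of the sketch is the separation: perception feeds the model, and action reads only from the model.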

What matters for amplification to remain prosocial, in my opinion, is that the model of reality which intelligent agents build upon is as accurate as possible. In my own case, without any amplification, I would put forth the hypothesis (from which predictions can be made) that my ability to make wise decisions is directly limited by the accuracy of my mental model of reality. To put it a different way, because perhaps "mental model" is not the correct term: what I believe in my mind to be reality determines how wise I can be. If what I believe to be reality isn't close to what reality actually is, then my ability to be wise is compromised, because wisdom requires an accurate description of reality within that mental model. It is my opinion that only by way of amplification can I ever hope to develop an accurate mental model, and only with an accurate mental model can I make decisions which are effective (whether compassionate or financially driven).
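One way to make this hypothesis concrete is to measure how much a decision loses when the beliefs behind it are wrong. The sketch below is an assumption-laden toy: the option names, the payoff numbers, and the `choose` and `regret` helpers are all hypothetical.

```python
def choose(believed: dict) -> str:
    """Pick the option the agent BELIEVES is best."""
    return max(believed, key=believed.get)


def regret(true_values: dict, believed: dict) -> float:
    """Payoff lost by acting on beliefs instead of on reality."""
    chosen = choose(believed)
    return max(true_values.values()) - true_values[chosen]


true_values = {"a": 10, "b": 4}        # what reality actually pays
accurate_model = {"a": 9, "b": 5}      # slightly off, same ranking
inaccurate_model = {"a": 3, "b": 8}    # ranking is reversed

print(regret(true_values, accurate_model))
print(regret(true_values, inaccurate_model))
```

In this toy setting the accurate model loses nothing, while the inaccurate one picks the worse option and gives up the difference between the two true payoffs.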

The safety argument typically goes like this: if you give everyone the ability to form a more accurate mental model, then those who have a genuine mental illness will become even more capable of inflicting damage. This, in my opinion, is a realistic argument, because we know from guns, from fire, from practically anything, that when you give any kind of power to a destructive person you increase their power to destroy. The same goes for cryptocurrency, the same goes for smart contracts, the same goes for letting slaves read anything other than the Bible in the 1800s. In my opinion, the risk is always there that when you empower the disempowered, some percentage of the group being empowered can become evil. We in fact still see this going on in certain parts of the world where rebels are at war with dictatorships.

Safety and stability are desirable, but so is freedom. People want to be kept safe, but people also want to know the truth about how reality works. People within societies must find a balance, but in my opinion even if we want to make a safer and more stable society, we require wisdom. If we restrict the ability to be wise, then how is that different from the times when people were restricted from reading or from going to college? In my opinion the path to self-improvement should be free from obstacles. This of course isn't only about my opinion, but about community sentiment.

Amplification vs Safety vs Emergence

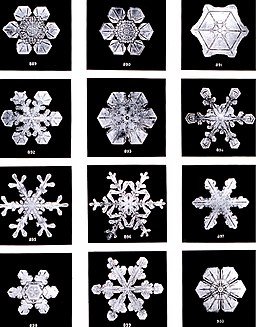

By Wilson Bentley [Public domain], via Wikimedia Commons

Amplification enhances the capacity for both constructive and destructive behavior. People who want to contribute something constructive to the world can only keep doing so if they can scale up with the world. If the world becomes more connected, then spreading happiness to many people, or making a decision which positively influences many people, requires the ability to measure, to track, to process large amounts of information, and to use technology (and machine intelligence) to scale up operations. So doing good benefits from amplification of intelligence, of compassion, of kindness, and of morality.

We know that people who want to do harm will also scale up with the technology. This is the primary concern of those who argue about safety. If we look around we will see that mental illness rates are high. Just recently there was a shooting at a Waffle House which highlights this point. While I do not blame the gun in particular, I can see the argument that if we make destructive people even more capable by amplifying intelligence, it could increase the burden on the people who want to prevent the worst outcomes. While I sympathize with that point of view, I also have my own point of view: I do not want my potential to be limited out of fear of what an "Evil" person may do. If I wish to be constructive and to be of as much benefit as possible, then I will not want to be limited in that goal because there are destructive people who could use the same tools.

Emergence requires certain conditions to be in place. Just as with snowflakes, it is these conditions which allow each snowflake to be unique. The beauty we see in new and novel things results from the fact that there are conditions in place to produce diversity. Diversity, in my opinion, is encouraged by amplification, and emergence is a side effect. Through amplification we could get a lot of new ways of seeing the world, of thinking, of solving problems, of expressing emotions, all in big, profound new ways. In the absence of this we will simply not grow as much as individuals, since to grow (in my opinion) requires an ability to amplify beyond natural limits. This can happen through other people, for example if you form a company that makes decisions using control groups rather than through a single individual, or it can happen through technical means.

Summary

- Intelligent agents must take information in by way of perceptions and from this construct an internal model of reality. Machine, human, any agent must have an internal model of reality.

- The more accurate the internal model of reality is, the more potential the agent has to make wise decisions. AI, if it is ever to work, will have to create as accurate an internal model as possible. Consider a robot that sees lots of cats and over time creates an internal model of what a cat is. This internal model of a cat also sits within, for example, the laws of physics as best understood through the Standard Model of particle physics. In essence, if the AI has a more accurate model of reality, then it may know what a cat is in much more detail than the average human.

- Empowerment of the individual comes with risks. We have to use the same amplifications to mitigate these risks, by accepting the responsibility of containing destructive usage. On Steem we see this with the ability to flag (even if flagging is a bit overused).

- If we have diversity of minds, with amplification, then we can have emergence of something we never could have considered without the diversity and amplification in place. In the constructive sense this could be for instance Big Empathy which evolves from Big Data being made accessible.

Hello dana-edwards73, I like your 'Amplification vs Safety' post. It's really helpful for crypto and blockchain. Please stay with us.

good point is here. I like your post.

I have not been able to capture the full meaning of your explanation, can you explain to me in brief the purpose of your program?

greetings and friendship

As always, I really enjoy your posts. Thank you for bringing quality content to Steemit :) With all that you pointed out, I think the only way a bot should be allowed to be taught to treat humans is simply Respect and a Compliment as it's universally accepted whether you are sane or violent, imho :)