Click Here to read Part 2 of this blog.

Radix (formerly eMunie)

As @monsterer2 correctly noted, the design of Radix appears to be trivially corruptible with a cost-free Sybil attack which can create conflicting histories of events. The posited corruption will not enable a double-spend, but rather cause transaction confirmation to be undone and stuck.

Radix has the logical clocks of Hashgraph (which was discussed in Part 2 of this blog), but it’s lacking the DAG wherein each Hashgraph transaction hash is committed via the transaction’s choice of parent transactions. Thus as the CEO Piers Ridyard admitted, Radix enforces no global structure on the ordering of transactions. Instead their incorrect idea (something inadequate about “Byzantine fault detection”) is that since each node has to be honest about its logical clock (because the duplicitous node can be caught lying by other nodes), that ordering can always be resolved. Yet although nodes must be honest about incrementing their logical clocks for each event they receive and honest about their commitments (i.e. nodes can’t change the history they claim to have observed), there’s nothing in the design that prevents nodes colluding to claim events proceeded in a different order than what other honest nodes observed. Such a collusion would not require them to lie about their logical clocks nor change the history they claim to have observed. There’s no penalty, cost, or mechanism (at least none described in the whitepaper) which prevents anyone from adding any amount of their own nodes to the system and having those nodes collude to concoct histories (with logical clocks that appear to be honest) that conflict with what other nodes observed. So the user of the system has no way to know which nodes are the honest ones. Even if Radix added a reputation system to attempt to determine which groups of nodes are defecting, the problem is that the defecting group could outnumber the honest group due to the system having no mechanism for preventing a Sybil attack. Which BTW, is essentially the vulnerability in Stellar SCP. And the user has no way to know which groupings of nodes are honest or not. The “finality time” concept will not be objective for offline nodes who come online. They will have to poll the network and the Sybil attack can have more nodes than the honest history.

Readers will note there are no proofs of safety in the Radix whitepaper. And no discussion of how a Sybil attack is avoided. So that’s a reliable indicator that my analysis above is correct. Note that @Peachy has indicated that a technical rebuttal may be forthcoming (c.f. also), but I still have my doubts (c.f. also).

No Finality of Transactions

The CTO and inventor of Radix Dan Hughes aka @Fuserleer at bitcointalk.orgtried to explain why he thinks a double-spend is not possible but he clearly made an error in his logic about the implications of the attack:

Bob later presents Atom(x') which conflicts with Atom(x). Bob can not be sure that nodes that aren't his don't have Atom(x). If they do, when they receive Atom(x') from Carol, they can inform Carol that it is not legit..with proof of Bob's previous transaction.

Carol will query nodes randomly and if Bob’s Sybil attack is extensive, then Carol will have some nodes telling her that Atom(x') occurred before Atom(x) and other nodes attesting to the vice versa. So we might suppose that Carol will not accept the transaction as he presumes. But the problem is that when Alice wants to spend Atom(x) the same ambiguity will be present so that if David is receiving the outputs from Atom(x) from Alice, he also can’t accept the transaction. Thus a Sybil attack can make the consensus system stuck and transactions can’t be spent.

The fact that he didn't stop, also suggests a fault or dishonesty.

The dishonesty of Bob is not penalized. He could already have received what he paid Alice for. Alice is the one who is stuck with the loss because she cannot spend the payment she received. And Bob could be shorting the token and perform a massive attack on many parties, such as (an exchange) attacking transfers from an exchange (i.e. on itself) after cashing out!

And given Dan didn’t realize or mention that in his explanation, I would make a strong bet that he is not testing for that attack.

He also essentially had the same sort of lapses in understanding and Sybil attack vulnerabilities (c.f. also) in prior variants of his designs that @monsterer had pointed out to him in 2016. That is not to claim he never had correct statements. He displayed deep thinking about BFT in the non-proof-of-work context.

No Total Order

Essentially the problem of Radix’s design is there’s no mechanism for a total ordering— no overall consistency of consensus. Thus there appears to be no way to penalize spamming the network with a Sybil attack. This appears to show up also in the reward mechanism. They mention that the rewards are limited in each shard, but there are quintillion possible shards. So even though their whitepaper mentions nothing about the reward system, I can still surmise that a Sybil attack can create massive token supply inflation. Given that nodes are disincentivized from remaining on a shard that is overpopulation, then via a Sybil attack the attacker can dominate as many shards as it wants to.

MaidSafe’s PARSEC

PARSEC is essentially Hashgraph with the added capability for higher churn of nodes and not patented. Otherwise it has the same flaws of Hashgraph, including only ⅓ liveness threshold1 which is thus a great risk the consensus can become permanently stuck. And given the higher node churn rate, we can expect the transactions-per-sec (TPS) to be even lower than Hashgraph. Note where they claim PARSEC has higher TPS, they’re essentially comparing it to Bitcoin, not to potentially truly scalable (sharded) decentralized ledgers.

1 The maximum possible where the validator nodes don’t sign their decisions. Yet then I don’t yet fully grok why Hashgraph can’t have the ½ liveness threshold of Byteball? Presumably it’s because Hashgraph forms its quorum with implicit votes of the gossip events instead of the explicit votes of the witnesses as is the case for Byteball. Thus Hashgraph is vulnerable to a sophisticated attacker even below its ⅓ liveness threshold because it lacks the randomization of a “common coin” algorithm.

Significance

PARSEC is especially suited for MaidSafe’s SAFE network which requires the higher churn rate. Notwithstanding PARSEC being technologically innovative, algorithmically simplifying, and interesting at what it does, I don’t think PARSEC is going to be that significant in the blockchain arena. Because of a need in the blockchain arena for higher TPS, true scalability, and better protection against 50+% attacks with better 50% liveness which PARSEC doesn’t offer. PARSEC might possibly be significant in the decentralized communication arena if there’s no blockchain tokenization to drive incentives. But then what drives the incentives and prevents the PARSEC’s Byzantine fault tolerance from being Sybil attacked?

Concrete Instead of Common Coin Algorithm

PARSEC has Hashgraph’s concept of “strongly seen” events which in Hashgraph determine the famous witnesses of each round. PARSEC adds the concepts of ‘observers’ which are those events that can strongly see ‘interesting’ events created by ⅔ or more of the nodes, and ‘meta-votes’ which which are ‘yes’ if a node’s most recent observer strongly sees an interesting event for the node receiving the meta-vote.

The solution to the binary Byzantine consensus (aka asynchronous binary agreement or ABA) provides the solution to the problem of consensus on the next gossip event. All nodes wait until all nodes agree on the meta-votes for all nodes, and the most recent event from each node with a ‘yes’ (aka ‘true’) meta-vote are sorted with a tie-breaker so as to objectively select only one of those gossip events as the winner for the next ordered event.

ABA has four key properties. The solution proceeds by broadcasting with four more key properties so that nodes can formulate their bin-values according to three rules. The bin-values are an algorithm for the estimates of the binary (in this case meta-votes) values which converges towards consensus as proven.

To determine which of the candidate events (from the solution of the bin-value consensus) is the winner for the aforementioned next ordered event requires introducing some randomness to prevent a sophisticated attacker from cheating at less than the ⅓ liveness threshold— which is one of the key differences from Hashgraph. The “common coin” algorithm wasn’t chosen to solve the ABA because it’s too unrealistic especially in a design intended for high node churn. Instead due to inspiration from a 2018 paper a simpler graduated (aka gradient) leadership-based concrete coin algorithm was formulated in this context of gossip events.

Stellar SCP

Before diving in, the Stellar SCP creator David Mazières succinctly and coherently explains the terms safety, liveness, and Byzantine fault tolerance (BFT), as well as explaining the intuition for the FLP impossibility theorem in terms of indeterminism of finality created by the asynchronous order of the arrival of information.

Federated Byzantine Agreement

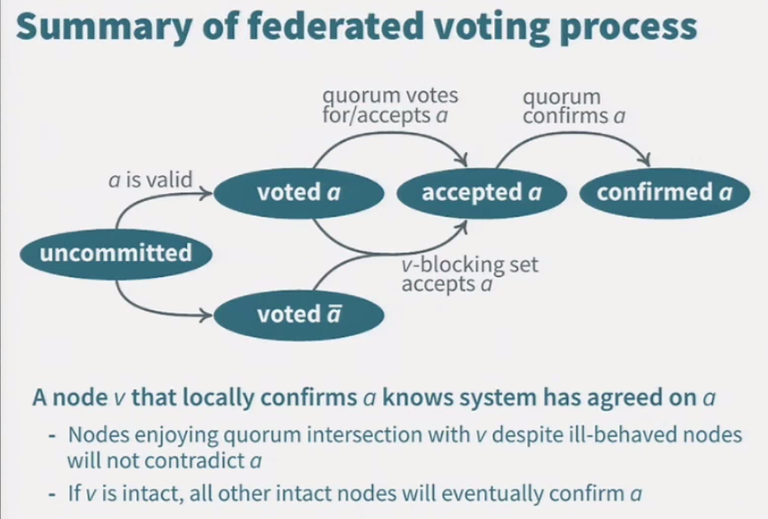

In essence the Federated Byzantine Agreement (FBA) in SCP requires ⅔ of the weighted trust of all nodes to approve of the next ordered event for it to become 100% final. IOW, each node chooses its own quorum slices. Each slice is (according to the said node) a subset of nodes that are trusted to approve the next ordered event. The next event is final system-wide with agreement of ⅔ of the overlap of all quorum slices in the system, weighted by allowing any of each node’s slices to participate in the overlap.

The liveness can be stuck indefinitely until that time. Nodes are allowed to change their votes between attempted voting rounds and even to change their quorum slices before the start of the first round (of the next slot) for approving the next event. Nodes employ a v-blocking criteria in a multi-step rounds process which insures the safety that nodes can’t be fooled into the incorrectness of presuming systemic finality on more than one fork, and to insure (running in conjunction with a deterministic leadership algorithm) that the system converges towards finality instead of oscillating indefinitely between changing votes. That is hoped to be the normal case, but only when the ⅓ liveness threshold is not breached.

Not Trustless

The tradeoff is that SCP’s safety, liveness, and BFT, rely on the judgements of trust that the nodes express in their quorum slices. There’s no incentive mechanism in SCP to encourage good and discourage malevolent behavior. Vitalik had pointed out that individual altruism is an undersupplied public good which is a Prisoner’s dilemma where everyone can be encouraged to defect. The implications of this being for example that either by Sybil attacking the nodes and/or a massive failure of transitive trust, an attacker can erase and replace the entire history of the chain. That is the insecurity of the nothing-at-stake subjectivity which plagues all extant non-proof-of-work consensus systems in insidious ways.

Thus it appears the political economics and game theory is not correctly contemplated, because for example it seems to be an incorrect analogy to equate FBA to Internet routing peering trust arrangements (analogous to slices) because routing is not a total order that requires safety. Errors in trust judgement in routing can at most cause the packet to not be delivered yet never cause the (encrypted) packet to be readable by the wrong ends or prevent the packet from being resent along a different routing path. That’s the essence of the End-to-end principle where security is injected by the ends and not necessary to trust the communication network between the ends. Whereas, failure of trust in FBA can lead to loss of safety which can’t be undone.

DFINITY

The team of DFINITY has collectively one of the most impressive set of credentials (perhaps rivaled by IOHK, ZCash, and Tezos) I’ve seen yet in the crypto blockchain arena and they have top-tier venture capital such as Polychain Capital and Andreessen Horowitz. For example, Andreas Rossberg being an expert in programming language (PL) theory recently corrected me on the very convoluted concept of value restriction— a concept that applies to PL theory not blockchains nor cryptography. Yet I also recently found a critical oversight in his reasoning that derives from his magnum opus on modularity.

However, after being initially awe-struck I realized that their core competencies (other than for Mahnush Movahedi and the collaboration with EPFL researchers) are in cryptography, math, and PL1 theory. And their apparent lack of applied (outside the halls of ivory towers) experience in blockchain research (including the critically important integration of political-economics and game theory) shows up in some critical weaknesses I found in their design notwithstanding that the DFINITY design is also impressive for the innovations it incorporates. Frankly it appears to me to be the typical case where geeks try to find a market for an algorithm (e.g. their BLS threshold signature scheme which becomes a hammer for every nail) instead of first having a market then developing the engineering to fit the market. I’ll apply considerable effort explaining my analysis of DFINITY’s design because it will exemplify the key weaknesses that I was able to improve upon in the (tentatively named CRED) consensus system design which I’m contributing to.

1 I speculate that their connection to the EPFL originates probably through the German, Castellan archetype (note I have some German ancestry) Martin Odersky the creator of the Scala PL, which has been a kitchen sink PL clusterfuck (c.f. 1, 2, 3, and 4) with an unsound type system.

Block Notarizations Versus Finality

Block creators are randomly selected each with a different weight. The weights guide the incentives to choose the chain with the highest weight. In normal operation where all nodes are honest, only the highest weighted block is confirmed (aka ‘notarized’) in each block period so finality is achieved after only two (2) block confirmations. Each of the two block confirmation is expected to consume a “few seconds”. I’m not 100% confident about that “few seconds” per block confirmation in the real world with the very large group size required.

However, in the pathological cases with many dishonest nodes can cause multiple blocks to be confirmed per block period (and presumably the attacking nodes will put conflicting transactions in these blocks), so finality can be significantly delayed although it will converge in bounded time if the liveness threshold is not exceeded.

DFINITY’s chain can become totally stuck while the small ⅓ liveness threshold is exceeded. Could require a hard fork to unstuck it. In fact all extant non-proof-of-work consensus systems other than Stellar SCP have this same threat of becoming stuck requiring a hard fork to unstuck when the liveness threshold is exceeded. This is yet another reason they’re all run by oligarchies to insure they don’t get stuck. Stellar SCP can become stuck but new trusted nodes might join the network to unstuck it without a hard fork, although this rescue isn’t assured or even necessarily likely.

Imperfect Handling of Network Splits

DFINITY boasts about their network split handling. Although it correctly stalls any minority chain until the network connectivity returns, it also can stall both halves of a network split if both exceed the ⅓ liveness threshold of the permissioned nodes. Whereas, if the liveness threshold was instead ½ then a two-sectioned split will always (unless split exactly in half) always have a half section which doesn’t exceed the ½ liveness threshold and thus is not stalled. However, a ½ liveness threshold can end up with all three sections stalled in a three-sectioned split, but less likely than for a ⅓ liveness threshold.

Undelayable Cryptographic Sortition

DFINITY’s decentralized “random beacon” is a non-interactive2 threshold signature scheme which provides securely randomized cryptographic sortition. Sortition is the process of selecting a sub-group of validators from a larger set of permissioned nodes. DFINITY’s BLS threshold signature scheme is claimed to be a significant improvement over group randomness of the prior art. Unlike Algorand and possibly others, it can’t be aborted by a sophisticated adversary with less than the liveness threshold to create a bias in the randomness. The BLS threshold signature scheme may have analogous or other advantages such as execution speed and non-interactivity compared to the random sortition in Ouroboros and the RandHound employed by OmniLedger.

2 Non-interactivity is a positive attribute in this context, because it means that the signers don’t have to interact with other signers in order to sign and broadcast. The ‘unique’ result is achieved unambiguously regardless of the ordering or proportion of candidate signers who sign, as long as within the liveness and safety threshold mentioned in the next Nothing-at-stake section.

No 100% Short-term Safety

DFINITY’s nothing-at-stake vulnerability to quorum busting (i.e. exceeding the safety threshold) attacks applies both to short-range and long-range attacks. IOW, there’s no resistance to an attacker which controls enough of the permissioned nodes (or of the system hashrate in the case of proof-of-work) to exceed the safety threshold. The objective correctness of DFINITY’s short-term and long-term finality relies on the safety threshold of the random beacon.

This isn’t abnormal because it’s the normal security weakness that applies to all extant consensus systems analysed in this multi-part blog series, including proof-of-work although proof-of-work is at least considered much more difficult to profitably attack than nothing-at-stake. C.f. the subsequent Potential Improvement on the Horizon section for a possible improvement coming.

Nothing-at-stake

The DFINITY whitepaper claims it’s immune to the insecurity of the nothing-at-stake subjectivity which plagues all extant non-proof-of-work consensus systems in insidious ways:

The DFINITY blockchain is further hardened by a notarization process which dramatically improves the time to finality and eliminates the nothing-at-stake and selfish mining attacks.

The notarization step makes it impossible for the adversary to build and sustain a chain of linked, notarized blocksin secret. For this reason, DFINITY does not suffer from the selfish mining attack [4] or the nothing-at-stake problem.

But the notarization relies on the security of the random beacon, which is only secure up to the chosen safety threshold of ⅔ (and a ⅓ liveness threshold) as stated in §7 DECENTRALIZED RANDOMNESS BEACON on pg. 9 where ƒ is the number of Byzantine nodes, t is the number of nodes for quorum, and n is the total number of nodes:

For simplicity of exposition we describe the random beacon protocol for a single group

Gwith|G| = nandn > 2ƒ(G).

The adversary cannot predict the outcome of such a signature if

ƒ ≤ t − 1and cannot prevent its creation ifƒ ≤ n − t.

Thus the adversary with ⅔ of the nodes is not prevented (by the otherwise unpredictable randomness of the random beacon) from creating valid forks in secret and then using them to fool offline users who come online and are unable to objectively detect which fork was the honest one. This is the definition of a nothing-at-stake attack.

Ostensibly the DFINITY team thinks the nothing-at-stake flaw only applies when the safety threshold is not exceeded. They must be making some comparison to the 50+% safety of proof-of-work. But the entire point of nothing-at-stake is in comparison to creating multiple proof-of-work forks which burns an exogenous resource and thus is not free. Whereas, nothing-at-stake consensus systems enable the attacker to construct unbounded number of forks at no cost.

Probably because of said myopia, they propose to make the randomness of the random beacon available to the application layer that runs smart contracts, so the quorum busting attacker can do more than double-spend his own tokens and potentially infiltrate the smart contracts! This proposal is entirely untenable because as explained in the subsequent section, all non-proof-of-work consensus systems are run by quorum busting oligarchies.

Inadequacy of Bonded Stake Deposits

DFINITY has bonded stake deposits to prevent a Sybil attack on the safety threshold for the set of permissioned nodes (aka ‘clients’ or ‘replicas’):

For example, if registration requires a security deposit, a misbehaving client would lose its entire deposit, whereas a miner in a typical proof-of-work blockchain would only forego the block reward during the time of misbehavior […] Moreover, DFINITY supports open membership by providing a protocol to register new clients via a stake deposit with a lock-up period.

³Sybil resistance is achieved for example by requiring a stake deposit for each replica.

Depending on the underlying Sybil resistance method, the endorsement is, e.g., the proof of a locked-up stake deposit, the solution to a proof-of-work puzzle tower, or the certification by a central, trusted authority.

A client joins by submitting a special transaction into a block which also locks up a stake deposit

Bonded stake for nodes (have a carrying cost but) is not an opportunity cost because the attacker can recoup that cost with non-linear ROI by extracting maximum rents when dominating the system and can employ externalities for maximizing profit (even subdividing-and-conquering the politics of the users) such as shorting the token while attacking. Also the attacker can normally attain the stake nearly cost-free. These incentives are a power-vacuum and that is why all non-proof-of-work consensus systems are owned and run by an oligarchy behind the curtain. Normally that oligarchy prefers to appear invisible and benevolent only extracting profits from their control in ways that ignorant users (aka greater fools) are unable to blame on the (often nameless, surreptitious) oligarchy, such as by raising transaction fees to the maximum the market will bear (and fees aren’t even mentioned in the DFINITY whitepaper)! The users are milked by the oligarchy parasite. And then one day the oligarchy crashes the token exchange price, buys up all the tokens cheaply, pumps up the exchange price and repeats the ongoing manipulation. Wash. Rinse. Repeat.

Unsuitable for Wide Deployment

Thus even with forfeitable stake deposits, the nothing-at-stake makes non-proof-of-work consensus systems unsuitable for any sort of fair protocol for the Internet. They’re insolubly and invariably parasitic systems that no honest person could recommend for wide-scale deployment.

Yet Nakamoto proof-of-work systems can’t be scalable (c.f. also). And for proof-of-work, only the one-chain-to-rule-them-all is really secure long-term. Yet that one-chain-to-rule-them-all world reserve currency (i.e. Bitcoin) will kick all the masses off-chain with $50,000 transaction fees.

Potential Improvement on the Horizon

Note I’m currently contributing to an unlaunched, vaporware project tentatively named CRED which posits to be the first non-proof-of-work consensus system that has an adequate protection against nothing-at-stake. It provides stochastic resistance against the quorum busting attacker long-term and an absolute 100% safety threshold in the short-term (a feat that AFAIK has never been achieved before). And it will also be highly scalable with consistent instant confirmations, minuscule transaction fees, a superior ½ liveness threshold, and an alternative mechanism for not getting stuck when the liveness threshold is exceeded.

Cardano’s Ouroboros

Click Here to continue reading Part 4 this blog.

The linked webpages and whitepapers cited in this blog have been archived at archive.is and/or archive.org.

Hi Passing by And Upvoted you :) !

:

“Of the spirit of women. - The spiritual power of a woman is best demonstrated by her sacrificing her own spirit to that of a man out of love of him and of his spirit but then, despite this sacrifice, immediately evolving a new spirit within the new domain, originally alien to her nature, to which the man's disposition impels her. (from Assorted Opinions & Maxims 272)====> Friedrich Nietzsche

Slight correction and clarification is needed on the quoted DFINITY analysis.

Note their whitepaper is presuming

½safety and liveness thresholds, but I presume⅔safety and⅓liveness thresholds, because their assumption is unrealistic about proof-of-stake security.Also I wrote:

And I wrote:

Do you have your works saved in other platforms to ensure their survival?

If you did not start it already, at least remember from the next time to save them in other places too.

It will be sad to lose your content when this platform goes down.

I think one has to have at least a relevant B.Sc. and 3 years of relevant experience to comprehend your posts.

You got upvoted from @adriatik bot! Thank you to you for using our service. We really hope this will hope to promote your quality content!

I also thought of that recently and @smooth provided me with some instructions on how to backup STEEM data. I plan on hiring someone soon I think for that task. Maybe to make an export tool that can be used to import the data over to the ledger I am working developing. But my TODO list is very long so I hope I will get it done.

Would be good to have your works saved, and I am also interested at saving my own.

So if you ever create such a tool or find one, please let me know.

The backup tool was created and run recently. I need to gather the code myself and run it again. But in theory all my posts are archived. Also I archived most of them on

archive.isandweb.archive.org, including the page that lists my blogs and the other page that lists my comment posts. Also archived on those sites thebusy.orgcopy of all my blogs and comment posts.It may be somewhat difficult to reconstruct them if ever Steem goes down. Yet the information will not likely be lost, for those diligent enough to dig it up. Perhaps at some point in the future the archives can be restored to some new project that projects to have a sustainable lifespan, but entropy is always increasing overall and so eventually information is lost.

Glad to see this reply from you. Hope you are well.

This reply confused me multiple times:

How is your project going?

Your obsession with @berniesanders is pathetic. Get a life.

You got upvoted from @adriatik bot! Thank you to you for using our service. We really hope this will hope to promote your quality content!

You got a 100.00% upvote from @luckyvotes courtesy of @stimialiti!

You got a 60.00% upvote from @sleeplesswhale courtesy of @stimialiti!

This comment has received a 66.67 % upvote from @steemdiffuser thanks to: @stimialiti.

Bids above 0.1 SBD may get additional upvotes from our trail members.

Get Upvotes, Join Our Trail, or Delegate Some SP

This one sentence reply has as many upvotes and 10% of the value of the original post. This seems very wrong to the uninformed like me.

Reason is I played this game better than him.

I suppose that explains why he wants to make a new one, lol.

You got a 27.10% upvote from @luckyvotes courtesy of @stimialiti!

You got a 36.25% upvote from @sleeplesswhale courtesy of @stimialiti!

This comment has received a 76.92 % upvote from @steemdiffuser thanks to: @stimialiti.

Bids above 0.1 SBD may get additional upvotes from our trail members.

Get Upvotes, Join Our Trail, or Delegate Some SP

lol man lol!!.,,,.....

I see that Ethereum is trying to copy DFINITY now. I am aware of their research forum:

https://ethresear.ch/

I presume EOS will also try to copy extant published designs which are superior to their DPoS.

This is why the project I am working on will launch as closed source initially until the first-mover advantage (FMA) is fully locked in.

How does an idiot like me with virtually no liquid capital available to invest in a project like yours get involved to help?

LOL, basically, all I have is blind faith that you seem to be one of the most knowledgeable people in the blockchain space, and who goes to Herculean levels of effort to keep the public "in the know".

I presume you’re not a programmer, so the best then is participate in the onboarding land rush and affiliation commissions phase of the project. You should hopefully be able to do very well with that (but no guarantees of course). Early Steem bloggers ended up as millionaires.