TL;DR

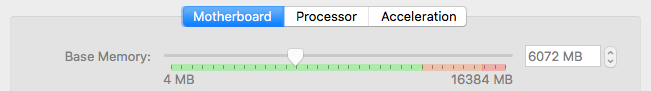

I wanted to share with you all how to begin building an open-source platform for analyzing cryptocurrency market data. If you aren't a fan of getting your trading advice from platforms derived from traditional markets and are curious about building your own tools for forecasting and investing, I hope you'll read further and comment.

All feedback is appreciated.

Free Datahz?

There are many APIs to choose from with more detailed options ... often with a price tag.

I chose this API for a few reasons:

- Free (for now?)

- Historical Data for Many Alt-Coins

- Ease of Use!

(Thanks to http://coinmarketcap.northpole.ro)

Setup

Get VBox

https://www.virtualbox.org/wiki/Downloads

Get ELK VM

https://bitnami.com/redirect/to/151919/bitnami-elk-5.4.1-0-linux-debian-8-x86_64.ova

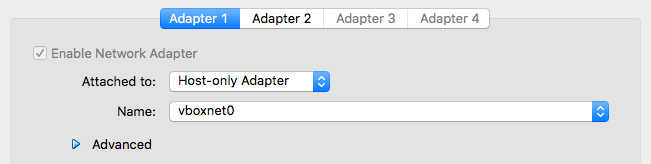

Config ELK VM

Change Adapter 1 to Host-Only

Enable Adapter 2 to NAT

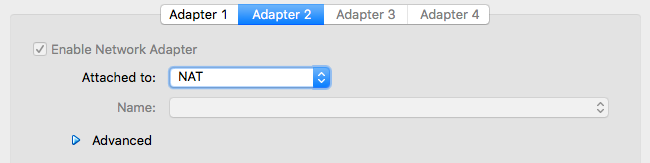

For Improved Performance, Adjust RAM/Processors/Etc.

Login with Defaults

bitnami/bitnami

(Change password)

Enable ssh (this can be skipped, but I preferred ssh for this)

sudo rm -f /etc/ssh/sshd_not_to_be_run

sudo systemctl enable ssh

sudo systemctl start ssh

Install python + pip + elasticsearch.py

sudo apt-get install -y python-dev libssl-dev git

sudo python < <(curl -s https://bootstrap.pypa.io/get-pip.py)

sudo pip install elasticsearch requests

Check IP Address

sudo ifconfig

eth0 Link encap:Ethernet HWaddr de:ad:be:ef:ca:fe

inet addr:192.168.100.101 Bcast:192.168.100.255 Mask:255.255.255.0

Now we are ready to get going ...

The basic workflow is like so:

- Fetch JSON data from API

- Parse JSON data

- Configure elasticsearch index mapping to use the JSON object timestamp

- Send result of parsed JSON data to elasticsearch

This script can be saved to something like chistory.py:

#Imports
import sys
import datetime
import requests
import json
from elasticsearch import Elasticsearch

#Variables
server = 'localhost'
arg1 = sys.argv[1]  # period, e.g. 2017 (also used as the index name)
arg2 = sys.argv[2]  # coin symbol, e.g. BTC
doctype = arg2.lower()
id = arg1

#Send data to ELK function
def sendtoelastic(data):
    try:
        es = Elasticsearch(server)
        es.index(index=id, doc_type=doctype, body=data)
    except Exception as e:
        print('Error sending to elasticsearch: {0}'.format(str(e)))

#Send settings to ELK function
def settingselastic(data):
    try:
        es = Elasticsearch(server)
        # ignore=400 lets the script continue if the index already exists
        es.indices.create(index=id, ignore=400, body=data)
    except Exception as e:
        print('Error sending to elasticsearch: {0}'.format(str(e)))

#Build request, download and load JSON data
payload = {'coin': arg2, 'period': arg1}
r = requests.get('http://coinmarketcap.northpole.ro/history.json?', params=payload)
h = r.text
d = json.loads(h)['history']

#Send settings
settings = '''{"mappings": {''' + '"' + arg1 + '"' + ''': {"properties": {"timestamp": {"type": "date","format": "epoch_second"}}}}}'''
settingselastic(settings)

#Send data
for i in d.items():
    for l in i:
        if type(l) is dict:
            sendtoelastic(json.dumps(l))
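Two parts of the script are easier to follow when pulled out on their own. The sketch below builds the same mapping with `json.dumps` instead of string concatenation, and flattens the `history` object with an explicit filter. The helper names (`build_settings`, `extract_records`) and the sample payload are made up for illustration; the real API response may carry more fields.

```python
import json


def build_settings(type_name):
    """Return index settings JSON that maps `timestamp` as an epoch-second date."""
    mapping = {
        "mappings": {
            type_name: {
                "properties": {
                    "timestamp": {"type": "date", "format": "epoch_second"}
                }
            }
        }
    }
    return json.dumps(mapping)


def extract_records(history):
    """Return only the dict values of the `history` object, skipping anything else."""
    return [value for value in history.values() if isinstance(value, dict)]


if __name__ == "__main__":
    # Hypothetical payload shaped like the 'history' object the script iterates over.
    sample = {
        "01-01-2017": {"timestamp": 1483228800, "change1h": 0.1},
        "02-01-2017": {"timestamp": 1483315200, "change1h": -0.2},
        "note": "not a record",
    }
    print(build_settings("2017"))
    for record in extract_records(sample):
        print(json.dumps(record))
```

Building the mapping as a dict avoids quoting mistakes, and filtering the values directly does the same job as the nested loop over `d.items()`.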

Usage:

python chistory.py 2017 BTC

python chistory.py 2017 BTC;python chistory.py 2017 ETH;python chistory.py 2017 DOGE

python chistory.py 2016 BTC;python chistory.py 2016 ETH;python chistory.py 2016 DOGE
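The script reads `sys.argv` directly, so running it without both arguments fails with an `IndexError`. Here is a minimal sketch of the same two positional arguments handled with `argparse`; the function name `parse_args` and the help strings are illustrative and not part of the original script.

```python
import argparse


def parse_args(argv=None):
    """Parse the period (e.g. 2017) and coin symbol (e.g. BTC)."""
    parser = argparse.ArgumentParser(
        description="Load coin history into Elasticsearch"
    )
    parser.add_argument("period", help="period to fetch, also the index name, e.g. 2017")
    parser.add_argument("coin", help="coin symbol, e.g. BTC")
    return parser.parse_args(argv)


if __name__ == "__main__":
    # A fixed list keeps the example reproducible; call parse_args() to read sys.argv.
    args = parse_args(["2017", "BTC"])
    # Mirrors the original variables: index name and lowercase document type.
    print("index={0} doctype={1}".format(args.period, args.coin.lower()))
```

With this in place, a missing argument produces a usage message instead of a traceback.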

Coins:

A current list of coins can be found here

Dumping Index:

If for any reason we aren't happy with the results, we can delete every index in Elasticsearch (Kibana's saved settings included) like this:

curl -XDELETE 'http://localhost:9200/_all'
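Deleting `_all` removes every index on the node. When only one year needs reloading, a narrower delete may be enough. This is a minimal sketch, assuming the index is named after the period as in the script; `delete_index` is a hypothetical helper, and `client` is expected to be an `Elasticsearch` instance from the Python client installed earlier.

```python
def delete_index(client, index_name):
    """Delete a single index, treating 'index not found' (HTTP 404) as a no-op."""
    if index_name in ("_all", "*", ""):
        raise ValueError("refusing to delete every index")
    return client.indices.delete(index=index_name, ignore=[404])


# Usage (assumes Elasticsearch is reachable on localhost, as in chistory.py):
#   from elasticsearch import Elasticsearch
#   delete_index(Elasticsearch('localhost'), '2017')
```

After that, re-running `python chistory.py 2017 BTC` recreates the index with its mapping.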

Haz Datahs ... Now What?

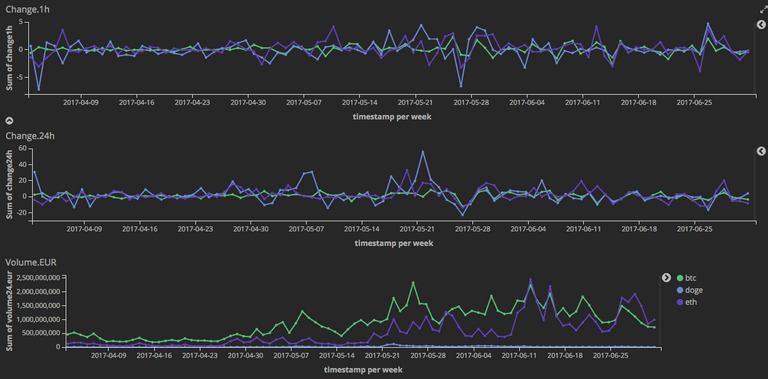

Here's a quick how-to for visualizing your data in Kibana, with a few line graphs that may be of interest ...

- Open your browser and go to http://192.168.100.101/elk (use IP address from above)

- Log in (user/bitnami)

- Create Index "201*"

Create Visualization

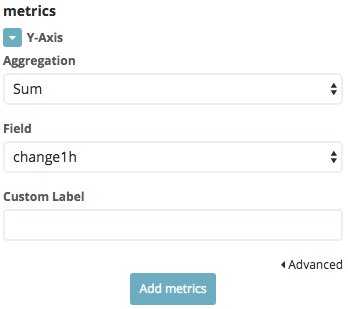

Change.1h x Date

- Click the "Visualize" Button

- Click "Create New Visualization"

- Click "Basic Charts > Line"

- Select Index "201*"

- Click Drop Down Button for Y-Axis

- Select Aggregation > "Sum"

- Select Field > "change1h"

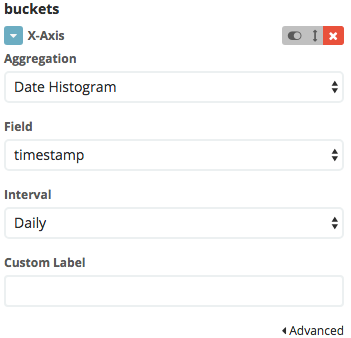

- Click "Select Buckets Type" > X-Axis

- Select Aggregation > "Date Histogram"

- Select Field > "timestamp"

- Select Interval > "Daily"

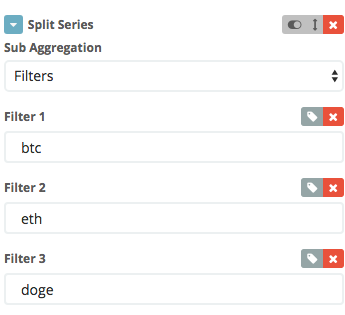

- Click "Add Sub-Buckets" > "Split Series"

- Sub-Aggregation > "Filters"

- Filter 1 > "btc"

- Filter 2 > "eth"

- Filter 3 > "doge"

- Save
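For reference, the chart built above corresponds roughly to an Elasticsearch aggregation: a daily date histogram on `timestamp`, split by one filter per coin, with a sum of `change1h` in each bucket. The sketch below builds such a request body as a plain dict. It is an approximation written for the Elasticsearch 5.x query DSL, not the exact request Kibana sends, and the function and aggregation names (`build_change_query`, `per_coin`, `per_day`, `total`) are made up.

```python
import json


def build_change_query(field="change1h", coins=("btc", "eth", "doge")):
    """Build a search body: per coin, per day, the sum of `field`."""
    return {
        "size": 0,  # only aggregation results are needed, not the hits
        "aggs": {
            "per_coin": {
                # One named bucket per coin, like the Kibana "Filters" split
                "filters": {
                    "filters": {
                        coin: {"query_string": {"query": coin}} for coin in coins
                    }
                },
                "aggs": {
                    "per_day": {
                        "date_histogram": {"field": "timestamp", "interval": "day"},
                        "aggs": {"total": {"sum": {"field": field}}},
                    }
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_change_query(), indent=2))
```

A body like this could be passed to the Python client's `search` call against the "201*" indices to pull the same numbers outside Kibana.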

Change.24h x Date

- Change Y-Axis Field > "change24h"

- Save

- Change name to Change.24h

- Check "Save as a New Visualization" Option

- Save

Volume.EUR x Date

- Change Y-Axis Field > "volume24.eur"

- Save

- Change name to Volume.EUR

- Check "Save as a New Visualization" Option

- Save

Create a Dashboard

- Select Dashboard Button

- Create New Dashboard

- Add Visualizations to Dashboard

On APIs

This is just a basic example of leveraging an API for analytics.

The same basic mechanics should apply to other APIs of interest.

If you'd like to see more API analytics, follow and upvote. I hope this helps someone!

If you made it this far ... Thanks!

Will this API be able to provide the bid spread in a baseline 0 graph, as opposed to what is currently displayed by the crypto exchanges? It may give a better read on momentary momentum.

I'm not really clear on all those buzzwords, but I'll try to explain further ...

This is collecting raw data from the API:

From this point, users/traders/gamblers can begin performing quantitative analysis as we see fit.
