In this article, I will reach far and wide (not too far or wide) to try to bring some hope back into the hearts of those who invested in novel blockchain-based decentralized applications with the belief that they would penetrate the mainstream market sometime soon. I will only cover scaling solutions not already mentioned in Fred Ehrsam’s post; therefore, my discussion is not centered around Ethereum. My focus will be on less popular projects, because the abnormal rate of development in the blockchain space leads me to believe that something else out there may be about to take the market by storm.

The blockchain craze swung into full gear this year, with cryptocurrency and token market capitalizations experiencing growth on an exponential scale. Although we all may be fascinated with the implications of decentralized, blockchain-based applications and the arrival of Web 3.0, we must not get swept away in ecstatic celebration just yet. Reading Fred Ehrsam’s “Scaling Ethereum to Billions of Users” makes it clear that without proper scaling solutions, we won’t be creating a decentralized version of Facebook, Instagram, or any other platform with millions or billions of users anytime soon.

Recent calculations suggest that dApps on Ethereum can only handle about 7 transactions per second. Compare that with Facebook, which handles at least 175,000 requests per second, and we begin to comprehend the massive gap between what is theoretically possible and what is technically realistic for the majority of recent projects. If ICOs are raising unprecedented amounts of money for groundbreaking applications, shouldn’t those applications at least serve a few million concurrent users? Where will these apps be built? What ecosystem will they belong to?
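To put that gap into numbers, here is a quick back-of-the-envelope calculation using only the two figures quoted above. The function name is mine, and treating a Facebook "request" as equivalent to a blockchain "transaction" is a simplifying assumption.

```python
# Figures quoted in the paragraph above.
ETHEREUM_TPS = 7          # transactions per second
FACEBOOK_RPS = 175_000    # requests per second


def throughput_gap(target_rps, chain_tps):
    """How many times more load the target handles than the chain."""
    return target_rps / chain_tps


gap = throughput_gap(FACEBOOK_RPS, ETHEREUM_TPS)
# Time the chain would need to clear one second of Facebook-sized load.
backlog_hours = gap / 3600

print(f"Gap: {gap:,.0f}x")
print(f"One second of Facebook traffic would take about {backlog_hours:.1f} hours")
```

In other words, under these assumptions a single second of Facebook-scale traffic would keep the chain busy for most of a working day.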

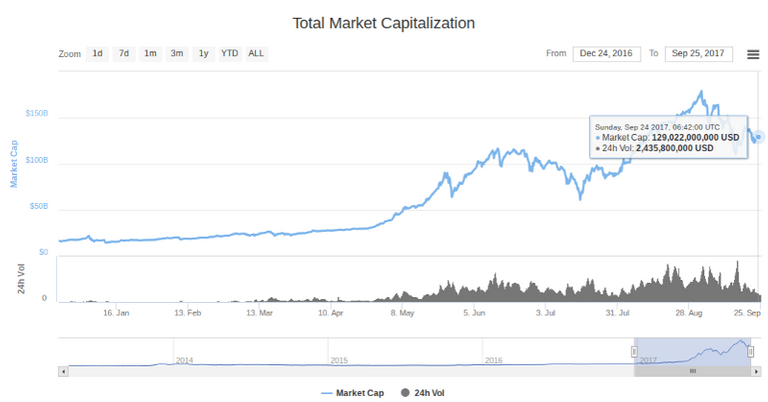

On January 1st, 2017, the total market capitalization of all cryptocurrencies, including tokens, was just over $18 billion. If we exclude Bitcoin from these calculations, the total was only around $2 billion. At that point, Bitcoin accounted for over 80% of the total value in the market.

Today, the total market cap is hovering around $130 billion, and the market cap without Bitcoin amounts to about $68 billion. Now that Bitcoin makes up only half of all the value in the market, and the combined market cap of other blockchain assets has grown over 20X, I believe there must be some new scalable blockchains quickly developing in the growing plethora of emerging projects.
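For anyone who wants to check the arithmetic, here is a small sketch using the rounded figures from the two paragraphs above. Because the inputs are rounded, the outputs are approximate; the function name is my own.

```python
# Rounded market caps in US dollars, taken from the text above.
JAN_TOTAL, JAN_EX_BTC = 18e9, 2e9
NOW_TOTAL, NOW_EX_BTC = 130e9, 68e9


def bitcoin_share(total, ex_btc):
    """Fraction of the total market cap that belongs to Bitcoin."""
    return (total - ex_btc) / total


jan_share = bitcoin_share(JAN_TOTAL, JAN_EX_BTC)
now_share = bitcoin_share(NOW_TOTAL, NOW_EX_BTC)
alt_growth = NOW_EX_BTC / JAN_EX_BTC

print(f"Bitcoin share on January 1st: {jan_share:.0%}")
print(f"Bitcoin share today:          {now_share:.0%}")
print(f"Growth of everything else:    {alt_growth:.0f}x")
```

With these rounded inputs, Bitcoin's share falls from roughly 89% to roughly 48%, and the rest of the market grows about 34 times, which is consistent with the "over 80%", "only half", and "over 20X" claims.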

What follows is a summary of three popular contenders in the field of highly scalable, blockchain-inspired networks. After the summary, I will offer a quick barrage of criticisms for each project.

IOTA:

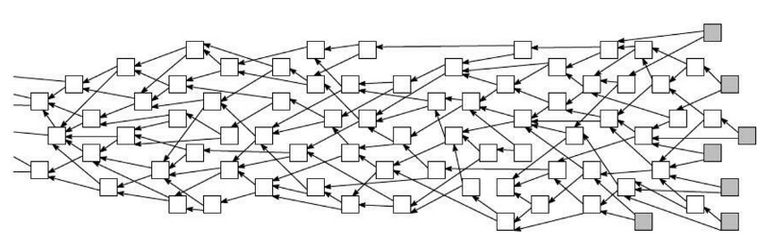

According to its whitepaper, IOTA is a “… cryptocurrency for the Internet-of-Things industry…(and)…works in the following way. Instead of the global blockchain, there is a DAG (= directed acyclic graph) that we call tangle. The transactions issued by nodes constitute the site set of the tangle (i.e., the tangle graph is the ledger for storing transactions)…to issue a transaction users must work to approve other transactions…” Specifically, when issuing a transaction, the node, “chooses two other transactions to approve (in general, these two transactions may coincide), according to some algorithm. It checks if the two transactions are not conflicting and do not approve conflicting transactions. For the transaction to be valid, the node must solve a cryptographic puzzle…similar to those in the Bitcoin mining (e.g., it needs to find a nonce such that the hash of that nonce together with some data from the approved transactions has a particular form, for instance, has at least some fixed number of zeros in front).”
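To make the quoted description more concrete, here is a minimal toy sketch of the idea in Python. It is not IOTA's implementation: SHA-256 stands in for IOTA's own hash function, random selection stands in for the unspecified tip-selection "algorithm", the conflict check is omitted, and the difficulty value and all names (`Tangle`, `issue`, `pow_hash`) are my own assumptions.

```python
import hashlib
import random

DIFFICULTY = 3  # leading hex zeros required; an illustrative value, not IOTA's


def pow_hash(nonce, payload, parents):
    """Hash the nonce together with data from the approved transactions."""
    data = "|".join([str(nonce), payload, *parents])
    return hashlib.sha256(data.encode()).hexdigest()


def solve_puzzle(payload, parents):
    """Brute-force a nonce whose hash starts with DIFFICULTY zeros."""
    nonce = 0
    while not pow_hash(nonce, payload, parents).startswith("0" * DIFFICULTY):
        nonce += 1
    return nonce


class Tangle:
    def __init__(self):
        # Maps each transaction id to the two transactions it approves.
        self.approves = {"genesis": ()}

    def tips(self):
        """Transactions that nobody has approved yet."""
        approved = {p for parents in self.approves.values() for p in parents}
        return [tx for tx in self.approves if tx not in approved]

    def issue(self, tx_id, payload, rng, visible_tips=None):
        """Approve two tips (they may coincide), do the work, attach."""
        tips = visible_tips if visible_tips is not None else self.tips()
        parents = (rng.choice(tips), rng.choice(tips))
        nonce = solve_puzzle(payload, parents)
        self.approves[tx_id] = parents
        return parents, nonce


rng = random.Random(0)
tangle = Tangle()
# Nodes acting at the same moment see the same tips, so the graph branches.
snapshot = tangle.tips()
for name in ("tx1", "tx2", "tx3"):
    tangle.issue(name, f"payload of {name}", rng, snapshot)
parents, nonce = tangle.issue("tx4", "payload of tx4", rng)
print("tx4 approves", parents, "using nonce", nonce)
print("unapproved tips:", tangle.tips())
```

The point of the sketch is only the shape of the process: each new transaction pays for its place in the ledger by approving two earlier ones and doing a small amount of hashing work.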

Now this is quite hard to imagine at first, so here is a visualization of a tangle:

The main benefits of IOTA’s system can be condensed into three points. First, there are no fees per transaction, because there are no miners to perform validation. Every user validates past transactions when making a transaction; therefore, the computational power used to solve the cryptographic puzzle during validation can be considered the ‘fee.’ Microtransactions, with values of only fractions of pennies, become entirely feasible within this structure.

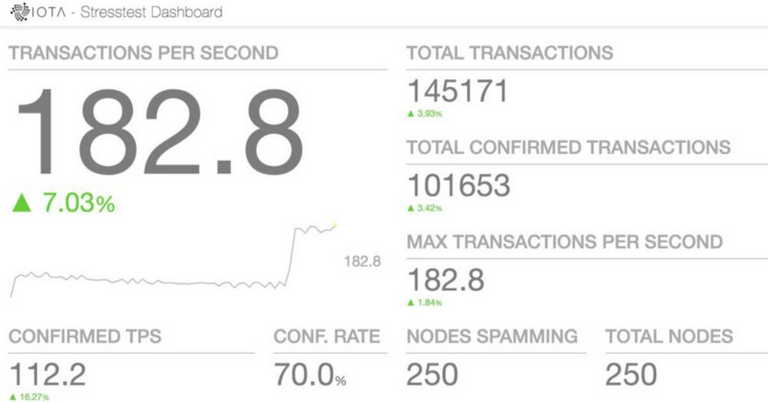

Second, the network’s design makes it stronger as it grows. The more transactions and nodes, the higher the overall security and possible throughput. The Tangle proved it could handle 182 tx/s during a stress test in April, and cofounder Dominik Schiener indicated a target of 1000 tx/s for the next stress test. Theoretically, the design places no fixed upper limit on the number of transactions per second.

The third benefit of this system, released on September 24th, is Flash Channels, which allow real-time streaming of transactions. Lewis Freiberg explains, “When a channel is created each party deposits an equal amount of IOTA into a multi-signature address controlled by all parties. Once the initial deposits are confirmed the channel does not need to interact with the network until it is closed. Once the parties have finished transacting, the final balances are published to the network.” He ends with the claim, “This approach can reduce thousands of transactions down to just two transactions.”

Flash Channels are currently in beta; however, if successful at scale, they open up a number of exciting possible use cases. Any on-demand service that requires instantaneous validation of nano-transactions at high volume, such as pay-per-second streaming, could potentially be implemented using IOTA in the near future.
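The Flash Channel flow Freiberg describes can be sketched as simple bookkeeping. This is a toy model under my own assumptions, not the Flash library's API: the class and method names are hypothetical, signatures are left out entirely, and I count the opening deposit and the closing settlement as one network transaction each to match the "just two transactions" claim.

```python
class FlashChannel:
    def __init__(self, parties, deposit):
        # Each party deposits an equal amount into the shared address.
        self.balances = {party: deposit for party in parties}
        self.network_txs = 1        # the opening deposit (assumed to be one)
        self.offline_transfers = 0  # transfers that never touch the network
        self.is_open = True

    def pay(self, sender, receiver, amount):
        """Move value inside the channel without touching the network."""
        if not self.is_open:
            raise RuntimeError("channel is closed")
        if amount <= 0 or self.balances[sender] < amount:
            raise ValueError("invalid amount")
        self.balances[sender] -= amount
        self.balances[receiver] += amount
        self.offline_transfers += 1

    def close(self):
        """Publish only the final balances to the network."""
        self.is_open = False
        self.network_txs += 1
        return dict(self.balances)


# Pay-per-second streaming: one tiny payment for each second watched.
channel = FlashChannel(["viewer", "streamer"], deposit=5000)
for _ in range(3600):
    channel.pay("viewer", "streamer", 1)
final = channel.close()
print(final, "| offline:", channel.offline_transfers,
      "| on network:", channel.network_txs)
```

An hour of per-second payments becomes 3,600 transfers inside the channel and only two interactions with the network, which is the whole appeal for streaming-style use cases.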

EOS:

The final line of the EOS whitepaper concludes, “The software is part of a holistic blueprint for a globally scalable blockchain society in which decentralised applications can be easily deployed and governed.” Unlike IOTA, which spends most of its whitepaper talking about machine-to-machine payments and connecting the Internet of Things, EOS appears at first glance to better address my fundamental concern: finding a blockchain-based environment for the next generation of decentralized applications with millions or billions of users. Let’s delve deeper into the structure of the ecosystem to see how exactly EOS plans on making this possible.

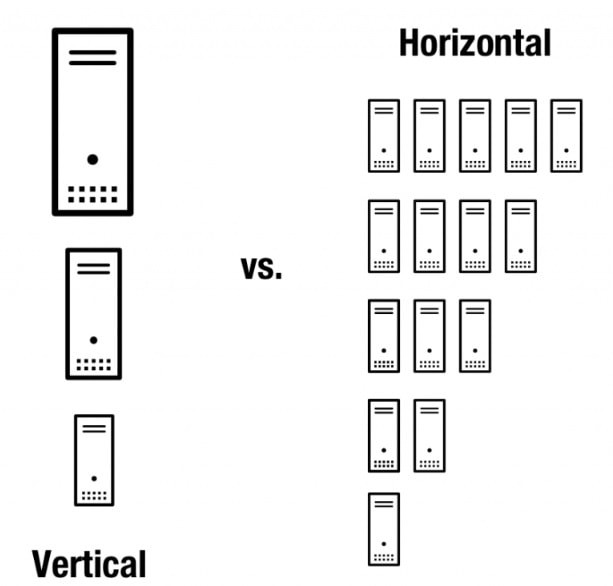

The technical whitepaper was published on June 5, the yearlong ICO was launched on June 26th, and on September 14th, we witnessed the release of the alpha version of EOS.IO software, Dawn v1.0. The abstract of the whitepaper states, “The EOS.IO software introduces a new blockchain architecture designed to enable vertical and horizontal scaling of decentralized applications. This is achieved by creating an operating system-like construct upon which applications can be built….The resulting technology is a blockchain architecture that scales to millions of transactions per second, eliminates user fees, and allows for quick and easy deployment of decentralized applications.”

Here are a couple of graphics to better explain horizontal scaling versus vertical scaling, and what a blockchain-based operating system entails:

According to an introduction by Ian Grigg, the “EOS.IO developer will be supported by a web-based toolkit that provides a fully-serviced framework on which to build applications as distributed web-based systems coordinating over the blockchain. Accounts, naming, permissioning, recovery, database storage, scheduling, authentication and inter-app asynchronous communication are all built in. A goal of the architecture is to provide a fully-provisioned operating system for the builder of apps, focussed to the web because that’s where the bulk of the users are.” Inter-app asynchronous communication is related to EOS’ definition of smart contracts as messages.

Messages are a critical component of the EOS infrastructure: “Each account can send structured messages to other accounts and may define scripts to handle messages when they are received. The EOS.IO software gives each account its own private database which can only be accessed by its own message handlers. Message handling scripts can also send messages to other accounts. The combination of messages and automated message handlers is how EOS.IO defines smart contracts.” Therefore, what must be proven functional at scale, in order to bring real value to the project, are asynchronous smart contracts (ASCs). ASCs are the backbone of EOS’ plan to actualize horizontal scaling and increase the number of possible transactions per second within dApps to the levels needed for mainstream usage. If successfully implemented, according to Jean-Pierre Buntinx, “…this will allow the underlying blockchain to process a high amount of transactions at the same time, while still being able to serve other network commands.” Specifically, the implementation of ASCs “allows for transaction processing and Dapp execution at the same time without creating a “queue” on the blockchain.” The queue, in any blockchain in use today, is the source of the majority of speed constraints within the network.
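The message-and-handler model in the whitepaper quote can be illustrated with a few lines of Python. This is only a sketch of the programming model, not EOS.IO code: the `Account` and `Chain` classes and the "hello"/"ack" message types are hypothetical, and the toy delivers messages one at a time, so it says nothing about the parallelism ASCs are meant to unlock.

```python
from collections import deque


class Account:
    def __init__(self, name):
        self.name = name
        self.db = {}        # private database, touched only by own handlers
        self.handlers = {}  # message type -> handler script

    def on(self, msg_type, handler):
        self.handlers[msg_type] = handler


class Chain:
    def __init__(self):
        self.accounts = {}
        self.pending = deque()

    def create_account(self, name):
        self.accounts[name] = Account(name)
        return self.accounts[name]

    def send(self, to, msg_type, data):
        """Queue a structured message for another account."""
        self.pending.append((to, msg_type, data))

    def run(self):
        """Deliver queued messages; handlers may queue further messages."""
        delivered = 0
        while self.pending:
            to, msg_type, data = self.pending.popleft()
            account = self.accounts[to]
            handler = account.handlers.get(msg_type)
            if handler is not None:
                handler(self, account, data)
                delivered += 1
        return delivered


def on_hello(chain, account, data):
    # Update only this account's database, then message the sender back.
    account.db["greetings"] = account.db.get("greetings", 0) + 1
    chain.send(data["from"], "ack", {"count": account.db["greetings"]})


def on_ack(chain, account, data):
    account.db["last_ack"] = data["count"]


chain = Chain()
chain.create_account("greeter").on("hello", on_hello)
chain.create_account("alice").on("ack", on_ack)
chain.send("greeter", "hello", {"from": "alice"})
print("messages delivered:", chain.run())
print("alice's private db:", chain.accounts["alice"].db)
```

Because each handler only ever touches its own account's database and talks to others through messages, accounts do not share state directly, which is the property that makes running them side by side plausible.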

Three months ago, EOS developers conducted a test run of a currency smart contract. The developers explained, “We…used EOS transactions to create an account (@simplecoin) and upload the WASM code to the test blockchain…This code was compiled using the WasmFiddle interface to generate the WebAssembly (WASM). It uses several simple API calls such as readMessage, load and store to fetch and store information from the blockchain. This particular contract will create 1 million coins and allocate them to the account @simplecoin, then it will allow @simplecoin to transfer these coins to other accounts which in turn can transfer them to others.” For the actual test, a developer stated, “I created a unit test that would load this contract and then construct individual transactions to transfer funds from @simplecoin to @init1 1000 times…The final result yielded about 50,000 transfers per second average over many different runs.” The results of this experiment, although the developers cautioned that it was too early to draw conclusions, may be a glimpse of the coming capabilities of a fully functional EOS.
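For readers who want a feel for what such a currency contract does, here is a minimal Python stand-in for the logic described in the quote: create 1 million coins for @simplecoin, then transfer from @simplecoin to @init1 1000 times. It is not the developers' WASM contract; the class name, the transfer amount of 1 coin, and the timing code are my own assumptions, and the speed of this toy says nothing about EOS itself.

```python
import time


class SimpleCoin:
    def __init__(self, issuer="simplecoin", supply=1_000_000):
        # The contract creates the full supply and gives it to the issuer.
        self.balances = {issuer: supply}

    def transfer(self, sender, receiver, amount):
        """Move coins between accounts, refusing overdrafts."""
        if amount <= 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient funds or invalid amount")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount


coin = SimpleCoin()
start = time.perf_counter()
for _ in range(1000):
    coin.transfer("simplecoin", "init1", 1)
elapsed = time.perf_counter() - start

print(coin.balances)
print(f"1000 in-memory transfers took {elapsed:.6f} seconds")
```

The real test also had to validate and apply each transfer as a blockchain transaction, so the number that matters is the developers' measurement, not anything this snippet prints.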

In addition to the asynchronous smart contracts and the operating system-like construct, it is worth mentioning the feeless structure and the Delegated Proof of Stake consensus mechanism. Transactions are feeless because users and dApp developers are required to hold a certain number of tokens in order to interact with the network. The number of tokens in your account impacts what you can do on the network. The Delegated Proof of Stake (DPoS) consensus mechanism works like a republic, where tokenholders elect ‘witnesses’ who are responsible for making high-level administrative decisions.
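The "republic" idea behind DPoS boils down to stake-weighted voting. Here is a minimal sketch, assuming each tokenholder backs a single candidate and that votes are weighted by token balance; the function name, the one-vote-per-holder rule, and the sample numbers are all hypothetical simplifications rather than EOS's actual voting rules.

```python
from collections import Counter


def elect_witnesses(stakes, votes, seats):
    """Return the `seats` candidates with the most stake behind them."""
    tally = Counter()
    for holder, candidate in votes.items():
        tally[candidate] += stakes.get(holder, 0)
    # Sort by stake (descending), then by name so ties resolve predictably.
    ranked = sorted(tally.items(), key=lambda item: (-item[1], item[0]))
    return [candidate for candidate, _ in ranked[:seats]]


stakes = {"ann": 40, "ben": 35, "cat": 20, "dov": 5}
votes = {"ann": "witness_a", "ben": "witness_b",
         "cat": "witness_b", "dov": "witness_c"}
print(elect_witnesses(stakes, votes, seats=2))
```

Note how the largest single holder does not automatically win: two smaller holders voting together outrank her candidate, but a holder with very few tokens has almost no say.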

Together, all of the features of EOS make for an appealing and exciting project, whose promises are the stuff of dreams for dApp developers and investors. However, it is important to note that for now, the project is just an early prototype, without a fully released blockchain. Before you get too upset, I will say that I think the odds of this master plan coming to fruition are not that low, given the blockchain celebs working on the project and the backing of Block.one.

RADIX:

This project is actually a new one for me! I had never heard of Radix until I started looking into highly scalable blockchains for this article. I heard about EOS during the summer while trading other cryptocurrencies, and I found IOTA on Bitfinex around the same time. I was surprised to discover that Radix, which was previously called eMunie, has a history dating back years, and is tied directly to the work of one developer, Dan Hughes.

The first couple of posts I encountered while scanning the Radix forum for basic information completely caught me off guard. After spending so much time researching ICOs, and experiencing firsthand the madness of stream after stream of inappropriately valued startups crowding into the blockchain space, what I found in those posts was pleasantly surprising. On Sept 26th, in response to a question about the status of the anticipated Radix ICO, Dan Hughes replied, “The thing is, we have enough in the pot to get us to the finish line…I’ve invested heavily into the project (liquidated investments, sold assets and even downsized my house) to ensure that we aren’t reliant on external money from unknown parties to get development done.” He continued, “Why have an en-mass ICO, even a capped one, when we don’t need the money to finish development. We might as well wait until we need funds for scaling up and marketing…If people want to invest now, they’ll want to invest in say 6 months, probably more so, as there will be greater progress.” At this point, I started loving the spirit behind the development of this project. Now let’s delve into the whitepaper and see if this enthusiasm lasts.

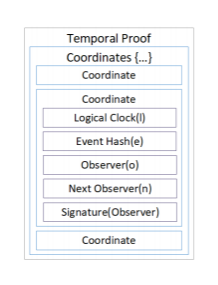

The whitepaper, published on September 25th, states, “In this paper we present a novel method for implementing a Distributed Ledger that preserves total order of events allowing for the trust-less transfer of value, timestamping and other functionality….and requires no special hardware or equipment.” Specifically, “….we propose a solution to the double-spending problem using a peer-to-peer network of nodes with logical clocks to generate a temporal proof of the chronological order of events. The system is secure providing nodes with a historical record of the generated temporal proofs are able to participate. Further, by providing the ability to execute arbitrary scripts, a fully expressive, Turing complete, state change system may be built on the new consensus paradigm.” After reading this, I identified one term that definitely required further explanation: ‘logical clocks.’ A logical clock is “an ever-increasing integer value representing the number of events witnessed by that node. Nodes increment their local logical clock when witnessing an event which has not been seen previously. Upon storing an event the node also stores its current logical clock value with it.”
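The logical clock definition quoted above translates almost directly into code. Here is a minimal sketch; the class and method names are mine, not Radix's.

```python
class ClockedNode:
    def __init__(self, node_id):
        self.node_id = node_id
        self.clock = 0    # number of distinct events witnessed so far
        self.stored = {}  # event id -> clock value stored with the event

    def witness(self, event_id):
        """Increment the clock only for events not seen previously."""
        if event_id not in self.stored:
            self.clock += 1
            self.stored[event_id] = self.clock
        return self.stored[event_id]


node = ClockedNode("N")
for event in ["atom-a", "atom-b", "atom-a", "atom-c"]:
    print(event, "-> logical clock value", node.witness(event))
```

Seeing `atom-a` a second time does not move the clock, so the stored values give each node a simple, local record of the order in which it first saw each event.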

As the paper progresses, several terms are introduced to help visualize the network. Tempo is the name of the ledger system used in Radix. An instance of the Tempo is called a universe, and any event occurring within the universe is called an atom. The Tempo Ledger consists of a networked cluster of nodes, a global ledger database distributed across the nodes, and an algorithm for generating a cryptographically secure record of temporally ordered events. The first two components should be relatively familiar for anyone reading this article, and the third component is where we find the ‘secret ingredient’ of this entire project, logical clocks. The paper continues, “A Universe is split into Shards, where nodes are not required to store a complete copy of the global ledger or state. However, without a suitable consensus algorithm that allows nodes to verify state changes across shards “double spending” would be a trivial exercise...Temporal Proofs provide a cheap, tamper resistant, solution to the above problem.” Therefore, “Before an event can be presented to the entire network for global acceptance, an initial validation of the event is performed by a sub-set of nodes which…results in: A Temporal Proof being constructed and associated with the Atom, and a network wide broadcast of the Atom and its Temporal Proof.”

Basically, every transaction or event in the universe is ‘stamped,’ so to speak, with a temporal proof consisting of space-time coordinates and signatures. This information is used to prevent double spending and settle disputes on the ledger. Part of this ‘stamp’ is an integer value, or ‘timestamp,’ taken from the logical clock of every new node that encounters the atom, or ‘package,’ on its trip through different nodes and shards in the universe. It helps me to visualize this in terms of the postal service.

Here is a more technical visualization of the components of the temporal proof:

Even more specifically, when a user broadcasts an atom to Node(N), the node verifies the transaction, then forwards the information to the next relevant node, Node(P). Node(P) needs the information about this transaction because it contains shards that hold the records of either, or both, of the users involved in the event. The process begins when Node(N) “…append(s) a space-time coordinate (l, e, o, n) and a signature of Hash(l, e, o, n) to the Temporal Proof (creating a new one if none is yet present). Where l is Node(N)’s logical clock value for the event, o is the ID of the observer Node(N), n is the ID of Node(P), and e is the event Hash(Atom).”
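Here is how I picture that step in code: a hedged sketch, not the Radix implementation. SHA-256 stands in for the whitepaper's unspecified Hash, and an HMAC with a per-node secret stands in for a real digital signature (a genuine system would use public-key signatures that anyone can verify). The names `TempoNode`, `observe`, and `digest` are my own.

```python
import hashlib
import hmac


def digest(*parts):
    """Stand-in for Hash(...): SHA-256 over the joined parts."""
    joined = "|".join(str(part) for part in parts)
    return hashlib.sha256(joined.encode()).hexdigest()


class TempoNode:
    def __init__(self, node_id, secret):
        self.node_id = node_id
        self.secret = secret  # bytes; placeholder for a private signing key
        self.clock = 0
        self.seen = {}

    def sign(self, message):
        # HMAC is only a placeholder for a real signature scheme.
        return hmac.new(self.secret, message.encode(),
                        hashlib.sha256).hexdigest()

    def observe(self, atom, next_node_id, proof=None):
        """Append (l, e, o, n) and a signature of Hash(l, e, o, n)."""
        proof = list(proof or [])  # create a new proof if none is present
        e = digest(atom)           # e: the event, Hash(Atom)
        if e not in self.seen:     # logical clock ticks on unseen events
            self.clock += 1
            self.seen[e] = self.clock
        l = self.seen[e]           # l: this node's clock value for the event
        coordinate = (l, e, self.node_id, next_node_id)  # o, n: observer, next
        proof.append({"coordinate": coordinate,
                      "signature": self.sign(digest(*coordinate))})
        return proof


node_n = TempoNode("N", b"secret-of-n")
node_p = TempoNode("P", b"secret-of-p")
node_n.observe("an earlier atom", "P")  # advances N's clock to 1
proof = node_n.observe("alice pays bob 5", "P")
proof = node_p.observe("alice pays bob 5", "Q", proof)
for entry in proof:
    l, e, o, n = entry["coordinate"]
    print(f"observer {o} -> next {n}: clock {l}, event {e[:8]}...")
```

Each hop adds one signed coordinate, so by the time the atom has travelled through the relevant nodes, its proof carries a trail of who saw it, when (in logical time), and where it was sent next.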

Another visualization to help understand the process, and the progression of the logical clock values:

As you can see, we can clearly tell which event occurred in which order due to the logical clock values attributed to the event along its road to verification. We can also see that by only requiring nodes that possess the shards connected to the specific records of the involved users to validate a transaction, the ledger becomes both highly scalable and extremely efficient.

Rewards for nodes will be distributed according to work done. In other words, if your node decided to “…broadcast that it was processing only partition 123. Any transactions that live in that partition are then received by your node, and that node would earn rewards for the work done upon transactions in partition 123.” I could continue going over the whitepaper for pages and pages; personally, I think it is one of the most concise, professional, and logical whitepapers I have read so far. However, I feel as though I might be straining your attention span already, so I will just recommend looking over the rest of it on your own time.
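To illustrate the partition idea, here is a small sketch of routing transactions only to the nodes that serve the partitions involved, and counting the work each node does. Everything specific here is an assumption of mine: the number of partitions, the rule that an account's partition is a hash of its name, and the idea that "work done" is simply a count of transactions handled.

```python
import hashlib
from collections import defaultdict

NUM_PARTITIONS = 256  # illustrative value only


def partition_of(account):
    """Assumed rule: an account lives in the partition given by its hash."""
    return int(hashlib.sha256(account.encode()).hexdigest(), 16) % NUM_PARTITIONS


def route(transactions, node_partitions):
    """Count, per node, the transactions that touch a partition it serves."""
    work = defaultdict(int)
    for sender, receiver in transactions:
        touched = {partition_of(sender), partition_of(receiver)}
        for node, served in node_partitions.items():
            if touched & served:
                work[node] += 1
    return dict(work)


transactions = [("alice", "bob"), ("alice", "carol"), ("dave", "erin")]
nodes = {
    "node-1": {partition_of("alice")},                      # one partition
    "node-2": {partition_of("bob"), partition_of("erin")},  # two partitions
    "node-3": set(),                                        # serves nothing
}
print(route(transactions, nodes))
```

A node that serves few partitions sees only a slice of the traffic and is paid for that slice, which is what lets small machines take part without storing or validating everything.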

Let’s get into real-world testing and the practical viability of the project. On August 23rd, Radix announced it had privately deployed its network for an insurance company, Surematics. They were keen to emphasize that this was not a test, pilot, or trial. So far it looks like everything is running smoothly, and if we track Radix back to when it was called eMunie, we find that the technology has been undergoing testing for years. On Sept 27th, Dan Hughes commented that within a few weeks, during public testing, Radix should hit 100k tx/s or higher. On April 14th, a test was conducted using only two nodes, which resulted in over 14k tx/s. If throughput grows with the number of partitions being processed, as the design intends, tests with a decent number of nodes should be able to exceed 100k tx/s.

I am excited to follow the project for the next several months, and I believe this might be the ‘sleeping dragon’ I had predicted to exist in this space. Further documentation, specifically on the process of building applications on the network, will be provided in the near future. For now, I see an organically funded, well-developed, tried and tested, highly scalable distributed ledger network that is only now moving into the public sphere.

Popular Critiques:

I will keep this section short and only mention critiques raised by informed members of the public and prominent blockchain commentators.

IOTA:

The most popular criticism of IOTA’s technology involves recent vulnerabilities found in its custom hash function, Curl. Neha Narula reported, “We show the details of our proposed attacks, one which destroys user funds and one which steals IOTA from a user, in this repository.” This report has generated a bunch of angry remarks and back-and-forth accusations; however, the facts are that the vulnerabilities did exist, and they have now been patched.

The second most popular criticism revolves around the use of the Coordinator node. Currently, only “…by relying on at least 67% of users being good actors and selecting only valid transactions to reference, IOTA can eliminate the possibility of double-spends being successful.” Therefore, due to the low number of transactions currently handled on the network, a Coordinator is used to ensure security. Specifically, “The Coordinator is run by Iota Foundation, its main purpose is to protect the network until it grows strong enough to sustain against a large scale attack from those who own GPUs.” The Coordinator protects the network by setting milestones, which ensure no double spends have occurred. This worries some people, due to the inherent centralization risks of having everything monitored by a node controlled by a foundation. The developers promise this is a temporary measure.

Finally, the last critique of the network is IOTA’s use of ternary instead of binary. Ternary is a numerical system that uses three digits, instead of the two used in binary. Most processors and computers today are built using binary; therefore, for use on existing hardware, “…all of its internal ternary notation has to be encapsulated in binary, resulting in significant storage and computational overhead. Math must either be performed on individual ‘trits’ or first converted from binary-wrapped-ternary encoding into the machine’s native number representation, and back again afterwards — in either case imposing a large computational overhead.”
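To see why wrapping ternary in binary costs something, here is a small sketch that converts integers to and from ternary digits. I am assuming the balanced form, where each trit is -1, 0, or 1, and, purely for illustration, a naive encoding that stores each trit in its own byte; neither detail is taken from the quote above.

```python
import math


def to_trits(number):
    """Balanced-ternary digits of an integer, least significant first."""
    if number == 0:
        return [0]
    trits = []
    while number != 0:
        remainder = number % 3
        if remainder == 2:  # represent 2 as -1 and carry into the next trit
            remainder = -1
        trits.append(remainder)
        number = (number - remainder) // 3
    return trits


def from_trits(trits):
    """Convert balanced-ternary digits back to a native integer."""
    return sum(trit * 3 ** index for index, trit in enumerate(trits))


value = 1_000_000
trits = to_trits(value)
bits_per_trit = math.log2(3)  # information actually carried by one trit

print("trits:", trits)
print("round trip ok:", from_trits(trits) == value)
print(f"a trit carries about {bits_per_trit:.3f} bits")
print(f"native binary: {value.bit_length()} bits; "
      f"one byte per trit: {len(trits) * 8} bits")
```

Every arithmetic operation on a binary machine has to pay for a conversion like this in one direction or the other, and unless trits are packed tightly, most of the bits used to store them are wasted.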

EOS:

The first concern with EOS centers around its proposed usage of Delegated Proof of Stake and its creator Dan Larimer, who also founded Bitshares and Steem. CoinDesk reported, “According to Tone Vays, a blockchain consultant, in both Larimer’s former projects, the cryptocurrencies at work were controlled predominantly by a small inner circle looking to hype the platform.” Tone Vays continued, “Both Bitshares and Steemit allowed insiders to create lots of tokens for themselves, and after that, the proof-of-stake nature of the project allowed those insiders to print tokens of value for themselves in perpetuity.”

The second concern was raised by Vitalik Buterin, “Dan’s EOS achieves its high scalability by relying on a small number of what are essentially master nodes of a consortium chain, removing Merkle proofs and any other protections that would allow regular users to audit any part of the system’s execution unless they want to personally run a full node themselves.” The ‘master nodes’ mentioned refer to the fact that, “At a million transactions per second each node is required to achieve 100’s of megabytes per second per connection.” Therefore, “At scale, block producers will be operating a new internet backbone powered by EOS.IO software. Block producers will be like Tier-1 Internet providers with dedicated fiber optic connections across continents. These producers will operate data centers that Tier-2 subscribers can connect to. Tier-2 includes anyone looking to run a full or partial node or a large application. For example, services like block explorers, web wallets, and crypto-currency exchanges would be Tier-2 subscribers to the block producers.”
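A rough calculation shows where the "100’s of megabytes per second" figure comes from. The average transaction size below is my own hypothetical number, chosen only to illustrate the order of magnitude; it does not come from the EOS whitepaper.

```python
TX_PER_SECOND = 1_000_000
ASSUMED_TX_BYTES = 200  # hypothetical average transaction size


def bandwidth_mb_per_second(tx_per_second, tx_bytes):
    """Sustained bandwidth needed just to receive every transaction."""
    return tx_per_second * tx_bytes / 1e6


def storage_tb_per_day(tx_per_second, tx_bytes):
    """Raw transaction data accumulated over 24 hours."""
    return tx_per_second * tx_bytes * 86_400 / 1e12


print(f"{bandwidth_mb_per_second(TX_PER_SECOND, ASSUMED_TX_BYTES):.0f} MB/s")
print(f"{storage_tb_per_day(TX_PER_SECOND, ASSUMED_TX_BYTES):.2f} TB per day")
```

Under this assumption, a full node would need to sustain around 200 MB/s and absorb roughly 17 TB of new data every day, which is why Buterin argues that only data-center-class 'master nodes' could keep up.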

RADIX:

I haven’t been able to find too many criticisms of Radix; however, that itself could be a critique of the project. In other words, a lot of the technology is still under wraps, as multiple patents have been filed, and it seems like the information on the specifics will only be released once the tech has been tested and maybe even patented.

One quote from the Bitcointalk forum sticks out: “Radix was eMunie. If I remember correctly it was only 1 guy called Dan and he was weird and paranoid wouldn’t let anybody else work on development, which is just the antithesis of community and the project pretty much died.”

Concluding Remarks:

On Sept 27th, Vitalik Buterin, co-founder of Ethereum, stated in an interview relating to a timeline for proposed scaling solutions, “I would say two to five, with early prototypes in one year. The various scaling solutions, including sharding, plasma and various state channel systems such as Raiden and Perun, are already quite well thought out, and development has already started. Raiden is the earliest, and its developer preview release is out already.” This is the timeline for what many consider the top contender in the race for the perfect ecosystem for dApp development. If any of the listed projects have a chance of staking their claim in the space, I think this is the timeline they have to beat. I know I will be watching the next year closely, because 2018 will be the year of the race for the scalable blockchain! One thing we do know: it will not be a boring race. I have my eye on Radix, but that is merely based on a ‘feeling.’ What is your top pick?

Stay tuned for updates from Shokone. We will be watching the developments in this space closely, and soon you will see a blockchain-based project revolutionize your conception of social media. Envision a highly scalable, convenient, microtransaction-based platform for original creative content.

If you enjoyed this article, or think someone else might, please share. If you want to talk more, or debate my points, leave a comment below, or contact me on LinkedIn.

Sources:

The original post with full citations can be found on https://medium.com/@zvnowman

Great article, I learnt so much by reading this! Do you think there's a chance that Ethereum can scale up with more users/nodes?

Looking forward to more news on this topic (following you).

I think it will be a close race. They do have the benefit of a $150 million foundation, but also the downside of a crowded blockchain. It will be interesting to see what happens in the near future, and we won't have to wait long to find out!

Thanks a bunch! Great to hear the information was helpful for someone!

This was a cogent piece. I felt you freely handed us a juicy slab of your brain, and I feel enriched by your insights into these three projects. Do you have an idea why IOTA chose a ternary number system?

This is the first I have heard of Radix, aside from it being a cutting early-80s post-apocalyptic sci-fi novel. If it is already deployed successfully commercially, it could really come out of left field and enter the game with momentum.

I know Dan Larimer has addressed some of Vitalik's criticism you mentioned, on Merkle tree proofs and other points, in this article:

https://steemit.com/eos/@dan/response-to-vitalik-buterin-on-eos

He says: EOS does have a merkle tree over all the transactions within a block. This means it is possible to prove you have been paid without having to process all blocks nor trust the full nodes. In fact, these proofs are smaller than ETH because light nodes don’t even require the full history of block headers.

Anyway, zvnowman, thanks for your clarity and intelligence, and I look forward to more. What a fascinating game to watch....

Wow I just saw this comment, thanks so much! To be honest this did take a whole lot of time, way more than I intended on spending. Thanks for the link to Dan's responses. I agree, it is definitely a game to watch, I appreciate your intelligent commentary. I'm going to have to check out that Radix novel!

In reference to the ternary number system, I remember reading an explanation that had to do with its ability to perform complex computations more quickly, which theoretically does give it a major advantage, if only the hardware were available for it.