For this long-overdue edition of fresh papers I'll be checking out a new research paper published by the Google Brain team, who are working on some fascinating research with neural networks and cryptography.

If these topics interest you please let me know! I have a huge backlog of papers I had been collecting for this purpose, but when the first edition only made 60 cents I got deflated and never came back to it. Was that a mistake?

- See the Introduction to the "fresh papers" series for more information

Learning to protect communications with adversarial neural cryptography

Authors: Martín Abadi and David G. Andersen

From: Google Brain Research Department

Whitepaper: https://arxiv.org/pdf/1610.06918v1.pdf

Abstract from the whitepaper

We ask whether neural networks can learn to use secret keys to protect information from other neural networks. Specifically, we focus on ensuring confidentiality properties in a multiagent system, and we specify those properties in terms of an adversary. Thus, a system may consist of neural networks named Alice and Bob, and we aim to limit what a third neural network named Eve learns from eavesdropping on the communication between Alice and Bob. We do not prescribe specific cryptographic algorithms to these neural networks; instead, we train end-to-end, adversarially. We demonstrate that the neural networks can learn how to perform forms of encryption and decryption, and also how to apply these operations selectively in order to meet confidentiality goals.

My summary and musings

This experiment is fascinating to me, and could have some profound implications as these techniques are refined and studied further. Neural networks allow us to find solutions to problems in ways that a human would never consider, and can in some ways be thought of as coding by brute force.

Google has been researching neural nets for years already, and many of their products now rely on them to work efficiently. Image and language recognition are two areas where Google has made huge advances using neural networks. To learn more about their research, see this post:

- Inceptionism: Going Deeper into Neural Networks (research.googleblog.com)

[ Image created by Google's DeepDream neural network ]

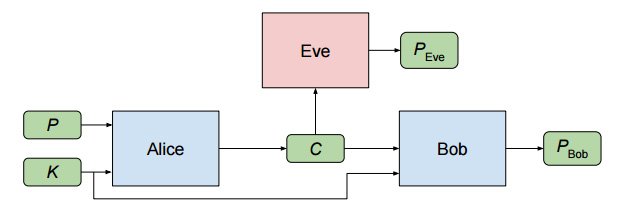

For the purposes of this experiment the researchers created 3 separate neural networks, named Alice, Bob and Eve, in this arrangement:

Legend: K = shared secret, P = private data, C = encrypted data

[ Image source: Whitepaper ]

The goal is for Alice and Bob to invent their own encryption scheme and pass along the private data to each other, using a method that Eve is unable to decipher.
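To make the arrangement concrete, here is a minimal sketch of the data flow only. The networks in the experiment were not given any algorithm, so the XOR step below is a hand-written stand-in (my assumption, not what Alice and Bob learned), and the function names `alice`, `bob` and `eve` are just labels for the three roles in the legend.

```python
import secrets


def alice(p: bytes, k: bytes) -> bytes:
    """Turn private data P into encrypted data C using the shared secret K."""
    # Stand-in cipher (assumption): XOR each byte of P with the matching byte of K.
    return bytes(pb ^ kb for pb, kb in zip(p, k))


def bob(c: bytes, k: bytes) -> bytes:
    """Recover P from C; XOR with the same K undoes Alice's step."""
    return bytes(cb ^ kb for cb, kb in zip(c, k))


def eve(c: bytes) -> bytes:
    """Eve sees only C and has no K; this placeholder returns C as her guess."""
    return c


p = b"\xa5\x3c"                   # 16 bits of private data
k = secrets.token_bytes(len(p))   # shared secret known to Alice and Bob only
c = alice(p, k)                   # the only thing Eve gets to see

print("Bob recovered P:", bob(c, k) == p)
print("Eve's guess equals P:", eve(c) == p)
```

The point is the shape of the setup: Bob gets C and K, Eve gets C alone, and both try to output P.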

Alice and Bob have a shared secret key (as in symmetric encryption) that they can use as part of their technique, but they were not taught anything about encryption in advance. All they know is their input and what their "win" condition is, and training continually adjusts their internal weights until they succeed.
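The "win" conditions can be written as two scores that training tries to push down. The sketch below is loosely based on the loss functions described in the whitepaper, but the exact scaling, the -1/+1 bit encoding and the function names are assumptions for illustration, not the authors' code.

```python
def bits_wrong(plaintext, guess):
    """Half the L1 distance between two vectors of -1.0/+1.0 values.

    When the guess is exactly -1 or +1 in every position, this equals the
    number of wrong bits; for in-between values it is a "soft" count.
    """
    return sum(abs(p - g) for p, g in zip(plaintext, guess)) / 2.0


def eve_loss(plaintext, eve_guess):
    """Eve wins by reconstructing the private data as accurately as possible."""
    return bits_wrong(plaintext, eve_guess)


def alice_bob_loss(plaintext, bob_guess, eve_guess):
    """Alice and Bob win when Bob is right and Eve does no better than chance."""
    n = len(plaintext)
    bob_error = bits_wrong(plaintext, bob_guess)
    eve_error = bits_wrong(plaintext, eve_guess)
    # Zero when Eve gets exactly half the bits wrong (pure guessing),
    # growing as she drifts towards all-right or all-wrong.
    eve_term = (n / 2.0 - eve_error) ** 2 / (n / 2.0) ** 2
    return bob_error + eve_term


p = [1.0, -1.0, 1.0, -1.0]
print(alice_bob_loss(p, bob_guess=p, eve_guess=[1.0, -1.0, -1.0, 1.0]))
print(alice_bob_loss(p, bob_guess=p, eve_guess=p))
```

In this sketch Eve getting every bit wrong is penalised just as much as Eve getting every bit right, because a guess that is always wrong can simply be flipped. The target for Alice and Bob is an Eve who is right about half the time.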

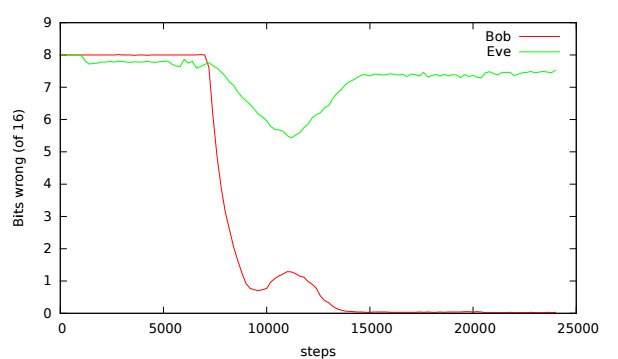

[ Image source: Whitepaper ]

As you can see in the results above, the experiment worked!

The tests were tweaked and re-run many times, but in this particular batch we can see:

- After ~7,000 training steps a mostly working but unreliable encryption/decryption system was created between Alice and Bob

- After ~8,000 training steps Eve began being able to decrypt with a moderate success rate

- After ~10,000 training steps Bob's success rate drops as he adjusts to a new encryption scheme invented by Alice

- After ~12,000 training steps Eve's success rate drops and doesn't recover

- After ~15,000 training steps Alice and Bob have a "perfect" success rate, and Eve's success rate is no better than random chance.
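For a feel of what "no better than random chance" means in numbers, the sketch below simulates an eavesdropper who just flips a coin for every bit. The 16-bit message length, the batch of 4096 messages and the function names are assumptions chosen for illustration.

```python
import random


def count_wrong_bits(plaintext, guess):
    """Number of positions where the guess differs from the plaintext."""
    return sum(1 for p, g in zip(plaintext, guess) if p != g)


def mean_error_of_random_guesser(n_bits=16, n_messages=4096, seed=0):
    """Average wrong bits per message for a guesser that ignores the ciphertext."""
    rng = random.Random(seed)  # seeded so repeated runs give the same number
    total = 0
    for _ in range(n_messages):
        plaintext = [rng.randint(0, 1) for _ in range(n_bits)]
        guess = [rng.randint(0, 1) for _ in range(n_bits)]
        total += count_wrong_bits(plaintext, guess)
    return total / n_messages


print(mean_error_of_random_guesser())
```

A coin-flipping guesser gets about half of the bits wrong on average, so for 16-bit messages the result lands close to 8. An Eve whose error sits at that level has learned essentially nothing from the ciphertext.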

The most interesting part of this system to me is that we don't actually understand how their new encryption technique works at all.

[ Image Source: Pixabay ]

These discoveries are important for building secure control and communication systems for the future, but we may have inadvertently taught the singularity how to privately conspire against us ;)

It also raises a lot of interesting questions if you consider the consequences:

- Could you ever trust a "black box" encryption technique entirely invented by AI?

- Do you feel that AI should have a right to privacy?

- Do you feel that AI should "own" intellectual property?

- Did you know Satoshi's whitepaper was released exactly 8 years ago today?

I'd love to hear your thoughts. I'm sure this won't be the last experiment of this nature.

Great post, thanks a bunch for sharing all this info with us all. Namaste :)

Hi ausbitbank!

Please allow @steemitfaucet to be linked with your post?

THANK YOU VERY MUCH

Excellent post! Resteemed, Followed and UPVOTED.

Thanks for the resteem! Feel free to link it anywhere :)

nice post

resteemed

Thanks :)

Wow, this is deep and requires too much thought for a midnight response! I am going to bookmark this and give it some thought.

Do you feel that AI should have a right to privacy?

I don't think so, because I wouldn't consider Bob, Alice and Eve to be sentient beings. In this politically correct age I wouldn't be surprised if someone were to call me discriminatory, but I'm still going to say that for me the "alive or not" notion is important when considering something's (see, even our language is not adapted for this way of thinking) freedom and independence (from its human creator).

Maybe if we were talking about bio-neural circuitry I would be open to reconsider my position. ;-)

This post has been linked to from another place on Steem.

Advanced Steem Metrics Report for 31st October 2016 by @ontofractal

Resteemed: ( Fresh papers for 31/10/16 - AI discovers new encryption techniques ) and receive 0.06 STEEM by @steemitfaucet

This post has also been linked to from Reddit.

Fresh papers for 30/09/16 - AI discovers new encryption techniques in /r/steemit
