Yesterday, @cautilus posted a guide for project selection and earnings estimation. The guide is excellent and walks through how to use the host data from the various whitelisted projects to compare the profitability of running different projects. In the comments on Reddit it was mentioned that one of the things the Gridcoin project is missing is an automatic way to determine which project is the most profitable to run. Obviously this is a tall order, given that users constantly switch between projects and that some hardware is better at certain tasks.

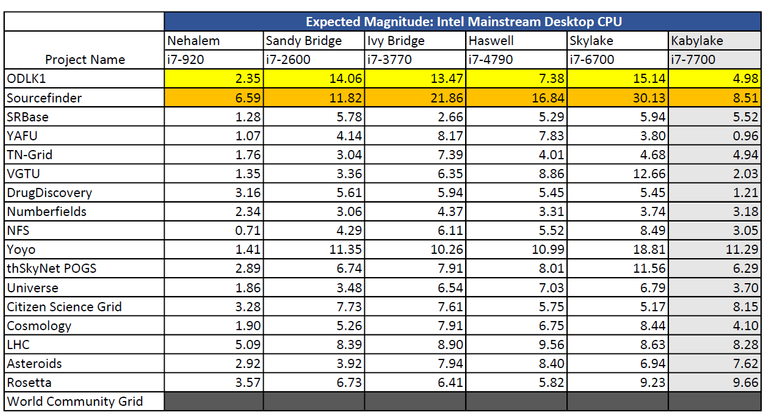

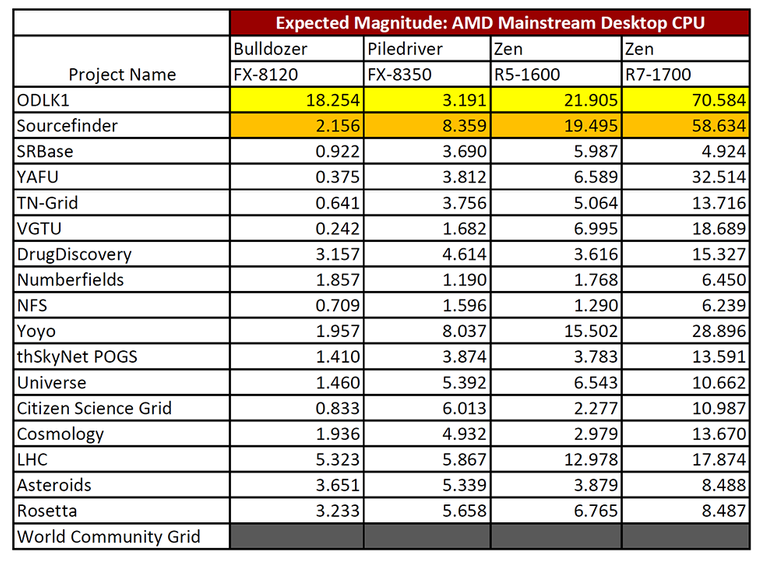

I think that one way we could address this is to automatically generate a table every superblock which estimates the Mag for some common CPU and GPU architectures on the whitelisted projects. If we make the table available (maybe on Gridcoinstats) it could answer a lot of the questions that come up on the Reddit page.

Methodology

Instead of just making the suggestion, I figured I should get the ball rolling and wrote up a script to search the /stats/host.gz files for the whitelist projects. The script searches each hosts file for the top 5 computers with the given processor type and takes the average of their Rac. This average Rac is then divided by the team Rac and multiplied by an estimate of the total magnitude allocated to a project.
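The calculation described above can be sketched roughly as follows. This is not the actual script, just a minimal illustration. It assumes the decompressed host file is XML with one `<host>` element per machine carrying `<p_model>` and `<expavg_credit>` (Rac) children; the sample data, the team Rac, and the project magnitude are made-up placeholders.

```python
import xml.etree.ElementTree as ET

# Tiny stand-in for a decompressed host export; all values are invented.
SAMPLE_HOSTS_XML = """<hosts>
  <host><p_model>Intel(R) Core(TM) i7-6700 CPU @ 3.40GHz</p_model><expavg_credit>4000</expavg_credit></host>
  <host><p_model>Intel(R) Core(TM) i7-6700 CPU @ 3.40GHz</p_model><expavg_credit>3000</expavg_credit></host>
  <host><p_model>AMD Ryzen 7 1700 Eight-Core Processor</p_model><expavg_credit>9000</expavg_credit></host>
  <host><p_model>Intel(R) Core(TM) i7-6700 CPU @ 3.40GHz</p_model><expavg_credit>2000</expavg_credit></host>
</hosts>"""


def top_rac_average(hosts_xml, model_substring, top_n=5):
    """Average Rac of the top_n hosts whose p_model contains model_substring.

    Returns (average, hosts_used); the average is 0.0 when nothing matches.
    """
    root = ET.fromstring(hosts_xml)
    racs = []
    for host in root.iter("host"):
        model = host.findtext("p_model", default="")
        if model_substring.lower() in model.lower():
            racs.append(float(host.findtext("expavg_credit", default="0")))
    best = sorted(racs, reverse=True)[:top_n]
    if not best:
        return 0.0, 0
    return sum(best) / len(best), len(best)


def estimate_magnitude(avg_rac, team_rac, project_magnitude):
    """Share of the team Rac, scaled by the magnitude allocated to the project."""
    return avg_rac / team_rac * project_magnitude


avg_rac, used = top_rac_average(SAMPLE_HOSTS_XML, "i7-6700")
# team_rac and project_magnitude are placeholders, not real figures.
mag = estimate_magnitude(avg_rac, team_rac=1_500_000, project_magnitude=4200)
print(f"{used} hosts, average Rac {avg_rac:.0f}, estimated magnitude {mag:.2f}")
```

Returning the number of hosts that went into the average makes it easy to flag results that rest on fewer than five machines.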

Obviously this method makes some assumptions and can give erroneous results if there are too few consistent participants of a project with the desired processor type. It is also susceptible to people who stockpile tasks and submit them all at once. If anyone has suggestions for improvements to avoid these issues, please let me know. Also, does anyone know the true average for the total magnitude allocated to a project? I took some averages from Gridcoinstats and got values ranging from 4000 to 4400.

Results

All of that said, it’s time for some CPU performance data!

ODLK1 is a new project so there is low competition for now.

Sourcefinder is frequently out of work units.

World Community Grid does not make its host file available.

One thing to note with the above chart is that there are not that many people using i7-7700 processors on most of the smaller projects. If there are fewer than five users, the Rac reported by the script drops off considerably.

Hopefully these tables are interesting and can be useful to some of the new users. I wrote up the script relatively quickly so I’m not going to make it publicly available until I fix some of the more glaring issues with it. I’ll also work one of these up for the GPU projects. If anything looks significantly off based on your own hardware let me know so that I can make improvements.

One source of error might be that some people do not use all threads or the full CPU time and therefore reach a lower RAC than their CPU could achieve in theory. I also find the discrepancy between Intel and AMD a little suspicious.

But it's great that you started off with this. It is important information for new users and also for everyone who tries to optimise their system. I just started an experiment of running almost all CPU projects on my Threadripper 1950X (32 threads) to get some estimate of the relative earnings for all the projects. link to the steemit article.

Yeah, the thing about only running a few threads is an issue. Additionally, the slow decay of Rac means that there is a lot of data that has to be ignored from people who have switched projects.
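One possible way to deal with the stale Rac, as a rough sketch only: BOINC's Rac has a half-life of one week, so a host's stored value can be decayed forward to the present, and hosts that have not been updated recently can be dropped. This assumes `expavg_time` in the host file is a Unix timestamp of the last Rac update and that the project has not already decayed idle hosts in its export. The function names and the three-day cutoff are hypothetical.

```python
RAC_HALF_LIFE_SECONDS = 7 * 24 * 3600  # BOINC halves Rac for every idle week


def decayed_rac(expavg_credit, expavg_time, now):
    """Rac the host would show at `now` if it earned nothing after expavg_time."""
    elapsed = max(0.0, now - expavg_time)
    return expavg_credit * 0.5 ** (elapsed / RAC_HALF_LIFE_SECONDS)


def is_active(expavg_time, now, max_idle_days=3):
    """True if the host's Rac was updated within the last max_idle_days days."""
    return (now - expavg_time) <= max_idle_days * 86400


# Invented example: a host with Rac 1000 that went idle two weeks ago.
now = 14 * 86400
print(decayed_rac(1000.0, 0, now))  # two half-lives have passed
print(is_active(0, now))
```

Filtering on `is_active` before picking the top five would keep machines that have moved to another project from inflating the average.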

Which project in particular are you looking at for the Intel vs AMD results?

Well, just a 1700 doing 14x more mag than the 7700 seems a bit too far off, unless there is some insane twist in the ODLK project that favours AMD by that much.

Oh, that's because ODLK has only been on the whitelist for a couple of days. Someone could have switched their machine over early and skewed the results. That's why it is highlighted in yellow. The Sourcefinder results are also suspect, because they can be manipulated since the work units are limited.

Additionally there is limited data for the 7700 which is hurting its statistics. They should perform at least as well as the 6700 in all projects.

Okay, now it makes more sense the longer I look at it. The difference can also be due to the higher thread count of the AMD CPUs.

For my project, I would like to read out the date and the cpu-time I used for a task from each project. Do you have an idea on how I could extract that data from the project homepages?

Assuming you are solo mining:

Go to your account page, then to hosts, then find the computer you are looking for based on its configuration and click on tasks. This will bring up a table of all tasks you have been sent, as well as their status and how long they took to complete.

If you are in the pool, you need to look up the pool's stats and find your computer in the list; there will be an option to view its tasks.

You can also read the average time to complete a task from the /stats/host.gz file.
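For the host file route, a minimal sketch of reading the file without unpacking it by hand might look like this. It assumes the file is gzip-compressed XML with `<host>` elements that carry `<id>`, `<ncpus>` and `<avg_turnaround>` (in seconds) children; the sample record is invented. As far as I understand, `avg_turnaround` is the time between a task being sent and reported back, so it is only a proxy for CPU time per task.

```python
import gzip
import io
import xml.etree.ElementTree as ET


def iter_hosts(fileobj):
    """Yield one dict (child tag -> text) per <host>, streaming the gzip file."""
    with gzip.open(fileobj, "rb") as xml_stream:
        for _event, elem in ET.iterparse(xml_stream, events=("end",)):
            if elem.tag == "host":
                yield {child.tag: (child.text or "").strip() for child in elem}
                elem.clear()  # drop the parsed children to keep memory use low


# Invented single-host sample standing in for a downloaded host.gz.
sample = gzip.compress(
    b"<hosts><host><id>1</id>"
    b"<p_model>AMD Ryzen Threadripper 1950X 16-Core Processor</p_model>"
    b"<ncpus>32</ncpus><avg_turnaround>86400.0</avg_turnaround>"
    b"</host></hosts>"
)

for host in iter_hosts(io.BytesIO(sample)):
    hours = float(host["avg_turnaround"]) / 3600
    print(host["id"], host["ncpus"], f"{hours:.1f} h average turnaround")
```

With a real download, an opened file (`open("host.gz", "rb")`) can be passed in place of the `io.BytesIO` sample.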

Yeah, I know that I could do it manually. But my CPU runs 32 tasks at once, so somewhere between 200 and 1000 tasks a day. Typing all of that into a txt file would be pretty much impossible.

A really great idea! Would be very very cool if you'd set up a homepage for this with some data to explore :)

I don't think we can get much out of these statistics, too many variables.

As already mentioned, you don't know how many threads are used or how much CPU time is allowed, nor do you know whether overclocking is in use and by how much, or even underclocking for better performance per watt.

As an example, some time ago I overclocked an Intel Core2Quad from 2.4 to 3.6 GHz (+50%!) on air without any issues (sure, power consumption went up far more than performance did).

In its entire lifetime, Intel's Core series has not seen a performance increase as large as the one shown; per core, per thread, per clock it was not even 2x.

Sure, there are some new instructions (newer SSE, AVX, ...) but I don't think BOINC projects use these instructions heavily...

I'm still looking into how to handle outliers in the statistics. The point about overclocking is one of the reasons I chose non-K processor types for Intel. On further thought, I'm reconsidering whether I should have used the X parts from AMD, since they have less overclocking headroom.

The real point of this is to tell you which projects you should look at running beyond the normal advice of picking a project with low team Rac and low total users.

For example, Yoyo has a fairly high team Rac for a CPU project and a decent number of users. However, if you are running a CPU from Ivy Bridge or later (or Zen), it is a good performer across the board. This is probably because the project does not depend much on processor frequency.

The tables also show that if you have a high core count CPU you should switch to Sourcefinder (can claim more work proportional to # of threads), YAFU, or Yoyo, which is not something that a new user is going to know.

It would be very interesting if you could do something similar with ARM-based SBCs like Raspberry Pi or Odroid, because these are limited to the few projects that support them.