After 6 days of cleaning the server, moving files and replaying, techcoderx.com is now running on HAF-enabled hived v1.26 along with HAfAH for account history APIs. Both are used to sync Hivemind on the latest stable release, which is currently not HAF-based.

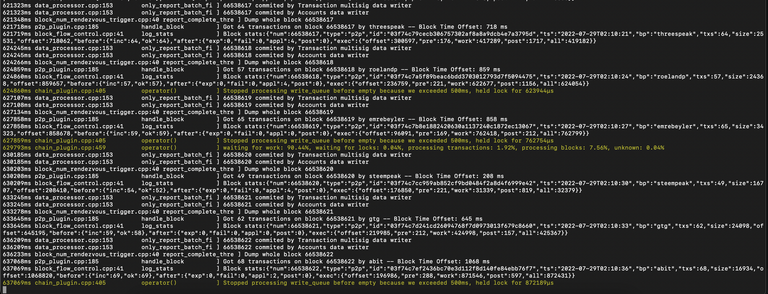

sql_serializer plugin in action

Many have probably asked how to run a node like this. As I'm writing this, the docs are all over the place, which makes them hard to follow. So I will be documenting the whole process I went through here for anyone to follow.

Certain parts of the guide may change over time as it isn't a final release yet, but most of this should be valid for the next 7 days from the publication of this post (most Hive posts are rarely viewed past the post payout anyways).

2024 update

Most of this guide is out of date except the hardware requirements and conclusion, which remain largely the same. Most HAF deployments today should use the Docker Compose setup that runs all the required components for a fully featured HAF node.

The guide below describes the manual approach and is kept mostly for reference.

Hardware requirements

- At least 6 core CPU, preferably scores >1200 single-core and >6000 multi-core on Geekbench 5

- 64GB RAM, raised from the original 32GB (128GB or more works better due to ZFS caching)

- 2x 2TB NVMe SSD in RAID 0 for Postgres DB. I will explain why later.

- 1TB SATA/NVMe SSD as boot drive and to store block_log file

- No GPU is required

- 20Mbps bandwidth. More is required for a high-traffic node.

If running on residential internet connection behind CGNAT, a nearby VPS is required for a public node. Anything $5/month or less should work as long as it has sufficient bandwidth. Alternatively, glorified reverse proxies (i.e. Cloudflare Tunnel) could also work if you accept the risk.

Dependency setup

For this guide, we will be using Ubuntu Server 22.04 LTS which is what I used on techcoderx.com node.

Essentials

First of all, install some essential packages (including ZFS):

sudo apt-get update && sudo apt-get upgrade

sudo apt-get install wget curl iotop iftop htop jq zfsutils-linux

hived and Postgresql

sudo apt-get install -y \
autoconf \
automake \
cmake \
g++ \
git \
zlib1g-dev \
libbz2-dev \
libsnappy-dev \
libssl-dev \
libtool \
ninja-build \
pkg-config \
doxygen \
libncurses5-dev \
libreadline-dev \
perl \
python3 \
python3-jinja2 \
libboost-all-dev \
libpqxx-dev \
postgresql \
postgresql-server-dev-14

ZFS pool setup

Running the node without compression will fill up the drives very quickly and you will need a lot more NVMe storage, so it is much more practical to place the postgresql database in a ZFS pool with lz4 compression enabled.

Ideally ZFS pools would consist of at least 2 drives, hence the dual NVMe requirement listed above. ZFS will also handle striping the drives to mimic a RAID 0 setup.

A single-drive ZFS pool is totally possible and it is currently what I'm using, but the performance of a single PCIe 3.0 NVMe SSD isn't enough to sync both hived and Hivemind at reasonable speeds (but can still catch up with the network). PCIe Gen 4 might work better, but I would say it is more cost effective over time to go for a RAID 0 setup.

If you only have one 2TB NVMe SSD like me, you can probably get away by using a very recent Hivemind dump (no more than 1 month old) for the next 6-12 months.

I have used this tutorial to set this up, but to summarize in a few commands:

List all disk identifiers

To create any ZFS pools, you need to know the identifiers for the drives to be used first.

$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

loop0 7:0 0 62M 1 loop /snap/core20/1581

loop1 7:1 0 62M 1 loop /snap/core20/1587

loop2 7:2 0 79.9M 1 loop /snap/lxd/22923

loop3 7:3 0 44.7M 1 loop /snap/snapd/15534

loop4 7:4 0 47M 1 loop /snap/snapd/16292

nvme0n1 259:0 0 465.8G 0 disk

├─nvme0n1p1 259:1 0 1G 0 part /boot/efi

└─nvme0n1p2 259:2 0 464.7G 0 part /

nvme1n1 259:3 0 1.9T 0 disk

├─nvme1n1p1 259:4 0 1.9T 0 part

└─nvme1n1p9 259:5 0 8M 0 part

In my example above, I'm using /dev/nvme1n1 for my single drive ZFS pool. Yours may vary, take note of the identifiers of each drive to be used in the pool.

Create pool

Command:

sudo zpool create -f <pool_name> <disk_id1> <disk_id2> ...

Example for single-drive pool:

sudo zpool create -f haf /dev/nvme1n1

Example for two-drive pool:

sudo zpool create -f haf /dev/nvme1n1 /dev/nvme2n1

Get pool status

sudo zpool status

Enable compression

sudo zfs set compression=on <pool_name>

Set ARC RAM usage limit

Only needed for 32GB RAM systems. In this command, we are setting it to 8GB.

echo "options zfs zfs_arc_max=8589934592" | sudo tee -a /etc/modprobe.d/zfs.conf
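The value 8589934592 is simply 8 GiB expressed in bytes. As a minimal sketch, the helper below (the function names are made up for illustration and are not part of ZFS) computes the option line for a different limit:

```shell
#!/bin/sh
# Hypothetical helper (not part of ZFS): convert a size in GiB to bytes,
# which is the unit zfs_arc_max expects.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Print the modprobe option line for a given ARC limit in GiB.
arc_max_option() {
    echo "options zfs zfs_arc_max=$(gib_to_bytes "$1")"
}

# 8 GiB reproduces the line used in the command above.
arc_max_option 8
```

The modprobe option is read when the zfs kernel module loads, so it normally takes effect after a reboot.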

You should now have the pool mounted at /<pool_name>. For more useful commands, refer to my hiverpc-resources notes on ZFS.

Postgresql setup

By default, the postgresql database is stored in the boot drive. We want it to be in the ZFS pool created previously.

Create the required directory with appropriate permissions (assuming haf is the pool name):

cd /haf

mkdir -p db/14/main

sudo chown -R postgres:postgres db

sudo chmod -R 700 db

Set the data directory in /etc/postgresql/14/main/postgresql.conf:

data_directory = '/haf/db/14/main'

And tune the database as such in the same config file (below values are for 64GB RAM, scale according to what you have):

effective_cache_size = 48GB

shared_buffers = 16GB

work_mem = 512MB

synchronous_commit = off

checkpoint_timeout = 20min

max_wal_size = 4GB
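To scale these values to a different amount of RAM, one option is to keep the same ratios as the 64GB sample. The sketch below assumes those ratios (1/4 of RAM for shared_buffers, 3/4 for effective_cache_size, 8MB of work_mem per GB of RAM). They are read off the sample above and are not an official recommendation, so treat the output as a starting point. The function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: scale the 64GB sample values to another RAM size.
# Assumed ratios, derived from the sample config only:
#   effective_cache_size = 3/4 of RAM
#   shared_buffers       = 1/4 of RAM
#   work_mem             = 8MB per GB of RAM
pg_tuning_for_ram() {
    ram_gb=$1
    echo "effective_cache_size = $(( ram_gb * 3 / 4 ))GB"
    echo "shared_buffers = $(( ram_gb / 4 ))GB"
    echo "work_mem = $(( ram_gb * 8 ))MB"
}

# 64 reproduces the sample values above.
pg_tuning_for_ram 64
```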

Restart postgresql to reflect the changes.

sudo service postgresql restart

Compile hived

git clone https://gitlab.syncad.com/hive/haf

cd haf

git submodule update --init --recursive

cd hive

git checkout <latest_develop_commit_hash>

cd ..

mkdir build

cd build

cmake -DCMAKE_BUILD_TYPE=Release -GNinja ..

ninja

sudo ninja install

sudo ninja install installs the required extensions for sql_serializer plugin to work with postgresql. To install hived and cli_wallet, move the executables found in hive/programs/hived/hived and hive/programs/cli_wallet/cli_wallet respectively to /usr/bin.

Setup HAF db

Enter the psql shell as postgres user:

sudo -i -u postgres psql

Create the db with permissions and extensions:

-- Roles

CREATE ROLE hived_group WITH NOLOGIN;

CREATE ROLE hive_applications_group WITH NOLOGIN;

CREATE ROLE hived LOGIN PASSWORD 'hivedpass' INHERIT IN ROLE hived_group;

CREATE ROLE application LOGIN PASSWORD 'applicationpass' INHERIT IN ROLE hive_applications_group;

CREATE ROLE haf_app_admin WITH LOGIN PASSWORD 'hafapppass' CREATEROLE INHERIT IN ROLE hive_applications_group;

-- Set password if needed

ALTER ROLE role_name WITH PASSWORD 'rolepass';

-- Database used by hived

CREATE DATABASE block_log;

-- Use database

\c block_log

-- sql_serializer plugin on db

CREATE EXTENSION hive_fork_manager CASCADE;

Authentication

By default, Postgresql allows only peer authentication. You may enable password authentication by adding the following line in /etc/postgresql/14/main/pg_hba.conf:

local all all peer # after this line

local all all md5 # add this

For more details, refer to the documentation here.

Block Log

As of writing this, there isn't a compressed block_log file to download, so if you don't have one already, you can download an up-to-date uncompressed block_log:

Update (17 Oct 2022): The compressed block_log is now available for download.

wget -c https://gtg.openhive.network/get/blockchain/compressed/block_log

Compress block_log

To generate a compressed block_log from an uncompressed version, use the compress_block_log tool found in hive/programs/util/compress_block_log in the build directory.

./compress_block_log -j $(nproc) -i /src/uncompressed/blocklog/folder -o /dest/blocklog/folder

Create ramdisk

98% of the time, the replay is bottlenecked by disk I/O if the shared_memory.bin file is located on disk. Moving it to a ramdisk reduces SSD wear and gives significantly better performance, hence the memory requirements above. Replace 25G with the desired ramdisk size.

cd ~

mkdir ramdisk

sudo mount -t tmpfs -o rw,size=25G tmpfs ~/ramdisk
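The mount above does not survive a reboot. If you want the ramdisk to be recreated automatically at boot, one optional approach is an /etc/fstab entry. The sketch below only prints such an entry. The function name and the /home/username/ramdisk path are placeholders for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab entry for a tmpfs ramdisk.
# Arguments: mount point, size (e.g. 25G).
ramdisk_fstab_line() {
    printf 'tmpfs %s tmpfs rw,size=%s 0 0\n' "$1" "$2"
}

# Placeholder path; use the absolute path of your own ramdisk folder.
ramdisk_fstab_line /home/username/ramdisk 25G
```

After reviewing the printed line, it can be appended to /etc/fstab with sudo tee -a. Keep in mind that the contents of a tmpfs mount are lost on reboot either way, so only the empty mount is restored.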

Configure hived

Generate a hived config file if not already:

hived --dump-config

By default, the data directory is ~/.hived but you may also store it in another directory if you wish.

mkdir -p ~/.hived/blockchain

Move the resulting compressed block_log and block_log.artifacts files to the blockchain folder of the data directory.

Then edit the config.ini file in the data directory as follows (find each key and modify its value, or add the key if it is not there):

Plugins

# Basic

plugin = witness webserver p2p json_rpc database_api network_broadcast_api condenser_api block_api rc_api

# Reputation

plugin = reputation reputation_api

# Get accounts/witness

plugin = account_by_key account_by_key_api

# Internal market

plugin = market_history market_history_api

# Transaction status

plugin = transaction_status transaction_status_api

# State snapshot

plugin = state_snapshot

# SQL serializer

plugin = sql_serializer

# Wallet bridge API

plugin = wallet_bridge_api

SQL serializer

psql-url = dbname=block_log user=postgres password=pass hostaddr=127.0.0.1 port=5432

psql-index-threshold = 1000000

psql-operations-threads-number = 5

psql-transactions-threads-number = 2

psql-account-operations-threads-number = 2

psql-enable-account-operations-dump = true

psql-force-open-inconsistent = false

psql-livesync-threshold = 10000

See sql_serializer plugin documentation on details of each parameter and configuring relevant filters if desired.

Shared file directory

Specify path to folder containing shared_memory.bin:

shared-file-dir = "/home/<username>/ramdisk"

Shared file size

At least 24GB is needed (current usage is 22GB). This value will increase over time.

shared-file-size = 24G

Endpoints

Configure the IP and port of the endpoints to be made available for seed and API calls.

p2p-endpoint = 0.0.0.0:2001

webserver-http-endpoint = 0.0.0.0:8091

Replay!

Finally we are ready to replay the blockchain. Start a screen or tmux session and run the following:

hived --replay-blockchain

If your hived data directory is not the default ~/.hived, specify it in the above command with -d /path/to/data/dir.

For HAF nodes, this process is split into 5 stages:

- Normal replay. hived reads the blockchain and rebuilds chain state.

- Initial network sync, which fetches the latest blocks so far from the network.

- Indexes and foreign keys creation.

- Normal network sync to catch up after stage 3 above.

- Live sync, where your node is fully synced. You should see something like the first screenshot of this post above in your logs at this point.
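A rough way to tell which side of live sync a node is on is to compare the head block time with the current time. Hive produces a block every 3 seconds, so the gap converts directly into a block count. The helper below is a sketch with a hypothetical function name. It takes both times as Unix epoch seconds.

```shell
#!/bin/sh
# Hive produces one block every 3 seconds.
BLOCK_INTERVAL=3

# Hypothetical helper: estimate how many blocks a node is behind.
# Arguments: head block time, current time (both Unix epoch seconds).
blocks_behind() {
    echo $(( ($2 - $1) / BLOCK_INTERVAL ))
}

# Example: a head block that is 30 seconds old is about 10 blocks behind.
blocks_behind 1659096000 1659096030
```

The head block time is the time field returned by database_api.get_dynamic_global_properties. With GNU date it can be converted using date -u -d followed by the timestamp and +%s.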

This entire process took under 48 hours for me to replay 66.4M Hive blocks, ~5-10% faster than the previous version.

HAfAH

Setup required permissions

Run in psql console in block_log database for the setup scripts to run successfully:

GRANT CREATE ON DATABASE block_log TO haf_app_admin;

Installation

git clone https://gitlab.syncad.com/hive/HAfAH hafah

cd hafah

git submodule update --init

./run.sh setup-postgrest

./run.sh setup <server_port> postgresql://haf_app_admin:<password>@127.0.0.1:5432/block_log

Notice we are not cloning the submodules recursively as the haf repo without its submodules is the only dependency for the setup scripts.

Start HAfAH server

Replace <server_port> with the port you wish to use. I prefer to use the next one available, that is 8093.

./run.sh start <server_port> postgresql://haf_app_admin:<password>@127.0.0.1:5432/block_log

Test API call

To check that it works, try out an API call to the HAfAH server.

curl -s -H "Content-Type: application/json" --data '{"jsonrpc":"2.0", "method":"condenser_api.get_ops_in_block", "params":[60000000,false], "id":1}' http://localhost:<server_port> | jq
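If you plan to try several methods, building the request body with a small function avoids quoting mistakes. This is a minimal sketch. The function name is hypothetical and the params argument must already be valid JSON.

```shell
#!/bin/sh
# Hypothetical helper: build a JSON-RPC 2.0 request body.
# Arguments: method name, params (already valid JSON), request id.
jsonrpc_payload() {
    printf '{"jsonrpc":"2.0","method":"%s","params":%s,"id":%s}\n' "$1" "$2" "$3"
}

# Same request as the curl example above.
jsonrpc_payload condenser_api.get_ops_in_block '[60000000,false]' 1
```

The output can be passed to curl through --data "$(jsonrpc_payload ...)" against the HAfAH server URL.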

Hivemind

Valid as of v1.27.3 HAF-based Hivemind release.

Setup hivemind db

Run in psql shell in block_log database:

\c block_log

CREATE EXTENSION IF NOT EXISTS intarray;

CREATE ROLE hivemind_app WITH LOGIN PASSWORD 'hivemindpass' CREATEROLE INHERIT IN ROLE hive_applications_group;

GRANT CREATE ON DATABASE block_log TO hivemind_app;

Installation

python3 -m pip install --upgrade pip setuptools wheel

git clone --recurse-submodules https://gitlab.syncad.com/hive/hivemind.git

cd hivemind

python3 -m pip install --no-cache-dir --verbose --user . 2>&1 | tee pip_install.log

Configure

At the end of the ~/.bashrc file, define the env vars:

export DATABASE_URL=postgresql://hivemind_app:hivemindpass@localhost:5432/block_log

export HTTP_SERVER_PORT=8092

Where hivemind_app and hivemindpass are username and passwords set during role creation above.
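Changes to ~/.bashrc only apply to new shells (or after running source ~/.bashrc), so it can help to confirm the variables are visible before starting a sync. The check below is a sketch with a hypothetical function name. It prints the name of every listed variable that is unset or empty in the current environment.

```shell
#!/bin/sh
# Hypothetical helper: print the names of variables that are not set
# (or are empty) in the environment. Only exported variables are visible
# to printenv.
missing_env() {
    for name in "$@"; do
        if [ -z "$(printenv "$name")" ]; then
            echo "$name"
        fi
    done
}

# Prints nothing when both variables are exported.
missing_env DATABASE_URL HTTP_SERVER_PORT
```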

Restore dump

Update (4 Jan 2023): As of now there is no HAF Hivemind dump available, so this might not be relevant as you will have to sync it from scratch.

Download a copy of the dump here, and restore it:

pg_restore -j $(nproc) -d hive hivemind.dump

Run the upgrade script after restoring:

cd hive/db/sql_scripts

./db_upgrade.sh <username> hive

Sync the db

In screen or tmux session:

hive sync

On my single-drive ZFS pool, disk I/O was the bottleneck 90% of the time. It took over 2 hours to sync 6 days of blocks, and I left it running overnight to build the indexes. hived struggled a bit but still managed to stay in sync.

Start server

In screen or tmux session:

hive server

Reverse proxy

At this point, you should have the required pieces to serve a public Hive node. To put them together into a single endpoint, we need a reverse proxy such as jussi, rpc-proxy or drone.

If your node is on residential internet behind CGNAT, set up the VPN and forward the required ports (hived and HAF app servers if running the reverse proxy on the VPN server, the reverse proxy port otherwise) using iptables rules, as such:

iptables -t nat -A PREROUTING -p tcp --dport <port> -j DNAT --to-destination 10.8.0.2:<port>

Replace <port> with the port to be forwarded, and 10.8.0.2 with the actual Wireguard peer to forward the port from. Repeat this command for every port to be forwarded.
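Since the rule has to be repeated per port, a loop saves some typing. The sketch below is a dry run: it only prints the commands so they can be reviewed first. The function name is hypothetical, and the example ports are the p2p and HTTP ports from the hived config above.

```shell
#!/bin/sh
# Hypothetical helper: print one DNAT rule per port (dry run, nothing is applied).
# Arguments: Wireguard peer IP, then one or more ports.
dnat_rules() {
    peer=$1
    shift
    for port in "$@"; do
        echo "iptables -t nat -A PREROUTING -p tcp --dport $port -j DNAT --to-destination $peer:$port"
    done
}

# Example: forward the hived p2p (2001) and HTTP (8091) ports.
dnat_rules 10.8.0.2 2001 8091
```

Once the printed rules look right, they can be run as root, for example by piping the output to sudo sh.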

You may also have to set up the ufw rules to allow traffic to/from the Wireguard network interface:

sudo ufw route allow in on wg0 out on eth0

sudo ufw route allow in on eth0 out on wg0

Replace eth0 with the host network interface and wg0 with the Wireguard network interface (of the peer on the proxy server).

For those who need a jussi config, you can find them here. Replace 10.8.0.2 with the actual URL to the respective services.

The easiest way to run jussi is through docker:

git clone https://gitlab.syncad.com/hive/jussi

cd jussi

docker build -t="$USER/jussi:$(git rev-parse --abbrev-ref HEAD)" .

docker run -itp 8094:8080 -d --name jussi --env JUSSI_UPSTREAM_CONFIG_FILE=/app/config.json -v /home/username/jussi/jussi_config.json:/app/config.json "username/jussi:master"

Replace /home/username/jussi/jussi_config.json with the path to your jussi config file, and username/jussi:master with the image tag produced by the build command above.

At this point, you should now have a Hive API node accessible at port 8094 which can be put behind nginx and be accessible by the public.

Conclusion

As of now, it is good to see that running such a Hive node is totally possible with mainstream hardware (e.g. my barebones B350 motherboard). The disk requirements may look very demanding on their own, but they are nothing compared to the node requirements of popular blockchains such as BSC or SQLana.

Even though we now store the data in compressed format, the actual disk usage after compression remains the same at 1.9TB total compared to the previous node setup. Details below.

As for RAM usage, hived consumes less memory than before, as seen in the shared_memory.bin file, which is 2GB smaller.

Server resource statistics

These stats are as of 29 July 2022. For the latest stats, please refer to the latest witness update logs.

hived (v1.25, all plugins, as of block 66365717)

block_log file size: 620 GB

shared_memory.bin file size: 22 GB

account-history-rocksdb-storage folder size: 755 GB

blockchain folder size: 1,398 GB

hived (v1.26, d821f1aa, all plugins)

block_log file size (compressed): 317 GB

block_log.artifacts file size: 1.5 GB

shared_memory.bin file size: 20 GB

blockchain folder size: 338 GB

HAF db

Output of SELECT pg_size_pretty( pg_database_size('block_log') );

Database size: 2.9 TB

Compressed: 1.2 TB

hivemind (v1.25.3, a68d8dd4)

Output of SELECT pg_size_pretty( pg_database_size('hive') );

Database size: 528 GB

Compressed: 204 GB

RAM usage: 4.2 GB
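The figures above translate into compression ratios of roughly 2.4x for the HAF db and 2.6x for the Hivemind db under ZFS lz4, and just under 2x for the block_log (which is compressed by hived itself, not by ZFS). A small sketch of the arithmetic, with a hypothetical function name:

```shell
#!/bin/sh
# Hypothetical helper: compression ratio = uncompressed size / compressed size,
# rounded to two decimals. Both sizes must use the same unit.
compress_ratio() {
    awk -v raw="$1" -v packed="$2" 'BEGIN { printf "%.2f\n", raw / packed }'
}

compress_ratio 2.9 1.2    # HAF db, sizes in TB
compress_ratio 528 204    # hivemind db, sizes in GB
compress_ratio 620 317    # block_log (v1.25 vs v1.26 compressed), sizes in GB
```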

Changelog

10 Oct 2022: Added sample Postgresql config

17 Oct 2022: Added download link to compressed block_log, update RAM requirements, update Hivemind dump restore instructions

21 Oct 2022: Added Postgresql authentication configuration

24 Oct 2022: Remove redundant permissions as per here.

26 Oct 2022: Update hive.db_head_state troubleshooting for Hivemind v1.26.1

3 Nov 2022: Remove references of moving shared_memory.bin back into disk.

2 Jan 2023: Update hived compile to use ninja instead of make.

4 Jan 2023: Update Hivemind setup to be HAF-based.

5 Jan 2023: Include instructions for running Python HAfAH in virtual environment.

7 Jan 2023: Added PostgREST HAfAH setup instructions.

25 Jan 2023: Reorder HAfAH setup instructions such that PostgREST is the first.

24 Dec 2023: Added note on the new Docker Compose deployment, removed Python HAfAH setup instructions and replaced OpenVPN guide link with Wireguard install script link.

I have an easier time learning Greek but I know I need to set something like this up eventually. Great detailed guide.

The big whales should have upvoted this excellent guide.

I guess I didn't have the popularity for them to notice, but this is still my highest paid out post since HF23.

Make them notice this quality, meanwhile there's a ton of shit posts worth hundreds of dollars.
