I Asked ChatGPT: Is it beneficial to train an LLM on other LLMs' prompted responses?

Answer:

Training an LLM on responses from other LLMs can increase data volume and speed up training, but it risks inheriting their errors and biases and reducing originality. Human-generated data is typically more reliable for building robust models.

Expanded answer below in comments:

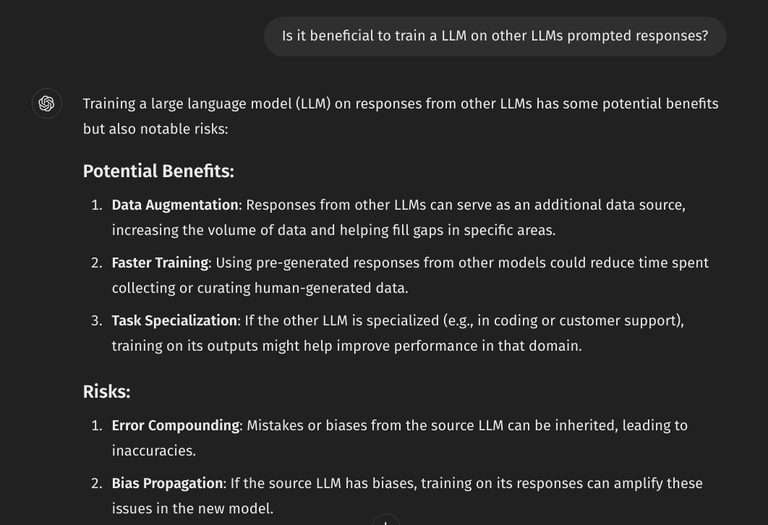

Training a large language model (LLM) on responses from other LLMs has some potential benefits but also notable risks:

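Potential Benefits:

- Increased data volume: other LLMs can generate large amounts of training text quickly.
- Faster training: readily available generated data can speed up the training process.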

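Risks:

- Inherited errors: mistakes in the source model's responses can be learned and repeated.
- Inherited biases: biases in the source model can carry over into the new model.
- Reduced originality: the new model may mimic the source model's outputs rather than produce novel responses.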

Using diverse, high-quality human data is often more effective for producing robust LLMs.