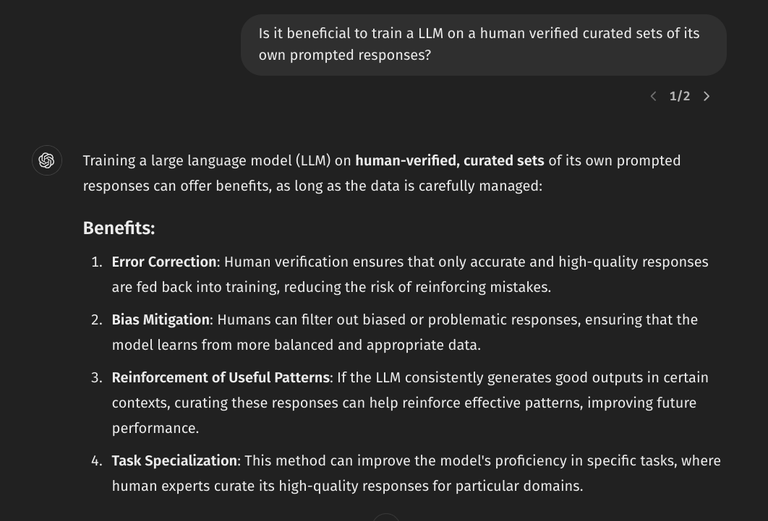

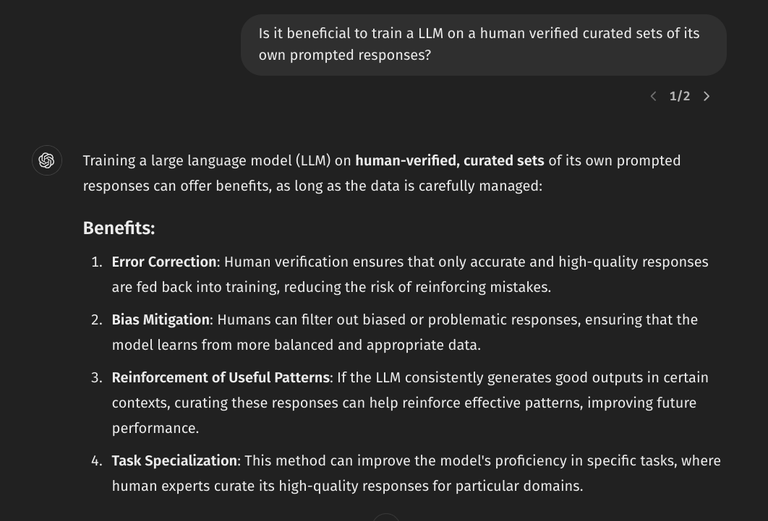

I Asked ChatGPT: Is it beneficial to train a LLM on a human verified curated sets of its own prompted responses?

Answer in the comments!

I Asked ChatGPT: Is it beneficial to train a LLM on a human verified curated sets of its own prompted responses?

Answer in the comments!

Training a large language model (LLM) on human-verified, curated sets of its own prompted responses can offer benefits, as long as the data is carefully managed:

Benefits:

Risks:

When done well, it can enhance the model, but human involvement is key to maintaining quality.