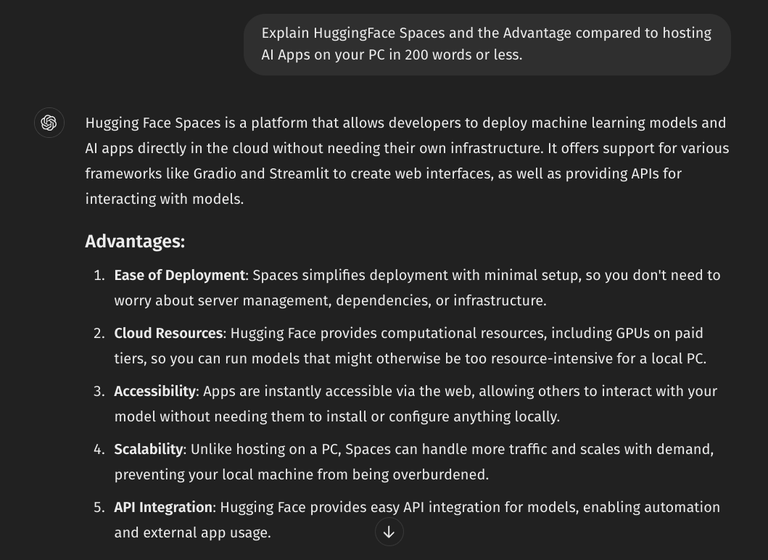

I asked ChatGPT: "Explain Hugging Face Spaces and its advantages compared to hosting AI apps on your PC in 200 words or less."

Answer:

Hugging Face Spaces is a platform that allows developers to deploy machine learning models and AI apps directly in the cloud without needing their own infrastructure. It supports frameworks like Gradio and Streamlit for building web interfaces, and it provides APIs for interacting with models.

Advantages:

Ease of Deployment: Spaces simplifies deployment with minimal setup, so you don't need to worry about server management, dependencies, or infrastructure.

Cloud Resources: Hugging Face provides computational resources, including GPUs on paid tiers, so you can run models that might otherwise be too resource-intensive for a local PC.

Accessibility: Apps are instantly accessible via the web, allowing others to interact with your model without needing them to install or configure anything locally.

Scalability: Unlike hosting on a PC, Spaces can handle more traffic and scale with demand, preventing your local machine from being overburdened.

API Integration: Hugging Face provides easy API integration for models, enabling automation and external app usage.

In contrast, hosting on your PC requires handling resource management, maintenance, and uptime, making cloud solutions like Hugging Face Spaces much more user-friendly.

Is there a charge for this or does Hugging Face do it for free?

What do they get out of it do you think?

Hugging Face is like a GitHub for machine learning. You can fork many LLMs and image/voice-generating AIs there. (Some AI tools come with licenses, though: you have to provide your contact data to Meta if you want to download Llama.)

It comes with different tiers of compute power. The free tier has 2 CPU cores and 16 GB of RAM, which might be enough to run small LLMs. I'm sure they get a LOT of paying customers who want more compute power and RAM, don't you think?
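Whether a "small LLM" fits on a given tier mostly comes down to how much memory the weights take. A rough rule of thumb is parameter count × bytes per parameter. The sketch below only illustrates that arithmetic. It counts the weights alone (no activations, KV cache, runtime or OS overhead), and the 70% headroom factor in `fits_in_ram` is a hypothetical safety margin, not a Hugging Face figure.

```python
# Rough estimate of the RAM needed just to hold an LLM's weights.
# Assumption: memory ~= parameter count * bytes per parameter. This ignores
# activations, the KV cache, the Python runtime and the OS, so real usage is higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,  # full precision
    "fp16": 2.0,  # half precision
    "int8": 1.0,  # 8-bit quantized
    "int4": 0.5,  # 4-bit quantized
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate size of the weights in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_in_ram(num_params: float, dtype: str, ram_gb: float, headroom: float = 0.7) -> bool:
    """True if the weights fit within `headroom` of the available RAM.

    `headroom` is a hypothetical safety fraction left for everything else.
    """
    return weight_memory_gb(num_params, dtype) <= ram_gb * headroom


if __name__ == "__main__":
    # Print weight sizes for a few model sizes and precisions;
    # compare them against the RAM of whichever tier you are considering.
    for params in (0.5e9, 1e9, 7e9):
        for dtype in ("fp32", "fp16", "int4"):
            gb = weight_memory_gb(params, dtype)
            print(f"{params / 1e9:.1f}B params @ {dtype}: ~{gb:.1f} GB of weights")
```

For example, a 7B-parameter model needs roughly 14 GB for its weights alone in fp16 but about 3.5 GB at 4-bit, which is why quantized small models are the usual choice for CPU-only tiers.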

I would imagine so. I'm sure there is a business model they are profiting from.

But it is good that those who know how to set up small models can run them.