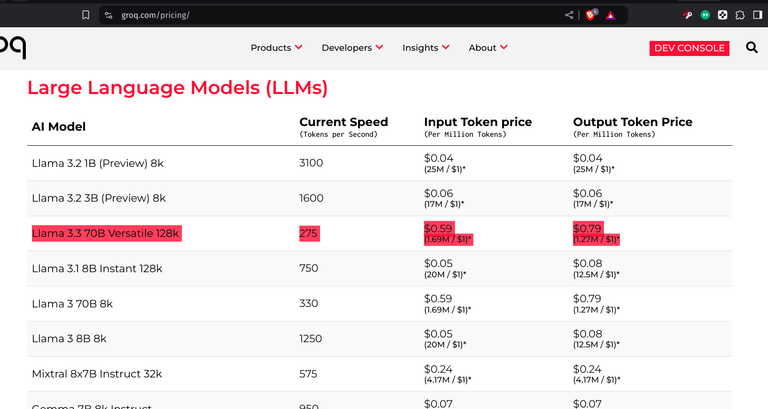

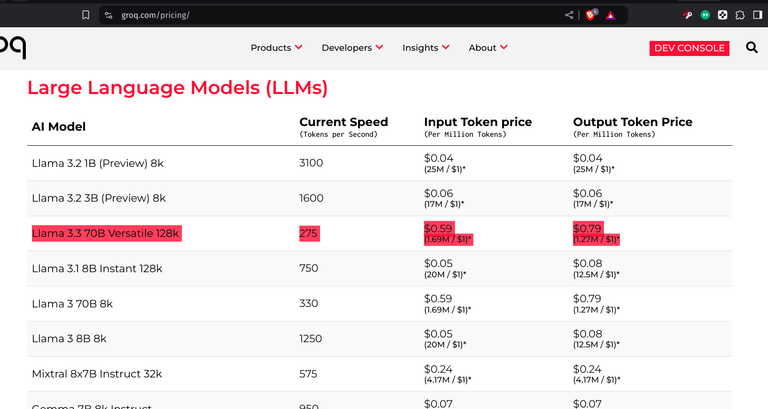

Llama 3.3 Inference Costs

Apparently, Llama 3.3 has already been released, and it's really good! My local PC can't run it, though, so I looked for an inference provider. Looking at Groq's API prices, it's cheaper than I thought it would be.
