The era of "computers on wheels" is already beginning.

Tesla is going to have a very powerful platform in the next few years. There are already 7 million of its vehicles on the road, with another 2 million expected over the next 12 months. That number is likely to keep rising as its factories scale up.

One of the keys to this is the ability to offer a full user experience. Automotive companies did not focus much on this in the past. Sure, screens were added to some higher-end models, along with Internet connectivity. However, that is nothing like having a full-blown system complete with an app store.

This is something Google and Apple mastered, and both companies leveraged their platforms in a huge way.

Now, Tesla is taking its first steps in that direction.

Tesla: Computer On Wheels

Tesla's Fleet API is now available in all regions. This means that approved third-party developers can build applications and deliver them to Tesla vehicles.

What we are seeing is Platform 101: third-party applications add more value for the user base. For the moment, the API is open, but there is no "store" where apps can be uploaded.

That is something that will likely come in the future.

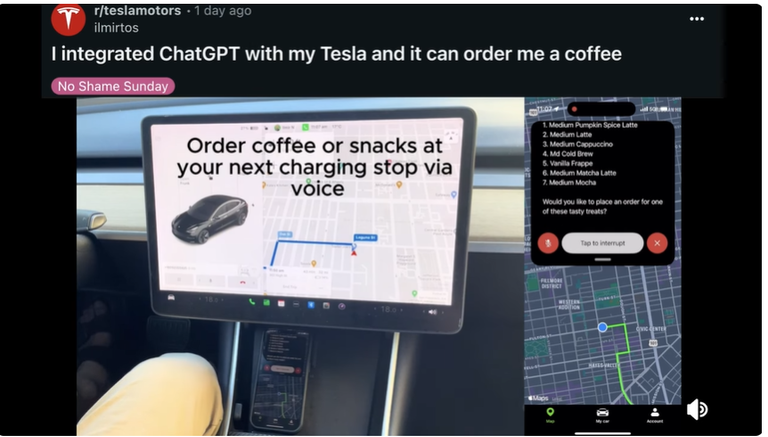

This screenshot, taken from a video, shows someone using ChatGPT connected to his Tesla to order coffee.

The Apple And Google Model

We know how valuable the Apple and Google stores are to those companies. In its most recent earnings report, Apple posted nearly $25 billion in services revenue for the quarter, an annualized rate of roughly $100 billion.

A digital platform becomes more valuable as more is available on it. Having developers all over the world creating applications for the platform is huge.

Tesla will certainly leverage this to a large degree. Whether it becomes a major revenue source, as it is for Apple, remains to be seen. However, if nothing else, third-party developers can create applications that enhance the user experience.

As the network grows, it only becomes more appealing to developers.

Tesla Inference

During the last earnings call, Elon Musk once again raised the concept of inference. On this one, he was ahead of the curve.

Tesla is putting more compute into its vehicles than driving requires. The same is true for the robotaxi and Cybertruck. This means there is extra compute available for inference.

Obviously, this compute is not going to be used for inference while autonomous driving is engaged. That said, when the vehicles are idle, their computers will be available for inference.

For those who follow the world of AI, this is an important area. OpenAI is already admitting it is compute constrained. Sam Altman did not say whether the constraint was on training or inference, but he pointed to it as the reason the company is slow to roll out applications.

Either way, at this point OpenAI, like most other companies, must supply all the inference compute its users consume. Tesla is looking to provide a major hub of compute that will be available in the future.

Posted Using InLeo Alpha

I agree that Elon is building an AI LLM for driving, and its other use cases will be vast. Technology is like an onion: you don't know the next use case until you peel back the layer.
