First, a little bit of history

The internet communication technology has been evolved to the

work of many computers at the same time. In nowadays, with the massive

network acces, and the mount of data transferred as a result, the maintainance of the global conection is impossible whithout thinking in computer clusters working together. Facebook does not have a super computer (under San Francisco ground and protected by the U.S army)

that is responsible for handling all requests from its users. In facts, they have their servers distributed in many parts of the world, and these, through concensus, achieve to look like that super computer

Font

|For the final user, the datacenters make millions of operations in matter of seconds, wihtout its noticing. |

These serves needs to comunicate in a scalable and fault tolerance way.

A funny definition of a distributed system is that one says: You know that you have a distributed system, when you have components that could fail. Fualt tolerance is an important topic in these case. Constantly, the phisical components of many networks (the nodes) changes, becuase of pitfalls or actualizations. In this case, the most secure solution is copy all the information of the network in each computer. But this is really bad. And we have many reasons to argue.

Comes the engineering

In here is where we need to decide wisely how our nodes will have the communication. We define an architecture of a distributed system, as the set of statements or decisions that is going to take each system component that will make to function as a whole. In this post, we are going to talk about the Chord Ring, an arquitecture that allows the maintainance of a distributed hash table (DHT). I'm touch the application topic. This is for other post.

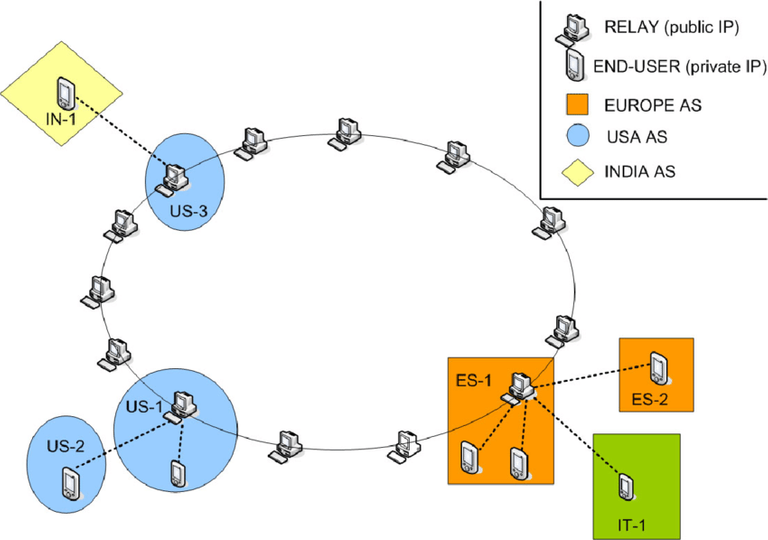

Fuente

Fuente A graphic representation of the Chord ring

A little note. From now and the rest of the post, its necessary a some technical knowledge, like a basic programming course, network knowledge and concurrent programming, also know something about operative systems, about code network interfaces (

sockets,binding,connect), and of course, math. If you want to learn, but you feel that you can't understand, keep going with your reading. Don't be sad if you are new. This is not an easy topic. As STEEM lives, this post will be waiting for you. So you can read it again, or ask me something in the comments.

A hash table is a mathematical function, like these one that teach us in the school. You define a set of values, or keys, and to each key, you asign an especific value, or y, in such a way that we can ask every time to a y for is value, and it allways return the same y after the last assignment that we did. Chord maintains a hash table partitioned between all nodes of the network.

So, we say that the components arquitecture are nodes, labeled with an id, that belongs to the domain of the hash table. Yes, we can ask to id, and id will answer with a y. Aditionally, in my own implementation, all nodes must support the standard network interface (with IP's and all of that).

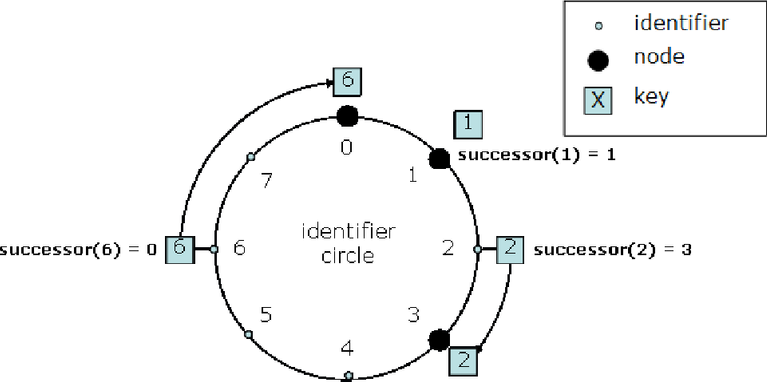

The Chord protocol mantain, in all possible state of the ring, one invariant: for each key, we know succ(key) = x, as the minor id greather than key, that is the id of an active node. In this point, the must suitable decision is that the node with id = x assume the responsability for the value of key in the hash table.

Font

We can see that

succ(6) = 0 Let's work

The goal of the implementation is make a balance between scalability and fault tolerance. You can see all the source code in github. I use next softwares:

python3.7. It's the cool lenguage ever. Ok, that's a joke. Perhaps julia will be better someday. But is really cool.

pyzmq16.0.3. The library that I use for send messages. If you want to know more about zmq, read here.

Ubuntu18.04. My operative system. Pretty cool. Inside it, I got all the power.

Docker, a container engine for encapsulate my application. In this post I'm not going to talk about docker. That is for other day.

A little few more word before the race start

I will try to talk in order of necessities. "Necessity is the mother of innovation", remembers Eric S. Raymond in its article The Cathedral and the Bazaar. But for a good understanding of what we need, we have to take a succefull strategy. I propose to go from the simplest to the complex (or at least try it, there is a time when things get circular and there is no way to escape from that circularity)

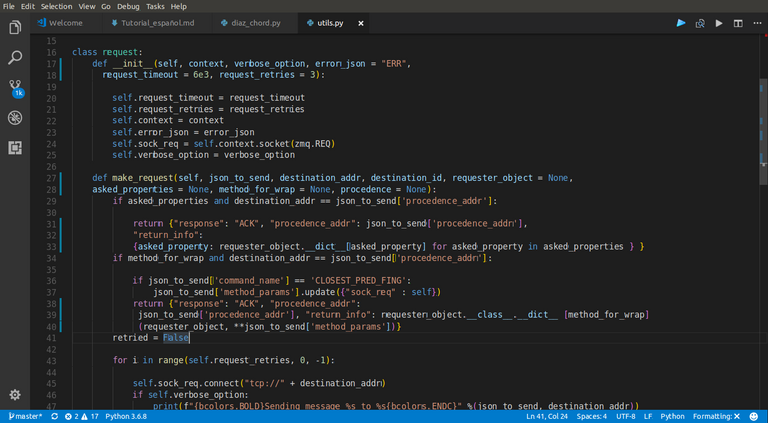

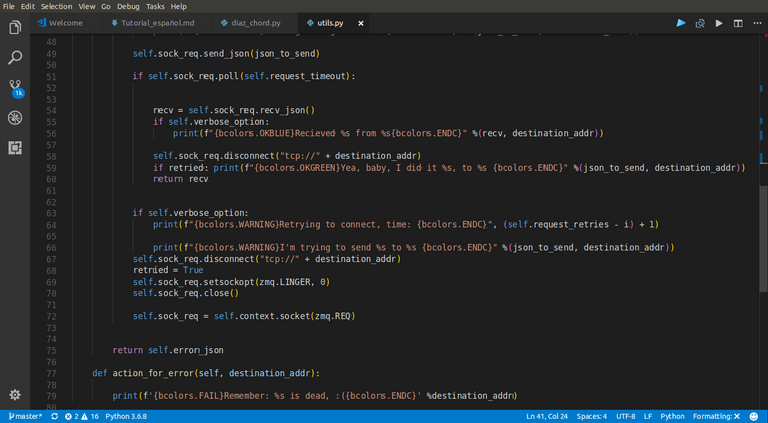

Messages structure

Using the zmq API, we can send JSON's (Java Script Object Notations) from one machine to other. A node needs to listen request from other nodes and reply to them, and in the other way, needs to make request and wait for a reply. This, define two types of messages: request messages, and replier messages. Remember, all the messages of type request wait for an answer. If the answer could'nt bee posible, the requested node is assume dead. We have in the utils.py file a request class, that wraps all the necessary activity for make a request.

|

|---|

|

In here, you can see the call self.socket.poll. This is the knowledge of operative system that was talking before. It does'nt metter if you not understand. This is what makes it possible to reposition the program flow after a while without receiving a response |

Another topic is the structure of the JSON messages itself. There is a minimal set of keys for each message type. In the case of replier messages, we have the response and return_info keys, and in the case of request I have the command_name and method_params.

The Node class

Inside the diaz_chord.py file we have the code that acitvates in each network node. I will refer indistinctly to the node class as a node, although in this case it is just code. In here there is the core functionality of the arquitecture.

So, the most simply network that we can have, and completely useless, is of just one node.

Actually, the idea of initiate the Chord with just one ring is not complex, some problems. The article that presents Chord, affirm a set of invariants that we need to mantain in order to no violate the Chord property (that one about the succesor), but in the case of one node, they don't look many cases in where, if there is fails and joins to the ring, Chord never be correct. More in here. But in few words: if each node has a list of k network next succesors, you need k+1 nodes initially connected to the network successfully at least in the network, for assert a succefull Chord in every state. I'll be back other day with all this theory. These gonna be real black metal. I swear it.

Our nodes have 3 next succesors. So we need to start the network without pitfalls for the 4 firsts nodes of the network.

Back to simplicity. We were talking about an initial node

The __init__ for an initial node

|

|---|

| Yo can see in here all that needs any node of the network, be the first one or not |

The most weird thing that we can see in that pictures, are the 21-23

lines, but these are the way in we construct the id that is gonna have the node (you can read hash in there, but this is other hash, not the Chord hash).

Other interesting thing is the zmq context, the structure through we create the sockets (another feature of the OS ).

And after that we can see the succ_list and other properties that we see in action later.

The lines from 34-37 defines the decisions that the node is gonna take for each defined command_name.

And well, like the node is an introductory one, we can send it directly to wait for a command.

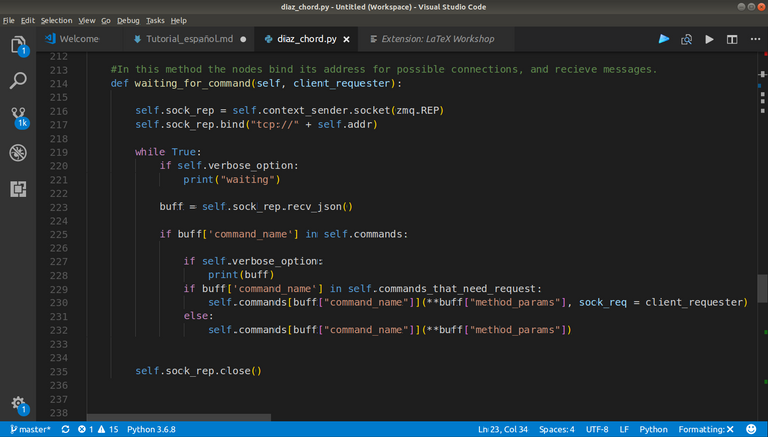

The waiting_for_command() function

|

|---|

The waiting_for_command() function assumes the answer for a requester. |

We can see in line 216 how we create a replier socket using the zmq context.

Until the node is live, it's gonna be waiting for the answer of other nodes and sending repliers (the function binded to the command_name, that has the return_info values.) So for that, we have a while True. The mos interesting line, for me, is 223, and this is because this blocks the ejecution of the program thread (we gonna back here later).

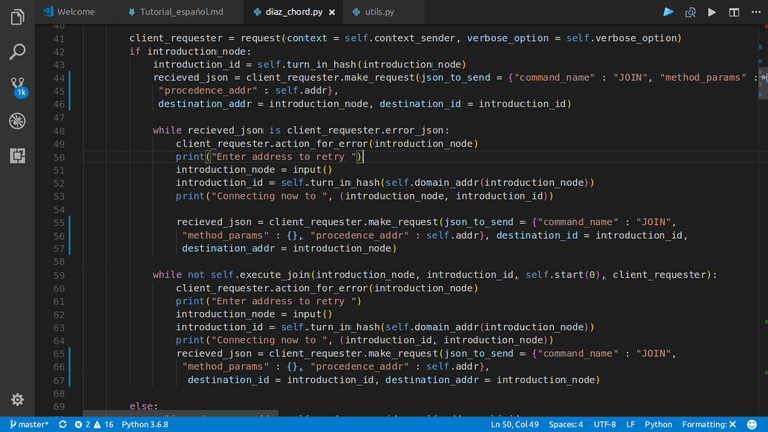

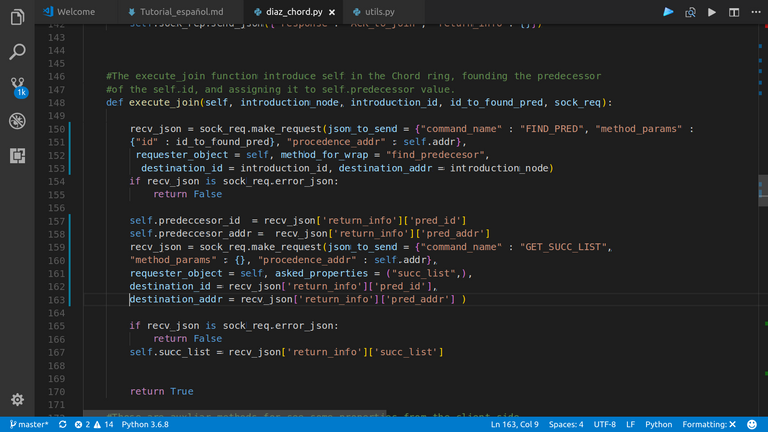

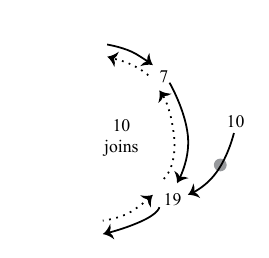

The __init__ for a nodo that makes a join

If a node is not initial, it needs to know the IP address of a network node. In that way, it can make a request to this IP, and obtain who is the predecessor, and "stand in front of it". This is all about the invariant that I said.

|

|---|

| If the request fails, ask to the user for other IP |

|:--:|

|

|---|

This is the method through a new node inserts in the network. Ask to the node who knows its IP, for its predeccesor, and keeps that value. Also keeps the succ_list of the predeccesor as itself. |

|:--:|

|

|---|

| For the node 10, we can see the result of execute the previous method. |

Stabilizing the network

After a succesfull join, the new node does not belong to the ring yet. For that it happens, we need to execute the stabilize function:

- Notify to its successor about its predeccesor value, and verifies that if predeccesor value is between the node id and its successor. If this is

True, we se the consecuent action in 119-120 lines. Else, we can see that the new value ofsucc_listfor the node is its successor, added to the successorsucc_listbut last.

|

|---|

stabilize function |

I want to talk about something that I see very interesting. The only no passive decision that we take in all the code when a pitfall happens, is in the lines 96-97. You may think that every time that we make request in the code, we need to take a decision in the request node, beyond doing nothing, return to the anterior point and wait. (Remember basics programming. This is just put a return in your code.) That's the way wrong. The only thing that node needs to do is wait, and repeat the request. There will be a time for a success, because stabilize will be executing periodically in each node, so there will be a point in where succ(x), for each key, will have a correct value(remember the invariant). This is the KISS principle screaming at your face (Keep It Simple Stupid!!!).

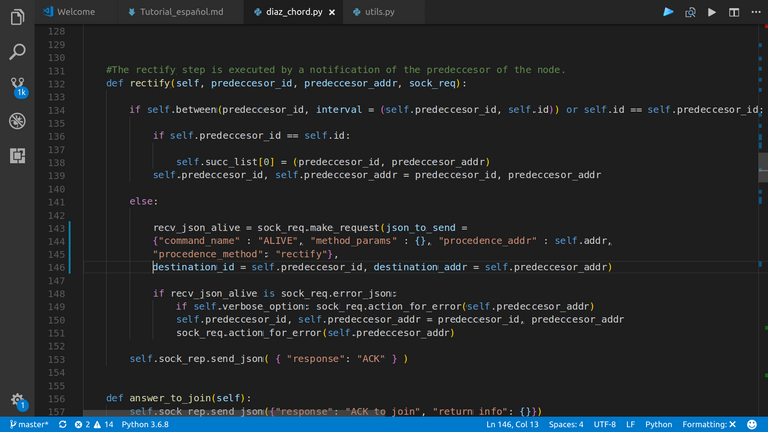

Regardless of the result, the stabilizing node sends a message to its succesor, notifying for its presence as a predecessor. This is the rectify method.

|

|---|

| This codes executes in the requested node |

The concurrent part

Like I said before, the stabilize function will be executing periodically, after a fixed unit of time. In the other hand, we also said before that the nodes needs wait for commands from other nodes. So, for avoid disponibility conflicts, I use a different thread for each function. In that way, the waiting command would not block the stabilize, and the request activity in stabilize wont block the replier role of the node.

|

|---|

The wrapper_action function, the executes at the end of __init__, y and wrapper_loop_stabilize. |

The main reason of Chord, looking for a key successor

An easy and clean estrategy is to look first for a predeccesor of x, and after that return its successor, that is the same of x. Let's think in two nodes A and B in the ring, such that succ(A) = B. They define an interval. So, if x is between them ( A < x < B), we have that succ(x) = B. In that way, we have the next code:

find_succesor |

|---|

And well... find_predecessor:

|:--:|

|

|---|

find_predecessor. The finger_table that you can see here is an optimization for make the query of the desire id in logarithmic order, instead of lineal. |

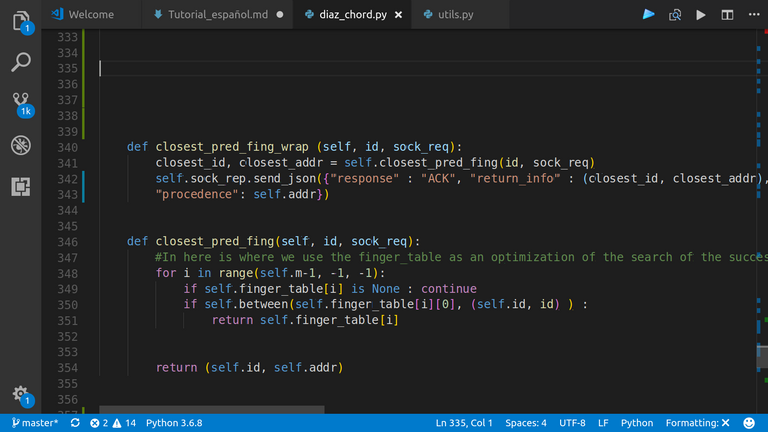

So, we need to show the method that fines the must closing predecing finger, in the finger table of the requested node:

|

|---|

closest_preceding_finger |

The little client for make request

There is a client writted in the client.py . You can play with that. Connecting to running nodes of the ring, and ask some commands an get funny answers. You can see the code in github

¿How deploy the network ?

I know that with what I have shown, whoever reads me should find himself eager for knowledge if he has come here, (if you have come here going through everything else, I want to believe that), and that the question now that you ask yourself is: "How does this Chord run?", "How does it unfold?", And as I am a good seller of products and stories, I tell you that in the next we will see it. I will talk about the last lines of the code, and how to use docker to run the system.

Conclussions

A distributed system is extremely difficult to test, both because of the nature of the problem it solves, and because of the way in which it does so. The code I have shown has certain unnecessary redundancies, parts that have no reason to be (not so many, let's clarify that). Anyway, that can be improved. But it works. It works for several instances that I have tried. I'll talk about that later. If you can give me some feedback, use it following the rules I left on github, or leaving a comment, it would be helpful.

Good night, and have a good code!