Lately I have been compressing a lot of huge CSV files that contain highly redundant data. Unsatisfied with the trade-off between compression time and compression ratio, I googled around for the most promising compression tools that are not mainstream yet still give very good results (in both time and compression ratio, with more weight on the ratio). So here it goes:

You can use pigz instead of gzip; it performs gzip compression on multiple cores.
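To get a feel for why splitting the work across cores helps, here is a minimal Python sketch. It is not how pigz itself is implemented; it only illustrates the general idea, assuming the input is cut into independent chunks that are each compressed as a separate gzip member and then concatenated (a concatenation of gzip members is still a valid gzip stream). The function name `parallel_gzip`, the chunk size, and the worker count are made up for this example.

```python
import gzip
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 1 << 20  # 1 MiB per chunk; an arbitrary choice for this sketch


def parallel_gzip(data: bytes, workers: int = 4, chunk_size: int = CHUNK_SIZE) -> bytes:
    """Compress chunks independently and concatenate the resulting gzip members.

    Hypothetical helper for illustration only; not pigz's actual algorithm.
    """
    # Split the input into fixed-size chunks (keep one empty chunk for empty input).
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)] or [b""]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so members come back in file order.
        members = list(pool.map(gzip.compress, chunks))
    return b"".join(members)


if __name__ == "__main__":
    # A highly redundant CSV-like payload.
    sample = b"id,name,value\n" + b"1,alice,100\n" * 200_000
    packed = parallel_gzip(sample)
    print(len(sample), "->", len(packed), "bytes")
    # Any gzip reader that handles multi-member streams can read it back.
    assert gzip.decompress(packed) == sample
```

The price of compressing chunks independently is a slightly worse ratio, because each chunk starts with no knowledge of the data before it. For very redundant CSV data that loss is usually small compared to the time saved.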

It is experimental compression software, still in an alpha release.

It uses a series of compression algorithms. Its main drawback is the time needed to compress anything. But hey, it has won the Hutter Prize (http://en.wikipedia.org/wiki/Hutter_Prize), a contest for compression programs, many times.

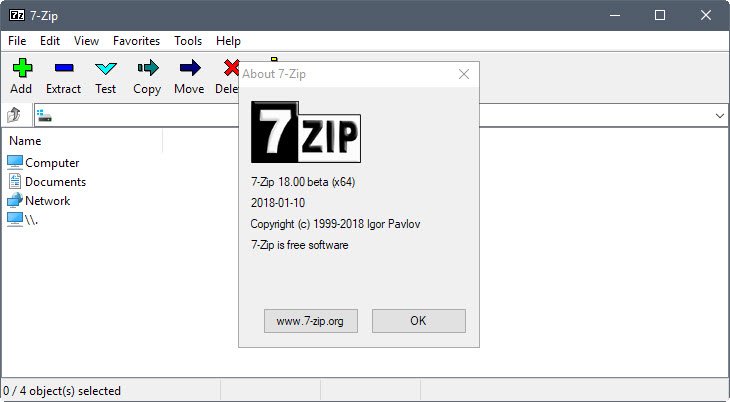

This is a classic one, and it was my choice for a long time. It has a GUI for Windows and can use multiple processor cores at once. Also, 7zip uses LZMA.

The Lempel–Ziv–Markov chain algorithm (LZMA) is a lossless data compression algorithm. It has been under development since 1996 or 1998: the SDK history file states 1996, while some unreferenced comments mention 1998. Either way, the algorithm appears to have been unpublished before its first use, which was in the 7z format of the 7-Zip archiver (7-Zip, 2001-08-30). It uses a dictionary compression scheme somewhat similar to the LZ77 algorithm published by Abraham Lempel and Jacob Ziv in 1977. It features a high compression ratio (generally higher than bzip2) and a variable compression-dictionary size (up to 4 GB), while keeping decompression speed similar to other commonly used compression algorithms.
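If you want to check the "generally higher than bzip2" claim on your own data, a rough sketch like the one below may help. It assumes Python's standard `lzma` module, which writes the `.xz` container rather than 7-Zip's `.7z` format, so the numbers are only indicative of what 7zip would produce. The helper names `compare_codecs` and `lzma_with_dict` are invented for this example, and the dictionary size shown is just one possible setting.

```python
import bz2
import lzma
import zlib


def compare_codecs(data: bytes) -> dict:
    """Return the compressed size in bytes for three standard-library codecs."""
    return {
        "raw": len(data),
        "zlib": len(zlib.compress(data, 9)),         # DEFLATE, as used by gzip
        "bz2": len(bz2.compress(data, 9)),           # bzip2
        "lzma": len(lzma.compress(data, preset=9)),  # LZMA2 in an .xz container
    }


def lzma_with_dict(data: bytes, dict_size: int) -> bytes:
    """Compress with an explicit LZMA2 dictionary size (in bytes)."""
    filters = [{"id": lzma.FILTER_LZMA2, "preset": 6, "dict_size": dict_size}]
    return lzma.compress(data, format=lzma.FORMAT_XZ, filters=filters)


if __name__ == "__main__":
    # Synthetic, highly redundant CSV-like data; substitute your own file contents.
    rows = [f"{i % 50},user{i % 7},2016-01-{(i % 28) + 1:02d}\n" for i in range(200_000)]
    sample = "".join(rows).encode("ascii")
    for name, size in compare_codecs(sample).items():
        print(f"{name:>5}: {size} bytes")
    # A larger dictionary lets the compressor refer back to more distant repeats.
    packed = lzma_with_dict(sample, dict_size=1 << 24)  # 16 MiB
    assert lzma.decompress(packed) == sample
```

Which codec wins depends heavily on the data, so it is worth running this on a real sample of your CSV files before settling on a tool.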

Image sources: ghacks.net

Disclaimer: similar text is published on my personal wordpress

Have a nice day! Upvote my post only if you liked it :)