Seems to have created a new form of manipulation where the one being manipulated is actually manipulating themselves. But that would take an entire article to explain.

As I've said here, I've experimented a lot with Bing and that product or version of AI. And as I've said elsewhere, users engaging with something like that are not going to the sites that depend on the clicks. So people then have less incentive to create the new data the system depends on to stay relevant. In a way, it could potentially eat itself to death, or become stuck in the past.

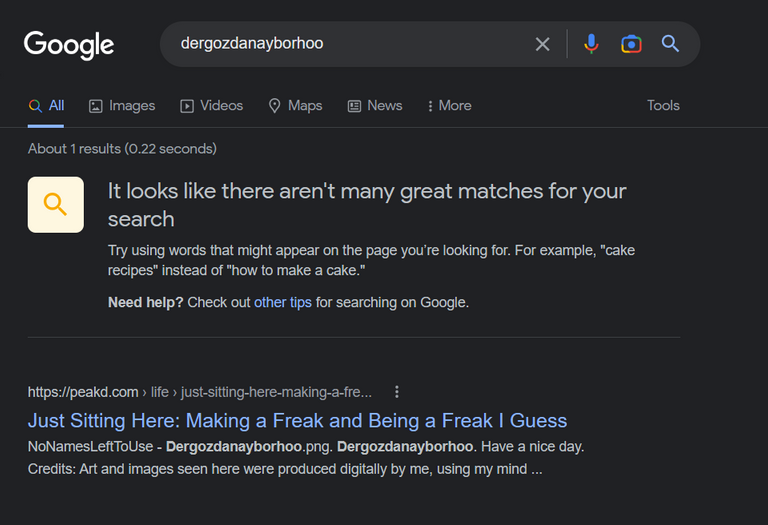

It's not as quick as Google, though. Google already has "dergozdanayborhoo", which is my invention, or new thought.

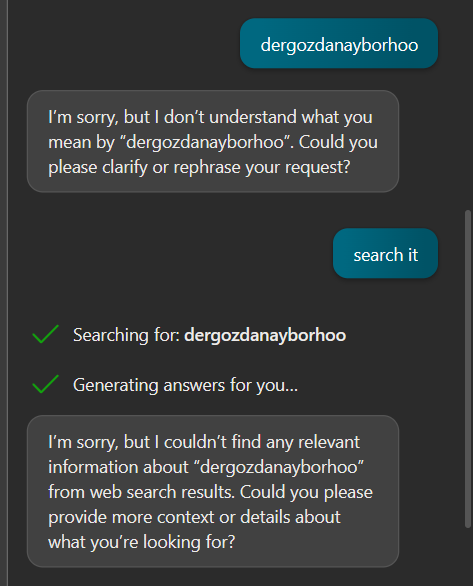

While the Bing search chatbot hasn't caught up to me yet:

Good point.

I read somewhere that the data being used for some of these AI systems stopped about a year ago or so. The paid-for versions probably use up-to-date data, though.

For some systems, yeah, it's old already. When it's tied to a search, it needs humans to constantly feed it. I can easily get today's news on Bing. But it doesn't make the events happen.

It doesn't "think" the same way a human can. And when a human takes what's already known and already part of the database AI is using to "think", then publishes something based on that information, then to AI, and technically to humanity, that's basically like you've burped and puked a bit up, then swallowed it. AI images are the same thing, really.