In October 2015, when Google invited the European Go champion Fan Hui to play a few games against a computer program called AlphaGo, his response was: “Oh, it’s just a program. It’s so easy.” He lost all five games.

A rather jovial fellow, he said his wife told him after the match that he shouldn’t check the internet “because people are saying terrible things about you… that a champion has been beaten by a computer”. Fan was later hired as an adviser by DeepMind, the Google-owned company that developed AlphaGo, an Artificial Intelligence (AI) program.

Five months later, Google challenged one of the world’s finest Go players, grandmaster Lee Sedol of South Korea, to a five-game match. As Lee walked in to play against the machine, he said: “Human intuition is still too advanced for AI to have caught up. I am going to do my best to protect human intelligence.”

Lee had a point. Not everything in life is about logic; sometimes you do things because they seem the right thing to do. Or you act on instinct. But Lee had no idea what he was up against. He didn’t know that the brains behind AlphaGo had done some smart programming “to mimic people’s intuition”.

In an article in The Atlantic, Michael Nielsen, a quantum physicist and computer programming researcher, says, “Top Go players use a lot of intuition in judging how good a particular board position is.”

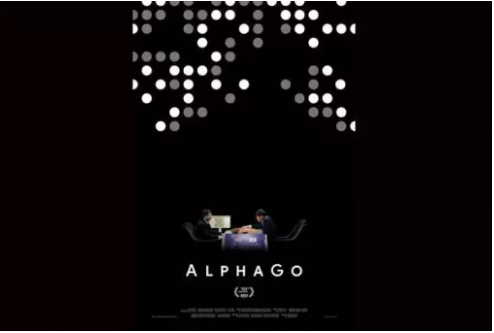

The story of how DeepMind developed this amazing program, and of how the thrilling match played out, has been turned into a 90-minute documentary called AlphaGo, released on Netflix earlier this month. It is a fascinating look at how the DeepMind team perfected the program to win at this ancient Chinese board game, which is said to have more possible configurations than there are atoms in the universe.

At one point, AlphaGo makes a move that experts say no human player would have made. It seems the computer came up with “something original”. Then comes a bigger surprise: The move wasn’t taught by a human; the program taught itself.

How does one program a program to teach itself? The researchers began by showing AlphaGo hundreds of thousands of games played by humans and getting it to mimic human players. In its initial avatar, it wasn’t very strong. Grandmaster Lee had seen it play before his match and was confident that he would easily defeat the computer 5-0. What he didn’t know was that AlphaGo had since been playing against different versions of itself thousands of times, keeping track of moves that worked and discarding ones that didn’t. It was getting stronger.

To cut a long story short, the machine won four games to one. But that’s not the end of the story.

After the Lee match, DeepMind kept improving AlphaGo and created Master, a more powerful version. Master then began challenging the world’s best players to online games, without revealing that it was a program and not a human player.

It played 60 games and won all of them. Some players suspected that they were playing against AI, but weren’t sure.

A few months after revealing Master’s identity, DeepMind challenged Ke Jie, the world’s highest-ranking Go player, to a three-game match. Ke lost all three, and said the computer played like “the God of Go”.

Then DeepMind released yet another version, called AlphaGo Zero. It was stronger than Master, but what stunned the world was the announcement that Zero had learnt to play the game without ever watching a human play. It had learnt and mastered Go by playing millions of games against itself.

In the beginning, humans created Artificial Intelligence and taught it to do things. Now AI is teaching humans.

In short, AlphaGo Zero had learnt Go from scratch. In a video titled Starting From Scratch on the DeepMind website, lead researcher David Silver explains what the program can do and what went into creating it. The programmers gave it the rules of the game; it learnt the rest for itself. “Eventually it surpassed all our expectations,” says Silver.
