Hello there! Are you interested in learning the basics of music production or sound design? Good! Welcome! This lesson is part one of an introduction to basic concepts of producing music on a computer using a digital audio workstation. Today, I will be teaching you about frequency.

Frequency

When we talk about frequency, we’re talking about measuring sound waves. When something vibrates, such as the string of a guitar, it sets the nearby air molecules vibrating as well. These vibrations travel through the air to our eardrums, and the brain perceives them as sound. To get a picture, visualize a stone dropping into a calm pool of water: each ripple spreading outward is akin to the vibrations moving through the air.

Now imagine how often the ripples reach the edge of your pool, or imagine invisible waves emanating from a sound source such as a speaker. Pulses that arrive more slowly indicate a lower frequency, and pulses that arrive more quickly indicate a higher frequency; we perceive these as lower or higher in pitch. We measure these waves by the number of cycles completed every second, where one cycle is one compression (air molecules squeezed together) followed by one rarefaction (air molecules spread apart). This is measured in hertz (Hz), the unit of frequency in the International System of Units (SI), defined as one cycle per second. For example, a vibrating object that completes 700 cycles per second has a frequency of 700 Hz, while an object that completes 7,000 cycles per second has a frequency of 7 kHz.
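As a quick check on the arithmetic, the period of a wave (the time one cycle takes) is simply the reciprocal of its frequency. The short Python sketch below uses hypothetical helper names, `period_seconds` and `format_frequency`, to convert a frequency into its period and to display it in Hz or kHz the way the 700 Hz and 7 kHz examples do.

```python
def period_seconds(frequency_hz: float) -> float:
    """Return the duration of one cycle, in seconds, for a frequency in Hz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    # One hertz is one cycle per second, so one cycle lasts 1/f seconds.
    return 1.0 / frequency_hz


def format_frequency(frequency_hz: float) -> str:
    """Show frequencies below 1,000 Hz in Hz and higher ones in kHz."""
    if frequency_hz >= 1000:
        return f"{frequency_hz / 1000:g} kHz"
    return f"{frequency_hz:g} Hz"


for f in (700, 7000):
    ms = round(period_seconds(f) * 1000, 4)
    print(format_frequency(f), "->", ms, "ms per cycle")
```

A higher frequency means more cycles squeezed into each second, so each individual cycle is shorter.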

Phase

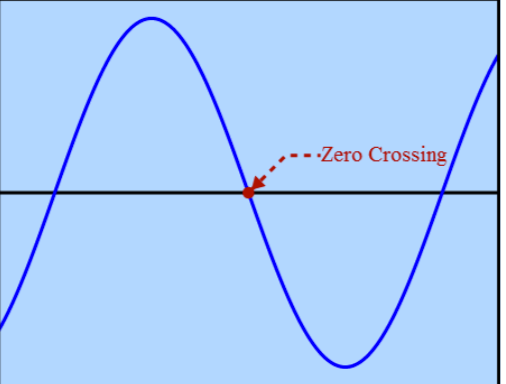

The position of a point within a waveform’s cycle is known as its phase. Phase is measured in degrees, as around a circle, with one full cycle spanning 360 degrees. For a sine wave, each cycle starts at zero (0 degrees), passes back through zero halfway through the cycle (180 degrees), a point known as the ‘zero crossing’, and returns to zero again at the end of the cycle (360 degrees).
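To connect degrees with time: a wave of frequency f advances through 360 degrees of phase every 1/f seconds. The sketch below (hypothetical helper names, standard-library `math` only) computes the phase a sine wave has reached at a given moment and its value there, so you can see it sit at zero at 0 and 180 degrees and reach its peak at 90 degrees.

```python
import math


def phase_degrees(frequency_hz: float, time_s: float) -> float:
    """Phase (0 to 360 degrees) reached at time_s by a wave that starts at 0 degrees."""
    # Each cycle is 360 degrees, and the wave completes frequency_hz cycles per second.
    return (360.0 * frequency_hz * time_s) % 360.0


def sine_at_phase(phase_deg: float) -> float:
    """Value of a unit-amplitude sine wave at the given phase."""
    return math.sin(math.radians(phase_deg))


# A 100 Hz wave takes 0.01 s per cycle, so quarter-cycle steps are 0.0025 s apart.
for t in (0.0, 0.0025, 0.005, 0.0075):
    p = phase_degrees(100, t)
    print(f"t={t:.4f} s  phase={p:5.1f} deg  value={sine_at_phase(p):+.3f}")
```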

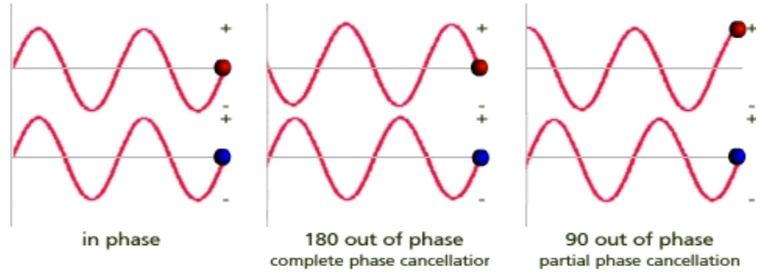

So, if two objects vibrate at different frequencies and their waveforms are mixed, both waveforms will begin at the same zero point, but the higher-frequency waveform will gradually pull ahead of the lower-frequency one. The two drift in and out of phase until they line up again, and then the process repeats. The resulting periodic rise and fall in loudness is known as beating.
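The rate of beating equals the difference between the two frequencies: tones at 440 Hz and 442 Hz, for example, swell and fade twice per second. The Python sketch below (hypothetical helper names, standard-library `math` only) mixes two sine waves and uses the sum-to-product identity to compute the slowly changing loudness envelope of the mix.

```python
import math


def beat_frequency(f1_hz: float, f2_hz: float) -> float:
    """How many times per second the loudness of the mix swells and fades."""
    return abs(f1_hz - f2_hz)


def mixed_sample(f1_hz: float, f2_hz: float, time_s: float) -> float:
    """Sum of two unit-amplitude sine waves that both start at phase zero."""
    return math.sin(2 * math.pi * f1_hz * time_s) + math.sin(2 * math.pi * f2_hz * time_s)


def beat_envelope(f1_hz: float, f2_hz: float, time_s: float) -> float:
    """Peak level of the mix around time_s."""
    # sin(a) + sin(b) = 2 * cos((a - b) / 2) * sin((a + b) / 2)
    return abs(2 * math.cos(math.pi * (f1_hz - f2_hz) * time_s))


print(beat_frequency(440, 442))       # beats per second
print(beat_envelope(440, 442, 0.0))   # waves in phase: loudest point
print(beat_envelope(440, 442, 0.25))  # half a beat later: nearly silent
```

The closer the two frequencies are, the slower the beating; tuning an instrument by ear often relies on slowing the beats until they disappear.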

The speed at which waveforms beat together depends on the difference in frequency between them. Phase relationships can also produce an effect known as phase cancellation, which can be applied creatively in the studio mix. If two waves have the same frequency and amplitude and are 180 degrees out of phase with each other, one waveform will reach its peak while the other is at its bottom, or trough; the two cancel out, and no sound is produced.
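Phase cancellation is easy to demonstrate numerically. The sketch below mixes a 1 kHz sine wave with a copy of itself, sampled at an assumed 48 kHz rate for one cycle (48 samples). The helper names are hypothetical. With no offset the copies reinforce each other and peak at twice the level; with a 180-degree offset every sample sums to essentially zero.

```python
import math


def sine(frequency_hz, time_s, phase_deg=0.0, amplitude=1.0):
    """One sample of a sine wave with an optional phase offset in degrees."""
    return amplitude * math.sin(2 * math.pi * frequency_hz * time_s + math.radians(phase_deg))


def mix_with_offset(frequency_hz, offset_deg, sample_rate=48000, n_samples=48):
    """Samples of a sine wave mixed with a copy of itself shifted by offset_deg."""
    mixed = []
    for n in range(n_samples):
        t = n / sample_rate
        mixed.append(sine(frequency_hz, t) + sine(frequency_hz, t, offset_deg))
    return mixed


in_phase = mix_with_offset(1000, 0)    # peaks line up and reinforce each other
opposed = mix_with_offset(1000, 180)   # peaks meet troughs and cancel
print(max(in_phase), max(abs(x) for x in opposed))
```

Offsets between 0 and 180 degrees give partial cancellation, which is why the same signal recorded by two microphones at different distances can sound thin when the tracks are mixed.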

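It’s important to remember that frequencies at integer multiples of the fundamental (lowest) frequency tend to sound consonant, or in harmony, with one another. Pythagoras is traditionally credited with discovering that simple whole-number ratios between pitches produce consonant intervals, and three useful rules follow from this idea:

- Multiplying or dividing a note’s frequency by two produces a note exactly one octave higher or lower (a 2:1 ratio).

- Multiplying a note’s frequency by three produces a note an octave and a perfect fifth higher; bringing it back down an octave gives the 3:2 ratio, known as the perfect fifth in the western musical scale. Dividing by three and bringing the result up an octave gives a perfect fifth below (2:3).

- Multiplying a note’s frequency by five produces a note two octaves and a major third higher; bringing it back down two octaves gives the 5:4 ratio, an interval known as the major third.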
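The rules above all come down to multiplying by a whole number and then halving or doubling until the result falls within one octave. The sketch below uses Python’s standard `fractions.Fraction` to keep the ratios exact; `fold_into_octave` is a hypothetical helper name, and the 220 Hz starting note is chosen only for illustration.

```python
from fractions import Fraction


def fold_into_octave(ratio: Fraction) -> Fraction:
    """Halve or double a frequency ratio until it lies within one octave, [1, 2)."""
    while ratio >= 2:
        ratio /= 2
    while ratio < 1:
        ratio *= 2
    return ratio


fundamental_hz = 220  # illustrative starting note
for multiple, name in ((3, "perfect fifth"), (5, "major third")):
    ratio = fold_into_octave(Fraction(multiple))
    print(f"x{multiple} -> {ratio} ({name}): {float(fundamental_hz * ratio):g} Hz")
print(f"octave: {fundamental_hz * 2} Hz")
```

Halving or doubling only moves a note between octaves, which is why the folded ratios keep their musical identity.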

Timbre

The most basic frequency is called the fundamental frequency: a single sine wave producing a single tone, which in effect determines the pitch of the note. Additional sine waves at integer multiples of the fundamental frequency are known as harmonics; components that are not integer multiples are known as inharmonic partials, or simply partials. This mix of harmonics and partials determines the shape of the waveform, and we hear it as the timbre of the sound. In other words, timbre describes how a sound’s energy is distributed across multiple frequencies. Every musical instrument has its own distinctive timbre, shaped largely by the materials it is made from and the way it is played.
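One way to see how harmonic content shapes a waveform is additive synthesis: summing sine waves at multiples of a fundamental. The sketch below (hypothetical helper names, standard-library `math` only) lists a harmonic series, checks whether a component is a harmonic, and builds a sample from weighted harmonics. Weighting harmonic n by 1/n is a common textbook way to approach a sawtooth-like, brighter tone at the same pitch as the plain sine.

```python
import math


def harmonic_series(fundamental_hz: float, count: int) -> list[float]:
    """The fundamental and the harmonics above it: f, 2f, 3f, ..."""
    return [fundamental_hz * n for n in range(1, count + 1)]


def is_harmonic(component_hz: float, fundamental_hz: float, tolerance: float = 1e-6) -> bool:
    """True if a component sits at a whole-number multiple of the fundamental."""
    ratio = component_hz / fundamental_hz
    return round(ratio) >= 1 and abs(ratio - round(ratio)) < tolerance


def additive_sample(fundamental_hz: float, amplitudes: list[float], time_s: float) -> float:
    """Sum sine-wave harmonics; amplitudes[k] scales harmonic number k + 1."""
    return sum(
        a * math.sin(2 * math.pi * fundamental_hz * (k + 1) * time_s)
        for k, a in enumerate(amplitudes)
    )


print(harmonic_series(110, 4))
print(is_harmonic(330, 110), is_harmonic(300, 110))
# Same pitch, different timbre: a pure sine versus eight 1/n-weighted harmonics.
pure = additive_sample(110, [1.0], 0.001)
bright = additive_sample(110, [1 / n for n in range(1, 9)], 0.001)
print(pure, bright)
```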

Filter

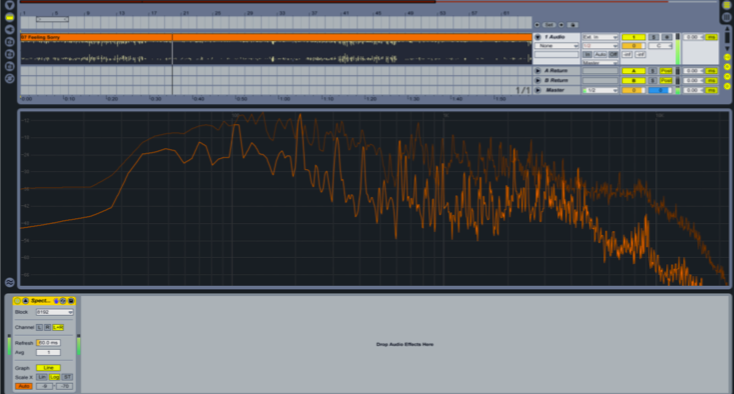

The timbre of a sound can be heard across the range of human hearing and measured with a software tool called a spectrum analyzer. When working with frequency, you will be working with filters. A filter changes the amplitude of a sound at certain frequencies, boosting or cutting some while leaving others untouched, and in doing so it manipulates the timbre of the sound. When we use equalization, or EQ, we are harnessing a collection of filters. In the popular DAW (Digital Audio Workstation) Ableton Live, the main tools used to monitor and shape frequency content are the Spectrum device and EQ Eight. On both tools, the y-axis shows amplitude (in decibels, dB), and the x-axis shows the frequency range (in hertz, Hz).

The human hearing range is approximately 20 Hz to 20,000 Hz (20 kHz), although many adults can only hear up to about 18 kHz. Our ears act as natural equalizers: they are more sensitive to some frequencies than to others, and this sensitivity varies from person to person, so different people effectively equalize sound differently. People tend to “feel” very low frequencies more than hear them, and the perception of higher frequencies generally diminishes with age, with many middle-aged listeners unable to hear frequencies above about 14 kHz.
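A filter’s effect can be described as a gain, in dB, that changes with frequency. As a hedged illustration, the sketch below models an idealized first-order low-pass filter, a standard textbook response and not a description of how EQ Eight’s filters are implemented. The helper names and the 1 kHz cutoff are assumptions for the example.

```python
import math


def amplitude_to_db(ratio: float) -> float:
    """Convert an amplitude ratio to decibels (0 dB means unchanged)."""
    return 20.0 * math.log10(ratio)


def first_order_lowpass_gain(frequency_hz: float, cutoff_hz: float) -> float:
    """Amplitude ratio passed by an idealized first-order low-pass filter."""
    # Close to 1 well below the cutoff, 1/sqrt(2) at the cutoff,
    # and falling toward 6 dB per octave well above it.
    return 1.0 / math.sqrt(1.0 + (frequency_hz / cutoff_hz) ** 2)


cutoff = 1000.0
for f in (100, 1000, 2000, 10000):
    gain_db = amplitude_to_db(first_order_lowpass_gain(f, cutoff))
    print(f"{f:>6} Hz: {gain_db:6.2f} dB")
```

At the cutoff this filter is about 3 dB down, and a decade above it is about 20 dB down, which is roughly what you would see as a gentle slope when drawing a low-pass curve on an EQ display.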

This lesson has covered some of the fundamental aspects of frequency and how it relates to sound. We have learned that frequency is the rate at which a vibration repeats, measured in cycles per second. We have learned about the hertz as the unit of frequency, and about phase, beating, and phase cancellation. We know that timbre describes how a sound’s energy is distributed across multiple frequencies, which are called harmonics if they are integer multiples of the fundamental, or partials if they are not. Finally, we know that the human ear acts as a natural EQ.

Thank you very much for reading. I hope you learned more about the concept of frequency today, and hopefully you can apply this knowledge to your musical creations. If you found this article useful, please upvote and leave a comment! I'd be happy to answer any questions you have regarding sound design or music production.
