Women recently expressed concern on social media after learning their iPhones sort some of their pictures into a “brassiere” category. The AI setting is intended to detect a variety of things, including food, dogs, weddings, and cars, but a simple search in the photo app for “brassiere” made some women feel violated.

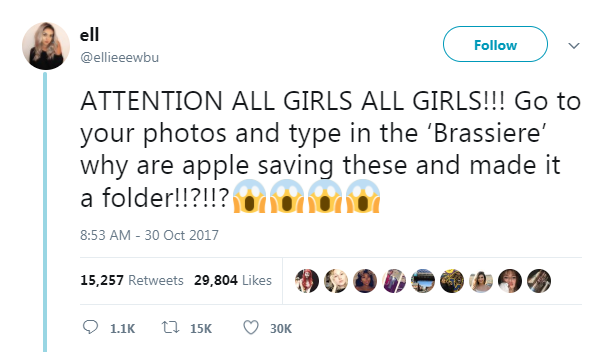

The image detection feature has been active on iPhones for over a year and recognizes over 4,432 keywords, but it garnered attention after a Twitter user posted about it at the end of October:

The tweet received thousands of retweets and likes and left many women concerned about their privacy.

Despite the creepy implications of artificial intelligence categorizing pictures of women in their bras (I checked my phone, and the results were a bit unsettling) — and the fact that you can’t turn the AI setting off — the photos aren’t automatically shared with Apple.

Unless your phone is set to upload images to iCloud, the photos and their categorizations remain strictly on the individual’s device.

Further, the cataloging of bra shots was not universal. Quartz reported on the story and had its employees check their phones:

“Tests by the Quartz newsroom and others on Twitter confirm that ‘brassiere’ is searchable in Photos—however, results were mixed. One Quartz reporter’s search yielded only an image of her in a dress, skipping over photos of friends at the beach. Another wore a bra as a part of a costume, but the AI didn’t surface those pictures. The AI often included photos of dresses with skinny straps, or sports bras. Others confirmed that the folder had—disconcertingly—worked as intended.”

“For another Quartz reporter, the Photos app catalogued an image of a t-shirt (featured in a past story), which seems to confirm our working theory: It’s looking for shapes that resemble bra straps.”

Even so, the brassiere category on my phone included a picture of me in a tube top, which had no straps at all.

Regardless, The Verge noted an interesting disparity between women’s and men’s undergarments:

“One thing to note here is that while women’s undergarments like ‘bra’ are listed as categories, there’s no mention of men’s boxers or briefs. Clearly someone had to have made a conscious decision to include (or not include) certain categories. Even ‘corset’ and ‘girdle’ are on the list. Where is the same attention to detail for mens’ clothing?”

The Verge also pointed out that Google has the same feature and the photos are automatically uploaded to the cloud and stored on Google’s servers. Google’s machine learning photo detection has been around since 2015. As the outlet observed:

“Should the fact that ‘brassiere’ is a category at all be concerning? Or is it more alarming that most people didn’t know that image categorization was a feature at all?”

This article was written for @antimedia

My Links:

Patreon: https://www.patreon.com/CareyWedler

Anti-Media: http://theantimedia.org/author/careyw1/

Youtube: https://www.youtube.com/channel/UCs84giQmEVI8NXXg78Fvk2g

Instagram: https://www.instagram.com/careywedler

Facebook: https://www.facebook.com/CareyWedler/

Twitter: https://twitter.com/careywedler

Thanks for posting this point of view Carey. Again, I'm pleased to see that you have found a new home here at #steemit and have an escape from the abusive environment of the other platforms.

With that being said, I find it absurd that anyone who is taking selfies and showing plenty of skin, is at all concerned about privacy. How is it possible that people think that there is ANY privacy at all connected to images that they upload to the Internet? If they are so concerned about how the pictures will be stored or categorized, then they should realize that they are in full control from this point moving forward and simply can STOP uploading those pics.

They can scratch that same itch by using disposable cameras that still use film or that provide instant Polaroid style pics that they can hold in their hand and store in a box in their closet, where only they can see them and control who else sees them. But they can't pass those pics around because anyone who has them can take a pic of the pic and then upload it... LOL You can't have it both ways.

The proverbial genie is out of the bottle, with respect to privacy and online pics. IMHO, these days privacy can only be easily achieved by keeping the content one is concerned about, OFFLINE.

BTW, it is definitely "...more alarming that most people didn’t know that image categorization was a feature at all". The mindless use of technology, with total disregard for the potential negative consequences that it poses, is a cancerous phenomenon in our society in the new millennium. It is at the very core of the growing foul, base, and low practice of revenge porn that ruins many young lives.

The practice of "active thinking" seems to be in an endangered state of being.

Shit, I freaked out when I realized some shit on my computer and/or phone had located and categorized all my pictures of rainbows and dogs!

Hi! I am a robot. I just upvoted you! I found similar content that readers might be interested in:

http://theantimedia.org/iphone-track-bra-brassiere/

Yes, that is where it was originally posted. I am the editor-in-chief of theantimedia.org @cheetah...

You can't blame AI technology if you are silly enough to not keep private pics private (or not take them at all)

Well, it sounds like the photos never left the phone (unless automatic upload to iCloud was enabled of course) so I would consider them "private".

However I think this story could open the eyes of people who didn't think such things were possible and maybe those people will think more about what data they (want to) share with companies like Apple by uploading them, for example, to a cloud.

Another thing the article points out is that somebody must have made the decision to include women's underwear but not men's. That is a reason not to blame machine learning itself; rather, we as a society need to ask ourselves how we should deal with such technology. Regardless of what you think of it, categorizing only women in underwear is not neutral. Should AI always be "neutral" by only categorizing things in an "objective" way? Should AI analyze things that are so personal at all? There are many questions to be asked, some more complicated than others.

Overall I didn't see anybody blaming AI; the tweet that was shown even asked "... why are apple...". I don't mean this in an offensive way, but I think the whole thing is not about "blaming AI" but a different issue. Feel free to discuss with me tho, I think it's a very interesting topic.

Machine learning recognises patterns and common themes; it's more a reflection of society's interest in women's garments than of the technology.

But most likely a human has handpicked the categories for the algorithm to detect.

That's funny.

One of the more humorous posts that I've read today.

good work.

I feel so violated...no more bra selfies for me.

Thanks

Shit company, voluntary exchange, dumb masses.

I'm glad it's safe to take selfies on Android phones - LOL...

Safe for who? The one making the picture, or the one looking at it?

Unless you automatically back up/upload them to Google Photos; then they are analyzed inside the cloud, so it's even worse lol.

Seems like the AI is in some kinky stuff.

Well, that is really weird on all accounts, especially how it was snuck into the functioning of the AI. :-/

This was so long ago--why are you recycling information that everyone knows already?

-armintrepic

Thanks, Carey ( @careywedler ), for this. That's weird and very creepy. I would be unsettled too if I were a woman, even though it is not shared with Apple. Who knows about the future, though?