For years I’ve talked about how much I respect Apple for doing everything it can to protect people’s privacy. I believe it’s important for people to feel confident expressing themselves however they want, and that includes when we use technology as a medium of expression.

I’ve also put a lot of blind faith in the walled garden Apple maintains around its software and approval process: a team of humans checking every app for malicious code, for tracking it isn’t supposed to do, and for sending data back to unknown places.

Apple has always presented itself as an advocate for privacy and says it believes privacy is a fundamental human right. It also markets the phrase “What happens on your iPhone stays on your iPhone.” It all sounds great, right?

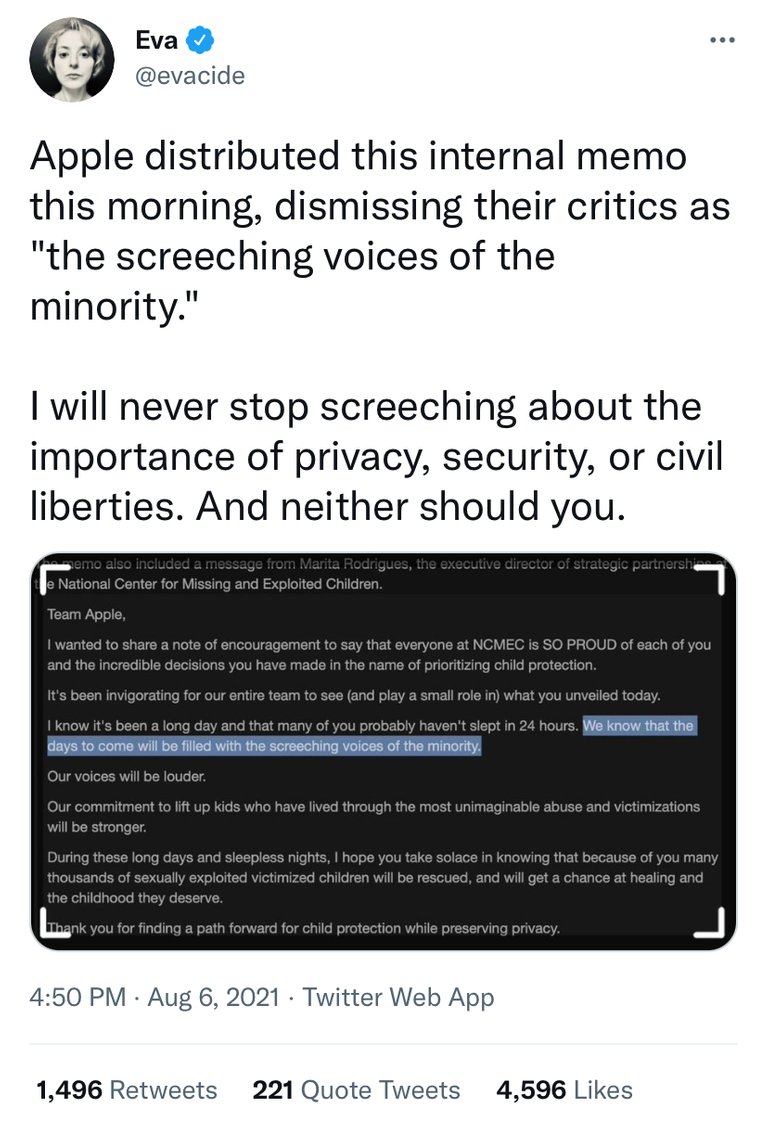

So far everything has been great in my experience, but I recently came across a post on Reddit saying that Apple plans to scan photos for child abuse material in a future update, and that it is partnering with an entity that describes the people who will oppose this type of scanning as a “screeching minority.”

So hold up,

Wait a minute,

Something ain’t right.

This is probably fake or somebody that just doesn’t like Apple in general wrote this, right?

Well, it turns out this information surfaced through a controversial tweet from the director of cybersecurity at the Electronic Frontier Foundation, an organization that defends civil liberties in the digital space.

Here’s a bigger photo for those who want to see the full picture. Links for all of this are included at the end.

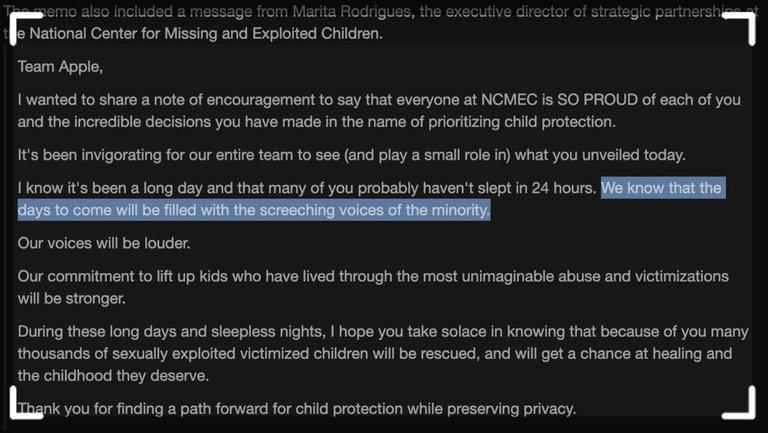

This is a memo that Marita Rodriguez, the executive director of strategic partnerships at the National Center for Missing and Exploited Children (NCMEC), sent to Apple.

It’s important to note here that the “screeching voices of the minority” will include people who are speaking out against this not because they have something to hide or because they’re terrorists, but because they value privacy and can see that the road to hell is paved with good intentions.

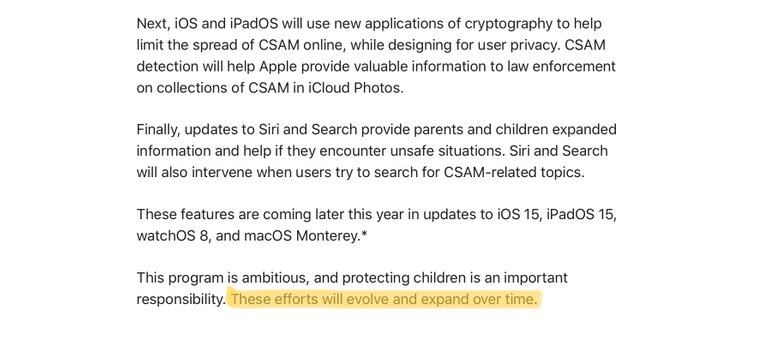

OK, so before we go any further, what does Apple have to say about all this? Let’s go straight to the gospel and take a look at the support page they put up about it. I’m going to use screenshots here because Apple could change the wording on its website later, so this matters for documentation and preservation purposes. But again, a link to the page is at the bottom of this post.

What I want to illustrate with the picture above is that Apple is currently doing this only in the Messages app and when your phone uploads photos to iCloud, but they say this will evolve and expand over time, without any clarity about what exactly that means. It leaves users like me questioning how this will play out.

“What does evolve and expand over time mean?”

“Is the scanning going to get more sophisticated as it ‘evolves’?”

“Will it ‘expand’ beyond iCloud and the Messages app?”

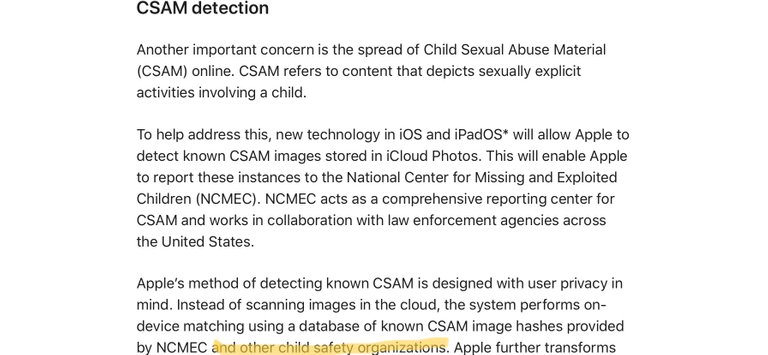

Let’s look at what Apple says about these two new controversial features:

To put the above screenshot simply, Apple is installing a database of codes on every iOS 15 device, built from a database of codes that NCMEC gives them, so that photos can be matched against it. But not just that, “other child safety organizations” can contribute to it too!
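To make the idea of matching “codes” a bit more concrete, here’s a minimal sketch in Python. Treat it as an illustration only: Apple’s real system is more involved than this, and I’m swapping in a plain SHA-256 digest and an ordinary set as stand-ins. The names `code_for`, `KNOWN_CODES`, and `matches_database` are made up for this example.

```python
import hashlib


def code_for(image_bytes: bytes) -> str:
    """Return a fixed-length code for an image.

    SHA-256 is only a stand-in here (an assumption for illustration),
    not the hashing scheme Apple actually uses.
    """
    return hashlib.sha256(image_bytes).hexdigest()


# Hypothetical on-device database: it stores codes only, not images.
KNOWN_CODES = {code_for(b"example flagged image")}


def matches_database(image_bytes: bytes) -> bool:
    """Check whether an image's code appears in the database."""
    return code_for(image_bytes) in KNOWN_CODES
```

The point of the sketch is that the device only ever sees the codes. Looking at the database tells you nothing about which pictures the codes stand for, which is why it matters so much who gets to add to it.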

“Who exactly are these other organizations, and how can we be sure they don’t upload codes for pictures of political dissent or media of protests?”

OK, next “feature”:

This screenshot from Apple’s website talks about “on-device machine learning to analyze image attachments,” so the analyzing and scanning isn’t only happening in the cloud; it’s now happening on the devices themselves. If you consent to installing iOS 15, you’re basically installing an on-device scanner, or analyzer, as Apple calls it here.

OK, so what exactly happens when this on-device scanning picks up something it doesn’t see as appropriate? Well, here’s the response from the Apple support page itself:

Here it basically says that once the AI picks up enough questionable material, it will send that material to Apple employees so they can look at it and then straight up disable your Apple account. Once your account is disabled, you’ll have to go through an appeal process to get back your account and your messages, and to be able to use FaceTime and download apps from the App Store again. We don’t know how backed up their support will be for something like this.
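The “picks up enough” part works like a counter with a cutoff. Here’s a rough sketch of that logic in Python, under my own assumptions: the cutoff value, the `Account` class, and the `record_scan` function are all hypothetical and are not taken from Apple’s documentation.

```python
from dataclasses import dataclass

# Hypothetical cutoff, chosen only for this example.
REVIEW_THRESHOLD = 3


@dataclass
class Account:
    match_count: int = 0
    flagged_for_review: bool = False


def record_scan(account: Account, is_match: bool) -> bool:
    """Count a scan result and flag the account once the cutoff is reached."""
    if is_match:
        account.match_count += 1
    if account.match_count >= REVIEW_THRESHOLD:
        account.flagged_for_review = True
    return account.flagged_for_review
```

In this sketch nothing happens on the first or second match. Human review only comes into play once the count reaches the cutoff, so everything depends on who picks that number and whether it can change later.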

“How long will the appeal process take?”

“What parts of my iPhone, iPad, and MacBook can I use while my account is disabled?”

“Are my devices basically bricked until a decision is made on the appeal?”

“Apple provides no support to people who bought Apple products from someone who didn’t disable the iCloud Activation Lock feature. If my account is disabled on my devices, will I be able to sell them to somebody who can log in with a different Apple ID, or will my devices be unsalvageable, like current devices that have iCloud Activation Lock on them?”

Questions like these were popular on the Internet for a few days while Apple said nothing. Then the Wall Street Journal posted a video interview with Craig Federighi talking about the controversial features:

https://m.youtube.com/watch?v=OQUO1DSwYN0

Although the interviewer did have some good questions, this was hard to watch, and I wish she had gone into some of the questions I’ve highlighted here. I wonder what the responses would be?

The biggest question on people’s minds is this:

“How can we know that this back door that’s being installed, and that will ‘evolve and expand over time,’ won’t be abused by foreign governments?”

Here are examples of Apple bending to government requests in the past:

China:

“On the Chinese variant, no Facetime audio calls can be made or received; Facetime Video, however, can be used. At 10% or 20% battery, the Chinese variant makes a robotic dying sound that can’t be disabled.”

Russia:

In 2019, Russia dictated that all computers, smartphones, smart TVs, and so on sold there must come preloaded with a selection of state-approved apps, including browsers, messenger platforms, and even antivirus services.

Source:

https://www.wired.com/story/apple-russia-iphone-apps-law/

When China wanted to oppress the Hong Kong protesters, they asked Apple to remove the Taiwan flag emoji so those people couldn’t express their sovereignty, and Apple took it out for users in that region:

Source:

https://www.theverge.com/2019/10/7/20903613/apple-hiding-taiwan-flag-emoji-hong-kong-macau-china

It seems that a government can say whatever it wants and Apple will comply, as long as it doesn’t hurt their bottom line.

So Apple is doing this because it is supposedly going to stop child predators, but have they talked about how it might enable some? Have we had a discussion about how some parents who are hostile to the LGBTQIA+ community might actually use this feature to track and abuse their own children? What about governments that will create their own databases to fish out their own citizens? In places like Iran and Egypt gay porn is banned, and memes of Winnie the Pooh are currently banned in China so the Chinese president doesn’t get his feelings hurt. How do we know those countries won’t update their databases with these images?

They are doing this in the name of “protecting children,” but we’ve seen in the past that ramping up security can do virtually nothing to catch more offenders. Here’s an article reporting that even after security was ramped up, the TSA still failed 95% of the breach tests run by undercover agents at airports.

This is just another example of “security theatre,” where the perceived upgrades do little to actually improve security but DO encroach on our privacy:

Source:

https://en.wikipedia.org/wiki/Security_theater

And before you say “oh, I’ll just get around it by not uploading my photos to iCloud and not using iMessage,” think again. Apple has said it is open to expanding these features to third-party apps, and before long it might just become a requirement at the request of some government:

Source:

https://www.macrumors.com/2021/08/09/apple-child-safety-features-third-party-apps/

If you’ve made it this far, congratulate yourself on having a longer attention span than most. If you’re wondering what I’m doing about it, here’s my call to action:

1: Vote with your software and don’t update your iOS devices to iOS 15. This will only work for so long, until you’re vulnerable to newly discovered security flaws or your data becomes unusable with no way to get it off your iOS devices.

2: Vote with your dollar. I’m now buying an Android device that I can completely wipe and install my own custom software on.

3: Be vocal about privacy rights for everybody around the world.

Thank you for reading.

~ Stay Sovereign

~ Stay Diligent

~ Stay Harmonious

SOURCES:

Link to original tweet:

Apple CSAM support page:

https://www.apple.com/child-safety/

TSA article:

https://www.nbcnews.com/news/us-news/investigation-breaches-us-airports-allowed-weapons-through-n367851

Craig Federighi‘s response to the Wall Street Journal:

https://m.youtube.com/watch?v=OQUO1DSwYN0

Glad to hear you're escaping the land of proprietary, over-priced, BS :-)

I'm going for one of the Librem phones myself. https://puri.sm/products/librem-5/

And they have an [apparently] privatized SIM-card plan now as well. $100 a month for unlimited talk/text/data, coverage-by-contract like Google's thing, but they don't pass on any info they don't have to. (haven't done due diligence into this yet - to be clear) https://puri.sm/products/librem-awesim/
