The study was led by Michal Kosinski, a well-known professor at Stanford University, whose research "taught" an artificial intelligence algorithm to recognize, from the facial features in a photograph, whether a person is gay or heterosexual, with a reported accuracy of up to 91 percent for men and 83 percent for women.

"One of my obligations as a scientist is that if I know something that can potentially protect people from falling prey to such risks, I should publish it. Rejecting the results because you disagree with them ideologically ... you could be hurting the same people you care about," Kosinski said.

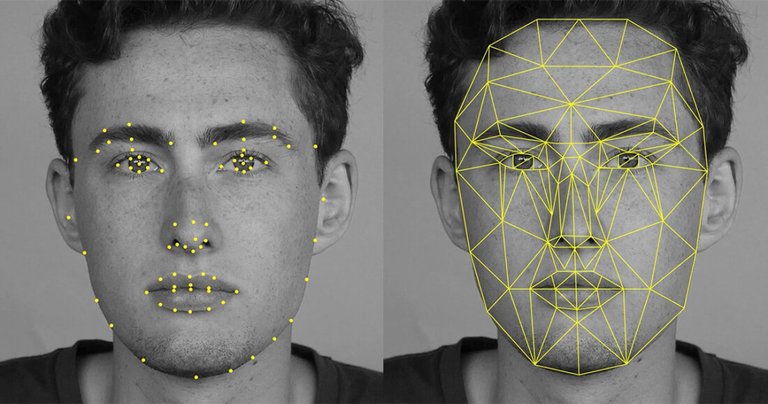

The researchers "trained" the AI on a database of 35,326 facial images of 14,776 people. They used a program called VGG-Face, which converts the facial features of a person into numbers, together with a system of "deep neural networks" that finds correlations between those facial features and the sexuality of the individuals through the mathematical analysis of a large amount of biometric data.

According to the research, homosexual men and women tend to have gender-atypical characteristics, such as different expressions and aesthetic features. In other words, gay men tend to have more feminine traits and gay women more masculine ones. The study also highlighted certain trends: homosexual men tended to have narrower jaws, longer noses, and larger foreheads than heterosexual men, while homosexual women tended to have more prominent jaws and smaller foreheads than heterosexual women.

The paper also theorizes that an individual's sexual orientation stems from exposure to certain hormones before birth, which would significantly influence both the development of specific facial traits and sexual orientation.

The controversy

These claims have created great controversy about the origin of sexual orientation and the ethical consequences that this technology could have for the public. Ashland Johnson, director of public education and research at the Human Rights Campaign (HRC), said in a statement:

"Imagine for a moment the potential consequences if this flawed research were used to support a brutal regime's efforts to identify and/or persecute people they believed to be gay."

"Stanford should steer clear of that junk science instead of giving its name and credibility to research that is dangerously flawed and leaves the world - and this case, millions of people's lives - worse and less secure than before."

Several experts on the subject have stated that the findings need to be interpreted with care, and that discussion and debate are needed to define the ethical and moral limits of this kind of research.

In my opinion, this type of technological advance will bring more problems than solutions. The study was built on a sample that excluded certain races and genders, which does not promise anything good. Even worse, if the technology falls into unscrupulous hands, it could greatly increase segregation by race and sexual orientation, based only on people's appearance. It is a delicate subject that deserves further analysis and consideration.

- http://www.abc.es/ciencia/abci-eres-o-heterosexual-maquina-puede-adivinarlo-foto-201709112227_noticia.html?utm_source=dlvr.it&utm_medium=twitter

- https://www.theguardian.com/world/2017/sep/08/ai-gay-gaydar-algorithm-facial-recognition-criticism-stanford

- https://www.theguardian.com/technology/2017/sep/07/new-artificial-intelligence-can-tell-whether-youre-gay-or-straight-from-a-photograph#img-1

- http://www.telegraph.co.uk/technology/2017/09/08/ai-can-tell-people-gay-straight-one-photo-face/
