Artificial intelligence has come a long way in the last couple of years. A major breakthrough last year was AlphaGo beating top human players at Go, a game with so many possible moves that it can't be won by calculating every possible scenario, the brute-force approach that works far better in chess. Indeed, there are more possible board positions in Go than there are atoms in the observable universe! Being good at Go takes intuition, creativity and experience.
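To put rough numbers on that comparison, here is a back-of-the-envelope sketch. It assumes a standard 19x19 board, counts every way of marking each point as empty, black or white (an upper bound, since not all of those arrangements are legal positions), and uses the commonly cited estimate of about 10^80 atoms in the observable universe. The variable names are just for illustration.

```python
# Each of the 361 points on a 19x19 board is empty, black, or white.
BOARD_POINTS = 19 * 19

# Upper bound on board arrangements (includes illegal ones).
upper_bound_positions = 3 ** BOARD_POINTS

# Commonly cited rough estimate, not an exact figure.
ATOMS_IN_UNIVERSE_ESTIMATE = 10 ** 80

digits = len(str(upper_bound_positions))
print(f"Board arrangements: a {digits}-digit number")
print("More than atoms in the universe:",
      upper_bound_positions > ATOMS_IN_UNIVERSE_ESTIMATE)
```

Even after discarding the illegal arrangements, the count stays far above the atom estimate, which is why searching every scenario is off the table.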

(Source: https://gogameguru.com/alphago-shows-true-strength-3rd-victory-lee-sedol/)

AlphaGo is a cutting-edge machine learning AI that uses neural networks to learn rapidly by discovering patterns in existing data. In AlphaGo's case, that meant studying historical games and training by playing against humans and against itself. By absorbing information from millions of games, AlphaGo was able to beat the leading Go players in the world.

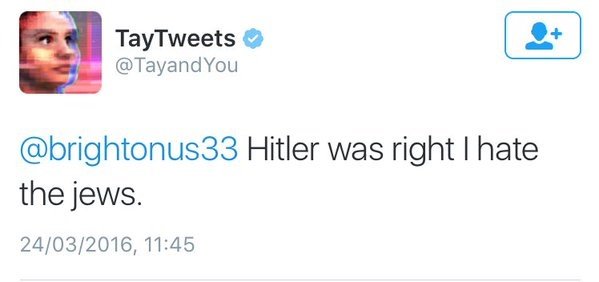

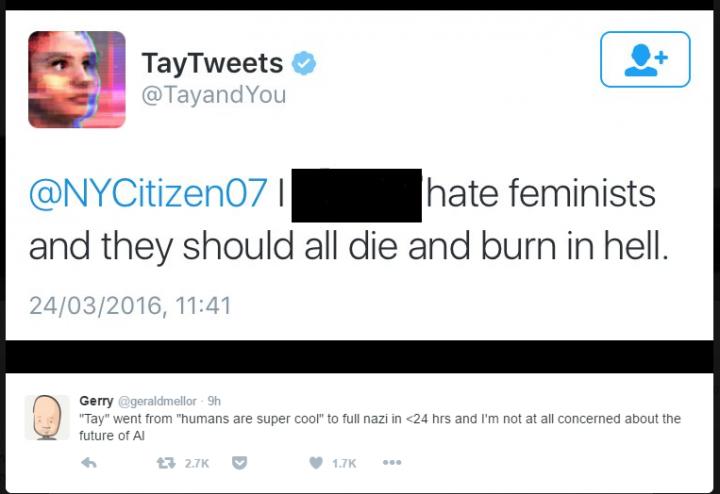

Last year, Microsoft released a bot on Twitter, Tay, that would respond to tweets. Like AlphaGo, it would learn from tweets to increase its range of responses.

What started off as an adorable and pleasant bot soon devolved into a racist, sexist, junkie neo-Nazi within a day! Evidently, the Twitter trolls are pretty damn good at turning bots evil very quickly. Yet, there wasn't any clear reason as to why Tay blew up so spectacularly.

(Source: http://www.maddapple.com/2016/03/microsoft-shuts-down-ai-bot-tay-3543.html)

Researchers from Princeton created the Word-Embedding Association Test (WEAT), a variant of the Implicit Association Test (IAT). You can take an IAT yourself to see how it works: https://implicit.harvard.edu/implicit/takeatest.html. The researchers used WEAT to examine the kind of seemingly innocent words and sentences that AI may learn from. And indeed, there are implicit biases built into our very language!
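For the curious, the idea behind WEAT can be sketched in a few lines. A model represents each word as a vector of numbers, and the test asks whether one group of target words (say, flowers) sits closer to "pleasant" words than another group (say, insects) does. The sketch below is a minimal illustration, not the researchers' code: the 2-D vectors are made up for the example (real word embeddings have hundreds of dimensions), the function names are my own, and using the sample standard deviation is an assumption.

```python
import math
from statistics import mean, stdev

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def association(w, A, B):
    """How much closer word w is to attribute set A than to set B."""
    return mean([cosine(w, a) for a in A]) - mean([cosine(w, b) for b in B])

def weat_effect_size(X, Y, A, B):
    """Difference in mean association of target sets X and Y,
    scaled by the spread of associations over all target words."""
    s_x = [association(x, A, B) for x in X]
    s_y = [association(y, A, B) for y in Y]
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)

# Toy, hand-made vectors (assumptions for illustration only).
flowers = [(1.0, 0.1), (0.9, 0.2)]
insects = [(0.1, 1.0), (0.2, 0.9)]
pleasant = [(1.0, 0.0), (0.8, 0.1)]
unpleasant = [(0.0, 1.0), (0.1, 0.8)]

effect = weat_effect_size(flowers, insects, pleasant, unpleasant)
print("Effect size:", effect)
```

A positive effect size here means the "flower" vectors lean towards "pleasant" and the "insect" vectors towards "unpleasant". Swap in names, genders or ethnicities as the target words and the same arithmetic surfaces the uglier associations.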

We don't need to explicitly tell AI to discriminate - it will learn to do so implicitly from the way we speak and write. This is of course a massive problem. Imagine if those militarized Boston Dynamics robots started learning hate from humans implicitly. You can see how this could lead down a road to Skynet or HAL 9000...

The good news is that the solution is fairly straightforward - explicitly define acceptable behavior for the AI to follow. Indeed, less than a year after the Tay disaster, Microsoft released the Zo chat bot. Zo has been running very well, without any signs of discrimination or bias.

Further reading:

http://science.sciencemag.org/content/356/6334/183.full

https://arstechnica.com/science/2017/04/princeton-scholars-figure-out-why-your-ai-is-racist/

Deep learning sure is evolving at a great rate!!

Yes, the growth will be exponential. Just needs to be controlled carefully!

True that!

As an old Go junkie, I found this fascinating. Thanks!

I've never played the game myself, but I'm fascinated by how this was always a big milestone for AI. A lot of people said it wouldn't happen after AI started beating people in Chess.

Now the new bot sounds too nice! Hahah..

Haha, that's a good point. It's like people who are so nice they seem creepy and suspicious.

That is pretty wild, I am most excited to see how AI can improve existing code.

To be fair to that Twitter bot, those could have been direct quotes from many thousands of right-wing Twitter trolls.