Survey of prominent researchers finds only 7.5% think Bostrom-style “superintelligence” will be achieved within 25 years

Source: psychesingularity

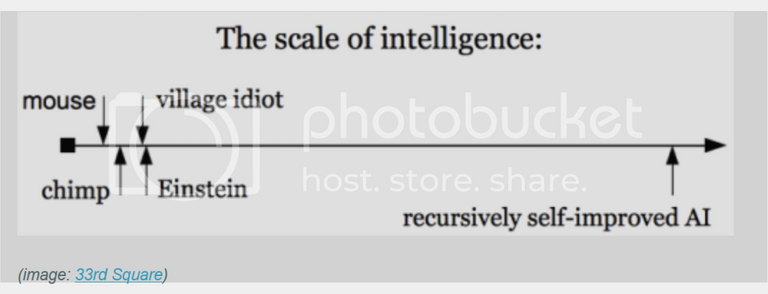

Nick Bostrom’s book Superintelligence: Paths, Dangers, Strategies could be important, if for no other reason than that the issue of building superintelligence will be with us for a long time, one way or the other. If he’s right, he will have been prescient. If not, superintelligence will remain a Cheshire cat that humanity will be chasing for the foreseeable future.

Here are some of the main issues raised in the book:

Assumption of Superintelligence (SAI). Bostrom largely skips over the question of what constitutes SAI. For instance, he says domain-specific machines are not SAI; only general intelligent machines are (p. 22). No general intelligent machines exist, and if he knew how to build one, he wouldn’t tell, for reasons he says he will make obvious later in the book (see “Superintelligence is deadly” below).

Bostrom’s aim is to discuss the implications of SAI, not whether SAI can actually be built. So, he assumes SAI will be built and then argues that that path will likely lead to oblivion.

Does Will Matter? Bostrom largely ignores the issues of will and consciousness. His dividing line is whether a machine is autonomous; if it is, it will make decisions based on its own motivations. Its motivation might be to become a “singleton”, a ruler of first Earth, then the solar system, then ???; or SAI might decide it is imperative to transform the Universe into paper clips.

Superintelligence is deadly. Bostrom presents a plethora of classifications of SAI and argues that however SAI is created, the result will be unpredictable and will almost certainly go bad: really, really bad. Here’s how one writer put it:

The book is dedicated to the control problem: how to keep future superintelligences under control. Of course, the superintelligences may not be inclined to comply. As Bostrom correctly notes, any level of intelligence may be compatible with any set of values. In particular, a superintelligence may have values that are incompatible with the survival of humanity.

Giulio Prisco, Thoughts on Bostrom’s ‘Superintelligence’

One quote from the book I thought pithy: “If we’re lucky they’ll treat us as pets; if not, they’ll treat us as food.” I don’t like the idea of being either pet or food. SAI would be alien intelligence. Given 1 year or 100 years, we have no idea what SAI would ponder. Humans could kibitz, but SAI wouldn’t really listen. Worse, SAI would be a really good liar.

What Do A.I. Scientists Think?

Bostrom starts from the premise that superintelligence is inevitable and then tries to imagine the unimaginable. He notes early in the book that much of what he says is probably wrong, but I think he’s just being humble. Most of what he says in the book is probably right, except I wonder if he isn’t wrong from the jump: can ‘autonomous’, conscious, or general self-directing SAI really be built? In other words, can we create a machine with some sort of will? So far, will has been a feature only of biological entities. Bostrom assumes ‘autonomous’ machines with will can and will be built.

Bostrom wrote the book to warn of the existential risks of SAI (and to cash in on the hype). We might see international summits on the wisdom of SAI in coming years. Most people would reject building SAI if you asked them whether they were willing to die for it. However, most practicing scientists in the area think the idea of SAI (artificial, general, self-motivating, greater-than-human intelligence) is fiction.

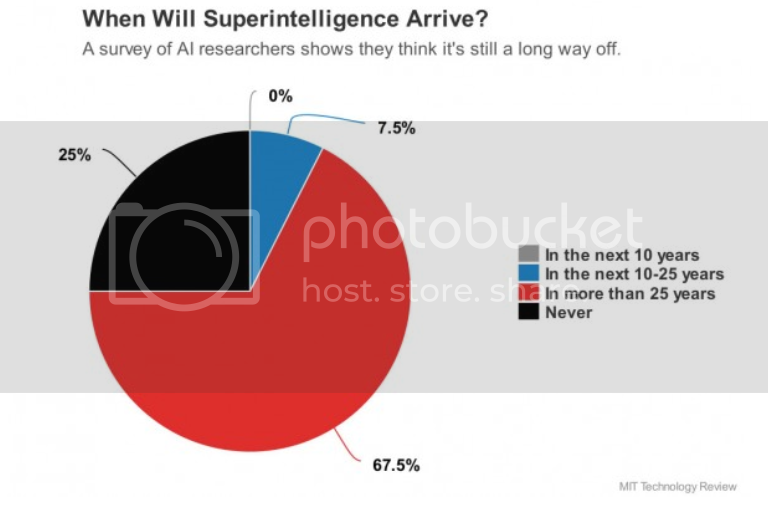

Just released is a survey of the Fellows of the Association for the Advancement of Artificial Intelligence (AAAI), a group of scientists recognized as leaders in the field. The question:

“In his book, Nick Bostrom has defined Superintelligence as ‘an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills.’ When do you think we will achieve Superintelligence?”

Oren Etzioni, Are the Experts Worried About the Existential Risk of Artificial Intelligence? in Technology Review

Here’s a pie chart of the results:

Only 7.5% said we’d have superintelligence within the next 25 years. Twenty-five years is a number usually taken to mean “too long for my career”. Fully 25% of these experts said “never”, which leaves 67.5% answering that it will take more than 25 years. So 92.5% don’t believe superintelligence is foreseeable, and some think it impossible. A similar poll was taken some years ago of attendees at an A.I. conference in Europe, with similar results.

As Etzioni points out, the basic problem is that researchers lack an adequate model of intelligence and consciousness with which to model the human mind (see, e.g., Consciousness Beyond Life for a discussion of the non-local nature of consciousness). Most A.I. researchers work on task-specific problems, coding machines to perform them. The idea that this sort of A.I. would lead to an autonomous intelligence is a bit ridiculous.

The consensus is that creating an SAI is 1) probably impossible without very large future breakthroughs, namely a true understanding of consciousness on which to build an emulation; and 2) an invitation to disaster. It’s probably a good thing that no one can create superintelligence, because humans would immediately and permanently become number 2 on the food chain. We would lose control of our destiny; in fact, our destiny would turn out to have been to create an SAI with inscrutable motives and be eclipsed by it.

Bye bye, humanity as we know it.

RedQ

P.S. Of course, even A.I. well short of 'superintelligence' is a big, big problem, to wit:

Of the roughly 10,000 researchers working on AI around the globe, only about 100 people – one percent – are fully immersed in studying how to address failures of multi-skilled AI systems. And only about a dozen of them have formal training in the relevant scientific fields – computer science, cybersecurity, cryptography, decision theory, machine learning, formal verification, computer forensics, steganography, ethics, mathematics, network security and psychology. Very few are taking the approach I am: researching malevolent AI, systems that could harm humans and in the worst case completely obliterate our species.

Roman V. Yampolskiy, Fighting malevolent AI—artificial intelligence, meet cybersecurity

Credit: shutterstock.com

There's a vast space between 25 years and never! I would've liked to see a more detailed breakdown. I mean, 26 years still seems very near for something as disruptive as AI.

Another great article from you, you just earned yourself another follower!

Thanks much! I desperately do need more followers . . .

Nice article here. I see a lot of people worried about advanced AI; it's good to see an article summarizing why our technology just isn't there and likely won't be for quite a while.

Kind words appreciated.

I worry about people who worry about AI, but I think they're a smaller group than those who can't wait for SAI. The latter see it as an easy path to all their dreams, a future in which they live without purpose in virtual reality and have no unmet desires.