[Image Source: guoguiyan]

Hey starlord here,

If we connect a series of artificial neurons into a network, we get an artificial neural network, or neural network for short. We can represent a neural network as a graph composed of a node for each neuron and an edge for each connection.

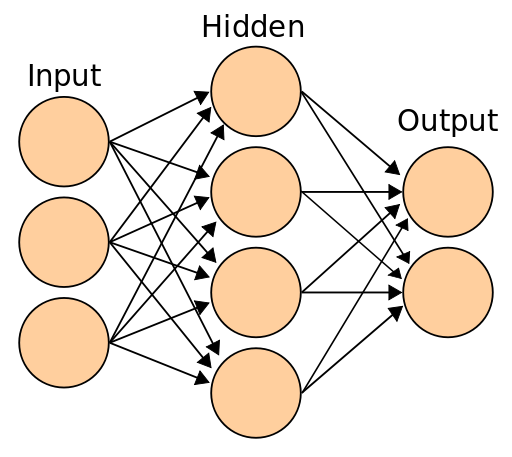

An artificial neural network is composed of an input layer, which the source data is fed into, an output layer, which produces a prediction, and one or more hidden layers in between. To train the weights of a neural network to make accurate predictions, we need to do a bunch of math.

[Image Source: ©Cburnett Wikimedia.CC-BY-SA-3.0 licensed]

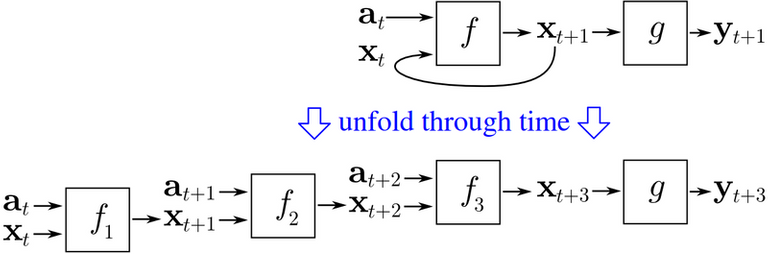

We’re going to skip over all of the math involved, but there are two main steps that we need to be aware of. First, we have a forward propagation step. During forward propagation, we use the network with its current parameters to compute a prediction for each example in our training dataset.

This involves all of the math that we’ve already seen so far. We use the known correct answer that a human provided to determine if the network made the correct prediction or not.
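To make the forward propagation step a little more concrete, here is a minimal sketch in Python with NumPy. The sigmoid activation, the layer sizes, and every number in it are assumptions picked for illustration; none of them come from a real trained network.

```python
import numpy as np

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W_hidden, b_hidden, W_out, b_out):
    """Compute the network's prediction for one input vector x."""
    hidden = sigmoid(W_hidden @ x + b_hidden)  # hidden layer activations
    return sigmoid(W_out @ hidden + b_out)     # output layer prediction

# Hypothetical, hand-picked parameters: 2 inputs -> 2 hidden neurons -> 1 output.
W_hidden = np.array([[0.5, -0.5], [0.3, 0.8]])
b_hidden = np.array([0.0, 0.1])
W_out = np.array([[1.0, -1.0]])
b_out = np.array([0.0])

x = np.array([1.0, 0.0])  # one training example
label = 1.0               # the known correct answer for that example

prediction = forward(x, W_hidden, b_hidden, W_out, b_out)[0]

# Treat outputs of 0.5 or more as "class 1" and compare with the label.
is_correct = (prediction >= 0.5) == (label == 1.0)
```

Here the prediction lands just above 0.5, so the network only barely gets this example right. Training is what pushes that number closer to the label.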

An incorrect prediction, which we refer to as a prediction error, will be used to teach the network to change the weights of its connections to avoid making prediction errors in the future.

Second, we have a backward propagation step, also known as backward propagation of errors, or more succinctly, backpropagation. In this step, we use the prediction error that we computed in the previous step to update the weights of the connections between neurons so that the network makes better predictions.

This is where all the complex calculus happens. We use a technique called gradient descent to decide whether to increase or decrease each individual connection’s weight, and we use a value called the learning rate to determine how much to increase or decrease the weights during each training step.
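As a rough illustration of one gradient descent update, here is a sketch for a single connection. It assumes a lone linear neuron (prediction = weight * x) and a squared-error loss, which is a deliberate simplification. The function name and all the numbers are made up for the example.

```python
def gradient_descent_step(weight, gradient, learning_rate):
    """Move the weight a small step against the gradient of the loss."""
    return weight - learning_rate * gradient

# Hypothetical single connection: prediction = weight * x.
x, label = 2.0, 4.0
weight = 1.0
learning_rate = 0.1

prediction = weight * x                   # 2.0, too low
loss_before = (prediction - label) ** 2   # squared prediction error

# Derivative of (weight * x - label)^2 with respect to weight.
gradient = 2.0 * (prediction - label) * x

# The gradient is negative here, so the step increases the weight.
new_weight = gradient_descent_step(weight, gradient, learning_rate)
loss_after = (new_weight * x - label) ** 2
```

The sign of the gradient tells us which direction to move the weight, and the learning rate scales how far we move. With these numbers the weight goes from 1.0 to 1.8 and the loss drops.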

[By Headlessplatter wikimedia.Public Domain]

Essentially, we need to increase the strength of the connections that assisted in predicting correct answers and decrease the strength of the connections that led to incorrect predictions. We repeat this process for each training sample in the training dataset, and then we repeat the whole process many times until the weights of the network become stable.
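The repeat-for-every-sample, repeat-many-times idea can be sketched as a small training loop. To keep it short, this assumes a single sigmoid neuron learning the logical OR function rather than a full multi-layer network, and it runs a fixed number of passes as a stand-in for "until the weights become stable". The dataset, learning rate, and number of passes are all illustrative choices.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Tiny training dataset: inputs and the known correct answers for logical OR.
inputs = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
labels = np.array([0.0, 1.0, 1.0, 1.0])

weights = np.zeros(2)
bias = 0.0
learning_rate = 0.5
epochs = 1000  # assumed number of passes over the whole dataset

for _ in range(epochs):
    for x, label in zip(inputs, labels):
        prediction = sigmoid(weights @ x + bias)  # forward propagation
        error = prediction - label                # prediction error
        # For a sigmoid neuron with cross-entropy loss, the gradient for
        # each weight is error * input, and for the bias it is error.
        weights -= learning_rate * error * x
        bias -= learning_rate * error

predictions = sigmoid(inputs @ weights + bias)
predicted_labels = (predictions >= 0.5).astype(int).tolist()
```

After training, the neuron should answer 0 for the input (0, 0) and 1 for the other three inputs.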

When we’re finished, we have a network that’s tuned to make accurate predictions based on all the training data that it’s seen. However, because we’re working with computers rather than a biological brain, we represent this network of nodes and edges using much more computationally efficient data structures, such as vectors, matrices, and tensors.

A vector is a one-dimensional array of values, a matrix is a two-dimensional array, and a tensor is an array with an arbitrary number of dimensions. For example, a three-dimensional array is called a third-order tensor, since it’s an array with three dimensions.
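Here is a quick sketch of these data structures using NumPy arrays. The values and shapes are arbitrary and only there to show the number of dimensions.

```python
import numpy as np

vector = np.array([1.0, 2.0, 3.0])           # 1 dimension
matrix = np.array([[1.0, 2.0], [3.0, 4.0]])  # 2 dimensions
tensor3 = np.zeros((2, 3, 4))                # 3 dimensions: a third-order tensor

# ndim reports how many dimensions (the "order") each array has.
orders = [vector.ndim, matrix.ndim, tensor3.ndim]
```

Under this view, a vector and a matrix are simply tensors of order one and two.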

TENSORFLOW: As you might imagine, this is where the deep learning framework TensorFlow gets its name. It’s a framework for modelling the flow of data through a computational graph, like a neural network, using tensors to store the calculated values.

In my next chapter, we will put all these concepts together to talk about deep neural networks.

Thanks for reading and learning

References for further reading:

- "Artificial Neural Networks as Models of Neural Information Processing" | Frontiers Research Topic

- "Artificial neural network" | Wikipedia

All images are CC0 Licensed and are linked to their sources

