This series aims to provide a thorough grounding in the science of machine learning using neural networks. These posts will explain the maths and concepts needed to work with neural networks from the ground up, whilst avoiding being overly technical where possible. My hope is to provide a great beginner's guide to neural networks for anyone with some basic high school maths. Let's jump in with some background on these mysterious beasts, starting with their humble beginnings and the many ups and downs they have seen since.

Computers are an immensely powerful tool for executing strict, well-defined tasks extremely quickly. Computing power keeps increasing (roughly in line with Moore's Law), and with this growing computational capability, computers have become an essential part of modern society, performing tasks ranging from automated stock market trading to managing our health records, auto-piloting airplanes carrying millions of passengers annually, and connecting the world through the internet.

However, a growing range of applications require computers to perform more abstract tasks that are not easily defined, or may not be definable at all, using the strict logical rules that traditional programming paradigms allow. Increasingly, the ability of computers to reason about unstructured data in an attempt to understand the world and their environments (which follow probabilistic rather than deterministic rules) is becoming a requirement if computers are to broaden their societal reach.

The field of artificial intelligence attempts to provide the means for computer systems to perceive and understand their environment, so that they may take actions that maximize their chance of success in some task. Areas of artificial intelligence research include artistic content creation, natural language processing, and computer vision.

Machine learning (ML) techniques are currently a flourishing area of research. Artificial neural networks (ANNs) in particular have been the primary focus of many research efforts in the field of artificial intelligence (AI), with convolutional neural networks (CNNs) being used very successfully to produce state-of-the-art results in image classification tasks. More specifically, in recent years ANN topologies containing many layers, termed deep neural networks (DNNs), have seen heavy use in a range of tasks, from speech recognition to outperforming world-class players at the abstract board game Go.

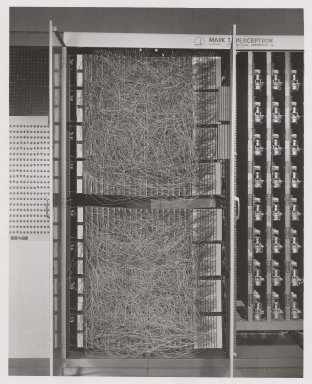

The basic concept of an ANN dates back to 1943, when McCulloch and Pitts introduced the first artificial neuron, called a Threshold Logic Unit (TLU), as a computational unit directly inspired by neurobiology research. The McCulloch-Pitts neuron allowed researchers to show that, given a sufficiently large array of these neurons, each with carefully chosen synapse weights, it was possible to implement an arbitrary logical function. In 1949, Donald Hebb introduced the concept of Hebbian learning as a model of how neurons in the brain learn by reinforcement, which raised the possibility of networks of neurons learning a mapping function rather than having their weights hard-coded by hand. In 1958, Rosenblatt implemented a single-layer array of neurons that could learn a function to perform classification tasks, and in doing so created the first practical ANN. His learning algorithm, known as the perceptron, uses supervised learning to train neuron weights to perform binary classification: in his original application, Rosenblatt classified 20x20 pixel input images as either belonging to one particular class or not (e.g. whether the picture was of a cat or not). The Mark 1 Perceptron hardware implementation is shown in the image below. The network weights were stored in the potentiometers (variable resistors) on the right-hand side of the picture.

In 1969, Minsky and Papert published their book Perceptrons, showing that a single-layer perceptron could not learn the XOR function (or indeed any function whose classes are not linearly separable). This cast doubt on the general utility of neural networks, and by the early 1970s funding for neural network research had dried up.
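The perceptron learning rule and its XOR limitation are easier to see in a few lines of code. The following is a minimal Python sketch, not Rosenblatt's original implementation: it assumes binary inputs, a hard step activation, weights initialised to zero and a learning rate of 1, and the function names (`tlu`, `predict`, `train_perceptron`) are hypothetical. `tlu` uses hand-chosen weights and a threshold, in the spirit of the McCulloch-Pitts neuron, while `train_perceptron` learns its weights from labelled examples.

```python
# Illustrative sketch only: a simplified threshold logic unit and a perceptron
# trained with the classic update rule. Assumes binary inputs and a step activation.


def tlu(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of the inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def predict(inputs, weights, bias):
    """Perceptron output: the same step function, with the threshold folded into a bias."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


def train_perceptron(samples, max_epochs=100, lr=1):
    """Nudge the weights towards each misclassified sample until none remain.

    Returns (weights, bias, converged)."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in samples:
            error = target - predict(inputs, weights, bias)  # -1, 0 or +1
            if error:
                errors += 1
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
        if errors == 0:  # a full pass with no mistakes: the data is separated
            return weights, bias, True
    return weights, bias, False


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [(x, x[0] & x[1]) for x in INPUTS]
XOR = [(x, x[0] ^ x[1]) for x in INPUTS]

# Hand-chosen weights: AND fires only when both inputs are on...
print([tlu(x, (1, 1), threshold=2) for x in INPUTS])  # [0, 0, 0, 1]
# ...and lowering the threshold turns the same unit into OR.
print([tlu(x, (1, 1), threshold=1) for x in INPUTS])  # [0, 1, 1, 1]

print(train_perceptron(AND))     # ([2, 1], -2, True)
print(train_perceptron(XOR)[2])  # False
```

Training on AND stops after a few epochs because a single straight line can separate its outputs. For XOR no such line exists, so the rule keeps making corrections until it runs out of epochs and reports that it never converged.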

Rumelhart et al. brought about a resurgence of interest in neural networks in 1986 when they applied the backpropagation algorithm to networks with multiple hidden layers, allowing much more complicated network architectures to be trained successfully and much harder tasks to be tackled. In particular, research into CNNs has accelerated rapidly ever since Yann LeCun et al. showed in 1989 how backpropagation could be used to train them. In 2006, a research team at the University of Toronto led by Geoffrey Hinton ushered in the modern wave of neural network research by presenting a greedy layer-wise pre-training algorithm that allowed DNNs to be trained efficiently. Research into neural networks and deep learning subsequently skyrocketed, as DNNs were quickly shown to outperform competing approaches in a range of tasks. In 2012, history was made when Alex Krizhevsky et al. became the first team to win the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) using a DNN (called AlexNet), and they did so by a large margin: their DNN achieved a top-5 error rate of 15.3%, compared with 26.2% for the second-best entry. The competition has been won by a DNN architecture every year since 2012.

New papers on neural networks are being made available seemingly every second day, with powerhouses like the Google Brain team making innovations and new discoveries at a blistering pace. Clearly, neural networks are a big deal, and will be a continuing growth area in the future. So what better time to jump on board the bandwagon than right now?

Stay tuned for the next post, where I'll cover some of the fundamental building blocks of neural networks and start building the knowledge needed to become an ML expert in neural nets.

About me: I've recently finished my Bachelor's degree in Electronic Engineering, with my thesis focussed on a specific type of neural network called binary neural networks (BNNs). In September, I'll be starting research for a Master's degree in machine learning hardware. I enjoy teaching difficult subjects to people who think they aren't capable of understanding them, by breaking the subjects right down to their basic building blocks and building them back up again. We're all just big neural networks after all; we just need the right training data.
