This is Part-2 of the series: The truth about Artificial Intelligence (AI).

For a better understanding, please start with Part-1.

Part-1 is HERE

Part-2: The holy grail of intelligence

At the end of the 1980s, a few researchers were in favor of a completely new approach to artificial intelligence based on robotics.

Think of a robot. A robot is a container for AI, sometimes mimicking the human form, sometimes not, but the AI itself is the computer inside the robot. AI is the brain, and the robot is its body, if it even has a body. So the question we should be asking is: is it even possible to build a machine capable of intelligent processing?

Even today, many people consider it an open question whether a machine can think. The precise definition of “think” matters, because there has been strong disagreement over whether machine thought is even possible. To clear the fog, we should start with the biological brain itself.

The brain’s neurons max out at around 200 Hz, while today’s microprocessors (which are much slower than they will be when we reach AGI) run at 2 GHz, or 10 million times faster than our neurons. And the brain’s internal communications, which can move at about 120 m/s, are horribly outmatched by a computer’s ability to communicate optically at the speed of light. But what outperforms everything else is the brain’s efficiency: the human brain has approximately 86 billion neurons and hundreds of trillions of synapses, all running on just 20 to 25 watts of power.
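To make those ratios concrete, here is a quick back-of-the-envelope check in Python. It only uses the figures quoted above, plus the speed of light in a vacuum as an assumed upper bound for optical communication (real fiber is somewhat slower). The variable names are just illustrative.

```python
# Figures quoted in the paragraph above
NEURON_MAX_HZ = 200              # peak neuron firing rate
CPU_CLOCK_HZ = 2e9               # a 2 GHz microprocessor
BRAIN_SIGNAL_M_S = 120           # speed of the brain's internal communications

# Assumption: speed of light in a vacuum, used as an upper bound
# for optical links (light in glass fiber travels slower than this)
LIGHT_SPEED_M_S = 299_792_458

clock_ratio = CPU_CLOCK_HZ / NEURON_MAX_HZ
signal_ratio = LIGHT_SPEED_M_S / BRAIN_SIGNAL_M_S

print(f"Clock speed advantage:  {clock_ratio:,.0f}x")
print(f"Signal speed advantage: {signal_ratio:,.0f}x")
```

The clock comparison comes out at exactly 10 million, and the signal comparison at roughly 2.5 million under the vacuum assumption.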

Well, that is possible because the brain is the latest result of nearly 4 billion years of evolution on planet Earth. Scientists are working hard on reverse engineering the brain to figure out how evolution made such a remarkable thing; optimistic estimates say we can do this by 2030. Once we do that, we’ll know all the secrets of how the brain runs so powerfully and efficiently, and we can draw inspiration from it and steal its innovations.

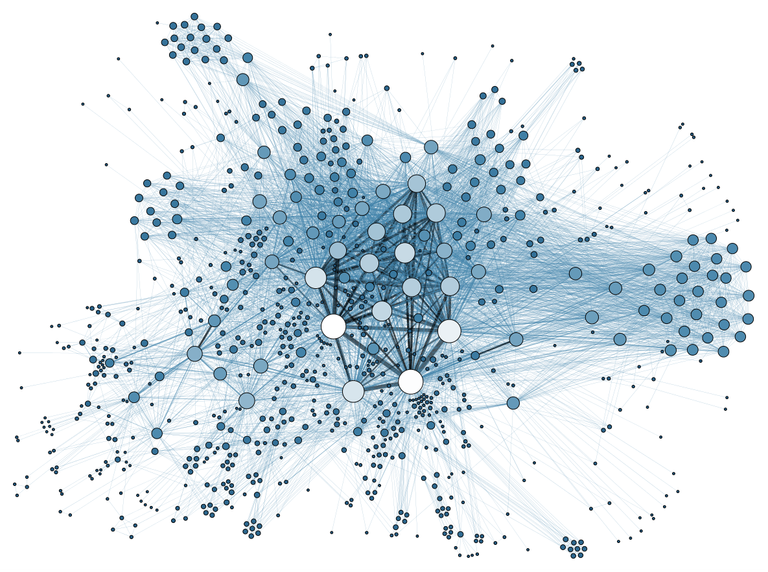

One example of computer architecture that mimics the brain is the artificial neural network. It starts out as a network of transistor “neurons,” connected to each other with inputs and outputs, and it knows nothing—like an infant's brain. The way it “learns” is it tries to do a task, say handwriting recognition, and at first, its neural firings and subsequent guesses at deciphering each letter will be completely random. But when it’s told it got something right, the transistor connections in the firing pathways that happened to create that answer are strengthened; when it’s told it was wrong, those pathways’ connections are weakened.

After a lot of this trial and feedback, the network has, by itself, formed smart neural pathways and the machine has become optimized for the task. The brain learns a bit like this but in a more sophisticated way, and as we continue to study the brain, we’re discovering ingenious new ways to take advantage of neural circuitry.
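The trial-and-feedback loop described above can be sketched with a single artificial neuron. This is only a toy, not how any real handwriting recognizer is built: it uses the classic perceptron rule (a swapped-in simplification that only adjusts connections after a wrong guess) and learns the logical AND of two inputs instead of letters. The function names, the learning rate, and the AND task are all assumptions chosen for illustration.

```python
import random

def predict(weights, bias, inputs):
    """The neuron 'fires' (returns 1) if its weighted input sum is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(samples, lr=0.1, max_epochs=1000, seed=0):
    """Start with random connections, then adjust them after every wrong guess."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in samples[0][0]]
    bias = rng.uniform(-1, 1)
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # 0 when correct
            if error != 0:
                mistakes += 1
                # Strengthen or weaken each connection that took part in the guess
                for i, x in enumerate(inputs):
                    weights[i] += lr * error * x
                bias += lr * error
        if mistakes == 0:  # a full pass with no wrong guesses: stop training
            break
    return weights, bias

# Toy task (assumption): output 1 only when both inputs are 1
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

Nothing in the code "knows" what AND means. The correct behavior emerges purely from repeated feedback on its guesses, which is the point the paragraphs above are making.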

We’ve moved from computers with a trillionth of the power of a human brain to computers with a billionth of the power. Then a millionth. And now a thousandth. Along the way, computers progressed from ballistics to accounting to word processing to speech recognition, and none of that really seemed like progress toward artificial intelligence. That’s because even a thousandth of the power of a human brain is—let’s be honest—a bit of a joke. Sure, it’s a billion times more than the first computer had, but it’s still not much more than the computing power of a hamster.

The main advances over the past sixty years have been advances in search algorithms, machine learning algorithms, and integrating statistical analysis into understanding the world at large. However, most of the breakthroughs in AI aren’t noticeable to most people. Rather than talking machines used to pilot spaceships to Jupiter, AI is used in more subtle ways, such as examining purchase histories and influencing marketing decisions.

Cars are full of ANI (artificial narrow intelligence) systems, from the computer that figures out when the anti-lock brakes should kick in to the computer that tunes the parameters of the fuel injection systems. Tesla introduced its Autopilot feature with a great deal of fanfare. And the field is growing rapidly.

Your phone is a little ANI factory. Your email spam filter is a classic type of ANI: it starts off loaded with intelligence about how to figure out what’s spam and what’s not, and then it learns and tailors its intelligence to you as it gets experience with your particular preferences. The world’s best Checkers, Chess, Scrabble, Backgammon, and Othello players are now all ANI systems. Google search is one large ANI brain with incredibly sophisticated methods for ranking pages and figuring out what to show you in particular. The same goes for Facebook's Newsfeed.
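To give a feel for the spam filter idea (start off loaded with some knowledge, then tailor it to one user), here is a minimal sketch of a word-counting filter in the naive Bayes style. Real mail providers use far more elaborate systems; the class name `TinySpamFilter`, the seed messages, and the scoring details are all assumptions made up for this illustration.

```python
import math
from collections import Counter

class TinySpamFilter:
    """Toy naive-Bayes-style filter: seeded with examples, then updated by feedback."""

    def __init__(self, seed_spam, seed_ham):
        self.counts = {"spam": Counter(), "ham": Counter()}  # word counts per label
        self.totals = {"spam": 0, "ham": 0}                  # messages seen per label
        for text in seed_spam:
            self.learn(text, "spam")
        for text in seed_ham:
            self.learn(text, "ham")

    def learn(self, text, label):
        """Record one labeled message (this is the 'gets experience' step)."""
        self.counts[label].update(text.lower().split())
        self.totals[label] += 1

    def score(self, text, label):
        """Log-probability of the message under a label, with add-one smoothing."""
        vocab = set(self.counts["spam"]) | set(self.counts["ham"])
        n_words = sum(self.counts[label].values())
        logp = math.log(self.totals[label] / sum(self.totals.values()))
        for word in text.lower().split():
            logp += math.log((self.counts[label][word] + 1) / (n_words + len(vocab) + 1))
        return logp

    def is_spam(self, text):
        return self.score(text, "spam") > self.score(text, "ham")

# Made-up seed data: the knowledge the filter "starts off loaded with"
spam_filter = TinySpamFilter(
    seed_spam=["win free money now", "free prize click now"],
    seed_ham=["meeting agenda for monday", "lunch on friday"],
)

before = spam_filter.is_spam("newsletter digest weekly")
# The user flags one message as spam, so the filter tailors itself to them
spam_filter.learn("weekly newsletter digest offer", "spam")
after = spam_filter.is_spam("newsletter digest weekly")
print("flagged before feedback:", before)
print("flagged after feedback: ", after)
```

The same message is treated differently once the user has given feedback, which is the "tailors its intelligence to you" behavior in miniature.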

We’ve just been limited by the fact that computers still aren’t quite muscular enough to finish the job. That’s changing rapidly, though. Computing power is measured in calculations per second (a.k.a. floating-point operations per second, or “flops”), and the best estimates of the human brain suggest that our own processing power is about equivalent to 10 petaflops. (“Peta” comes after Giga and Tera.) That’s a lot of flops, but in 2012 an IBM Blue Gene/Q supercomputer at Lawrence Livermore National Laboratory was clocked at 16.3 petaflops.

For more:

https://towardsdatascience.com/ai-writes-the-history-of-artificial-intelligence-4d585b537498

https://courses.cs.washington.edu/courses/csep590/06au/projects/history-ai.pdf

https://searchenterpriseai.techtarget.com/definition/machine-learning-ML

https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html

If you have any questions, feel free to ask.