Been hacking on a latent semantic analysis architecture for determining semantic similarity between a corpus and a new document, but I can't seem to get things to function in the direction that I want.

It's easy to take a new document and find the most similar document in the corpus, but it is extremely difficult to take a new document and determine its degree of similarity to the corpus as a whole. Considering that is exactly what I want to do, this is a little problematic.

(Sure, I could decide that the nearest document represents the corpus as a whole, but the problems with that approach are immediately obvious. So I need to figure out a way to generate a single eigenvector that represents the set as a whole. Perhaps some sort of matrix average of all the eigenvectors which are described by the documents in the corpus. This may require some thought.)
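One simple reading of that "matrix average" is the centroid: the mean of the document vectors (each document's coordinates in the reduced LSA space, which this post calls eigenvectors), compared against a new document by cosine similarity. This is a minimal sketch, assuming the document vectors have already been computed. The toy coordinates below are made up for illustration.

```python
import numpy as np

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    na, nb = np.linalg.norm(a), np.linalg.norm(b)
    if na == 0 or nb == 0:
        return 0.0
    return float(np.dot(a, b) / (na * nb))

def corpus_centroid(doc_vectors: np.ndarray) -> np.ndarray:
    """Mean of row-normalized document vectors (one row per document).

    Normalizing first keeps documents with larger LSA coordinates
    (often the longer ones) from dominating the average.
    """
    norms = np.linalg.norm(doc_vectors, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # leave all-zero rows as zeros instead of dividing by 0
    return (doc_vectors / norms).mean(axis=0)

# Toy 2-D LSA coordinates for three corpus documents (illustrative values only).
corpus = np.array([[0.9, 0.1], [0.8, 0.3], [0.7, 0.2]])
centroid = corpus_centroid(corpus)

new_doc = np.array([0.85, 0.2])
print(round(cosine(new_doc, centroid), 3))
```

A centroid only summarizes the corpus well when the documents form a single cluster. If they don't, the average can land in a region where no actual document lives.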

I've played around with using an n-gram extractor to tokenize the content rather than a word-based solution, but that just gives me a new view of lexical similarity, which isn't what I want at all. Well, it might be what I want; I don't have enough information to make that decision quite yet. Exploring the vector space that describes all of the documents that I have uploaded in the last 60 days is surprisingly disappointing. The features which keep getting called out are relatively common words rather than things which I would imagine to be actually useful.
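Character n-grams tend to measure surface overlap rather than meaning. The sketch below illustrates this by comparing words through the Jaccard overlap of their character trigram sets. It is a minimal illustration, not the post's actual extractor. The function names and n=3 are assumptions.

```python
def char_ngrams(text: str, n: int = 3) -> set[str]:
    """Set of overlapping character n-grams, with spaces padding the ends."""
    padded = f" {text.lower()} "
    return {padded[i:i + n] for i in range(len(padded) - n + 1)}

def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection over size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

print(jaccard(char_ngrams("vaccine"), char_ngrams("vaccines")))
print(jaccard(char_ngrams("vaccine"), char_ngrams("immunization")))
```

"vaccine" and "vaccines" share six of nine distinct trigrams. "vaccine" and "immunization" share none, even though they are closely related in meaning.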

Which just generally makes me concerned given what I know of the entropic measure of the quality of some of the words it's calling out. I have the creepy feeling that I'm doing something wrong. That's never good.
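One common entropy-based measure here is the global weight used in log-entropy weighting for LSA. A term spread evenly across all documents carries little information, while a term concentrated in a few documents carries a lot. This is a minimal sketch, assuming documents are already tokenized into word lists. It computes 1 minus the normalized entropy of each term's distribution across documents, so values near 0 flag words that are probably noise. The toy documents are made up.

```python
import math
from collections import Counter

def entropy_weights(docs: list[list[str]]) -> dict[str, float]:
    """Map each term to 1 - H/log(n), where H is the entropy of its spread over docs.

    Near 0.0: the term is spread evenly over the documents (weak discriminator).
    1.0: the term occurs in a single document (strong discriminator).
    """
    n = len(docs)
    counts = [Counter(doc) for doc in docs]
    global_freq = Counter()
    for c in counts:
        global_freq.update(c)
    weights = {}
    for term, gf in global_freq.items():
        h = 0.0
        for c in counts:
            tf = c.get(term, 0)
            if tf:
                p = tf / gf  # share of this term's occurrences in this document
                h -= p * math.log(p)
        weights[term] = 1.0 - h / math.log(n) if n > 1 else 1.0
    return weights

docs = [
    "the miner found the block".split(),
    "the vaccine trial ended".split(),
    "the block reward halved".split(),
]
w = entropy_weights(docs)
for term in ("the", "block", "vaccine"):
    print(term, round(w[term], 3))
```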

And now the database server I'm using to pull data off of seems to be down. Fantastic. Either that or they've become tired of me hitting it for more data and blocked me out. Hard to tell. I would be the last one to begrudge them that decision, if that were so.

And this is what I do for fun. Man, I clearly have some real problems.

I use this list to filter out common words: https://github.com/evanmarshall/steemit-cloud/blob/master/src/ignored-words.js

Thanks, though at this point I think that beyond a very basic handful, I'm simply going to let the corpus decide what words are not significant enough indicators to be useful guides.

If the SVD can't figure out at least that much, it really has no hope of determining what words are useful enough to be used as discriminators, after all.
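Letting the corpus decide can be as simple as dropping any term that appears in more than some fraction of documents. That list can optionally be unioned with a very basic hand-picked handful, which is the kind of prior the next reply argues for. This is a minimal sketch. The 0.5 cutoff and the `BASIC_HANDFUL` seed list are hypothetical placeholders, not values from the post.

```python
from collections import Counter

BASIC_HANDFUL = frozenset({"a", "an", "the"})  # hypothetical minimal seed list

def corpus_stopwords(docs: list[list[str]], max_df: float = 0.5,
                     seed: frozenset[str] = BASIC_HANDFUL) -> set[str]:
    """Terms found in more than max_df of the documents, plus the seed list."""
    doc_freq = Counter()
    for doc in docs:
        doc_freq.update(set(doc))  # count each term at most once per document
    n = len(docs)
    frequent = {t for t, df in doc_freq.items() if df / n > max_df}
    return frequent | seed

docs = [
    "so the miner found a block".split(),
    "so the vaccine trial ended".split(),
    "so a block reward halved".split(),
    "so what now".split(),
]
stop = corpus_stopwords(docs)
filtered = [[t for t in doc if t not in stop] for doc in docs]
print(sorted(stop))
print(filtered)
```

Setting `seed=frozenset()` gives the purely corpus-driven version.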

I don't necessarily agree with that. My linear algebra isn't great but in probabilistic terms, if you know a solid prior, you should apply it to your model so it has to do less work.

In this case I literally want it to do the work to prove that it can do the work.

At least on a first pass.

If it can't do that much, if it can't at least determine what the least useful things are – we need another method. Maybe a smarter method, maybe a dumber method, but a method that can actually determine something fairly elemental.

Who knows? It may be that the frequency or offset of what we would normally think of as junk words is actually useful in making some sort of determination. Complex discovery like this isn't a finished science quite yet.

Though I may take a break and just try to put together something based on some sort of limited spreading-activation energy friend network thing. I am way better with graph theory than I am with this stuff off the cuff.
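A limited spreading-activation pass over a follow graph works like this: start with all the energy on one account, pass a decayed share to neighbors on each step, and stop after a fixed number of hops or once pulses fall below a threshold. This is a minimal sketch of the general technique, not anything the author built. The graph, account names, decay, and threshold are all hypothetical.

```python
from collections import defaultdict

def spread_activation(graph: dict[str, list[str]], source: str,
                      decay: float = 0.5, max_hops: int = 3,
                      threshold: float = 0.01) -> dict[str, float]:
    """Accumulated activation per node after a limited spreading-activation pass.

    On each hop, every active node splits decay * its energy evenly among its
    out-neighbors. Pulses smaller than threshold are dropped, and propagation
    stops after max_hops.
    """
    activation = defaultdict(float)
    activation[source] = 1.0
    frontier = {source: 1.0}
    for _ in range(max_hops):
        next_frontier = defaultdict(float)
        for node, energy in frontier.items():
            neighbors = graph.get(node, [])
            if not neighbors:
                continue
            share = energy * decay / len(neighbors)
            if share < threshold:
                continue
            for nb in neighbors:
                next_frontier[nb] += share
        for node, energy in next_frontier.items():
            activation[node] += energy
        frontier = next_frontier
        if not frontier:
            break
    return dict(activation)

# Hypothetical follow graph: who each account follows.
follows = {
    "me": ["alice", "bob"],
    "alice": ["carol"],
    "bob": ["carol", "dave"],
    "carol": ["erin"],
}
scores = spread_activation(follows, "me")
print(sorted(scores.items(), key=lambda kv: -kv[1]))
```

Accounts reachable through several paths, like `carol` here, accumulate more energy than accounts reachable through only one path.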

Unfortunately, I don't think there are too many people working on this sort of thing in the steem space, so I guess everyone is stuck with me.

Just trying to understand, it looks like you want to perform a kind of summarization of the document with the extraction of "topics" followed by a classification of the document according to the set of "topics" in the corpus.

I actually don't care about topics in common usage. "Topics" is the term that gensim uses to refer to each dimension of differentiation in the vector space of the abstraction which summarizes each individual document.

Essentially, I want to create a high dimensional vector which describes/summarizes/distills the documents into something that can be given a distance. Each eigenvector is really a descriptive point in high dimensional space where that document is located.

I'm trying to figure out, given a set of documents, handed a brand-new document that's never been seen before, how far is that document from the cloud of points which represent the documents I already have?
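One way to turn "how far is this document from the cloud" into a number, without collapsing the cloud to a single average, is to take the mean cosine distance to the k nearest corpus documents. This is a minimal sketch, assuming the corpus and the new document are already vectors in the same reduced space with no all-zero rows. k=2 and the toy vectors are illustrative.

```python
import numpy as np

def knn_cloud_distance(corpus: np.ndarray, query: np.ndarray, k: int = 2) -> float:
    """Mean cosine distance from query to its k nearest rows of corpus."""
    corpus_n = corpus / np.linalg.norm(corpus, axis=1, keepdims=True)
    query_n = query / np.linalg.norm(query)
    distances = 1.0 - corpus_n @ query_n  # cosine distance to every document
    k = min(k, len(distances))
    nearest = np.sort(distances)[:k]
    return float(nearest.mean())

corpus = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
print(knn_cloud_distance(corpus, np.array([1.0, 0.05])))  # near two corpus documents
print(knn_cloud_distance(corpus, np.array([-1.0, 0.0])))  # far from every document
```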

Ultimately, it's intended to be a lens through which you can look at posts to the steem blockchain and get an estimation of how likely any individual post is to be something that you would be interested in upvoting/reading.

Essentially I'm trying to run a classification system in reverse. That makes it an interesting problem.

OK, I see. That would be useful; there's a lot of noise to filter out.

Summarizing the documents is probably the difficult part: getting a representation that is small enough, and accurate enough, to filter on.
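For "small and accurate enough", one common heuristic for choosing the number of LSA dimensions is to keep the smallest k whose top singular values retain a chosen share of the total squared singular-value energy. This is a minimal sketch using NumPy's SVD. The 0.9 target and the random matrix are placeholders, and in practice k is often tuned on retrieval quality instead.

```python
import numpy as np

def choose_rank(term_doc: np.ndarray, energy: float = 0.9) -> int:
    """Smallest k whose top-k singular values keep `energy` of sum(s**2)."""
    s = np.linalg.svd(term_doc, compute_uv=False)
    retained = np.cumsum(s ** 2) / np.sum(s ** 2)
    k = int(np.searchsorted(retained, energy) + 1)  # first index reaching the target
    return min(k, len(s))  # guard against rounding when energy is close to 1.0

rng = np.random.default_rng(0)
# Rank-3 signal plus a little noise: 50 terms x 20 documents.
signal = rng.random((50, 3)) @ rng.random((3, 20))
term_doc = signal + 0.01 * rng.random((50, 20))
print(choose_rank(term_doc, 0.9))
```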

One of the big problems with Steemit as I see it is the fact that trying to find content that you're interested in is like sipping from a fire hose. One directed straight into your face.

My current plan, at least in this sketch code, is to fetch the text of everything that you've uploaded over the last time period (currently 30 days), tokenize it, create a set of eigenvectors which describe all of those documents, work some magic to determine an average eigenvector, and then take any post to the blockchain, process it through the same vector space, and see how close it is to the things that you've liked. If it's over some arbitrary threshold, let you know about it.
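That plan can be sketched end to end with NumPy alone. The steps are: build a term-document count matrix from the liked posts, take a truncated SVD, and fold a new post into the same space with the standard LSA fold-in (project its term counts onto U_k and divide by the singular values). The result is then compared with the centroid of the liked posts. This is a minimal sketch. Fetching posts from the blockchain is left out, and the whitespace tokenizer, k=2, the sample posts, and the 0.5 `THRESHOLD` are all hypothetical stand-ins.

```python
import numpy as np

def build_lsa(docs: list[str], k: int = 2):
    """Return the term index, U_k, s_k, and per-document LSA vectors (rows of V_k)."""
    vocab = sorted({w for d in docs for w in d.lower().split()})
    index = {w: i for i, w in enumerate(vocab)}
    X = np.zeros((len(vocab), len(docs)))  # term-document count matrix
    for j, d in enumerate(docs):
        for w in d.lower().split():
            X[index[w], j] += 1
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return index, U[:, :k], s[:k], Vt[:k].T

def fold_in(text: str, index: dict[str, int], U_k: np.ndarray, s_k: np.ndarray) -> np.ndarray:
    """Project an unseen document into the LSA space; unknown words are ignored."""
    q = np.zeros(U_k.shape[0])
    for w in text.lower().split():
        if w in index:
            q[index[w]] += 1
    return (U_k.T @ q) / s_k  # same coordinates as the rows of V_k

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom else 0.0

liked = [  # hypothetical stand-ins for fetched posts
    "bitcoin mining difficulty rises",
    "bitcoin price and mining rewards",
    "steem witness voting and rewards",
]
index, U_k, s_k, doc_vecs = build_lsa(liked, k=2)
centroid = doc_vecs.mean(axis=0)

THRESHOLD = 0.5  # hypothetical "arbitrary threshold"
for post in ["mining rewards for bitcoin", "my sourdough recipe"]:
    score = cosine(fold_in(post, index, U_k, s_k), centroid)
    print(post, round(score, 3), score > THRESHOLD)
```

A post made entirely of unseen words folds in as the zero vector and scores 0.0. That is one reason the vocabulary needs to come from a reasonably large corpus.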

I actually have my code set up to allow me to switch between n-gram extractions and word tokens on a whim, so at least I'll be able to test both of those to see if one consistently gives me better stuff than the other.
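A switch like that can be as small as a dictionary of tokenizer functions keyed by mode, so the rest of the pipeline never needs to know which one is active. This is a minimal sketch, not the author's code. The mode names, the regex, and n=3 are hypothetical choices.

```python
import re
from typing import Callable

def word_tokens(text: str) -> list[str]:
    """Lower-cased alphanumeric word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def char_ngram_tokens(text: str, n: int = 3) -> list[str]:
    """Overlapping character n-grams within each word, with '_' marking word edges."""
    grams = []
    for word in word_tokens(text):
        padded = f"_{word}_"
        grams.extend(padded[i:i + n] for i in range(len(padded) - n + 1))
    return grams

TOKENIZERS: dict[str, Callable[[str], list[str]]] = {
    "word": word_tokens,
    "ngram": char_ngram_tokens,
}

def tokenize(text: str, mode: str = "word") -> list[str]:
    """Dispatch to the tokenizer selected by mode ('word' or 'ngram')."""
    if mode not in TOKENIZERS:
        raise ValueError(f"unknown tokenizer mode: {mode!r}")
    return TOKENIZERS[mode](text)

print(tokenize("Mining rewards!", "word"))
print(tokenize("Steem", "ngram"))
```

Because both modes return plain token lists, the same downstream term-document matrix code can be run once per mode and the results compared directly.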

This is experimental programming. It's like mad science but with slightly fewer explosions.

Hmm, are you sure the average eigenvector thing would work?

Is your plan just to compute the LSA on the set of your own posts (in a 30 day period)? And then determine how close other posts are? I guess the dataset will most likely be too small. Instead of filtering noise, the LSA might even enhance it unless you are a posting machine (could this explain why you end up with quite common words in your topics?).

Or do you wish to compute the LSA on many posts (let's say all Steemit publications of last month) and try to infer an average representation of the subset of your own posts? Even then I don't know if this works. What would happen if half of your posts are about cryptocurrency and the other half about vaccines (:-0)? Presumably, these would be projected into different parts of the LSA space. The average would be meaningless here (maybe something like prepper homeopathy?). Maybe it's better to compute the similarity to all of your recent posts individually at first and then take the average, or median, or some percentile to determine if it's worth reading and may cater to your interests.
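The two-interests problem is easy to check numerically. This is a minimal sketch with made-up 2-D vectors: crypto posts lie along one axis and vaccine posts along the other. A new crypto post matches one cluster perfectly but gets only about 0.71 similarity to the centroid. A post in the empty middle scores 1.0 against the centroid. With this toy data, the mean and the median of the per-post similarities also favor the middle post. The max and the 90th percentile rank the genuine crypto post higher. The vectors and the 90th percentile are illustrative assumptions.

```python
import numpy as np

def cosine_to_rows(rows: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Cosine similarity between v and every row of rows."""
    return (rows @ v) / (np.linalg.norm(rows, axis=1) * np.linalg.norm(v))

# Toy LSA vectors: two crypto posts on one axis, two vaccine posts on the other.
my_posts = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
centroid = my_posts.mean(axis=0)

candidates = {
    "crypto post": np.array([1.0, 0.0]),
    "prepper homeopathy": np.array([1.0, 1.0]),  # sits in the empty middle
}
for name, vec in candidates.items():
    to_centroid = float(cosine_to_rows(centroid[None, :], vec)[0])
    per_post = cosine_to_rows(my_posts, vec)
    print(f"{name}: centroid={to_centroid:.2f} "
          f"mean={per_post.mean():.2f} median={np.median(per_post):.2f} "
          f"p90={np.percentile(per_post, 90):.2f} max={per_post.max():.2f}")
```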

If you are still in favor of averaging your posts and computing your interest vector directly, another approach could be to take a look at Doc2Vec. There, the average or sum of word and document vectors seems to work pretty well. Still, as before, you might end up somewhere in the Doc2Vec space that is just the empty middle ground between different posts of yours. Moreover, Doc2Vec is incredibly data-hungry and requires a few tens of thousands, or better, hundreds of thousands of documents. Fortunately, as you said, Steemit is a fire hose, so that should be the least of your concerns.

I still haven't fully grasped what you are trying to do; sorry if I misunderstood you. Anyway, I'm curious how your experiment progresses because I want to do something similar. I started with a bot that predicts payouts of posts, and I am curious whether the LSA part of my bot could also be used for content recommendations.

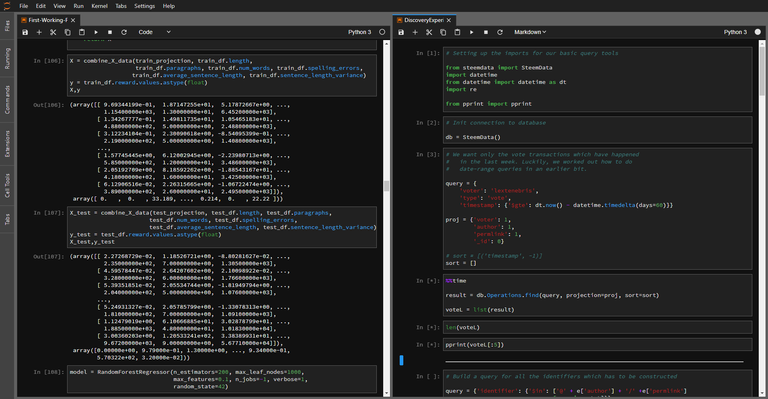

Hi. What program's that screenshot? I don't see standard window controls, is that on Linux actually?

Would you believe that's running on Windows 10 in Jupyter Lab? And everything you see on the screen there is actually running in my browser window (and I tend to use Vivaldi when I'm working with content).

Yes, it's an integrated multilanguage IDE/data research environment which runs in a web browser as its primary interface, with draggable windows, frames, built-in management systems – the whole 9 yards.

I have no idea how I got by as a programmer many, many years ago without something this nice.

Also – it's free.

Aye, thanks for the info! Lemme ask some more questions, as I am a workflow aficionado and, based on your long response, you seem to be one too :D

If you're full screen, how do you manipulate files? No Windows taskbar :( I personally use Brackets for web design because of that handy live preview feature, Sublime for other coding purposes, and Notepad++ for text writing.

(n++ pictured, oh how I love custom styling, cached files... <3 )

I've tried Vivaldi and it was b-e-a-utiful, and then I realized it is very low on extensions and had to let it go. Maybe I'll give it another go, since it's been more than a year, I believe.

Cheers

Well, in Jupyter Lab, the interface can host multiple panels, so I often have at least two panels with code in them and an interpreter monitor at the bottom of one of those columns.

For browsing, it's definitely Vivaldi, at least when I'm trying to put together some work, and the browser itself can tile tabs across the screen. If you use a web-hosted editor like StackEdit, that can be tucked into any screen you like. When I'm developing longer pieces, my usual text editing environment is Gingko, which makes managing section content extremely easy, and that's just running in a tab somewhere, over to the side or at the bottom of the frame.

Vivaldi can use any of the plug-ins in the Chrome architecture, so I have no idea what you're talking about when you suggest that it's low on extensions. That's a big pile of extensions.

But mainly – I just cheat. I have two monitors. The right hand monitor is typically used for email, Discord, chats, social media, and my editing environment while the main screen on the left is primarily for reference material, video editing, 3D modeling systems, games – whatever needs to be the focus of what I'm doing.

I'm at the point where a third monitor wouldn't go unused, but I have literally nowhere to put it in the tight confines here with two microphones, a mixing board, studio speakers, and a reference bookshelf.

But – yes, my main solution is cheating.
