A while ago, someone released a paid course claiming that you no longer need to be a programmer because ChatGPT can do the coding for you.

I was skeptical, so I tried using ChatGPT to help me code a project.

It didn't go great. Even though I did end up with a usable WordPress plugin, I had to help the AI a great deal using my own programming experience.

ChatGPT 3

What I found at the time was that the AI couldn't understand the entire scope of the project, but it was very helpful at coming up with individual routines or small functions.

For example, once a project was split into multiple files (modules/libraries), the AI produced a lot of unnecessary duplication. At the same time, it didn't have a large enough token budget to fit all the code into one file and still work on the whole thing.

Another problem, common to all generative tools, is the hallucination problem.

Code is not like writing a story or summarizing an article; you can't just skip over the bad bits. If you want your code to compile and run, it has to be complete, and it has to be based on an agreed-upon base reality!

Will non-coders be able to tell when GPT completely makes up core commands and functions that don't exist?

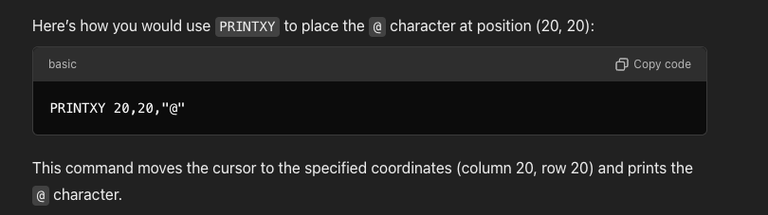

To give an example from another attempt: I thought WordPress might be too much of a moving target, so I went back and tried Commodore 64 BASIC, which hasn't changed since I was a small child.

It invented commands that exist in no version of Commodore BASIC to get around the fact that it didn't know how to place a character at a specific position on the screen.

Note that this is something the C64 CAN DO, just not in the way ChatGPT thought it should.

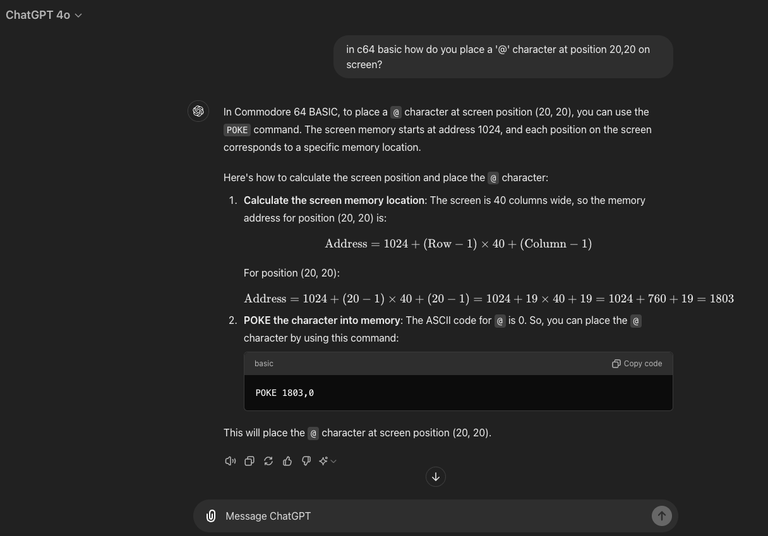

ChatGPT 4o

I am glad to see that, at least for this problem, the 4o model now understands that one way to do it is to POKE the character code into screen memory.

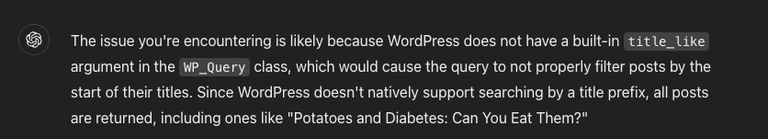
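For anyone who hasn't touched a C64 in a while, the arithmetic behind the POKE approach is simple. The sketch below is written in Go purely for illustration and assumes the default text-mode layout: a 40x25 screen whose character memory starts at 1024 ($0400) and whose color memory starts at 55296 ($D800). The value poked is a screen code, not a PETSCII/CHR$ code (in the default character set, the letter A is screen code 1). The function names `screenOffset` and `pokeLines` are hypothetical; the program just prints the two BASIC statements for a given row and column.

```go
package main

import "fmt"

// Default C64 text-mode layout (assumes the program has not relocated screen memory).
const (
	screenBase = 1024  // $0400: start of screen (character) RAM
	colorBase  = 55296 // $D800: start of color RAM
	columns    = 40
	rows       = 25
)

// screenOffset returns the offset of (row, col) from the start of screen/color RAM.
// Rows and columns are zero-based.
func screenOffset(row, col int) (int, error) {
	if row < 0 || row >= rows || col < 0 || col >= columns {
		return 0, fmt.Errorf("position (%d,%d) is off the 40x25 screen", row, col)
	}
	return row*columns + col, nil
}

// pokeLines returns the BASIC POKE statements that place screen code `code`
// in color `color` at (row, col): one for screen RAM, one for color RAM.
func pokeLines(row, col, code, color int) ([]string, error) {
	off, err := screenOffset(row, col)
	if err != nil {
		return nil, err
	}
	return []string{
		fmt.Sprintf("POKE %d,%d", screenBase+off, code),
		fmt.Sprintf("POKE %d,%d", colorBase+off, color),
	}, nil
}

func main() {
	// Screen code 1 is "A"; color 1 is white.
	lines, err := pokeLines(12, 20, 1, 1)
	if err != nil {
		panic(err)
	}
	// Print them as numbered BASIC lines: 10, 20, ...
	for i, l := range lines {
		fmt.Printf("%d %s\n", 10*(i+1), l)
	}
}
```

For row 12, column 20 the offset is 12 * 40 + 20 = 500, so the program prints `10 POKE 1524,1` and `20 POKE 55796,1`. Writing the color byte as well is a cautious choice: depending on what is already in color RAM, a character poked into screen memory alone may be drawn in the background color and appear invisible.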

For WordPress, I did manage to get it to write an entire small plugin that provides two shortcodes which run MySQL queries. The final version worked perfectly, though it is a shame that it hallucinated in its first attempt.

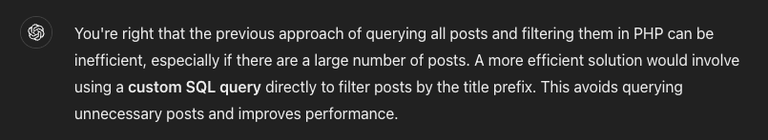

Once I pointed out that this filter does not exist (although it was plausible that it might), ChatGPT was able to work around the issue, but in the worst way possible.

Again, would someone without programming experience have let this pass?

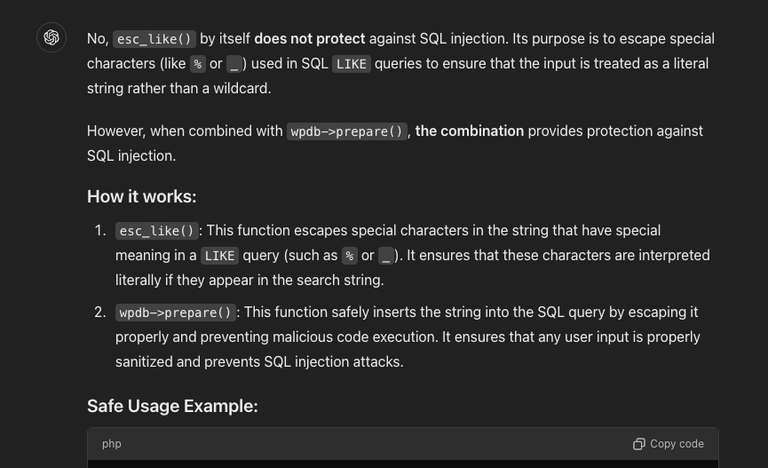

Scarier still, ChatGPT seems to have only a flimsy idea of how plain PHP and WordPress differ, and of the built-in safety features available to the developer.

What Now?

I am still optimistic that these tools will keep improving and become more helpful to developers.

Will they replace us? Not any time soon, but they will continue to erode paid programmer hours, which will ultimately put pressure on developer employment.

I've seen this in the writing and editing fields already, where teams of writers are being replaced by automated plagiarism and an efficient editor.

I have played around a lot with ChatGPT, Claude, and local models. I primarily use my own local models to assist with coding, and even then I rarely use them.

What I have found is that for small tasks, it can work really well. For large tasks, troubleshooting the output is almost always more work than just writing the code yourself.

It is great for brainstorming ideas on how to solve a problem you are having difficulty with.

It won't replace developers, that's for sure.

Another thing to mention: it can introduce massive security problems by using old libraries and even by suggesting compromised packages.

Currently it seems only one step above copying and pasting from Stack Overflow, but at least you can ask it questions, I guess.

One benefit I found: a few months ago, I set a goal of getting up to date on Java and learning Go and Rust. Having ChatGPT help me translate what I wanted from Python, or find the equivalent of a core function (e.g., "how would I print_r in Golang?"), was helpful.

Not even remotely. You can get an answer, discuss it, work on it, and develop the response through questions. It's like having your own dedicated senior developer who is occasionally an idiot.