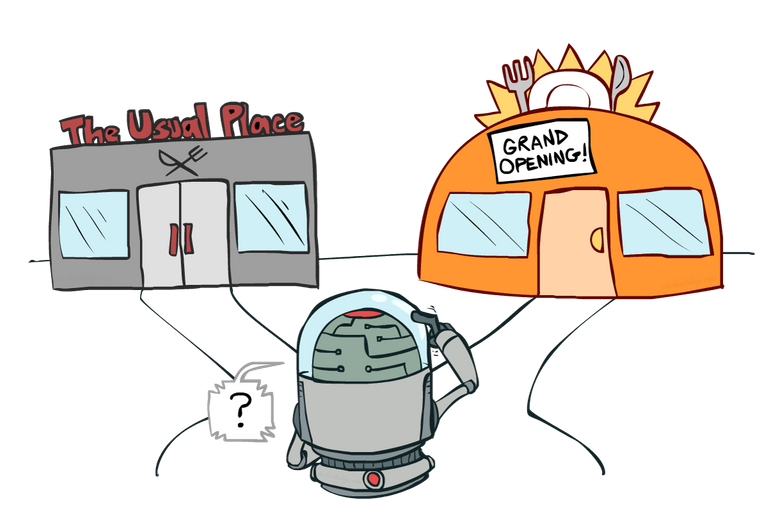

As the travel season begins (at least in the Northern Hemisphere), many of us will face the age-old philosophical question:

Should we keep looking for the ideal place to eat for another half hour, or should we finally just eat at this far-from-perfect place?

We all know how difficult this question is, and we also know that it often leads to rather unpleasant discussions between otherwise happy travelers. It is even more bitter when, right after you decide to "just eat here, we won't find anything better anyway", you find the perfect place around the corner.

Anyway, this post is not about the hardships of traveling; it is about the lightness of reinforcement learning. So why am I bringing this up?

Exploration vs. Exploitation

As hard as this question seems to us human agents, it is similarly hard for artificial agents. Scientists have been asking it for a long time: when should an agent explore the environment further, and when should it just stick with the good old plan?

RL solutions

As you can imagine, the question of when to switch between exploration and exploitation has not been fully answered yet. That is not surprising, since even in everyday life this is one of the toughest decisions to make. Should I study for a master's degree, or is a bachelor's degree enough? Should we keep looking for a new flat, or is this already the best offer we can get? So how do RL agents deal with it in practice?

Epsilon-greedy strategy

In reinforcement learning, the most basic way to avoid sticking with the first solution found is the epsilon-greedy strategy. With probability epsilon (ε) the agent selects a random action; otherwise it follows the best path it has found so far. This ensures that, with some probability, the agent eventually tries every action and visits every reachable state, so it can discover a better path than the one it already knows. The technique is very simple, but it has one big drawback: the exploration step, the random selection, chooses among all actions uniformly. This means that even very bad options are picked just as often as promising ones during exploration, so the agent takes longer to learn the optimal path.
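To make the rule concrete, here is a minimal Python sketch of epsilon-greedy action selection. It assumes the agent keeps a list `q_values` with one estimated value per action; that name and the toy values in the demo are illustrative, not taken from any particular library.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index: random with probability epsilon, else greedy."""
    if rng.random() < epsilon:
        # Explore: every action is equally likely, even the terrible ones.
        return rng.randrange(len(q_values))
    # Exploit: take the action with the highest estimated value so far.
    return max(range(len(q_values)), key=lambda a: q_values[a])


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical estimates for "ok place", "good place", "disaster".
    q = [1.0, 5.0, -100.0]
    picks = [epsilon_greedy(q, 0.1, rng) for _ in range(10_000)]
    print("disaster chosen", picks.count(2), "times out of 10000")
```

With epsilon = 0.1 and three actions, the "disaster" action is still expected in about 0.1 / 3 of all steps, which is exactly the uniform-exploration drawback described above.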

Increased learning rate in disaster situations

One strategy that tries to address the problem of training on bad paths is the "increased learning rate in disaster situations". It puts more weight on information about very bad paths, so the agent learns to avoid those situations faster. With this strategy, the agent should quickly learn to avoid very bad places or situations while still exploring the rest of the environment.
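The post does not spell out an exact rule, so the following is only one hypothetical way to express the idea, built on a tabular Q-learning update: when the observed reward falls at or below a threshold, the update uses a larger learning rate. The parameter names `disaster_threshold`, `base_alpha` and `disaster_alpha`, and their default values, are illustrative assumptions.

```python
def update_q(q, state, action, reward, next_state, gamma=0.9,
             base_alpha=0.1, disaster_alpha=0.9, disaster_threshold=-50.0):
    """One tabular Q-learning update with a boosted learning rate for disasters.

    q is a dict mapping state -> {action: value}; an empty inner dict marks a
    terminal state. disaster_threshold and disaster_alpha are hypothetical
    parameters: rewards at or below the threshold are learned from much faster.
    Returns the learning rate that was used.
    """
    alpha = disaster_alpha if reward <= disaster_threshold else base_alpha
    # Value of the best action in the next state (0 for terminal states).
    best_next = max(q[next_state].values()) if q[next_state] else 0.0
    target = reward + gamma * best_next
    # Move the estimate towards the target; a large alpha makes one bad
    # experience dominate the estimate almost immediately.
    q[state][action] += alpha * (target - q[state][action])
    return alpha


if __name__ == "__main__":
    q = {"street": {"eat_here": 0.0, "keep_walking": 0.0}, "end": {}}
    update_q(q, "street", "keep_walking", -100.0, "end")  # a disaster
    update_q(q, "street", "eat_here", -1.0, "end")        # a mild letdown
    print(q["street"])
```

After a single disastrous experience the estimate for that action drops close to the full penalty, while an ordinary bad experience only nudges its estimate, so the agent steers away from disasters quickly without giving up on exploring everything else.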

Boltzmann exploration

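Another standard exploration strategy is Boltzmann exploration. The agent selects its next state s with probability

$$P(s) = \frac{\exp(V(s)/T)}{\sum_{s'} \exp(V(s')/T)}$$

where the sum runs over the candidate next states s', V(s) is the value of state s (an estimate of how good the state is, e.g. whether it lies on the optimal path), and T is the temperature, which controls how much the agent explores or exploits. With high values of T the probabilities become nearly uniform and the agent leans towards exploration; with low T the agent increasingly follows the best path found so far. Unlike epsilon-greedy, very bad states receive a much smaller share of the exploratory choices than promising ones.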
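A minimal Python sketch of Boltzmann (softmax) selection over a list of state values, assuming the agent already has value estimates for the candidate next states; the function names are illustrative. Subtracting the largest value before exponentiating leaves the probabilities unchanged but avoids overflow.

```python
import math
import random


def boltzmann_probabilities(values, temperature):
    """Softmax over values: P(s) proportional to exp(V(s) / T)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Shifting by the max does not change the ratios, but keeps exp() finite.
    top = max(values)
    weights = [math.exp((v - top) / temperature) for v in values]
    total = sum(weights)
    return [w / total for w in weights]


def boltzmann_choice(values, temperature, rng=random):
    """Sample an index according to the Boltzmann probabilities."""
    probs = boltzmann_probabilities(values, temperature)
    return rng.choices(range(len(values)), weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical values for "ok place", "good place", "disaster".
    values = [1.0, 5.0, -100.0]
    for t in (100.0, 5.0, 0.5):
        probs = boltzmann_probabilities(values, t)
        print(f"T={t}:", [round(p, 3) for p in probs])
```

At a high temperature all three options keep a noticeable share, though the disaster already gets less than a uniform third; as T drops, almost all of the probability moves to the best-valued state.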

Cool, but why is this important anyway?

It is immensely important, and also immensely (or at least very) hard to solve. Since reinforcement learning agents are meant to work in the real world, it would be quite useful if they didn't keep falling down the stairs, running in front of cars, or resetting your home air conditioning to sauna temperatures. On the other hand, it is impossible to prepare them for every situation they might encounter, so they have to learn, at least partly, as they go, and learning means exploring. If this reminds you of how children learn and develop, that is no coincidence: artificial intelligence researchers take quite a lot of inspiration from them. And how cool is that! There are, of course, many more advanced techniques, but I might talk about those in future posts, after introducing some more advanced ideas about RL.
