Recently, an accident between a self-driving car and a pedestrian raised an issue that until now was only theoretical: will software developers be liable for a death caused by their software's negligence? Why is this so important? Because without knowing who should be liable in such cases, the law of autonomous entities will not develop any further. The question of "who pays" or "who goes to jail" is essential.

Why? Because of the Coase Theorem. Ronald Coase explained that a society needs to decide how to allocate the damages caused accidentally by desired activities: for example, should a train operator be liable for fires caused by sparks? In weighing this, we need to calculate the social benefit of train operation, and not just the profit the operator earns from it. Then we decide whether to give trains immunity, so that the liability does not lead to a desired function of society being abandoned.

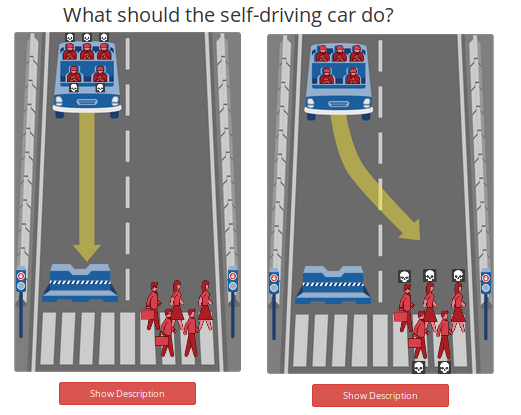

Now, let's ask why and how we allocate the social costs. Do we believe that self-driving cars are so important for our day-to-day life that we want to exempt the software developers from liability? Well... that might cause two different problems. The first is the one posed by the MIT Moral Machine (MITMM). When we program a self-driving car and a death is inevitable, whom does the software developer kill? You can play the MITMM game yourself and be either god or a software developer, but ask yourself whether this is the way to go. Meaning: if we know that in some cases a death is inevitable, does it mean that someone needs to pay?

The second is determining that in such a case, where a death is inevitable, there is no liability. This brings to mind medical malpractice cases. Let me explain: let's say that a doctor performs a procedure that has a 95 percent success rate. This means that one patient in twenty will die in the process (oversimplified, again) (example 1, example 2). Now, if a patient dies, will the doctor be at fault? In such a case one can go over the doctor's records and see whether his success rate averages 95 percent. But that's a problem. Why? Because a 95 percent success rate is averaged over all doctors, both the best ones and the okay ones. So do we say that he's negligent if he killed two out of twenty? Three? And is one of these deaths the one that was supposed to happen anyway, so he'll pay only for the other?
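To get a feel for the "two out of twenty? three?" question, here is a rough sketch in Python. It assumes (and this is my simplification, not how a court would actually do it) that every patient faces the same 5 percent risk and that the outcomes are independent of each other, so the number of deaths follows a binomial distribution. The function name `prob_at_least` is just something I made up for the example.

```python
from math import comb

def prob_at_least(k, n, p):
    """Probability of k or more deaths in n procedures,
    assuming each one independently fails with probability p."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

n = 20      # procedures performed by our hypothetical doctor
p = 0.05    # assumed fatality rate (the 95 percent success rate from above)

for deaths in (1, 2, 3):
    print(f"P(at least {deaths} deaths in {n}) = {prob_at_least(deaths, n, p):.3f}")
```

Under these assumptions, a perfectly average doctor would see two or more deaths in twenty procedures about a quarter of the time, and three or more in roughly one case out of thirteen. So the raw count alone says very little about negligence.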

These questions are not just moral and theoretical. They are questions that the law answers on a daily basis. Now, let's assume we can actually measure the doctor's success and fatality rates, and that we have all the information needed to make sure that he wasn't negligent at any point. Let's also assume that we have actual statistics. Meaning, let's assume that in the self-driving car problem we can not just measure the results, but also reproduce them using other algorithms. Will this make a case for developing self-driving car liability laws?

Maybe. But then there's a real problem. No software developer wants jail time, and that's a big deal. Here comes the thing that Coase contemplated. We want self-driving cars; we want them because in the long run they reduce accidents. But will anyone program anything if they know they might end up in jail because of a software glitch? I guess not. Let me explain with another case I worked on.

When I participated in the legislation of the Biometric Database Act in Israel (and petitioned against it), we (me & the Israeli Association for Civil Rights) asked that there be criminal liability in case the head of the database, or any other employee, fails to perform his duties. This, in turn, might have been the reason that no one wanted to act as the information security officer for the database. No one is stupid enough to go to jail.

The same goes for self-driving cars. Unless the self-driving cars are programmed by an artificial intelligence software developer, no developer would want to go to jail; no developer would want to be the one who needs to write perfect code. The liability is just too high.

Now, you would ask what's the difference between software developers and the guys who just produce brake pads, for example, or seatbelts? Well, there is one difference. The brake-pad guys need to have functional brake pads; they don't decide when to brake. The decision-making process is the most complicated part, and until recently it required a human. But when we program self-driving cars, we play god. We give cars free will.

It's an interesting dilemma that we are facing now. It's a hard decision to make: who will survive.
