Repository

https://github.com/to-the-sun/amanuensis

The Amanuensis is an automated songwriting and recording system aimed at ridding the process of anything left-brained, so one need never leave a creative, spontaneous and improvisational state of mind, from the inception of the song until its final master. The program will construct a cohesive song structure, using the best of what you give it, looping around you and growing in real-time as you play. All you have to do is jam and fully written songs will flow out behind you wherever you go.

If you're interested in trying it out, please get a hold of me! Playtesters wanted!

New Features

- What feature(s) did you add?

Previously, when the user requested a new sound for a synth track (the PGUP hotkey), it was chosen purely at random from the available VSTs and their presets. Now a record is kept of how long each preset is actually used throughout the player's history. This record is used to determine which sounds the user prefers; in other words, their synth "taste".

When a new synth is requested, presets that have been used for a long time are more likely to be chosen than those that have not. In this way, the system learns which sounds you prefer and which you don't, gravitating over time towards the ones you do.

If a synth preset has not been used before, it is still subject to the original random selection, so that new, unheard sounds have an equal chance and still come up fairly frequently. More specifically, the original random selection is always made first; if the selected preset has been used before, a re-selection is made that takes all of the stored taste data into account. This means that once every preset has been heard there will be data for all of them, and no selection will be purely random from that point forward (until, say, the user adds a new synth).
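
The two-stage process above can be sketched in Python. This is only an illustration, not a transcription of the Max patch: the names `choose_preset`, `presets` and `taste` are hypothetical, and `random.choices` stands in for whatever weighting the patch actually performs.

```python
import random

def choose_preset(presets, taste, rng=random):
    """Pick a preset: uniform first, then re-pick by taste if already heard.

    presets: list of preset names (hypothetical identifiers)
    taste:   dict mapping preset name -> accumulated usage time
    """
    # Stage 1: the original, purely random selection is always made.
    first = rng.choice(presets)
    # An unheard preset is kept as-is, so new sounds keep an equal chance.
    if taste.get(first, 0) <= 0:
        return first
    # Stage 2: re-select among the presets that have taste data,
    # weighted by how long each one has been used.
    heard = [p for p in presets if taste.get(p, 0) > 0]
    weights = [taste[p] for p in heard]
    return rng.choices(heard, weights=weights, k=1)[0]
```

Under these assumptions, an empty `taste` dictionary reproduces the old behavior exactly, and the re-selection only takes over as presets accumulate usage time.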

- How did you implement it/them?

If you're not familiar, Max is a visual language, and textual representations like those shown for each commit on GitHub aren't particularly comprehensible to humans. You won't find any of the comments there either. Therefore, I will do my best to present the work in images instead. Read the comments in them to get an idea of how the code works. I'll try to keep my description here focused on the process of writing that code.

These are the primary commits involved:

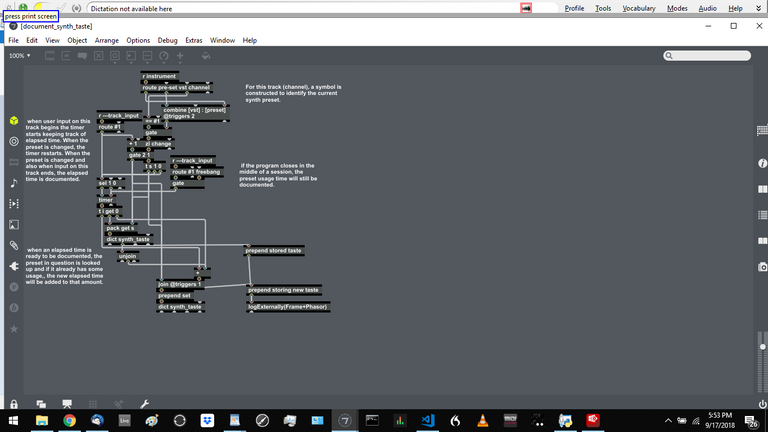

The first task was to document the amount of time spent using each of the VST and preset combinations. A new dictionary, synth_taste, was implemented for this purpose. A new subpatcher, p document_synth_taste, was added to vsti.maxpat. Since multiple VSTs can be played simultaneously in separate tracks, it was necessary to create an instance of this code for each track, and vsti.maxpat seemed like the ideal location.

The new subpatcher p document_synth_taste, added to the abstraction vsti.maxpat, which has 16 instances in the program, one for each track.
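
For readers who can't open the patch, the bookkeeping might look something like this in Python. It is a sketch under assumptions: the class name `SynthTasteRecorder`, its methods, the `(vst, preset)` tuple keys and the use of seconds are all hypothetical, and the real patch may measure usage differently (for example, only while notes are actually being played).

```python
import time

class SynthTasteRecorder:
    """Accumulates how long each (VST, preset) pair is in use on one track."""

    def __init__(self, synth_taste, clock=time.monotonic):
        self.synth_taste = synth_taste  # dict shared by every track
        self.clock = clock              # injectable so it can be tested
        self.current = None             # (vst, preset) now loaded, if any
        self.started = None             # clock reading when it was loaded

    def switch_to(self, vst, preset):
        """Close out the previous preset's time and start timing a new one."""
        self.stop()
        self.current = (vst, preset)
        self.started = self.clock()

    def stop(self):
        """Add the elapsed time for the current preset to the shared record."""
        if self.current is not None:
            elapsed = self.clock() - self.started
            previous = self.synth_taste.get(self.current, 0.0)
            self.synth_taste[self.current] = previous + elapsed
            self.current = None
            self.started = None
```

One recorder per track, all writing into the same dictionary, mirrors the idea of having an instance of the subpatcher in each of the 16 copies of vsti.maxpat.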

This stored data then needed to be put to use. When a new preset is chosen, it is now run through the new subpatcher p tasted_yet?. If there is taste data already recorded for it, a new selection is made based on all of the stored taste data. I explored several ways to accomplish this "intelligently random" selection, but settled on adding together the durations of every documented preset to get a total historical usage duration. A random number is then chosen within this range, and the individual entries are cycled through and added together again. The preset whose entry pushes the running sum past the random number is the one chosen. Presets with larger entries (presets that have been used more) take bigger jumps as the addition proceeds and are therefore more likely to pass the random number.

p tasted_yet? and its subpatcher apply_taste, along with its subpatcher dump_ratings. All are used in two places in soundcues.maxpat: once in p choose_new_sound and, with slight modifications, in p new_samples/synths.
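
The running-sum selection described above translates fairly directly into text form. The following Python sketch is an approximation of the idea rather than the patch itself; the function name `pick_by_taste` and the shape of the `durations` dictionary are assumptions made for illustration.

```python
import random

def pick_by_taste(durations, rng=random):
    """Pick a key with probability proportional to its recorded duration.

    durations: dict mapping preset -> total usage time (hypothetical shape)
    """
    # Total historical usage across every documented preset.
    total = sum(durations.values())
    if total <= 0:
        raise ValueError("no taste data recorded")
    # A random point somewhere within that total.
    target = rng.uniform(0, total)
    running = 0.0
    for preset, duration in durations.items():
        running += duration
        # The entry that pushes the running sum past the target wins;
        # larger entries cover more of the range, so they win more often.
        if running > target:
            return preset
    # Edge case: target landed exactly on the total, so take the last entry.
    return preset
```

With durations of 1, 3 and 6, for example, the three presets would be expected to come up roughly 10%, 30% and 60% of the time.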

Cleanup and commenting

Thanks for the contribution, @to-the-sun! Good to see you are still chugging along nicely.

I'd like to remind you of one thing I mentioned on your previous contribution: your commit messages could be improved. I'd suggest checking out this guide on how to write good commit messages, as it includes a lot of useful information.

Your contribution has been evaluated according to Utopian policies and guidelines, as well as a predefined set of questions pertaining to the category.

Okay cool. Didn't realize commits were supposed to have a whole body included with them.

They don't have to, I just think that in your case it's something that could be improved (yours are already fine, and I don't really have any other feedback to give, haha).
