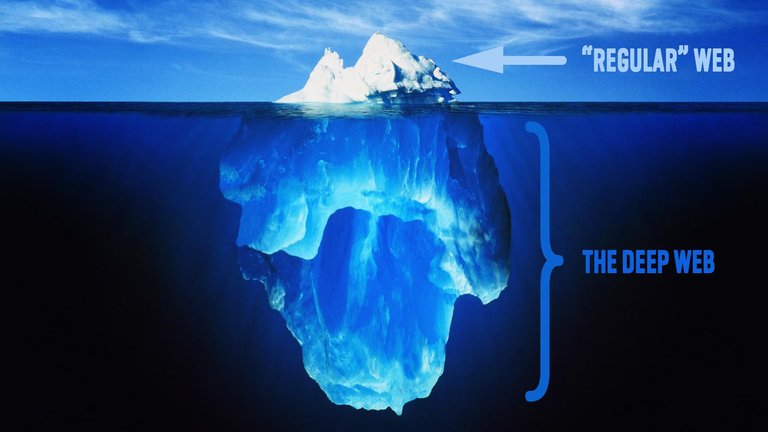

Searching the Internet can be compared to searching for extraterrestrials with a single telescope and a computer. While you can learn a great deal, much more information lies deeper than you can see, or in a frequency range your instrument cannot detect, and so it is missed. Physicists estimate that about 95% of the universe is made up of ‘dark matter’ and ‘dark energy’. They cannot observe it directly, but it has to be there for the universe to behave according to the laws of physics. Fortunately, we can see the ‘Deep Web’. It’s just that the search engines cannot.

The reason the search engines cannot see it is simple: most of the information on the Internet is either disconnected from other web sites or contained within dynamically generated sites, and in either case the search engines cannot find it or cannot properly use it. In the case of the disconnected web, someone built a web site and keeps adding documents, but never submitted it, never resubmitted it, never optimized it, never re-optimized it as search engine algorithms or its own content changed, and, lastly, never asked anyone else to link to it. The site may also have received links only from pages that are themselves not linked to by any site within the visible web. It is a surprisingly complicated problem.

More likely, though, these pages are invisible because they are in a format that is unfriendly to the search engines: they are entirely graphical, built entirely in Flash, framed, or dynamically generated.

How The Search Engines Work

Search engines create their indices by spidering, or crawling, web pages. To be read, a page must either be submitted or be linked from another page that has already been spidered and indexed. That’s only part of the battle, though. To be indexed, the same web page must be in a format that the search engines can retrieve time and time again, and many types of dynamic web pages cannot be retrieved that way. Here’s why. Have you ever noticed that some web pages have a different URL every time you visit? Visit a few sites you know. Now delete your cookies (in Microsoft Internet Explorer, go to Tools > Internet Options > “Delete Cookies”). Then, in the same browser window, revisit one of the URLs you visited. On many dynamic sites, the URL you reach will have changed.
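To make the discovery rule above concrete, here is a minimal sketch of a breadth-first crawl over a small, hypothetical link graph. It is not how any particular search engine is implemented; the page names and the `LINKS` table are invented for illustration. It shows why a page that was never submitted and has no inbound links (`orphan.html`) is never found.

```python
from collections import deque

# Hypothetical link graph: each page maps to the pages it links to.
# "orphan.html" exists, but nothing links to it and it was never submitted.
LINKS = {
    "home.html": ["products.html", "about.html"],
    "products.html": ["widget.html"],
    "about.html": ["home.html"],
    "widget.html": [],
    "orphan.html": ["widget.html"],
}

def crawl(seeds):
    """Breadth-first crawl: a page is discovered only if it is a seed
    (a submitted URL) or is linked from a page already crawled."""
    seen = set(seeds)
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        for target in LINKS.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen

indexed = crawl(["home.html"])
print(sorted(indexed))           # ['about.html', 'home.html', 'products.html', 'widget.html']
print("orphan.html" in indexed)  # False
```

Submitting `orphan.html` as a second seed, or getting a crawled page to link to it, is the only way it enters the crawl in this model.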

Search engine robots do not accept cookies. Some dynamic, cookie-based sites do not admit visitors unless they accept cookies. Others admit them, but for a search engine that may not matter. Here’s why: the search engines’ business model is based on getting their customers (searchers) to their destination quickly and efficiently. At its simplest, they do this by indexing the Internet and bookmarking every page they deem relevant, so that when someone queries them, they can return that page. Part of the bookmarking process is checking that the page still exists, and that’s where the problem starts. They need to be able to send searchers to exactly the same spot every time. With dynamic pages they simply can’t, because from their perspective the URL changes on every visit. So the search engines treat that page as a failure and refuse to deliver traffic to it. They will still read through the content and factor it into the overall site score, but if you have a large web site, details from deep within it are likely to be missed, or not viewed as important relative to the whole.
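The changing-URL problem can be illustrated with a short sketch. Two visits to the same catalog page carry different session IDs in the query string, so a naive comparison treats them as different pages. The `canonical_url` helper below is a hypothetical workaround that strips session-style parameters. The parameter names in `SESSION_PARAMS` and the `example.com` URLs are assumptions for illustration, not a list any search engine publishes.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical session-parameter names; real sites use many different ones.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}

def canonical_url(url):
    """Drop session-style query parameters and sort the remaining ones,
    so two visits to the same page produce the same key."""
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k.lower() not in SESSION_PARAMS]
    query.sort()
    # Rebuild the URL without the fragment and without session parameters.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), ""))

visit1 = "http://www.example.com/catalog?item=42&sessionid=A81F"
visit2 = "http://www.example.com/catalog?sessionid=9C3D&item=42"
print(visit1 == visit2)        # False: the raw URLs differ on every visit
print(canonical_url(visit1))   # http://www.example.com/catalog?item=42
print(canonical_url(visit1) == canonical_url(visit2))  # True
```

This only works when the session data sits in a recognizable query parameter; a crawler cannot safely guess which parameters are disposable on an arbitrary site, which is part of why such pages are so often skipped.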

As for framed pages, the search engines see none of the content within the frames. They can see only what is in the page’s own source code (click ‘View > Source’); if the content is not there, they won’t find it. Flash sites and graphics-only sites are just as bad. A picture is worth 1000 words to you and me, but not a single one to a computer. Essentially, it boils down to this: search engines cannot properly “see” or retrieve content from the Deep Web. Dynamically generated pages do not exist until they are created as the result of a specific search, and they exist only as long as it takes to display them.
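The “View > Source” test can be approximated in code. The sketch below assumes a deliberately simple indexer that reads only a page’s own HTML source: it collects text nodes and image `alt` text, and records frame `src` URLs without fetching them. The class and function names and the sample pages are hypothetical. A frameset page and an image-only page yield no indexable words, while a plain text page does.

```python
from html.parser import HTMLParser

class VisibleText(HTMLParser):
    """Collect words a simple indexer could read from raw page source:
    text nodes plus img alt attributes. Frame src URLs are recorded but
    not fetched. (Script and style text are not filtered in this sketch.)"""

    def __init__(self):
        super().__init__()
        self.words = []
        self.frame_sources = []

    def handle_data(self, data):
        self.words.extend(data.split())

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img" and attrs.get("alt"):
            self.words.extend(attrs["alt"].split())
        elif tag == "frame" and attrs.get("src"):
            self.frame_sources.append(attrs["src"])

def indexable_words(html):
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return parser.words, parser.frame_sources

framed = ('<html><frameset cols="20%,80%"><frame src="menu.html">'
          '<frame src="content.html"></frameset></html>')
graphic = '<html><body><img src="banner.jpg"></body></html>'
textual = '<html><body><h1>Blue Widgets</h1><p>In stock now.</p></body></html>'

print(indexable_words(framed))   # ([], ['menu.html', 'content.html'])
print(indexable_words(graphic))  # ([], [])
print(indexable_words(textual))  # (['Blue', 'Widgets', 'In', 'stock', 'now.'], [])
```

Adding descriptive `alt` text to the image would give this simple indexer something to read, which is the same reasoning behind making graphical pages search-engine friendly.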

Search Engine Optimization and The Deep Web

Making this deep web visible to the search engines is part of the search engine optimization process. For many of our e-commerce clients, it’s a key part: all of a sudden their products and services are visible, something they had never experienced before. Because search engine bots cannot probe beneath the surface, the Deep Web remains hidden. The Deep Web differs from the rest of the Internet in that most of its content is stored in searchable databases that produce results dynamically, only in response to a direct request. If the most coveted commodity of the Information Age is indeed information, then the value of Deep Web content is immeasurable.

How Big is the Deep Web?

It is estimated that the Deep Web contains up to 500 times as much information as the regular Internet. That means that while the largest search engine has indexed 1.4 billion web pages (Google has 1.4 billion web pages, 900 million images, and 900+ million Usenet newsgroup postings in its index), there may be as many as 700 billion potential documents out there waiting to be indexed. It’s easy to see how this happens. Every day, Hewlett-Packard adds 1 page per employee to its web site. With 100,000 employees worldwide, its site alone grows by more than 36 million documents per year. The search engines are not keeping up. Here are some statistics on the Deep Web:
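The two estimates in the paragraph above are simple multiplications. This sketch reproduces them from the article’s own figures, assuming one page per employee on each of 365 days a year.

```python
# Figures quoted in the article.
indexed_pages = 1_400_000_000     # pages in the largest search engine's index
deep_web_multiplier = 500         # Deep Web holds "up to 500 times" as much
hp_employees = 100_000
pages_per_employee_per_day = 1
days_per_year = 365               # assumption: a page is added every calendar day

deep_web_documents = indexed_pages * deep_web_multiplier
hp_pages_per_year = hp_employees * pages_per_employee_per_day * days_per_year

print(f"{deep_web_documents:,}")  # 700,000,000,000
print(f"{hp_pages_per_year:,}")   # 36,500,000
```

Counting only working days (roughly 250 a year) would lower the HP figure to about 25 million, so the annual total depends heavily on that assumption.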

The Deep Web is the fastest growing segment of information on the Internet.

Deep Web sites tend to have copious amounts of content.

Deep Web content is highly focused and relevant.

More than 50% of Deep Web content resides in specialty databases.

Password-protected and secure content is not considered part of the Deep Web.

Will My Web Site Get Found?

Within the strict context of the Web, most users are aware only of the content presented to them via search engines. Eighty-five percent of web users find websites for the first time through search engines. A similar share use search engines to find needed information, yet nearly as high a percentage cite the inability to find desired information as one of their biggest frustrations. Without optimization, your website may never be found. At its most basic, this means that if no one links to your website, it may never be discovered or woven into the fabric of the net.