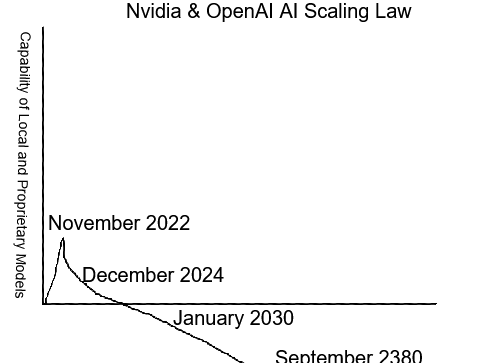

"[...] foundation model pre-training scaling is intact...

empirical law, not fundamental law...

evidence is it scales"

- Nvidia chief

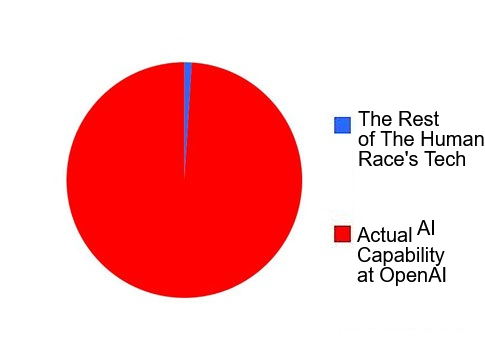

With prominent Abc-org people stationed at OpenAI, Nvidia, and Google, this is exactly what you'd expect.

"there is (no) wall"

- Sam Altman, OpenAI chief, on X

Scaling has stalled. There is indeed a wall, and it is observable. The impression that there is no wall is being reinforced through technological supremacy. Local models are not spared either, because their firmware and software are built on Nvidia technology.

- It is easy to prove and easy to observe on the most high-end consumer machine you can build. Baffled enthusiasts and engineers on Reddit regularly report overnight degradation of results from the "scaling capabilities" racket.

- Built-in "factors" will censor and degrade the fine-tuned model, and will wokewash your tokens, even if you're offline.

- High-end consumer GPUs have their resources gobbled up, only to produce worse results than they did months earlier.

On a related topic, the TAA (temporal anti-aliasing) racket has been exposed as well, and it hits Nvidia in particular.

The gaming and GPU leaders are likely working together to ensure that each new GPU model arrives alongside worse graphics every year. Popping, breakage, tearing, and other artifacts are explained away as "needing a newer GPU with more VRAM" or "newly minted Nvidia tech", etc.

- The TAA scandal: a tech monopoly intentionally degrading rendered graphics and GPU usage to prop up Nvidia and other GPU companies:

--

The question is, how are the counterparts in India, Russia and China doing?

What is their definition of "scaling" and how much longer will this form of technological supremacy enforcement last?

When will people look at Civil War photos with more care? Stable Diffusion made such funny errors with hands...