Humanity is a tool-making species, and the tools we create are expressions of our relationship with the Cosmos. Our digital technologies are no different. In the previous installments of this essay, I attempted to establish a historical and philosophical framework for the conversation surrounding automated political propaganda that I discuss in this section. Taking the long view negates the fear and stigma associated with these new digital tools and puts them in their proper place. Only then, by reclaiming our mastery over them, can we move forward in the pursuit of the betterment of society.

The full essay, "Will Robots Want to Vote? An Investigation into the Implications of Artificial Intelligence for Liberty and Democracy," was written as part of a fellowship with The Fund for American Studies' Robert Novak Journalism Program. For an introduction to the premise of the project, please read my speech, "Will Robots Want to Vote?" For an introductory summary of the final essay, please read "This will begin to make things right." The project is presented as a multi-part series, and this piece is Part 6. Please see the related posts linked at the bottom of this essay to read more of the project.

If you liked this post, please consider sharing, commenting, and upvoting. Thank you in advance. - Josh

---

Algorithmic transparency

Artificial intelligence is just as much of a philosophical field as it is a technical field because creating machines capable of "thinking" like people forces researchers to consider what it means to be intelligent.

These ideas are then built into the personalized algorithmic decision-making we see in consumer products such as Google search results, Amazon recommendations, and our Facebook, Twitter, and Instagram feeds, as well as on the stock market and in software used by law enforcement and national security professionals.

New Republic writer Navneet Alang called our current era "the Age of Algorithms," writing that the algorithm is the "organizing principle" of the age.

"Because they are mathematical formulas, we often feel that algorithms are more objective than people. As a result, with Facebook's trending column, there was a degree of trust that wouldn't necessarily be given to the BBC or Fox News. Yet algorithms can't be neutral," wrote Alang.

At their most basic, computers are just boxes of metal and plastic with an electrical current running through them. There is nothing inherently magical about them. Both the hardware and the software were made by human beings who chose which materials and code to use and how they should be designed. The resulting software runs on algorithms: step-by-step procedures designed to produce certain outcomes when followed.

Programming circles often compare algorithms to cooking recipes; they are the DNA of the computer. But they are still products of human imagination, which means their creation is subject to the biases of a programmer or an organization. Those biases, in turn, shape how the people using a device or piece of software interact with it.

These biases might have a relatively benign, probably inconsequential effect on the user, such as the look and feel of a phone's calculator. But higher-level decision-making, such as how search results or a social network's newsfeed surface the best news sources, can have national and global consequences, considering that a majority of adults in the United States have smartphones, social media accounts, and Internet connections.
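To make the point about design choices concrete, here is a minimal, hypothetical sketch of a newsfeed ranking step in Python. The `Story` fields, the scores, and the two weight settings are invented for illustration and do not describe how Google, Facebook, or any other platform actually ranks content; the sketch only shows that the same inputs yield a different feed depending on weights a person chose.

```python
from dataclasses import dataclass

@dataclass
class Story:
    title: str
    engagement: float      # hypothetical 0-1 score for likes and shares
    source_quality: float  # hypothetical 0-1 editorial rating

def rank(stories, w_engagement, w_quality):
    """Order story titles by a weighted score; the weights are human design choices."""
    def score(story):
        return w_engagement * story.engagement + w_quality * story.source_quality
    return [s.title for s in sorted(stories, key=score, reverse=True)]

stories = [
    Story("Outrage headline", engagement=0.9, source_quality=0.2),
    Story("Investigative report", engagement=0.4, source_quality=0.9),
    Story("Cute baby animals", engagement=0.7, source_quality=0.5),
]

print(rank(stories, w_engagement=0.8, w_quality=0.2))
# ['Outrage headline', 'Cute baby animals', 'Investigative report']
print(rank(stories, w_engagement=0.2, w_quality=0.8))
# ['Investigative report', 'Cute baby animals', 'Outrage headline']
```

Neither ordering is "neutral": each one encodes a judgment about what should matter more.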

Their increasing ubiquity and importance in our lives has provoked calls for algorithmic literacy, oversight, and transparency to hold both the software and the organizations that create them accountable.

The Electronic Privacy Information Center (EPIC), founded in 1994, for example, proposed amending science fiction writer Isaac Asimov's Laws of Robotics so that robots must always reveal both the process they used to arrive at a decision and their actual identity. Asimov's original three laws, first published in 1942, state, among other things, that a robot must prioritize the safety and well-being of human beings above its own existence. Microsoft CEO Satya Nadella has also laid out rules for A.I. that support algorithmic transparency.
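A machine that reveals "the process used to arrive at a decision" could, in the simplest case, return its reasoning alongside its verdict. The sketch below assumes a hypothetical moderation bot with two invented rules; it is not EPIC's proposal in code form, only an illustration of a decision that discloses both who decided and why.

```python
from dataclasses import dataclass, field

@dataclass
class Decision:
    outcome: str
    decided_by: str  # discloses that an automated agent made the call
    reasons: list = field(default_factory=list)  # the rules that fired, in order

FLAGGED_PHRASES = {"guaranteed win", "miracle cure"}  # illustrative only

def review_post(text, agent_id="moderation-bot-v1"):
    """Hypothetical moderation check that reports its identity and its reasoning."""
    reasons = []
    lowered = text.lower()
    hits = sorted(p for p in FLAGGED_PHRASES if p in lowered)
    if hits:
        reasons.append("matched flagged phrases: " + ", ".join(hits))
    if text.count("!") > 3:
        reasons.append("more than 3 exclamation marks")
    outcome = "hold for human review" if reasons else "publish"
    return Decision(outcome, agent_id, reasons or ["no rule triggered"])

decision = review_post("Guaranteed win!!!! Click now!")
print(decision.decided_by, "->", decision.outcome)
for reason in decision.reasons:
    print("  because", reason)
```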

A Pew Research Center report found that technology experts were generally quite optimistic about the future benefits enabled by algorithms, such as in healthcare, where advanced algorithms are already used to sequence genomes and find cures for diseases.

Problems arise from the unintended consequences of algorithms. But computers can only do what they are programmed to do. Any failure is ultimately the result of the limited worldview, or the lack of imagination and skill, of the sorcerer's apprentice: the coder or coders who designed the program.

---

"Fake News"

The widespread propagation of so-called “fake news” through online social networks, a label applied to everything from deliberately false stories to satire and propaganda, took center stage in the national conversation both during and after the 2016 U.S. presidential election cycle.

The circulation of rumors and falsehoods is not new to the political sphere, and the diversity of ideological content, even the completely fictional stories, is beneficial to the public discourse. This ideal was enshrined by the Founders in the First Amendment.

But the technological advancements of the social media era further complicate civic conversation: algorithmic filtering restricts that diversity for each user, creating, in effect, personalized universes (filter bubbles) that distort and warp users’ worldviews.

One consequence is that the hyper-personalization of social media and search technologies enables users to, in a sense, “self-radicalize.” In that environment, partisans brandished the phrase “fake news” in an attempt to delegitimize the work of news organizations they either disagree with or compete against.

Unless a user makes a concerted effort to maintain some semblance of algorithmic hygiene, the content they are served will usually cater to their biases and their outrages, because this is profitable for news outlets, social networks, and ad companies.

And the more emotionally invested a user is in the platform’s content, the longer they will stay engaged, allowing companies to mine their behavior to sell more goods and services.

Advertising is essential to our economy and our politics, but news sites and advertisers have also sought to build profitable relationships with each other by fostering the rise of native advertising, i.e., advertising that looks and reads like a news or opinion piece.

Furthermore, news sites might take pains to distinguish between editorial content and advertisements on their own platforms, but they lose control of context and presentation when a reader shares that content with their social network, where it appears as only a headline and a picture in the newsfeed alongside the rest of the content stream.

A Pew Research Center study published in May 2016 found that 62 percent of U.S. adults get news from social media; in 2012, that number was only 49 percent. Influence also varies across platforms. For instance, while 67 percent of U.S. adults have a Facebook profile, only 44 percent of U.S. adults get their news from the site. Ten percent of U.S. adults get their news from YouTube, and only 9 percent get their news from Twitter.

With no differentiation between hard news, opinion, satire, or straight fiction, political news consumers are left to rely on their own judgment and that of their friends. The diversity of sources and the ability to self-curate content absolutely allow for a more informed citizenry.

But with the added influence of algorithmic filtering, users’ biases are richly rewarded with more content that fits their worldview and more interactions with like-minded individuals.
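The feedback loop described above can be illustrated with a toy model. It assumes, purely for illustration, five topics that start with equal weight, a user with one slight initial preference, and a ranking rule that multiplies a topic's weight by 1.5 every time the user engages with it. Real platforms use far more signals; the point is only how quickly a small preference can come to dominate a feed.

```python
TOPICS = ["left politics", "right politics", "sports", "science", "local news"]

def feed_share(weights, topic):
    """Fraction of the feed given to `topic`, proportional to its weight."""
    return weights[topic] / sum(weights.values())

def simulate(rounds, favorite, boost=1.5):
    """Track the favorite topic's share of the feed as engagement feeds back into ranking."""
    weights = {t: 1.0 for t in TOPICS}
    weights[favorite] = 2.0  # a slight initial preference
    history = []
    for _ in range(rounds):
        history.append(round(feed_share(weights, favorite), 3))
        # Assumption: the user engages with the favored topic every round,
        # and each engagement boosts that topic's ranking weight.
        weights[favorite] *= boost
    return history

print(simulate(4, "science"))  # [0.333, 0.429, 0.529, 0.628]
```

After only three rounds of engagement, one topic fills most of the feed, and the other four share what is left.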

Another Pew study, published in July 2016, found that while 35 percent of American online news consumers say the news they get from family and friends is one-sided, 69 percent say they would prefer to see more points of view. At the same time, only 4 percent of American web-using adults trust the information they find on social media. However, 51 percent of adults say they are loyal to their news sources, and 76 percent stick to their preferred sources.

Filtering also affects the news media. The election results blindsided many journalists who had come to rely on social media to help them discover sources and decide which stories to cover.

Many members of the media, who also cluster in like-minded circles, assumed their social media usage gave them an accurate sense of what the country was thinking. Algorithmic filtering only separated them further from the pulse of the country.

Following the election, many in the news media demanded that social networks do something to weed out “fake news,” blaming those companies for an election outcome that did not go as they expected. But demands for greater filtering intervention place the companies in extraordinary positions of power and responsibility, with very few checks and balances on that power.

The debate about algorithmic filtering is as much a debate about the economics of the news industry as it is about the influence of advanced consumer-facing software. The “fake news” hysteria highlights the pressures news outlets face competing in an ever-growing digital media ecosystem, and the deep ties they forged in the wake of the Great Recession with social media companies, a major source of revenue-generating traffic.

That relationship has become even more complicated as news outlets and their online distribution platforms have begun competing for the same ad dollars, triggering a race to the bottom in news content quality. What remains are increasingly hyperbolic headlines, shareable partisan content about the latest outrage, and stories featuring photos of cute baby animals.

“Total digital ad spending grew another 20% in 2015 to about $60 billion, a higher growth rate than in 2013 and 2014. But journalism organizations have not been the primary beneficiaries,” Pew reported in June 2016. Sixty-five percent of digital ad revenue goes to just five tech companies, including Facebook, Google, Yahoo, and Twitter, none of which are journalism organizations.

During the campaign season, Gizmodo ran a story in which several former workers from Facebook’s now-defunct Trending News division alleged that they had been encouraged to keep stories originating on conservative sites out of the site’s right-hand Trending column in favor of more mainstream and left-of-center stories.

Facebook denied the allegations and held an invitation-only summit with conservative media leaders at its Menlo Park headquarters (several invitees refused to attend). The company subsequently fired the Trending News team, handing further responsibility to its filtering algorithm.

The Trending section soon began to fill with stories from conspiracy theory sites, further demonstrating the devastating power of contagious emotions and populist energy spreading with the help of intelligent, but obedient, machines.

Glenn Beck criticized the meeting, writing that attendees were demanding something akin to “affirmative action” for conservatives. “When did conservatives start demanding quotas AND diversity training AND less people from Ivy League Colleges?” wrote Beck.

Facebook employs Republicans in high-level positions, and prominent Trump supporter Peter Thiel sits on the company's board. But media conservatives have been wary of Silicon Valley ever since many of its workers generously donated time and technical expertise to help elect and then re-elect President Obama, and the Gizmodo story played well to those suspicions.

---

Political bots and cyborgs

Bots also received a fair amount of attention this past election cycle. Whether it was a Twitter “chatbot” that had become obsessed with Nazis and sex, or alleged Russian Twitter bots working to manipulate the political discourse in favor of then-candidate Donald Trump, it seemed like the robot apocalypse might finally be upon us in the form of 140-character salvos.

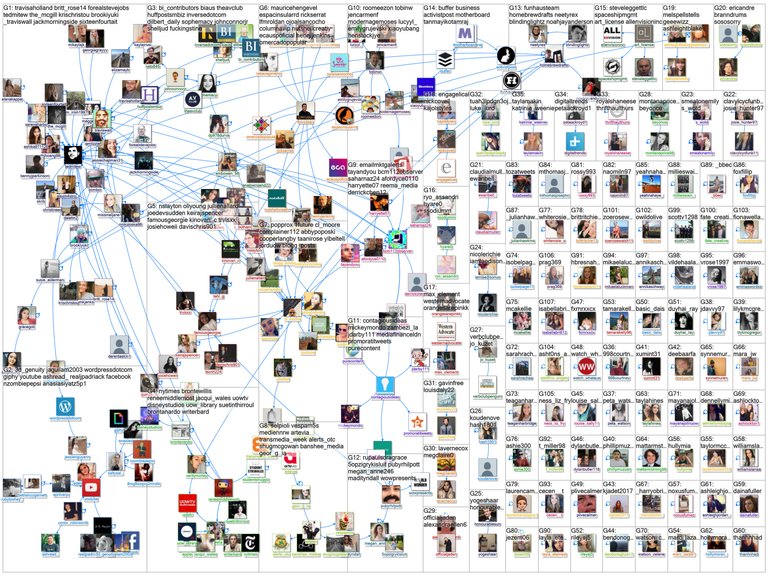

In their April 2016 paper, “The Rise of Social Botnets: Attacks and Countermeasures,” several researchers publishing with the Institute of Electrical and Electronics Engineers (IEEE) defined social bots as “software-controlled OSN accounts” (online social network accounts) “that mimic human users with malicious intentions.” These are automated accounts that spam users on a network with unwanted messages meant to manipulate the recipient, sometimes with links that spread malware to the recipient’s device.

Citing a May 2012 Bloomberg Businessweek article, they wrote that “as many as 40% of the accounts on Facebook, Twitter, and other popular OSNs are spammer accounts (or social bots), and about 8% of the messages sent via social networks are spams, approximately twice the volume of six months ago.”

But while bots' time in the spotlight has come, they are not new. At their most benign, bots, like the other narrow, low-level “A.I.” discussed in this piece, are simply interactive computer programs built on algorithms.

They are causing alarm because they appear to be remarkably effective at influencing and manipulating public discourse. Phil Howard, a professor at Oxford University, calls this phenomenon “computational propaganda.”

Researchers and journalists, myself included, have documented this phenomenon over the past decade both domestically and internationally as a matter of curiosity, and also out of recognition that these bots can have a profound effect on how a candidate is perceived.

Political campaigns and businesses tout social media follower counts as a popularity metric. The logic is simple: more followers equals greater popularity and influence. But how do you know whether the numbers are real or inflated? Thankfully, tools exist to help estimate the probable percentage of fake followers. I say "probable" because measurement standards, i.e., biases, differ between analytics companies; compare the results of different tools and the numbers will vary. But that doesn't mean the tools are useless. While the detection process is not perfect, it gives researchers a range to work with when determining a candidate’s actual level of online influence.
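Why different tools disagree is easy to see in code. The sketch below applies a hypothetical two-rule heuristic (long inactivity, or a photo-less account that follows far more users than follow it back) to an invented sample of five followers. Changing only the thresholds, as two different analytics companies might, moves the estimate from 40 to 60 percent. Real tools use their own, often undisclosed, criteria.

```python
from dataclasses import dataclass

@dataclass
class Follower:
    days_since_last_post: int
    has_profile_photo: bool
    followers: int
    following: int

def looks_fake_or_inactive(f, inactive_days, ratio_cutoff):
    """Hypothetical rule: long inactivity, or no photo plus a lopsided following ratio."""
    if f.days_since_last_post >= inactive_days:
        return True
    ratio = f.following / max(f.followers, 1)  # avoid dividing by zero
    return not f.has_profile_photo and ratio >= ratio_cutoff

def estimate_fake_share(sample, inactive_days, ratio_cutoff):
    flagged = sum(looks_fake_or_inactive(f, inactive_days, ratio_cutoff) for f in sample)
    return flagged / len(sample)

# Invented sample of five followers.
sample = [
    Follower(400, True, 120, 300),
    Follower(10, False, 3, 900),
    Follower(200, True, 50, 60),
    Follower(2, True, 800, 400),
    Follower(30, False, 20, 150),
]

# Two hypothetical "tools" that differ only in their thresholds.
estimates = {
    "tool A": estimate_fake_share(sample, inactive_days=180, ratio_cutoff=10),
    "tool B": estimate_fake_share(sample, inactive_days=365, ratio_cutoff=20),
}
low, high = min(estimates.values()), max(estimates.values())
print(f"estimated fake or inactive: {low:.0%} to {high:.0%}")  # 40% to 60%
```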

Botnets, i.e., groups of bots, have been used for years to attack the technical systems of political targets online in the form of distributed denial-of-service (DDoS) attacks. This was a favorite tactic of the hacktivist collective Anonymous. The botmaster, i.e., the person or group in charge of the botnet, leverages the combined processing power of the hijacked computers under its control, sometimes called zombies or slaves, to overwhelm the target system until it crashes. Botnets can also be used to manipulate online polls.

Propaganda botnets operate on a similar principle, although the social media accounts under their control are not necessarily compromised (hacked) accounts. More often they are networks of accounts that act in concert, independently, or some combination of the two, mimicking human behavior closely enough to create the illusion of consensus on an issue.

The tone of these botnets' messaging can escalate into aggressive, opinionated harassment. And when likely voters or candidates encounter these botnets, they can be influenced to think and vote a certain way.

More sophisticated cyborg accounts, which publish a mix of automated and human-written tweets, are harder to detect because their activity more closely resembles that of an ordinary user. For example, a business might schedule social media posts to publish automatically at certain times of day, then have a social media manager engage with followers through the same account in real time.
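One common signal of a propaganda botnet is many accounts posting the same message within moments of one another. The following is a minimal sketch, assuming each post is a hypothetical `(account, unix_time, text)` tuple; the 60-second window and three-account threshold are arbitrary choices for illustration, and real coordination analysis weighs many more signals.

```python
from collections import defaultdict

def find_coordinated(posts, window_seconds=60, min_accounts=3):
    """Return texts posted by at least `min_accounts` distinct accounts within `window_seconds`.

    posts: iterable of (account, unix_time, text) tuples.
    """
    by_text = defaultdict(list)
    for account, ts, text in posts:
        normalized = " ".join(text.lower().split())  # ignore case and extra spaces
        by_text[normalized].append((ts, account))

    flagged = {}
    for text, items in by_text.items():
        items.sort()
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most window_seconds.
            while items[end][0] - items[start][0] > window_seconds:
                start += 1
            accounts = {account for _, account in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged[text] = sorted(accounts)
                break  # one qualifying burst is enough to flag this text
    return flagged

# Hypothetical accounts and message.
posts = [
    ("@acct_1", 1000, "Vote NO on Measure X!"),
    ("@acct_2", 1012, "vote no on measure x!"),
    ("@acct_3", 1030, "Vote NO on  Measure X!"),
    ("@real_user", 5000, "Vote NO on Measure X!"),
    ("@someone", 1005, "Nice weather today"),
]
print(find_coordinated(posts))
```

The late, genuine-looking post from `@real_user` falls outside the burst and is not part of the flagged group.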
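Scheduled posting tends to leave a regular rhythm, while human activity is bursty. One simple way to quantify that, used here purely for illustration, is the coefficient of variation (standard deviation divided by mean) of the gaps between posts. The timestamps below are invented, in seconds. A fully scheduled account scores 0, a bursty human scores high, and the cyborg account lands in between, which is exactly what makes it hard to classify.

```python
from statistics import mean, pstdev

def interval_cv(timestamps):
    """Coefficient of variation of the gaps between posts; 0 means perfectly regular."""
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    return pstdev(gaps) / mean(gaps)

# Invented timestamps in seconds.
scheduled = [0, 3600, 7200, 10800, 14400, 18000]     # a post every hour on the hour
human = [0, 240, 300, 9000, 9100, 17000]             # bursty and irregular
cyborg = [0, 3600, 3700, 7200, 10800, 10900, 14400]  # hourly schedule plus live replies

for name, ts in [("scheduled", scheduled), ("cyborg", cyborg), ("human", human)]:
    print(name, round(interval_cv(ts), 2))
```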

---

Not everything is as it seems

Concern that Internet usage creates an information "silo effect" for consumers is not new, and various studies of online social network user behavior have attempted to refute it by showing that users often learn novel information from their online acquaintances, or "weak ties."

For example, Pablo Barberá, now an Assistant Professor of International Relations at the University of Southern California, published a study in 2014 arguing that social media usage in Germany, Spain and the United States did "not increase political extremism" for users in those countries. Increased exposure to politically dissonant and ideologically diverse content, Barberá believed, would induce political moderation.

Another study supporting the weak-tie theory, published by Facebook's in-house research team in 2016, found that when users were looking for a job, their close friends might be more inclined to help, but users were more likely to find a new job through an acquaintance.

In a political context, these weak ties are precisely what campaigns, hackers, and foreign governments can exploit to introduce noise into the online debate, and that is exactly what has been happening.

During the 2016 U.S. elections, there were numerous examples of automated tweeting, sock-puppetry (using fictitious personas that let a user operate anonymously), and “cyborg accounts” supporting both major parties, although much of the focus has been on pro-Trump botnets with alleged Russian ties. The New York Times, for example, ran several pieces on Russian-connected political trolling.

My own reporting uncovered that at one time during the campaign season, nearly 71 percent of the 7.51 million profiles following Trump's Twitter account were either fake or had been inactive for at least six months. Political strategists like Patrick Ruffini, co-founder of Echelon Insights, found evidence of clear messaging coordination between bot accounts. A Politico report dubbed the pro-Trump Twitter bots his “cyborg army.”

In late February 2017, Ben Nimmo of the Atlantic Council’s Digital Forensic Research Lab published a report about how a pro-Trump botnet was being used to “steer Twitter users to a money-making ad site.”

“By creating a network of fake Trump supporters, it targets genuine ones, drawing them towards pay-per-click sites, and therefore advertising revenue,” wrote Nimmo. “It is neither large, nor especially effective, but it highlights the ways in which fake accounts can mimic real ones in order to make a fast cent.”

In 2014, I reported that at least 55 percent of President Obama's 36.8 million Twitter followers were fake. But while in Obama's case the high fake follower count might have served as a way to boast of social media influence, Trump actively retweets his followers. BuzzFeed reported that Trump had retweeted at least one bot whose bio referenced the Nazi propaganda minister Joseph Goebbels, showing that bots can even influence heads of state.
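For a rough sense of scale, the two follower percentages reported above can be converted into approximate head counts. Because one figure is "nearly" 71 percent and the other "at least" 55 percent, the results are approximations, not exact tallies.

```python
def implied_accounts(share, total_followers):
    """Approximate number of accounts implied by a reported percentage."""
    return round(share * total_followers)

trump_2016 = implied_accounts(0.71, 7_510_000)   # "nearly 71 percent" fake or inactive
obama_2014 = implied_accounts(0.55, 36_800_000)  # "at least 55 percent" fake
print(f"Trump: about {trump_2016:,}; Obama: about {obama_2014:,}")
# Trump: about 5,332,100; Obama: about 20,240,000
```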

During the Brexit campaign in the U.K., Phil Howard conducted a study finding that "political bots have a small but strategic role in the referendum conversations,” reporting that "less than 1 percent of sampled accounts generate almost a third of all the messages."
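A finding like "less than 1 percent of accounts generate almost a third of all messages" is a concentration measure. Here is a minimal sketch of how such a share can be computed, using an invented sample of 200 accounts in which two hyperactive accounts stand in for bots; the data are synthetic, not from Howard's study.

```python
def top_share(message_counts, top_percent=1):
    """Share of all messages produced by the most active `top_percent` of accounts."""
    counts = sorted(message_counts, reverse=True)
    k = max(1, len(counts) * top_percent // 100)  # number of accounts in the top slice
    return sum(counts[:k]) / sum(counts)

# Synthetic sample: 2 hyperactive accounts (1% of 200) and 198 ordinary ones.
counts = [140, 140] + [3] * 198
share = top_share(counts)
print(f"top 1% of accounts sent {share:.0%} of messages")  # 32%
```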

Brazilian researchers measuring the impact of retweeting bots during the March 2015 protests in Brazil found not only that the bots influenced how the messaging was received, but also that studying raw social media data for political events without taking bots into account "may lead to a false impression of a high-impact behavior of the population" being studied.

More recently, in early March 2017, the Atlantic Council’s Digital Forensic Research Lab published another report revealing that a small social media botnet supporting Marine Le Pen, France’s far-right presidential candidate, was using automated coordination to amplify its messaging.
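The distortion the Brazilian researchers describe is easy to reproduce with synthetic data. In this sketch, the retweeter list and the set of flagged bots are invented, and the bot flags are assumed to come from a separate detection step; a few automated accounts dominate the raw retweet count until they are excluded.

```python
def engagement(retweeters, exclude=frozenset()):
    """Count retweets and distinct retweeting accounts, skipping any excluded accounts."""
    kept = [account for account in retweeters if account not in exclude]
    return {"retweets": len(kept), "accounts": len(set(kept))}

# Synthetic data: two hypothetical bots retweet 75 times between them,
# while four hypothetical people retweet 5 times.
retweeters = ["bot_1"] * 40 + ["bot_2"] * 35 + ["ana", "bruno", "carla", "ana", "davi"]
flagged_bots = {"bot_1", "bot_2"}  # assumed output of a separate bot-detection step

raw = engagement(retweeters)
clean = engagement(retweeters, exclude=flagged_bots)
print(raw)    # {'retweets': 80, 'accounts': 6}
print(clean)  # {'retweets': 5, 'accounts': 4}
```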

“Le Pen’s online army is not a grassroots movement, but a small group trying to look like one,” said the lab.

---

Thank you for reading,

- Josh

---

Further Reading

* Baraniuk, Chris. "YouTube’s bots aren’t protecting kids from all its nasty videos." New Scientist. January 24, 2018. Accessed January 29, 2018. https://www.newscientist.com/article/mg23731620-100-youtubes-bots-arent-protecting-kids-from-all-its-nasty-videos/.

* Bershidsky, Leonid. "If Google's workplace is biased, what about its algorithms?" Chicago Tribune. January 09, 2018. Accessed January 29, 2018. http://www.chicagotribune.com/news/opinion/commentary/ct-perspec-google-bias-discrimination-algorithms-0110-20180109-story.html.

* Breland, Ali. "Social media giants pressed on foreign bots after memo campaign." The Hill. January 28, 2018. Accessed January 29, 2018. http://thehill.com/opinion/technology/368098-2018-will-be-the-year-of-the-bots.

* Burgess, Matt. "How Nike used algorithms to help design its latest running shoe." Wired UK. January 25, 2018. Accessed January 29, 2018. https://www.wired.co.uk/article/nike-epic-react-flyknit-price-new-shoe.

* Caughill, Patrick. "The FDA Approved an Algorithm That Predicts Death." Futurism. January 18, 2018. Accessed January 29, 2018. https://futurism.com/fda-approved-algorithm-predicts-death/.

* Chiara, Adam. "2018 will be the year of the 'bots'." The Hill. January 09, 2018. Accessed January 29, 2018. http://thehill.com/opinion/technology/368098-2018-will-be-the-year-of-the-bots.

* Chiel, Ethan. "The Injustice of Algorithms." New Republic. January 23, 2018. Accessed January 28, 2018. https://newrepublic.com/article/146710/injustice-algorithms.

* Confessore, Nicholas, Gabriel J.X. Dance, Richard Harris, and Mark Hansen. "The Follower Factory." The New York Times. January 27, 2018. Accessed January 29, 2018. https://www.nytimes.com/interactive/2018/01/27/technology/social-media-bots.html.

* Dent, Steve. "Algorithms transform Chicago scenes into trippy lobby art." Engadget. January 15, 2018. Accessed January 29, 2018. https://www.engadget.com/2018/01/15/chicago-lobby-art-esi-design-the-big-picture/.

* Gershgorn, Dave. "Google wants to make building an AI algorithm as easy as drag and drop." Quartz. January 17, 2018. Accessed January 29, 2018. https://qz.com/1181212/google-wants-to-make-building-an-ai-algorithm-as-easy-as-drag-and-drop/.

* Gholipour, Bahar. "We Need to Open the AI Black Box Before It’s Too Late." Futurism. January 18, 2018. Accessed January 29, 2018. https://futurism.com/ai-bias-black-box/.

* Harsanyi, David. "No, Russian Bots Aren't Responsible for #ReleaseTheMemo." Reason. January 26, 2018. Accessed January 29, 2018. https://reason.com/archives/2018/01/26/the-russia-fake-news-scare-is-all-about.

* Kucirkova, Natalia. "All the web's 'read next' algorithms suck. It's time to upgrade." Wired UK. January 26, 2018. Accessed January 29, 2018. https://www.wired.co.uk/article/how-decentralised-data-will-make-better-reading-recommendations.

* Lieberman, Eric. "Are Faulty Algorithms, Not Liberal Bias, To Blame For Google’s Fact-Checking Mess?" The Daily Caller News Foundation. January 24, 2018. Accessed January 29, 2018. http://dailycaller.com/2018/01/24/faulty-algorithms-liberal-bias-googles-fact-checking/.

* MacDonald, Cheyenne. "From the tiny robot controlled by your brainwaves, to the AI companion that can recognize your pets: The adorable bots on display at CES." Daily Mail. January 10, 2018. Accessed January 28, 2018. http://www.dailymail.co.uk/sciencetech/article-5253261/The-adorable-bots-display-CES-far.html.

* McCue, Mike. "5 Lessons Algorithms Must Learn From Journalism." Adweek. January 22, 2018. Accessed January 29, 2018. http://www.adweek.com/digital/5-lessons-algorithms-must-learn-from-journalism/.

* Lapowsky, Issie. "2017 was a Terrible Year for Internet Freedom." Wired. December 30, 2017. Accessed January 29, 2018. https://www.wired.com/story/internet-freedom-2017/.

* Rodriguez, Ashley. "YouTube’s recommendations drive 70% of what we watch." Quartz. January 13, 2018. Accessed January 29, 2018. https://qz.com/1178125/youtubes-recommendations-drive-70-of-what-we-watch/.

* Sassaman, Hannah. "The Injustice of Algorithms." New Republic. January 23, 2018. Accessed January 28, 2018. https://newrepublic.com/article/146710/injustice-algorithms.

* Snow, Jackie. "Algorithms are making American inequality worse." MIT Technology Review. January 26, 2018. Accessed January 28, 2018. https://www.technologyreview.com/s/610026/algorithms-are-making-american-inequality-worse/.

* Stewart, Emily. "New York attorney general launches investigation into bot factory after Times exposé." Vox. January 27, 2018. Accessed January 29, 2018. https://www.vox.com/policy-and-politics/2018/1/27/16940426/eric-schneiderman-fake-accounts-twitter-times.

* Tiffany, Kaitlyn. "In the age of algorithms, would you hire a personal shopper to do your music discovery for you?" The Verge. January 19, 2018. Accessed January 29, 2018. https://www.theverge.com/2018/1/19/16902008/debop-deb-oh-interview-curated-playlists-algorithms-art-collection.

* Vass, Lisa. "Amazon Twitch declares 'Game Over' for bots." Naked Security. January 26, 2018. Accessed January 29, 2018. https://nakedsecurity.sophos.com/2018/01/26/amazon-twitch-declares-game-over-for-bots/.

* Vynck, Gerrit De, and Selina Wang. "Twitter Says Russian Bots Retweeted Trump 470,000 Times." Bloomberg.com. January 26, 2018. Accessed January 29, 2018. https://www.bloomberg.com/news/articles/2018-01-26/twitter-says-russian-linked-bots-retweeted-trump-470-000-times.

* Wall, Matthew. "Is this the year 'weaponised' AI bots do battle?" BBC. January 05, 2018. Accessed January 28, 2018. http://www.bbc.com/news/business-42559967.

* Vincent, James. "Google ‘fixed’ its racist algorithm by removing gorillas from its image-labeling tech." The Verge. January 12, 2018. Accessed January 29, 2018. https://www.theverge.com/2018/1/12/16882408/google-racist-gorillas-photo-recognition-algorithm-ai.

* Yong, Ed. "A Popular Algorithm Is No Better at Predicting Crimes Than Random People." The Atlantic. January 17, 2018. Accessed January 29, 2018. https://www.theatlantic.com/technology/archive/2018/01/equivant-compas-algorithm/550646/.

---

Related Posts by Josh Peterson

* "Revenge of the Cyborgs." Steemit. December 21, 2017. Accessed January 28, 2018. https://steemit.com/artificial-intelligence/@joshpeterson/the-revenge-of-the-cyborgs

* "Robot Law." Steemit. November 6, 2017. Accessed December 20, 2017. https://steemit.com/artificial-intelligence/@joshpeterson/robot-law

* "Raging Against the Machines." Steemit. October 27, 2017. Accessed November 2, 2017. https://steemit.com/artificial-intelligence/@joshpeterson/raging-against-the-machines

* "The Rise of the Machines Has Already Happened." Steemit. October 25, 2017. Accessed November 6, 2017. https://steemit.com/artificial-intelligence/@joshpeterson/the-rise-of-the-machines-as-a-historical-metaphor

* "Rethinking Artificial Intelligence." Steemit. October 22, 2017. Accessed October 24, 2017. https://steemit.com/artificial-intelligence/@joshpeterson/rethinking-artificial-intelligence

* "This will begin to make things right." Steemit. October 22, 2017. Accessed October 22, 2017. https://steemit.com/writing/@joshpeterson/this-will-begin-to-make-things-right.

* "Will Robots Want to Vote?" Steemit. October 19, 2017. Accessed October 22, 2017. https://steemit.com/artificial-intelligence/@joshpeterson/will-robots-want-to-vote.
