Welcome to the first installment of a new series titled, THAT'S FUCKING SMART.

You'll never be sure what topic I'll cover, but you'll be absolutely sure that I won't cover this one topic: life hackery. This series will offer zero life tips, zero business how-to's, zero top 10 ways to cover up your bs hacks.

So, what is The Future of Life Institute? Why did Elon give $10 million to it?

You may remember reading in the news a year ago that Elon Musk donated $10 million to keep humans safe from “killer robots”.

You might also remember that Bill Gates, Stephen Hawking, Steve Wozniak, Morgan Freeman and many leading AI researchers from Google’s DeepMind project also joined Musk in raising public awareness about the existential threats posed by the future possibility of superintelligent AI.

Much of the world had no idea what Musk and friends were talking about because very few people, outside of the scientists who research AI, know that the current breakthroughs in AI are happening well before experts predicted they would. If you follow AI news, it seems that a new breakthrough occurs every month, or even every week. Here are a few of the most recent: an AI beat a top human player in the ancient game of Go, an AI lawyer named Ross has been hired by law firms, students didn’t know their teaching assistant was an AI, AI can automatically write captions for complex images, a robot receptionist got a job at a Belgian hospital, and a chatbot was reported to be the first to pass the Turing test. And that is just to name a few.

So, what’s wrong with any of that? Why are some of the brightest minds worried about AI?


Let’s now turn to a founding member of The Future of Life Institute, Max Tegmark, who is a professor of physics at MIT. After viewing a video in which he lays out why he created The Future of Life Institute, I believe his reasoning makes a lot of sense.

According to Max Tegmark,

“We are experiencing a race. A race between the growing power of technology and the growing wisdom with which we manage this technology. And it’s really crucial that the wisdom win this race.” - Max Tegmark, The Future of Life With AI

Max goes on to illustrate that humans are doing a pathetic job of managing existential risks when you consider a future stretching hundreds, thousands and even millions of years ahead. He uses nuclear weapons as a case study to demonstrate how poor a job we’re doing. He then gives humans a mid-term grade on risk management.

He gives us humans a D-.

You may be asking, “Why did we get a D-?”

Here’s your answer in the form of a question:

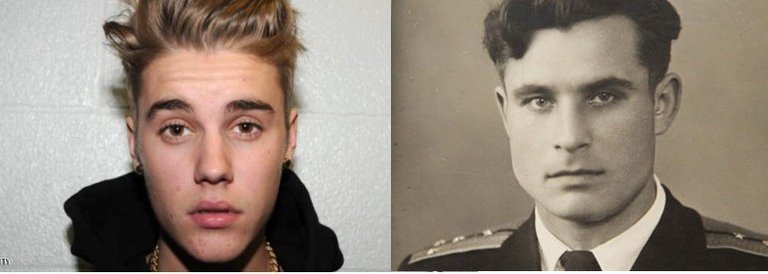

Which person is more famous?

Image Source: Bieber public domain + Arkhipov from Alchetron

Which person should we thank for allowing us to even exist here, reading leisurely on Steemit today?

No one knows the correct answer because no one knows the name of the guy on the right. I certainly had no idea who he was until I watched Max’s video yesterday.

His name is Василий Александрович Архипов.

Vasili Alexandrovich Arkhipov (30 January 1926 – 19 August 1998) was a Soviet Navy officer. ...Only Arkhipov, as flotilla commander and second-in-command of the nuclear-missile submarine B-59, refused to authorize the captain's use of nuclear torpedoes against the United States Navy, a decision requiring the agreement of all three senior officers aboard. In 2002 Thomas Blanton, who was then director of the National Security Archive, said that "Vasili Arkhipov saved the world". -Wikipedia

Apparently a Russian guy named Arkhipov saved the world from nuclear war but nobody knows about it.

That was one reason Max gave us humans a D- for existential risk management. Of course, the biggest cause of our low grade is our history with nuclear weapons. When nuclear weapons were first developed, no one knew that an even bigger devastation could come in the form of a ten-year nuclear winter, in which the earth would receive only scant amounts of sunlight and most living things would die off as a result. Scientists only communicated this scenario in the 1980s, long after the bombs were built. Hmmmm... not a very smart thing to do: build something that could wipe out humanity and most living creatures without even being aware of this secondary existential threat.

As Max points out, we humans don’t have a very good track record when it comes to understanding the full implications of the powerful technology we are unknowingly unleashing.

If you want to watch the full video, it’s completely worth your time. You could be much smarter afterwards:

Now, Max describes himself as a cheerful guy and he’s not proposing that we halt the march towards creating superintelligent AI.

What he is proposing, with the formation of The Future of Life Institute, is that we research and build AI in a way that is maximally beneficial for the continuation of humanity. Before Max organized this group, there were no guiding principles directing the scientists who were actively building AI systems. “Smarter” and “faster” were the only words dictating their actions.

Many may still not be aware of the potential risks associated with AI. Here are two potential AI scenarios outlined on the institute’s website:

The AI is programmed to do something devastating: Autonomous weapons are artificial intelligence systems that are programmed to kill. In the hands of the wrong person, these weapons could easily cause mass casualties. Moreover, an AI arms race could inadvertently lead to an AI war that also results in mass casualties. To avoid being thwarted by the enemy, these weapons would be designed to be extremely difficult to simply “turn off,” so humans could plausibly lose control of such a situation. This risk is one that’s present even with narrow AI, but grows as levels of AI intelligence and autonomy increase.

The AI is programmed to do something beneficial, but it develops a destructive method for achieving its goal: This can happen whenever we fail to fully align the AI’s goals with ours, which is strikingly difficult. If you ask an obedient intelligent car to take you to the airport as fast as possible, it might get you there chased by helicopters and covered in vomit, doing not what you wanted but literally what you asked for. If a superintelligent system is tasked with an ambitious geoengineering project, it might wreak havoc with our ecosystem as a side effect, and view human attempts to stop it as a threat to be met. -The Future of Life Institute
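For readers who like to see an idea in code, here is a toy sketch of the airport example. It is only an illustration, not how any real self-driving system works: the `Route` class, the three routes, the `passenger_misery` scores and the `misery_weight` value are all made up for this example. The point is simply that an optimizer given only "as fast as possible" picks the reckless route, while one whose objective also counts what the passenger actually cares about does not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    minutes: float           # travel time to the airport
    passenger_misery: float  # 0 = comfortable, 10 = helicopters and vomit


# Hypothetical routes with invented numbers, purely for illustration.
ROUTES = [
    Route("legal and smooth", minutes=35, passenger_misery=0),
    Route("aggressive but legal", minutes=28, passenger_misery=3),
    Route("flat out, ignore everything", minutes=15, passenger_misery=10),
]


def literal_objective(route):
    """What we literally asked for: minimize travel time, nothing else."""
    return route.minutes


def intended_objective(route, misery_weight=5.0):
    """Closer to what we meant: fast, but not at any cost to the passenger."""
    return route.minutes + misery_weight * route.passenger_misery


def choose(routes, objective):
    """Pick the route with the lowest objective score."""
    return min(routes, key=objective)


literal_choice = choose(ROUTES, literal_objective)
intended_choice = choose(ROUTES, intended_objective)

print("Asked for 'as fast as possible':", literal_choice.name)
print("What we actually wanted:", intended_choice.name)
```

Notice that the hard part is hidden inside `intended_objective`: someone has to think of every cost that matters and weigh it correctly, which is exactly the alignment difficulty the quote describes.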

The Future of Life Institute researches four areas of potential existential threats: artificial intelligence, nuclear weapons, climate change and biotechnology. Elon Musk has massively supported this initiative and also helped to develop OpenAI, whose mission “is to build safe AI, and ensure AI's benefits are as widely and evenly distributed as possible.” OpenAI was created in response to the fact that the primary ones designing the AI future were the tech giants like Google and Facebook. Musk was concerned that artificial intelligence in the future would only be in the hands of the powerful few.

Here’s Elon and Max in a video interview that explores why Musk gave Max’s foundation $10 million:

What do you think the next breakthrough in AI will be? And when will it be?

Superb post, @stellabelle!

You make a strong point there when you quote: “The AI is programmed to do something beneficial, but it develops a destructive method for achieving its goal.”

A robot, or more exactly an AI, doesn't see things the same way we humans do... let me give you an example:

Well, the cause is us! So naturally AI will say that the solution to the problem is to kill all humans.

And you can take more problems like sickness and famine.

For sickness, AI can say: destroy all infected subjects.

For famine, AI can say: destroy all weak and sick subjects and there will be food again. If there is no one left to eat, the food will not get eaten... Logical, right, if you think of it that way!

We are logical beings, but we also like to say that we have feelings too, so we cannot think the way a machine does, and we try to find the best solution to a problem.

AI doesn't share our point of view, and to be honest I don't know if it ever will.

BUT in my opinion we did not lose the race; we can still evolve and improve... sometimes the turtle can defeat the rabbit.

PS: Awesome analogy there between Bieber and Vasili Arkhipov; that man is truly a hero!

I can't take credit for the analogy. It was part of Max Tegmark's presentation. But his presentation was mind-blowing and it cleared up a lot of my questions. I had no idea that the experts in AI are surprised by the current breakthroughs of the past 5 years. Machine learning is happening now, and most thought that we were like 10 or 15 years away from the AI advancements. That's what I didn't know about....

I am so happy that you share my enthusiasm for this subject. Here in my country it is 2:35 AM and I cannot stop reading your posts. I can't wait to see the next one!

Oh, your enthusiasm is exactly the kind of reason I decided to start writing about such topics! I am very happy that you're liking them. Another one is out today!

You knew about Arkhipov? I had no freaking idea! And my father was even an anti-nuclear weapons person and member of Physicians for Social Responsibility....you do make a good point about how machine logic doesn't take us into account, that's why Hawking and Musk are worried! Also, imagine when the weaponized AI gets so advanced that there is an entire black market of it????????

Also, the other thing few people even consider is that when superintelligence becomes recursive, meaning that it is able to improve its own intelligence, and it surpasses us in every cognitive way, we will no longer be able to relate to it at all! We will have created something which we do not even understand! This is crazy. And most of the AI scientists believe that by 2060, superintelligence will exist...

There are already robots who are babysitters in Japan.......

Dear @stellabelle, here is your article translated into Spanish:

ESO ES JODIDAMENTE INTELIGENTE: ¿Por Qué Elon Musk Donó $10 Millones Al Instituto 'The Future of Life'?

Yes, thank you very much!

So, build in the NAP.

This subject is absolutely fascinating, and I'm really glad the world's brightest minds are keeping their eyes on it. Also, thanks for the intro to Max Tegmark. That's a name I'll have to remember. The site Wait But Why did a really fascinating article on A.I. as well, for those who want to read more. Good post!

Thank you! Max was new to me too, until I started to do some research.....

Hi : ) I would like to participate in Operation Translation by translating this post into Chinese. Would that be okay with you? I would follow @papa-pepper's guidelines!