Nvidia Jetson Nano is a developer kit that consists of a SoM (System on Module) and a reference carrier board. It is primarily targeted at embedded systems that need high processing power for machine learning, machine vision and video processing applications. Nvidia is not a new player in the embedded computing market: the company has previously released other boards for machine learning applications, namely Jetson TK1, TX1, TX2 and one monster of embedded computing, Jetson AGX Xavier.

A few things are distinctly different about Jetson Nano, though. First of all, there is its impressive price tag: $99. Previously, if you wanted to buy a Jetson series board, you would need to shell out at least 600 USD for a TX2. Secondly, Nvidia is being extra user-friendly this time, with tutorials and user manuals tailored for beginners and people with little knowledge of machine learning and embedded systems. This is especially evident to me, as a person who used a Jetson TX1 in 2017 to develop a companion robot. At that time, it was almost impossible to develop anything significant on that board without

1) the help of an embedded systems engineer

2) spending hours on Stack Overflow and NVIDIA forums, trying to narrow down the source of the problem.

It seems that things are going to be different this time :) At least Nvidia is making an effort to make it so. This board signifies the company's push to bring AI-on-the-edge to the masses, the same way Raspberry Pi did with embedded computing.

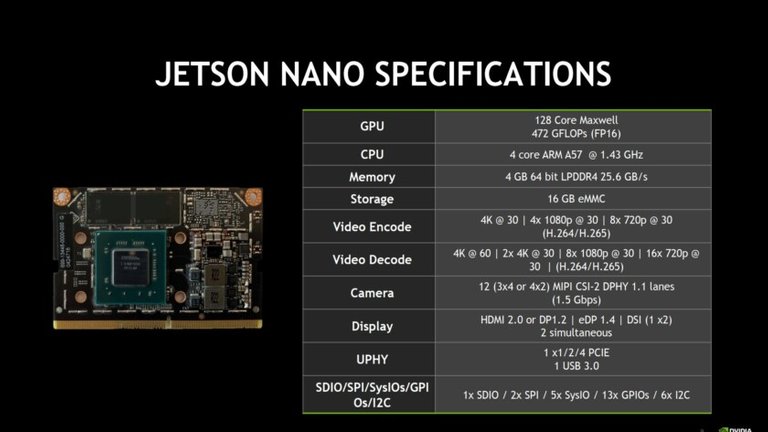

Now let's have a good look at what's under the hood of Jetson Nano.

The system is built around a 128 CUDA core Maxwell GPU and a quad-core ARM A57 CPU running at 1.43 GHz, coupled with 4GB of LPDDR4 memory. While the ARM A57 CPU is quite fast and 4GB of memory also sounds quite generous for a $99 board, the cornerstone of the board's machine learning acceleration capabilities is its GPU. GPUs, with their highly parallel architecture and high memory bandwidth, are much more suitable than CPUs for training and inference. If the phrase "128 CUDA core Maxwell GPU" doesn't make much sense to you, you can think of CUDA cores roughly the same way as you think of CPU cores: the more of them there are, the more operations can be done simultaneously.

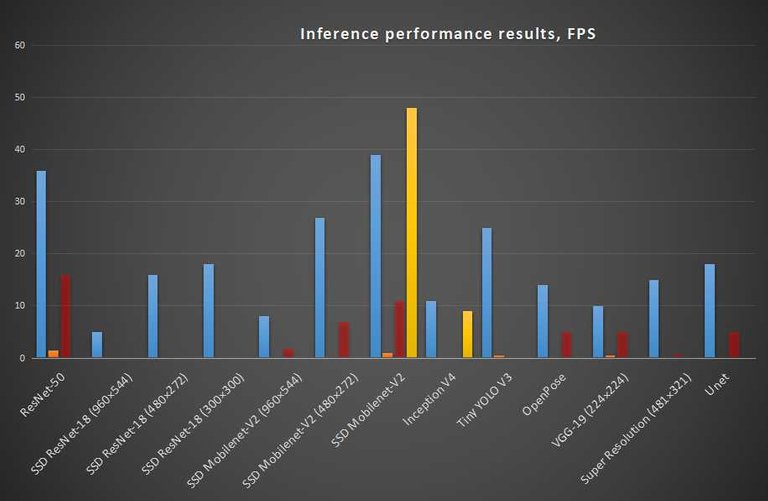

Nvidia claims the Jetson Nano GPU has a processing power of 472 GFLOPS (giga floating point operations per second). While it is an interesting number, it is unfortunately more useful for comparing the Nano to other Nvidia products than for comparing it to its direct competitors, since multiple other factors are involved in determining the actual inference speed. A better benchmark would be a direct comparison of the boards running popular machine learning models. Here's one from NVIDIA's website.

Jetson Nano is the blue bar, Raspberry Pi 3 is the orange bar, Raspberry Pi 3 + Intel Neural Compute Stick 2 is the red bar and the Google Edge TPU Coral board is the yellow bar.

As we can see, Jetson Nano clearly has the edge over Raspberry Pi 3 + Intel Neural Compute Stick 2. As for the comparison with the Coral board, it's too early to say, since many benchmarks for it are not available yet.
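For context on the 472 GFLOPS figure quoted earlier, here is a back-of-the-envelope sketch of where such a number can come from. It assumes a maximum GPU clock of 921.6 MHz, counts a fused multiply-add as two operations, and assumes each CUDA core handles two half-precision (FP16) values per cycle. These values are my assumptions and are not stated in this article, so treat the result as an illustration rather than an official derivation.

```python
# Rough peak-throughput estimate for the Jetson Nano GPU.
CUDA_CORES = 128         # from the spec discussed above
GPU_CLOCK_GHZ = 0.9216   # assumption: maximum GPU clock of 921.6 MHz
FLOPS_PER_FMA = 2        # a fused multiply-add counts as two operations
FP16_PER_CORE = 2        # assumption: two FP16 values per core per cycle


def peak_gflops(cores, clock_ghz, values_per_core=1):
    """Theoretical peak in GFLOPS: cores x clock x ops per cycle."""
    return cores * clock_ghz * FLOPS_PER_FMA * values_per_core


fp32 = peak_gflops(CUDA_CORES, GPU_CLOCK_GHZ)
fp16 = peak_gflops(CUDA_CORES, GPU_CLOCK_GHZ, FP16_PER_CORE)

print(f"FP32 peak: {fp32:.1f} GFLOPS")
print(f"FP16 peak: {fp16:.1f} GFLOPS")
```

Under these assumptions the headline number corresponds to half-precision math, which is one more reason it says little about how a particular model will actually perform.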

Jetson Nano is NVIDIA's take on an "AI embedded platform for the masses". It has all the characteristics and the price tag necessary to achieve success. Time will tell how it performs against its direct competitors.

Hardware overview follow-up

Detailed video review of Jetson Nano hardware

Nvidia has a detailed description of the pins and interfaces of the example carrier board in this manual.

There are a couple of things that need to be emphasized:

- Although no fan is included, you really want to consider buying one separately, since the board gets noticeably hot during operation, especially under load.

- Support for WiFi dongles is lacking for now. I tested three different ones, and two of them didn't have drivers for Jetson Nano's architecture.

- Officially, HDMI-to-VGA adapters are not supported, but some models work. Since there's no list of adapters known to work, it is advisable to have a screen that supports HDMI input.

- There are 4 ways to power the board: Micro USB (5V, 2A), DC barrel jack (5V, 4A), GPIO and PoE (Power over Ethernet). There are jumpers on the board to switch it from the default Micro USB power mode to DC barrel jack or PoE.
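If you want to keep an eye on the heat mentioned in the first point, one option is to read the temperature sensors that Linux exposes through sysfs. The sketch below assumes the standard `/sys/class/thermal/thermal_zone*` layout, with temperatures reported in thousandths of a degree Celsius. Which zones exist and what they are called on the Nano is something I am not asserting here, so check the output on your own board.

```python
from pathlib import Path


def read_temperatures(base="/sys/class/thermal"):
    """Return a list of (zone_type, degrees_celsius) for each thermal zone."""
    readings = []
    for zone in sorted(Path(base).glob("thermal_zone*")):
        try:
            name = (zone / "type").read_text().strip()
            millidegrees = int((zone / "temp").read_text().strip())
        except (OSError, ValueError):
            # Some zones cannot be read or report no valid value; skip them.
            continue
        readings.append((name, millidegrees / 1000.0))
    return readings


if __name__ == "__main__":
    for name, celsius in read_temperatures():
        print(f"{name}: {celsius:.1f} C")
```

Running it once at idle and once while a demo is running gives a rough idea of whether a fan is worth it for your workload.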

Software review follow-up:

Detailed video review of first boot preparation and demos

It was surprisingly difficult to find and run the demos that the default image comes with. Since the target group for Jetson Nano is people with beginner to intermediate knowledge of Linux, I would say it should be made easier to run and (possibly) build upon the different demos that come with the image.

But anyways, as of now, you can find the following demos:

CUDA samples in /usr/local/cuda-10.0/samples: particle and smoke simulations, etc.

Copy this folder to your home folder, then go to the directory of the sample you want to run and build it with the make command. Then run it with ./name_of_the_executable

VisionWorks samples in /usr/share/visionworks/sources/demos: video processing, motion estimation, object tracking and so on

Copy this folder to your home folder, then go to the demos directory and run the make command. The executables will be built together and placed in ~/sources/bin/aarch64/linux/release
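The copy-then-build steps above can be sketched as a small script. This is only an illustration: the destination folder `~/cuda-samples` and the `5_Simulations/particles` sub-directory are my assumptions, so substitute whichever sample you actually want to build.

```python
import shutil
import subprocess
from pathlib import Path


def copy_samples(source, destination):
    """Copy a read-only samples tree to a writable folder and return its path."""
    destination = Path(destination).expanduser()
    if not destination.exists():
        shutil.copytree(source, destination)
    return destination


def build(directory):
    """Run make in the given directory; raises CalledProcessError on failure."""
    subprocess.run(["make"], cwd=directory, check=True)


if __name__ == "__main__":
    source = Path("/usr/local/cuda-10.0/samples")  # path from the text above
    if source.is_dir():
        samples = copy_samples(source, "~/cuda-samples")  # hypothetical destination
        # Assumption: the particle demo lives in this sub-directory.
        build(samples / "5_Simulations" / "particles")
    else:
        print(f"{source} not found; are you running this on the Jetson Nano?")
```

The same two helpers work for the VisionWorks demos: point `copy_samples` at the VisionWorks sources folder and call `build` on the copied demos directory.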

There are also TensorRT demos, which are machine learning focused. I left them out of the video because most of them are executed in the command line and are not really interesting to watch.

I am currently using the JetBot framework to build a fun project with Jetson Nano, a Deep Learning Quadruped robot!

Add me on LinkedIn if you have any questions and subscribe to my YouTube channel to get notified about more interesting projects involving machine learning and robotics.