May 2019. Every Saturday, Paris is engulfed in chaos due to the Yellow Vests protests. Social tension keeps growing, and people demand the resignation of Macron and his government. Suddenly, a video clip spreads across social media in a flash. Emmanuel Macron speaks slowly to the camera:

I can no longer tolerate the chaos and destruction of my country. From today, the streets will be patrolled by the army. They will open fire on everyone who participates in protests against France.

Those words are the last straw - France slides into a vicious civil war.

Fortunately, this is a fictional scenario - Macron would never publish such a message. However, it could quickly become reality with deepfake - a technology that makes it possible to put any words into the mouth of any person, including politicians.

Like surprisingly many new technologies, deepfake had its beginnings in the pornography industry. In 2017, an anonymous Reddit user published a porn video with the "pasted" face of a Hollywood actress. This caused a sensation in the world of technology - many people started to develop their own artificial intelligence models built on the deepfake technique.

President Trump is a total and complete dipshit

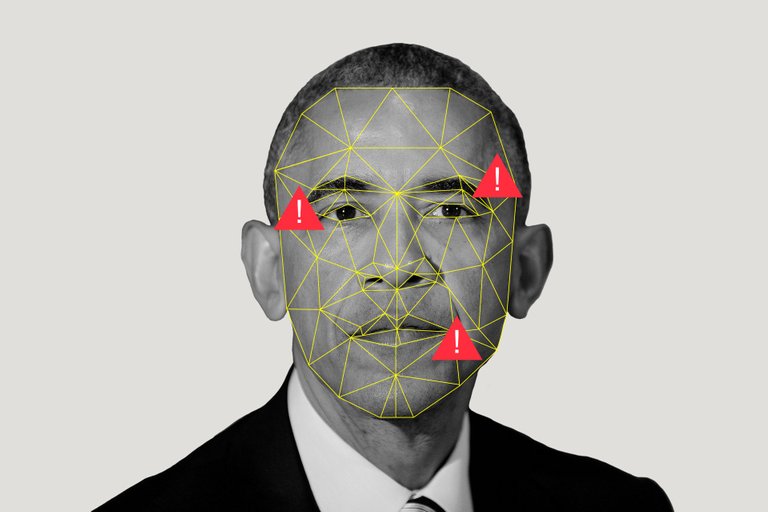

This speech by Barack Obama seems real, except for the words he is saying. In fact, it is a performance by an actor who uses deepfake technology to transfer his own mimicry and facial expressions onto Obama's face.

This may look like a harmless joke, but the United States Department of Defense treats the problem very seriously. Recently, it started intensive research on detecting deepfakes in order to prevent manipulation in the upcoming elections.

Revolution on the big screen

The film industry has also understood the importance and potential of deepfake. Princess Leia's face from the first episodes of Star Wars was scanned and analyzed by artificial intelligence. The model was then fitted onto the face of a double, imitating the actress's appearance from 40 years ago. If you take a closer look, the trick is still slightly visible. However, deepfake improves really quickly, so in a few years we may not be able to spot the difference.

We can no longer trust our eyes

Political campaigns have proved that materials containing falsified data or content (aka fake news) can have a massive impact on public opinion and the political situation. They can spread incredibly fast on social media, infecting millions of people with socially dangerous information.

We absolutely believe our eyes, because doing so helped us survive for thousands of years. When the camera was invented, it quickly became our primary source of information about reality. However, some people already question events like the moon landing, despite clear video evidence. If deepfakes become part of our reality, making people believe they cannot trust their eyes, the problem of conspiracy theories and misinformation will get worse. Even the biggest and most trusted institutions may decide on slight manipulations, which will be really hard to detect.

Deepfake may also have an impact on each of us individually. Assume you apply for a new job, and someone posts a video of you insulting the company. Today, in order to create such a video, artificial intelligence would need to analyze hundreds of pictures of you, but with technological progress it will become much more efficient. Therefore, we need to be really careful about the photos and data we post on social media, as we cannot predict for what purpose they will be used.

Share your view!

Do you think that deepfake poses a real threat to social structures? Maybe researchers will find an effective way to detect it or public opinion will simply not believe in abstract news? I would be happy to hear your opinion!

The technology you mention,

in its original form, was probably already being applied to animation more than ten years ago,

especially in movies, cartoons, and TV series.

Even with that old technology, looking with my own eyes, I already couldn't tell whether something was real or fake.

And with the latest deepfake technology you mention, even professional computer tools cannot tell real from fake.

For the public, there is actually not much difference.

Because :

Some people are easy to deceive; they readily believe media coverage.

Some are hard to deceive; they don't believe what the media report.

Only a live broadcast will make many people believe.

Thank you for your reply dear @cloudblade

You are right, in terms of the movie industry this technology opens up amazing possibilities. However, I'm curious if actors feel threatened, as they may be replaced by AI in the future.

I get your point, but there is a significant problem. If computers become powerful enough to run deepfake AI in real time, they will be able to manipulate live broadcasts. There is always some delay between the captured reality and your computer monitor (about 2 seconds) and this time will be sufficient to run deepfake.

Quote neavvy :

You are right, in terms of the movie industry this technology opens up amazing possibilities. However, I'm curious if actors feel threatened, as they may be replaced by AI in the future.

my thoughts:

I don't think so, I have seen the actors' performances~~~

The director must still hire an actor. After the actor finishes, the technique is used to modify the appearance of the performance. So the actor is still required to perform; only afterwards can the appearance be modified. Those actors are still needed, and the technology becomes an additional expense...

If any actor is threatened, it is because he was not invited to take part and was replaced by another actor. It is not AI that replaced him; it is another character actor.

Friend @neavvy, thank you for this super great information. I'll tell you something: I'm passionate about the greatest futuristic visionary (that's my personal opinion, which I share here), the great Jules Verne. I think many of his visions of the future, perhaps all of them, are already a reality.

A big greeting from Venezuela.

Thank you for your comment dear @lanzjoseg!

Yes... I also love the visions of Stanisław Lem; he was pretty accurate in his view of the future.

Deep fakes will be used to incite violence, and probably have. The situation with the yellow vests is quite possible. I would imagine it would be the reverse though; the establishment will use a video of a rebel leader using deep fake tech to justify their violence against others. I started hearing about this last summer, it’s interesting stuff.

I think blockchain can save us in this instance though; videos from original posters uploaded to the blockchain from verified and known accounts. It wouldn’t be able to be modified so that’s the real one. If it’s not posted in that manner then it’s fake. Just a thought of a way to combat it.
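The idea above, publishing a fingerprint of the original video from a known account and treating anything that does not match as suspect, can be sketched roughly as follows. This is only a minimal illustration: the `VideoRegistry` class and the account name are hypothetical, and a plain in-memory list stands in for a real blockchain, which would add the tamper resistance this sketch lacks.

```python
import hashlib


class VideoRegistry:
    """Hypothetical append-only record of video fingerprints (stand-in for a blockchain)."""

    def __init__(self):
        # Each entry is (account, SHA-256 hex digest); entries are only ever appended.
        self._entries = []

    def register(self, account: str, video_bytes: bytes) -> str:
        """Record the fingerprint of a video published by a known account."""
        digest = hashlib.sha256(video_bytes).hexdigest()
        self._entries.append((account, digest))
        return digest

    def is_registered(self, account: str, video_bytes: bytes) -> bool:
        """Return True only if exactly these bytes were registered by this account."""
        digest = hashlib.sha256(video_bytes).hexdigest()
        return (account, digest) in self._entries


registry = VideoRegistry()
original = b"original video bytes"  # placeholder for the real file contents
registry.register("verified_account", original)
```

Note that a byte-level hash changes whenever a video is re-encoded or trimmed, so an honest re-upload would also fail this check; that is a limitation of the sketch, not something addressed in the comment above.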

Posted using Partiko iOS

Thank you for your reply @cmplxty. I am really amazed by the fact that you are so responsive.

That's also a really interesting scenario.

Interesting point. Don't you think that people in blockchain lack trust? There is no "strong" authority here that would bring some sort of control, so many people are really careful. After all, there are a lot of scams and frauds in blockchain.

Hi @cmplxty,

A fantastic comment. Really, you've given a simple solution to a really complex problem @cmplxty.

Posted using Partiko Android

I think I watched that years ago. It was, and still is, shocking information. People would rather not even think about it, because it would hurt too much to consider how many times they have been fooled by the "news".

Thank you for your comment @worldfinances

Yes, sometimes it's better not to think about that :)

@neavvy, without any doubt this kind of technology is a threat. And we have to understand that excessive technological adoption is not good for human beings; in particular, it will hurt privacy. Stay blessed.

Thank you for your constant support @chireerocks. I really appreciate that :)

Yes, this is crucial :)

Welcome and that's true. Have a productive time ahead. Stay blessed.

Hi @neavvy,

Right from the day #1, all of the blogposts sent by you are very informative and interesting and this needs no special mention!

As usual, the problem lies with people's mindset. This is the bitter truth, and hardcore criminals are trying to cash in on this pessimism.

Generally speaking, when someone speaks about a person's good qualities, we are hardly interested. But when someone speaks ill of another person, we all listen carefully! As @chekohler said, we do not do the much-needed research to ascertain the facts before coming to a conclusion.

Why this partiality? We always love to hear the best about ourselves and the worst about others. This ill-minded attitude is the one and only reason for the creation and existence of all the problems all over the world.

But we aren't ready to accept the truth when someone exposes our real face in front of millions and millions of people all over the world. It is okay for others, but not for us. So our mindset is biased.

For example, a deep(shit)fake video circulated in WhatsApp showing a morphed video of a minority (caste) member violently injuring a person of another caste and this ignited a bloody trail of communal violence. This was wantonly done by some miscreants who perpetrated the same causing communal unrest.

Be it slightly accurate video or a video as a result of latest technological advancement like this deep(shit)fake, the problem is the same.

So, if our minds are filled with impartial love, this Superior Quality will spread all over the world and so let's rectify our minds FIRST and then think about nullifying the side effects of this technological advancement LATER!

What an amazing reply @marvyinnovation :)

It's a pleasure to read comments like the one you wrote. Steemit definitely needs more people like you.

ps. would you believe that @neavvy is only 17 years old? I couldn't believe it until we finally met a while ago.

Cheers, Piotr

Thank you for your kind words, @crypto.piotr...... 🙂

Thank you for such an amazing comment dear @marvyinnovation! I love the fact you are so responsive :)

Thank you. I am really happy to hear that.

Yes, I fully agree with this statement.

I think that's the correct approach. We need to be aware that technology (including deep(shit)fake :) ) can't be good or bad itself. It's all about what we, humans, do with those tools. So if we want to make technology more secure and harmless, we need to start from ourselves.

Thank you @neavvy for your kind words.

Dear, very good information you share with us.

Wooo friend, the growth of technology is surpassing us. To be honest, this is the first time I have heard about this, but I can say that all this technology has its risks. I imagine that over time people will get used to it and will be able to detect when the news is true and when it is false.

Thanks for sharing this information.

Pr EV

Thank you for your constant support @fucho80

I am not sure if it will be possible, but I hope so :)

The implementation and scope of AI can be scary at times. Imagine Trump declaring a missile launch on Russia? End of the world for sure.... This is the not-so-great side.

It is definitely good for movies, animation and related fields. Can you imagine doctors studying facial muscles for different pronunciations? It could help in developing speech in mute children.. These are some of the obvious benefits. I hope the positive aspects grow more than the negative ones.

Thank you for your reply dear @oivas

That's a frightening vision.

I hope so too :)

The solution to this problem isn't really that difficult, except that it will be difficult for authority to accept because to solve this problem requires them relinquishing control. The lesson here is that the position of president is obsolete. It is based upon trust. But with Bitcoin we have, "don't trust, verify".

To the extent that we all have copyright control over our own "image", such replications beyond this is a violation of copyright, but how could this be enforced? Simply by not trusting anything and using verification instead. If something isn't signed with the correct hash / signature, then it simply isn't valid. We've been doing this in the world of PGP for nearly 3 decades now.
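The "don't trust, verify" rule described above boils down to a simple accept-or-reject check. The sketch below is only a rough illustration under stated assumptions: PGP uses public-key signatures, which Python's standard library does not provide, so a keyed hash (HMAC) with a shared secret is swapped in here purely to show the verification logic. The key, the `sign` and `is_valid` helpers, and the sample statement are all hypothetical.

```python
import hashlib
import hmac

# Assumption: a shared secret stands in for the signer's private key.
# Real PGP signing uses a private/public key pair instead.
SECRET_KEY = b"hypothetical-shared-key"


def sign(message: bytes, key: bytes = SECRET_KEY) -> str:
    """Produce a keyed SHA-256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def is_valid(message: bytes, signature: str, key: bytes = SECRET_KEY) -> bool:
    """Accept the message only if the tag matches; otherwise it simply isn't valid."""
    expected = sign(message, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)


statement = b"Official statement"
tag = sign(statement)
```

With a real public-key scheme, anyone could run the equivalent of `is_valid` using only the signer's public key, which is what makes the approach workable for public figures.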

Also, it's time for people to get over rage based social media militarized propaganda techniques. These people are going to start looking very stupid in the near future.

The best answer is to localize your business. Globalization just magnifies the problems (but makes politicians / parasites very rich). The vote is just an excuse to globalize problems. There's no reason why someone casting a vote thousands of miles away should have any impact whatsoever in my house.

The ideal range of anyone's voting power should not average much more than about 5 acres. Anything beyond that is meddling in other people's business. To the extent that there are issues that require global attention (such as nuclear power), they should be governed by consensus algorithms, singled out from congressional bills, and voted on individually (the mass packaging of bills is just another political trick to avoid accountability and make parasites very rich).

Dear @zoidsoft

One of the best comments I've read on this topic so far. Seriously, thanks for sharing your view with us.

I'm not sure if I understand you well. Does it mean that if I migrated for some period of time in order to look for a better life, then I shouldn't be allowed to vote?

Have a great upcoming week buddy

Yours, Piotr

No. It means that some things shouldn't be voted on at all but are a matter of sovereignty. Should I be able to vote on what you're allowed to do in your bedroom? How about all of us voting on how much of a raise we give to ourselves from your wallet? (this is theft, but we call it taxes instead) The brainwashing of society is severe and as a result, humanity consents to the insane.

Thank you for your kind comment @zoidsoft and solid explanation

Appreciate it a lot.

Yours

Piotr

Thank you for this amazing comment @zoidsoft! I agree with Piotr, this is indeed one of the best I've read in this thread.

Interesting conclusion. I generally agree with the concept of living locally, but don't you think that globalization is somehow unavoidable nowadays? Localized business means smaller "range" and smaller profit.

Yes, I got your point. Absolutely agree.

Such a dispersed democracy is a really interesting concept. I would like to see it in reality, but I'm afraid that it will never be implemented - our governments are too centralized.

Thank you again for your insights and have a nice day :)

The internet is global, so is the air supply. That doesn't mean that I breathe in air from the whole planet at the same time. Accessing the internet is a voluntary act and therefore under your control. The disease is coercion and use of force. Some think that they have a right to dictate to you what you're allowed to see and think. These are the rising technocrats. Mr. "Don't be Evil" himself said this...

In other words, give them all your data so that they can tailor search results to what you expect. This is what I have referred to as a "digital thought ghetto" in my blog. Giving this kind of power to any centralized entity will produce extreme corruption. They can manipulate your emotions, get you to buy anything, vote for anything. Imagine giving this kind of power to Rev. Jim Jones? What could go wrong?

The result is that people won't grow and this is already happening in the social media echo chambers. Google isn't the only one doing this. Facebook, Twitter, Amazon, Microsoft, Apple, etc are all getting in on this.

Government has recognized the importance of this and wants to "regulate" it, not for your protection, but for their control over you.

Test... Have you heard of the "Yellow Vests" (Les gilets jaunes)? If not, why is that? Who is trying to filter that information out of your conscious awareness? Why might they want to do that? This is "soft fascism".

Localized government means smaller parasites. Global business is OK as long as it "does no harm". Anything that could affect someone else negatively should be held under a consensus algorithm. Spreading joy globally is not an issue, unless you're a government curmudgeon invested in Raytheon or Northrop Grumman (military contractors) who makes money on spreading war.

Greetings @neavvy, great article. A few days ago I was reading about precisely this. What draws a lot of attention is where the use of this technology can be focused, because, as with everything, it has its good side and its bad side. It must be emphasized that, just as many look for the good, there are those who look for the evil, sometimes harming or distorting what this technology was really created for and, so to speak, dirtying the face of what could perhaps be something good. Suppose there is a very famous artist with a song that fascinates you. With this technology, even though the artist is deceased, a new music video could be created, and with all the technology at our disposal it would probably have brutal quality compared to the original. That is not wrong. But, as I said before, there is something wrong in the case you raised about Macron's supposed message ... Socially, this can affect us more or less depending on the position we occupy. But what if it becomes something so easy to produce that anyone around us could do it? At the rate we are going, technology is being perfected to the point of becoming simpler to use, and in some cases available to everyone. I think that detecting when those messages are false is going to take a lot of work, since we would have to develop an artificial intelligence exclusively responsible for it, or adapt, by means of reengineering, the program already used to create them.

Thank you for such an amazing reply @jjqf

I agree 100%, this is an ultimate truth that always needs to be considered, especially in terms of technology development.

AI detecting deepfake is a really interesting concept, but I'm curious about its effectiveness. It would probably work like computer viruses - they are always one step ahead of antivirus software.

As such, I share your opinion @neavvy, and that is how it will be, but with time we hope we can make it work as well as possible and avoid misunderstandings.

Definitely :) I think we will be able to do that at least on some level.

What an amazing reply @jjqf :)

It's a pleasure to read comments like the one you wrote. Steemit definitely needs more people like you.

ps.

Would you perhaps consider using "enter" from time to time? To separate blocks of texts? It would make it much easier to read.

Cheers, Piotr

Thanks @crypto.piotr for considering my comment as such; for me it is a compliment coming from someone like you. Yes, with regard to the organization, I know. As they would say here, "mala mia", which is similar to "my mistake".

Dear @jjqf

Stop it .... I'm already blushing :)

Cheers, Piotr

Somehow I feel shocked, but I know it's true and I know it is, just like fake news, a real threat. It will not change the social structure; that already happened years ago.

No matter how smart people claim to be, they still believe gossip and only a few try to find out the truth.

We love it if someone is accused of...

We simply don't care about the truth.

We have been seeing it in politics for years (ages). We all know politicians lie and do not care about us, yet we still vote for them.

And when it comes to Macron: he will say this sooner or later, and I hope the French will not be as stupid as the Dutch, who let themselves be scolded by their prime minister time and again.

Indeed @wakeupkitty - it is a real threat, and no one seems to know how to protect themselves

Thank you for amazing (as always) reply @wakeupkitty

Yes, this is a very strange situation. But do we have any better choice? I guess that's the best social structure, at least for now. I think that people generally tend to choose the politicians they consider the most harmless, which is really sad.

No, people choose what they are used to. Most are afraid of change. It is the same as we see in so many misogynistic relationships... the victim finds it easier/better to be beaten up by the partner than to start a new life alone, which takes courage.

That's also true. Changes are the least desired thing in our society.

Your memo about deepfake got me here.

Hi @neavvy, thanks for the memo. Wow, this is so alarming, right!

Now let us take a few steps back and try to see things more objectively.

Yes, all the cases you bring forth point towards the possibility of technology being abused to create fake videos and news.

However, consider the invention of Photoshop, which led to the ability to produce doctored images. Soon experts emerged who helped us distinguish the originals from the doctored or photoshopped ones.

Now let's look into the future and try to find the solution.

With the birth of tech that could abuse the system by planting false news, I am sure that with automation and AI we will be able to create a bot army that can identify and block any fake news created

indeed @thetimetravelerz. It is very alarming and bloody scary :/

Caramba! I am terrified by this information. I have feared this subject of AI because human intelligence sometimes exceeds limits. In my view, these actions can pose a great social danger. With these simulations, we citizens can become distrustful, and uncertainty will grow greater and worse every day. AI should deal with less eccentric things than these. I think there are more important things to do than falsifying information through these simulators. Greetings, and thank you for the invitation.

This sounds very terrible! Such kinds of technologies can harm us very badly. Everything can be manipulated and it can trigger a state of chaos in which people will die in a state of frustration and lack of trust.

Thank you for your constant support dear @akdx. It's always pleasure to read your comments :)

Yes, this is extremely dangerous.

You have already cited a few examples of how this technology poses a threat to humanity.

As it is, fake news spreads faster than real news, and I think scientists need to come up with an easy and effective way to detect this technology ASAP in order to counter the spread of false information in cases where it has been used.

As Elon Musk reportedly once said, "All these AI technologies that we are using and implementing will end up killing us one day"

I think we are heading to that direction.

Indeed @akomoajong, fake news spreads faster than real news.

How scary and amazing is it ....

Cheers, Piotr

Thank you for your reply dear @akomoajong :)

I'm not sure if killing is a proper word, but definitely they pose a real threat and we need to be aware of it. And stay extremely careful :)

Hi, neavvy

Really, if it is so easy to deceive the human eye, a well-made deepfake can confuse those who are not very attentive to a person's physical features when that person is issuing a statement or giving orders. Some people watch the video and pay attention to what is being said, but there are also people who only listen and do not watch the video or pay attention to these things.

With regard to public opinion, if those who provide the news verify their sources carefully, there should be no problem; otherwise everything ends up as false news or serves only to create controversy. So it is good to verify information before sharing it in the media.

I used Google translator

I am really grateful for your reply dear @suanky

Yes, this is definitely a great practice. And we, as individuals, need to check every source of information in order to prevent social misinformation.

Wow! This technology is a real pain in the ass (pardon my French). It can cause anything from a global to an individual catastrophe.

You could manipulate entire communities. Imagine if they do that in my country? And they start sending videos of the rulers to start a civil war. As things stand today, a small match would start a nationwide fire.

Of course, wars could also be stopped using the same technology. We'll see where it will lean.

Thanks for sharing my dear @neavvy

Thank you for your comment dear @jadams2k

No problem buddy :D

That's definitely right. I suppose fake news is incredibly common and dangerous in your country. Politicians especially are trying to make people as misinformed as possible. This is really sad.

That's sadly right, my friend

Hi @neavvy,

The AI Foundation is working on a web browser plugin called "Reality Defender" that will flag potentially fake images and videos automatically.

You can read about it here: http://www.aifoundation.com/responsibility

Hi @devann,

It's really great news. But those cybercriminals are acting a little bit smarter than us.

If we find a solution for a problem of some magnitude, then those fellows will come out with innovative thoughts and pose a threat that is more advanced than the magnitude of the problems we are facing now!

You are quite right, my dear @marvyinnovation.

Thank you for your reply and constant support dear @devann. This seems to be an interesting solution, but I'm curious whether it will be effective enough. It will probably work like computer viruses - they are always one step ahead of antivirus software.

I'm glad to see "deep fake" enter the private sector. State agencies have had a monopoly on deception for too long.

Now that it's entering the public space, people will finally realize that trusting only what you can experience directly first hand is the ONLY way we'll right the wrongs of the globalists and their puppets.

People still believe what they see on the TV... and that needs to be corrected, one "woke" individual reclaiming his/her senses at a time.

Hi @jbgarrison72

Are you really glad that deep fake is more accessible right now? I'm not sure if I share same optimism.

Again, I wouldn't be so optimistic about people realizing those things. I strongly believe that majority of the population will not come to any realization. They will continue believing whatever lies they are being fed.

Cheers

Piotr

I do at least share your abhorrence for fraud of any kind @crypto.piotr . :)

Thank you for your comment dear @jbgarrison72.

Interesting point. So maybe deepfake will have a positive impact, as people will stop believing television, which always tries to manipulate us somehow. Maybe it's time for decentralized sources of information.

Decentralization of control is critical for a free market... "of the senses" ...to operate and provide the best possible outcome.

I think we have here a clear relationship with the controversial facial recognition systems that are intended to be installed in commercial establishments and streets in certain cities.

This initiative has found a lot of detractors.

At first I could only relate to the idea of the invasion of personal privacy. But with this publication of yours I could see that the consequences can be catastrophic.

The problem is not the technology itself, the problem is man.

Thank you for your comment @juanmolina. I really appreciate that.

Definitely. Did you know that some of these systems can even recognize a person by their "style" of walking?

That's generally a crucial point. AI itself can't be good or bad (the Terminator vision is really unlikely). It's rather all about what humans do with this technology.

Totally and absolutely agree with you, brother.

Hi @neavvy. This is a real threat because it causes disinformation.

Thank you for your comment @danielfs

Yes, we definitely need to be aware of this technology.

Hello :)

To be honest, with or without technology's help, I already can't trust most of communications seen and heard through screens.

We're in an era in which the corporate way to talk - you know : that weird inner-company-management language for which getting the last word is more important than being right - has already perverted most of everyday communications.

Nowadays, the word "truth" could as well mean "lie" or "bacon"... well : any meaning that would be convenient to people willing to spread their message.

AI and deepfake technologies would just be a few more steps forward on a path we have already been following for a long time.

Lack of sourcing, lack of transparency, perverted information and wild use of neuro-science tricks are already deep threats to a smart and clear public opinion. I don't think more tools added to this list would change anything.

At the opposite, when sources of information are excavated and help translating the fake language, well... some people start to wear yellow jackets and protest against this.

It doesn't take long though, before a bit of the already mentioned ingredients easily transform 70 year old grannies into violent agents of chaos and allows the use of skull-breaking, eyes-exploding, members-shredding "non lethal weapons."

There's no need to fear AI and deepfake technologies as tools for manipulating public opinion : everything is already deeply rooted for this.

Dear @berien

Amazing comment. One of those worth reading. Steemit definitely needs more people like you.

Lack of TRUST is becoming a very serious issue in current age.

Yours

Piotr

Deepfakes are one of our newest arms races. A university professor has developed software to identify video deepfakes by using a target's true videos to identify expressions associated with different kinds of speech. Deepfakes reveal themselves by mismatches. However, deepfakers are aware of this and adapting. This is the beginning of a race of measures and countermeasures.

As for pictures, seeing is not believing. As people become aware of fakery, they won't accept at face value. This is hardly new, witness the famous picture from which Stalin had Trotsky excised. The big problem now is political polarization that has removed constraints on confirmation bias. Now, truth is often what people want it to be, like Trump.

Dear @rufusfirefly

Amazing comment. One of those worth reading. Steemit definitely needs more people like you.

Interesting. I never heard anyone who would put it this way.

Yours

Piotr

Thank you for your comment @rufusfirefly

Yes, I am afraid that they will always be a step ahead of technologies trying to prevent them.

That's frightening, but I must agree on this point.

This is a great concern because there will be great confusion. We are all gullible, and even now, with fake news spreading so fast, we are easily deceived because this fake news affirms the things that we want to believe. We want to believe what we want to believe.

Thank you for your amazing comment @leeart

That's the point, I 100% agree.

It does make one paranoid and have trust issues. I just saw another video about how Chinese women use video filters, which makes one wonder what is true and what is not.

I've known about this issue for a while; I just didn't know it is called deepfake, as you put it.

This thing didn't start today. It's been on the silver screen for a while, used in movies to mimic a person and act like the original. But the challenges you pose here never occurred to me.

I guess new age new challenges, we will surely find a way through this..

Thank you for your reply @botefarm!

That's right; however, this technology has recently started to develop rapidly. We didn't have sufficient technology to use deepfake before, but nowadays anyone can run it on a personal computer. That's why the problem is much more interesting.

I honestly don't watch a whole lot of television as it is, but it does make you wonder what really is.

Thank you for your comment @enginewitty :)

Yes, that's probably a good choice nowadays.

It's another weapon in the hands of the propagandists. Weapons know no ideology. However, since deepfakes are just computer code, producing convincing ones is within reach of almost anybody.

One solution is to have people digitally sign their communications so the sources can be challenged - but this also creates an environment of plausible deniability. A figurehead can say something controversial, not sign it, then disavow the statement if called out.

Another would be to trust the sources that vet info and pass it onto us. However, trust in the mainstream media is extremely low.

Perhaps a distributed approach would be to use a web-of-trust style system and have known notaries mark copies as authentic or not. It would be up to the public to decide which notaries they trust. We already do something similar for documents like birth certificates.
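The notary idea above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `NotaryRegistry`, `attest`, and `verdict` are hypothetical names invented here, notaries are plain strings, and a SHA-256 fingerprint stands in for "this exact copy". A real system would also need each attestation to be cryptographically signed by the notary, which this sketch leaves out.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest that identifies one exact copy of a clip."""
    return hashlib.sha256(data).hexdigest()


class NotaryRegistry:
    """Hypothetical in-memory record of which notaries vouch for which copies."""

    def __init__(self):
        # Maps a clip fingerprint to {notary name: marked authentic?}.
        self._attestations = {}

    def attest(self, notary: str, data: bytes, authentic: bool) -> None:
        """Record that a notary marked this copy as authentic or not."""
        self._attestations.setdefault(fingerprint(data), {})[notary] = authentic

    def verdict(self, data: bytes, trusted: set) -> str:
        """Summarise only the marks made by notaries this viewer trusts."""
        marks = self._attestations.get(fingerprint(data), {})
        votes = [marks[name] for name in marks if name in trusted]
        if not votes:
            return "unknown"
        if all(votes):
            return "authentic"
        if not any(votes):
            return "fake"
        return "disputed"


registry = NotaryRegistry()
original = b"raw bytes of the original clip"
doctored = b"raw bytes of a doctored clip"
registry.attest("notary_a", original, True)
registry.attest("notary_b", doctored, False)

print(registry.verdict(original, {"notary_a"}))
print(registry.verdict(doctored, {"notary_a"}))
print(registry.verdict(doctored, {"notary_a", "notary_b"}))
```

The verdict depends on the set of notaries each viewer passes in, which mirrors the point that the public decides whom to trust: the same copy can be "unknown" to one viewer and "fake" to another.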

Thank you for your comment @eturnerx

That's the frightening truth.

That's an interesting concept. But sometimes video material is not intended for the public, and journalists still manage to get hold of it, not necessarily in a legal way. Such material would definitely not carry a digital signature.

That's true, especially now that most mainstream media are aligned with a particular political stance. There are plenty of "trusted" TV stations in Poland which regularly manipulate facts.

Hi @eturnerx

Great comment buddz. One of those worth reading. Steemit definitely needs more people like you.

Would you perhaps consider using "enter" from time to time, to separate blocks of text? It would make it much easier to read.

Yours

Piotr

This technology using AI is very troublesome indeed.

First, government law enforcement agencies and their cybercrime departments should already be conducting research and developing counter-technology to detect deepfake images and videos.

Second, the public should be informed so that potential victims can be more tech savvy with their data online. Security and privacy should be thoroughly practiced by each individual in the near future.

Laws should be further improved in order to cope with emerging technologies like these, and this should start now. Government policies and the rules and regulations of companies and organizations should also keep abreast of new threats like these, both to their people and to themselves as entities.

Thank you for your reply @guruvaj.

They already do so. The US recently started intensive research on deepfakes in order to prevent abuses in the upcoming elections.

I 100% agree. Awareness of the threat must definitely grow.

Unfortunately, governments seem to be rather afraid of technology, and so they focus on eliminating it completely rather than on educating people.

Dear @neavvy,

The link you sent in the memo is not working, but I visited your page anyway hoping that I am in the "right post." If not, then just resend/edit the memo and I will be very happy to look through it.

For me, deepfake can be a very useful and entertaining tool, especially in the movie industry, but it should not be used for any mudslinging campaign. That being said, it cannot really be avoided that as our technology becomes more developed, more and more cases will surface. It's like the cropping and editing of pictures a few years ago, when it was used as a tool to undermine and attack anyone's credibility. Today, anyone can verify if a picture is edited or not. Why not develop the same technology for deepfake?

Thank you for your reply dear @nurseanne84. I really appreciate your constant support :)

Wow, thank you for your effort.

Interesting point. Generally I think that developing AI that detects deepfakes is possible, but I'm curious about its efficiency. I suppose it would work as it does with computer viruses - they are always one step ahead of antivirus software.

I have been aware of this technology for a while, as I worked in animated film.

I believe we cannot trust our eyes, in person or in media. The visual world around us can be manipulated, and scenes can be staged in order to sway opinions one way or the other. This goes beyond just the video of someone talking. With enough people together and paid actors (*there is a company that does this) you can make a political rally look sold out, and you can pay protesters to protest good causes... So we cannot really believe that what we see is true.

It comes down to whether you take a reactive approach or a logical one. It's about looking beyond the veil and asking what's really happening here. This unfortunately takes a lot of time. Generally, by the time the truth has been revealed, the incident is well in the past, minds have been made up, and people disregard the truth. The media echo the lies and the damage has been done; counteracting this takes a long time.

Look at Trump. He lies every day, and while people call him out on these lies a few days later, his voter base sees him denying things that he has done and they believe him. It's all very sad. Counteracting this would require reprogramming the human psyche and how we approach the internal acquisition of truth.

Those are just my thoughts!

What an amazing reply @jacuzzi :)

It's a pleasure to read comments like the one you wrote. Steemit definitely needs more people like you.

Indeed. These days, the problem of TRUST is becoming the world's number one issue.

Cheers, Piotr

With deepfake, nobody can ever trust video again. Perhaps video recording should come with blockchain or some watermark technology, so that all alterations can be traced back to the original clips. Viewers would then be notified that a clip has been altered with deepfake technology.
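As a rough illustration of how alterations might be traced back to an original clip, here is a minimal hash-chain sketch in Python. It is not a blockchain and not any real product's format: `add_record`, `chain_is_intact`, and `is_known_version` are hypothetical helpers made up for this example, and the clips are stand-in byte strings.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def add_record(chain: list, clip: bytes, note: str) -> None:
    """Append a record linking this version of the clip to the previous record."""
    prev_hash = chain[-1]["record_hash"] if chain else GENESIS
    body = {"clip_hash": sha256_hex(clip), "note": note, "prev_hash": prev_hash}
    record_hash = sha256_hex(json.dumps(body, sort_keys=True).encode())
    chain.append({**body, "record_hash": record_hash})


def chain_is_intact(chain: list) -> bool:
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for record in chain:
        body = {key: record[key] for key in ("clip_hash", "note", "prev_hash")}
        expected = sha256_hex(json.dumps(body, sort_keys=True).encode())
        if record["prev_hash"] != prev_hash or record["record_hash"] != expected:
            return False
        prev_hash = record["record_hash"]
    return True


def is_known_version(chain: list, clip: bytes) -> bool:
    """True only if this exact copy was registered somewhere in the history."""
    clip_hash = sha256_hex(clip)
    return any(record["clip_hash"] == clip_hash for record in chain)


history = []
add_record(history, b"original footage", "captured by camera")
add_record(history, b"trimmed footage", "trimmed to 30 seconds")

print(chain_is_intact(history))                          # True
print(is_known_version(history, b"deepfaked footage"))   # False
```

A copy that was never registered simply shows up as unknown, so a scheme like this can flag undocumented alterations but cannot by itself say whether they were made with deepfake tools.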

Thank you for your comment @wyp.

Hopefully. Unfortunately, we are not always able to track the source of a particular clip, but maybe we will come up with some technology for detecting deepfakes.

This has been around for years.

Yes, it's some very serious shit, especially when combined with the wholly unjustified faith which law enforcement places in facial recognition technologies. On Wednesday the authorities here in Argentina brought online a facial recognition system tied to HD cameras surveilling the streets of Buenos Aires. And the claimed accuracy of the system is only 92%, which means the real-world accuracy is probably down around 50%, in other words completely unreliable.
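One way to see why a headline accuracy figure can be misleading on a city street is a quick base-rate calculation. The sketch below rests on assumptions that are not in the comment: that "92%" means the system flags 92% of wanted people and wrongly flags 8% of everyone else, and that 1 in 1,000 passers-by is actually wanted. The function name is made up for the example, and the result is not a measurement of the Buenos Aires system.

```python
def share_of_alerts_that_are_correct(hit_rate, false_alarm_rate, prevalence):
    """Fraction of all alerts that point at a genuinely wanted person."""
    true_alerts = hit_rate * prevalence
    false_alerts = false_alarm_rate * (1 - prevalence)
    return true_alerts / (true_alerts + false_alerts)


# Assumed figures: 92% hit rate, 8% false alarms, 1 wanted person per 1,000.
street = share_of_alerts_that_are_correct(0.92, 0.08, 0.001)
print(f"{street:.1%} of alerts are correct")
```

Under these assumed numbers only about 1 alert in 100 would be correct, because the many innocent passers-by generate far more false alarms than the few wanted people generate true ones.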

Thank you for your reply dear @redpossum

Interesting... I've recently heard that in China they can recognize people by their way of walking if the face is hidden.

These deepfakes are highly distressing. I mean, if fake news articles are already doing so much damage, what can fake videos do? People are too lazy to do their own research, and this could cause insane cases of mistrust or misinformation.

Thank you for your comment dear @chekohler.

That's quite right, and what's worse, some people want to believe in fake news and videos. No video is even needed for thousands of people to believe in the flat earth "theory".

I think this is why the 'influencer' model will eventually die and the decentralized ('node') model will take over.

Thank you for your comment @ecoinstant.

That's an interesting point of view. Do you think that people (especially young people) will be able to do without these "virtual" leaders?

I do wonder about our transition, but there is evidence that more education increases distrust in 'authority'. I only hope this means that they grow out of it.

Fake videos began LONG before 2017. That's just when the term "deepfake" was popularized.

And yeah, no more spam message transfers, please.

Thank you for your reply @drutter

Well, Artificial Intelligence also began LONG before the 21st century. The concepts we are talking about are actually pretty old, but for a long time we didn't have sufficient technology to implement them. Obviously fake videos have a long history, but in the past they were made using, I would say, "analog" methods. Nowadays technology enables NORMAL people to perform advanced "digital" video manipulation using the resources of their personal computers! In the past deepfake was a rather theoretical concept.

As you wish.

There is so much fake news in the world already, and deepfake will make it seem much more credible, so a lot of people will be fooled by it. I really don't like this, as it will become too difficult to distinguish between the truth and a lie!!

Thank you for your comment and engagement @rynow :)

You are definitely right.

Yes, this is already a huge problem on YouTube.

I used to like watching videos of UFOs.

Some were good, some not so much.

But today you can never be sure, because the fake videos are so professional. So I no longer watch them.

So in the future, if this new technology becomes available to the general population, what and who will you believe?

Just another step towards control of the people.

We will have to find ways to verify everything we hear.

Thank you for your constant support @andyjem :)

What's scariest is that deepfake is already available to the general population. Anyone can download free software that makes it possible to create a deepfake video of virtually anyone. Fortunately, those amateur technologies are not yet advanced enough to pose a real threat, but I think it's just a matter of time. In the future it may become really dangerous.

Now I am worried 😬

@crypto.piotr has set 2.000 STEEM bounty on this post!

Dear @neavvy

Excellent choice of topic :) I'm sure you knew I would say that hahaha :)

I absolutely love how you introduced us to this topic. Seriously, you kept my attention all the way.

It shouldn't surprise us. We're entering a world of global distrust and no one seems to have any idea how to avoid it or adapt.

For that particular reason I don't even want to think about becoming a youtuber. So bloody risky.

PS. I won't be promoting your post any further. You have enough quality comments already.

Cheers,

Piotr

Thank you for your reply dear @crypto.piotr!

Haha :)

Sure. Thank you for the Bounty and engagement in comments :)

I think this kind of technology, like any other, can be used in many ways... some very harmful and others not... What is clear is that its continued development cannot be stopped, but every time a weapon has been invented, a shield has been created to defend against it, and soon something will arrive that makes deepfake obsolete. So I think the best idea here is not to be alarmed... On the contrary, look for information on this topic and similar ones, since the best way to protect yourself in cases like this is to know in advance that they exist and how they work.

Thank you for your reply @iiic-137.

I agree with you, technology itself can't be good or bad, it's all about the way that we use it.

PS. Could you maybe consider writing in English so everyone can enjoy your comments? You can also use Google Translate :)

indeed

Thank you for your feedback @yossefgamal :)

Hello @neavvy. Excellent article. We really are living in dangerous times, and the misuse of all these tools can lead many people to commit serious crimes, which is why it is important not to lightly believe everything we see or hear. A hug, my friend.

Thank you for your reply @jorcam :)

I agree with you, we definitely need to be extremely careful about what we see nowadays.

PS. Could you consider writing in English, so others will be able to enjoy your comments without using translators? :)

Nice @neavvy - people really need to be aware of this tech. Personally, I gave up movies and television 99.9% with the onset of CGI years ago (STAR WARS). Human perception is more keen than you realize, fortunately. It just might be our saving grace - or A.I. our destruction (?)

Thank you for your feedback @lanceman :)

I also gave up television; I think it's no longer relevant to my generation. What's wrong with CGI in your opinion? :)

Already seen this, but thanks for the reminder, @neavvy.

No problem @lighteye :)

👍

Thank you @good-darma :)

Dear @neavvy, of course fake news has caused a lot of trouble in our lives, but this new evolution of fake news could cause much more trouble!!

Thank you for your reply dear @intellihandling

Yes, you are unfortunately quite right.

This is really educational.

I don't know much about this, but at least I now have some insight into it.

I hope so :)

Thank you for your insights and engagement dear @abidemiademok21. Really appreciate that.

@neavvy @ziapase thanks for the information about this post!!

Thank you for your quick response @steemadi. Hope you enjoyed it :)

This use of AI is quite scary. We can no longer trust video. LOL now we will have to interact IRL to be sure🤯

Thank you for your reply dear @mytechtrail

Haha, perhaps it's a positive consequence :D