It has been a while since I published an update to my project. I started a new job as a product manager for Seeed Studio/Chaihuo Makers and moved to a new place. I kept working on my robot arm project, and although I didn't have enough time to complete it, I made great progress with computer vision and would like to share it here.

There are a few approaches you can take when trying to build an arm for pick/place of real objects in ROS.

The first is to use one package to find the object's location in the 3D world and then use the MoveIt pick and place pipeline to generate and execute a valid grasp.

The second is an end-to-end system that takes a depth image or point cloud and outputs a robot pose for the grasp.

I ultimately decided to go with the second approach. Some quick research turned up two suitable projects, both in the research stage:

1) Berkeley AUTOLAB's GQ-CNN (Grasp Quality Convolutional Neural Networks) (TensorFlow)

https://github.com/BerkeleyAutomation/gqcnn

2) Andreas ten Pas's Grasp Pose Detection (GPD) package (Caffe)

https://github.com/atenpas/gpd

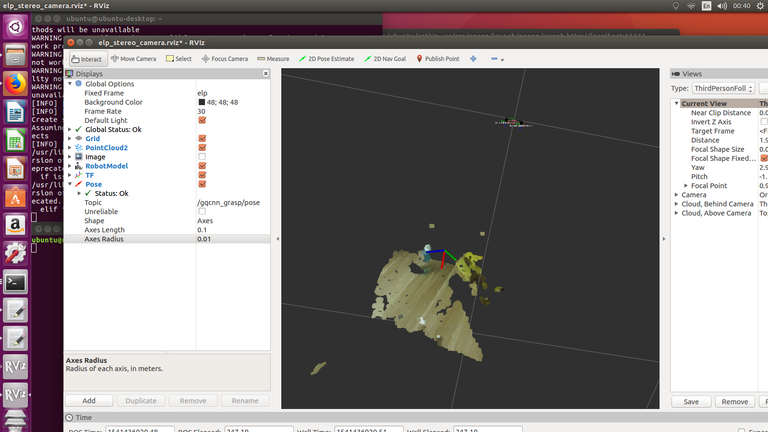

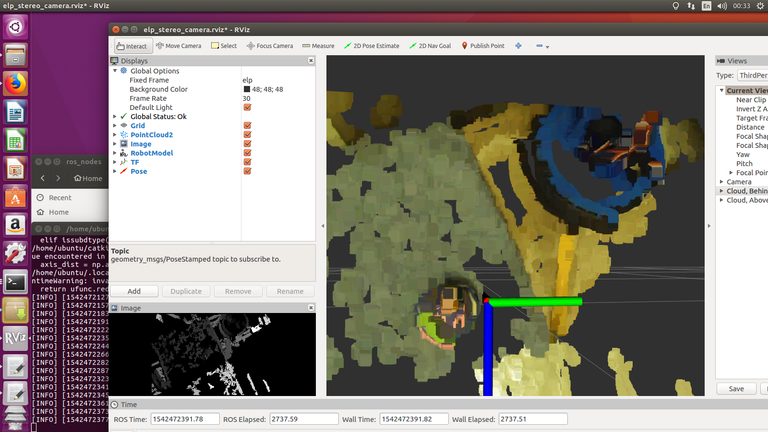

Both packages boast very robust grasp success rates. I originally started with Berkeley's package and found it quite easy to install and get started with. The project's main researcher, Jeff Mahler, was very helpful in getting things to work. Here are some results from working with the GQ-CNN ROS service.

Unfortunately, it didn't quite work for me. The likely reason is the poor depth image quality from my stereo camera (ELP-1MP2CAM001) and the complicated pipeline for obtaining it (camera driver -> stereo_image_proc PointCloud -> disparity_image_proc DepthImage).
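To illustrate why a stereo depth image degrades so quickly, here is a minimal sketch of the standard disparity-to-depth relation Z = f * B / d. The focal length and baseline below are made-up illustration values, not the actual calibration of the ELP-1MP2CAM001, and the function names are hypothetical.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m, min_disparity_px=0.1):
    """Convert a disparity (pixels) to depth (metres) using Z = f * B / d.

    Returns None for missing or near-zero disparities, which would
    otherwise produce infinite or nonsensical depth values.
    """
    if disparity_px is None or disparity_px < min_disparity_px:
        return None
    return focal_length_px * baseline_m / disparity_px


def depth_error_per_pixel(depth_m, focal_length_px, baseline_m):
    """Approximate depth error caused by one pixel of disparity error.

    Differentiating Z = f * B / d gives |dZ| ~= Z^2 / (f * B) per pixel,
    so the error grows with the square of the distance.
    """
    return depth_m ** 2 / (focal_length_px * baseline_m)


# Assumed values for illustration only (not real camera calibration).
FOCAL_PX = 700.0
BASELINE_M = 0.06

depth = disparity_to_depth(70.0, FOCAL_PX, BASELINE_M)
error_near = depth_error_per_pixel(0.6, FOCAL_PX, BASELINE_M)
error_far = depth_error_per_pixel(1.2, FOCAL_PX, BASELINE_M)
```

With these assumed numbers, doubling the distance quadruples the depth error per pixel of disparity noise, which is one plausible reason a short-baseline stereo camera gives a grasp network noisy input.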

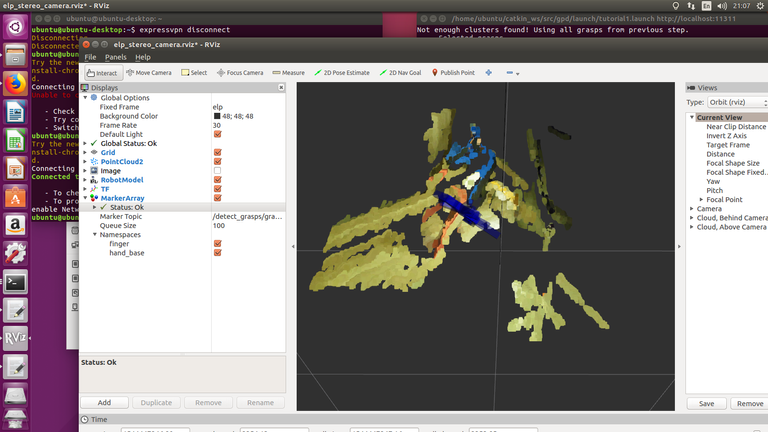

So I continued my search and stumbled upon the GPD package. The installation process wasn't as straightforward as with GQ-CNN, but once I got it to work I was pleasantly surprised by the prediction quality. Even with my poor camera, the grasp poses seem much more spot on!

I will get better screenshots for my next article. Right now I have computer vision working: I can get valid grasp poses and convert them from the camera frame to the robot frame. The problem I'm battling right now is IK for my 5DOF arm.
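The camera-to-robot conversion is a chain of homogeneous transforms. In ROS this would normally be handled by tf2; the sketch below only shows the underlying math in plain Python. The camera mounting pose and the grasp pose are invented numbers, and all helper names are hypothetical.

```python
import math


def rot_z(angle_rad):
    """3x3 rotation matrix for a rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    rows = [list(rotation[i]) + [translation[i]] for i in range(3)]
    rows.append([0.0, 0.0, 0.0, 1.0])
    return rows


def compose(a, b):
    """Matrix product of two 4x4 transforms (apply b first, then a)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Assumed camera mounting: 0.2 m forward, 0.5 m up, yawed 90 degrees.
base_T_camera = make_transform(rot_z(math.radians(90)), [0.2, 0.0, 0.5])

# Assumed grasp pose reported by the detector in the camera frame.
camera_T_grasp = make_transform(IDENTITY, [0.1, 0.0, 0.3])

# Grasp pose expressed in the robot base frame.
base_T_grasp = compose(base_T_camera, camera_T_grasp)
grasp_position = [base_T_grasp[i][3] for i in range(3)]
```

The order of multiplication matters: the transform closest to the base goes on the left. Swapping the two arguments of `compose` is a common source of grasps that appear mirrored or offset.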

I have redone my robot's URDF and made sure all the joints and links are in the correct locations. But even after that, all the numerical IK solvers I have tried so far (KDL, TRAC-IK, BioIK) fail to solve IK for the poses generated by GPD. IKFast can generate an analytical IK solver for the Translation3D and Rotation3D types, but fails to generate one for TranslationDirection5D, which is the type I need.

Right now, I'm actually contemplating building a 6DOF arm :) Very likely it would be the easiest solution to the problem. Other solutions might involve continuing to work on the IKFast TranslationDirection5D solver or using ML for IK solving (MORE MACHINE LEARNING FOR THE GOD OF MACHINE LEARNING!)
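For context on why the 5D solver type matters: a grasp detector outputs a full 6D pose, but a 5DOF arm generally cannot satisfy all six constraints at once. A 5D target keeps the position and one pointing direction and leaves the roll about that direction free. The sketch below shows that reduction in plain Python. Treating the tool z axis as the approach direction is an assumption made for illustration, as are the helper names and the pose values.

```python
import math


def rot_y(angle_rad):
    """3x3 rotation matrix for a rotation about the y axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(angle_rad):
    """3x3 rotation matrix for a rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul3(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def to_5d_target(rotation, position):
    """Reduce a 6D pose to position plus approach direction.

    The approach direction is taken as the tool z axis (third column of
    the rotation matrix); this choice is an assumption. Roll about that
    axis is discarded.
    """
    direction = [rotation[i][2] for i in range(3)]
    return list(position), direction


position = [0.25, 0.0, 0.10]           # made-up grasp position (metres)
tilt = rot_y(math.radians(150))        # gripper pointing mostly downward

grasp_a = tilt                                        # one 6D orientation
grasp_b = matmul3(tilt, rot_z(math.radians(40)))      # same pose, rolled 40 degrees

target_a = to_5d_target(grasp_a, position)
target_b = to_5d_target(grasp_b, position)
```

Both 6D orientations collapse to the same 5D target, so a solver working on this reduced target has a whole family of wrist rolls to choose from. Whether the resulting roll is acceptable for a parallel-jaw gripper depends on the object, so this is a relaxation to experiment with rather than a guaranteed fix.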

If you have any information on how to get a robust IK solver for a 5DOF arm, please don't hesitate to contact me.