Setting the Scene

Why is science wrong sometimes? This is the question that I want to address, largely as a follow-up to @kyriacos’s recent articles (link, link). I think this is an important issue to talk about because public trust and involvement in the scientific institutions that the public funds is essential for big science projects. Scientists should be held to high standards by the public, and a public that is educated about the issues and what is being done to fix them is a good first step.

I’m going to be mainly focusing on my field of cognitive neuroscience and some of its neighbors in psychology because that is what I do and what I know best. I’m going to discuss some of the reasons that science is not optimal and has room for reform. Then I’m going to talk about what I see as things that the institutions of science are doing right, but can still lead to public distrust. And finally, I’ll discuss a few of the efforts that we as scientists are embarking on to make scientific results more robust.

Institutional problems in science

In my experience, scientists in academia are mostly enslaved to their extraordinary curiosity and passion for research. The main perk of being a professor with your own lab is that you get to propose and conduct the research that you are interested in. To have your own lab at a top research university is extremely competitive, both within the institution (attaining a sort of job security called ‘tenure’), and outside of the institution (competing with other scientists for limited funding resources).

Because there are so many applications from extremely qualified scientists for both faculty positions and grants, it is very helpful to be able to demonstrate your own competence and productivity. This is most commonly assessed by looking at someone’s publication record. How long have they been in science? How many papers have they published in that time? How many of those papers were of high quality, which is usually determined by the number of times other scientists cite their work?

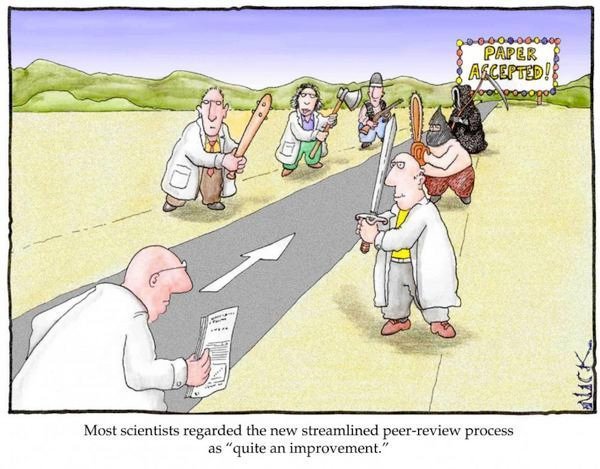

Because of this pressure to publish a lot and flaunt your success to get a job, there are some aspects of the process of science which could be improved. I’ll focus on three of the biggest points.

First, not many people are interested in publishing pure replication studies and checking someone else’s result. Why? There’s no glory in it. Not many people will cite the work, many journals won’t even publish the study, and there is no demonstration of original and creative thinking on the part of the scientist. All that time could have been spent on a different study, working towards a better chance of a good career in academia. Replication studies are important in science because they help check whether a result was just a fluke or if it is more likely to be real.

Second, we often use statistics to make inferences in science. In rare cases, scientists make up data, or hide negative results on purpose according to some agenda. This is not common in academia, and individuals caught doing it will end their careers in science. To most scientists, this is one of the most disgusting things that you could do. A more common problem is that sometimes a statistical result is on the border of what the community has regarded as statistically significant. In these cases, slightly different statistical procedures, which are also considered valid, will often produce results that are on the “good” side of that border. Since multiple analyses are attempted, but only some of them are reported, the threshold that researchers have agreed on for making an inference is not being respected. Even worse, those who do decide to publish the “non-significant” result will often find that journals are uninterested or that their papers sit unread in some very low-impact journal. This is called the “file-drawer problem,” where non-significant results end up in a drawer somewhere instead of out in the world.
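To make the point about trying several analyses concrete, here is a minimal sketch in Python. It assumes a threshold of 0.05 (a common convention, not something stated above) and, unrealistically, that each analysis is an independent test of an effect that does not exist. Analyses run on the same data are correlated, so the real inflation is usually smaller than this, but the direction is the same. The function name `chance_of_false_positive` is made up for illustration.

```python
def chance_of_false_positive(alpha: float, n_analyses: int) -> float:
    """Chance that at least one of n independent tests of a null effect crosses alpha.

    Assumption: the tests are independent, which is a simplification.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if n_analyses < 1:
        raise ValueError("n_analyses must be at least 1")
    # Probability that every test stays below the threshold is (1 - alpha)^n,
    # so the chance that at least one crosses it is the complement.
    return 1.0 - (1.0 - alpha) ** n_analyses


if __name__ == "__main__":
    for k in (1, 3, 5, 10):
        print(f"{k:2d} analyses tried: {chance_of_false_positive(0.05, k):.3f}")
```

Under these assumptions, trying five analyses and reporting only the one that worked gives roughly a 23% chance of a false positive, rather than the 5% that the threshold is supposed to guarantee.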

Lastly, I have a hunch that the amount of self-promotion you have to do to get a good job makes people too invested in their own ideas. If a good scientist publishes a result and later fails to replicate it, they will publish that failure to replicate, too. They will change their minds in accordance with the best evidence. But there is a cost to this. Often doing that makes past work look flimsy. The line of research that you might be trying to build your career on begins to crumble. The result that made you famous in science and a hero to many is gone, and now you have to sheepishly go around talking about why you think you were wrong. It takes a lot of courage to do that, and even more courage when the atmosphere is so competitive.

Enter the dramatists

There are real problems in science, and in a bit I’ll talk about the ways that some of those problems are being addressed. Before that, though, I want to talk about something that I don’t think of as a problem in the scientific institutions themselves, and yet causes distrust among the public: sometimes the result of an experiment turns out not to be real.

The idea that some positive results will be wrong is inherent in statistics and has become natural to the science professional, but it isn’t exactly intuitive. Scientists think in terms of evidence from many studies. They make inferences about effects based on an agreed-upon statistical threshold. This threshold is meant to make it unlikely that we will find an effect when there isn’t one. But it is also meant to not be so strict that we miss interesting effects when they do actually exist.

Some fields have stricter thresholds than others. For instance, in physics, there is often less uncontrolled noise in an experiment than in psychology, where there are many other factors that could influence the effect. These stricter thresholds make results in physics often more reliable than results in psychology. It’s not that psychology has decided to constantly publish unreliable results. The psychologists just realize that there are a lot of uncontrolled factors that produce noise surrounding the effects that they are interested in, so they have adopted a more liberal statistical threshold to promote progress in the field.

Therefore, rather than thinking in terms of scientific facts, scientists think in terms of useful models. Strong effects make them more confident in the model, and make it more likely that the effect will replicate under other conditions. When there is very strong evidence, and many replications, differing opinions tend to converge to a “scientific consensus”.

These studies, which contribute to structures of evidence, are presented very differently by the media. It is important for scientists to engage with the public and share scientific progress. However, coverage of scientific results can be misleading because the context for the evidence is often not provided. The strength of the scientific effect is left out. The volume of past and sometimes contradictory research is left out. Sometimes, conclusions that a scientist would never make are made by the media source. Political opinion often skews the interpretation of data at this stage. For example, one of my past articles took a look at a study that was incorrectly touted as a “win” for gender equality movements.

The goal of the science writer is to add some drama to a story so that more people read it. The drama of dangerous climate change is already there; it doesn’t need to be spiced up. But the article providing preliminary evidence for components in food that make them harder to resist eating is kind of boring and needs some work. What we’re left with is a situation where “Cheese is as addicting as cocaine” is difficult to tell apart, in seriousness and in the magnitude of evidence, from “Human impact on climate reaches dangerous level.”

The toolbox

Research practices are changing rapidly to reduce the number of results that cannot be replicated in certain fields. Most of these changes are being driven from within the fields themselves, often led by researchers who have devoted their careers to addressing the problems.

Stats

First, statistical practices are evolving. I once heard a scientist named Gregory Francis propose an interesting problem at a talk. It goes something like this:

There is an effect that many psychologists believe is real, called the bystander effect. This is when, under certain conditions, an individual is less likely to help someone in trouble if there are a lot of people around. Out of 10 studies of the bystander effect, only 6 of those studies could make an inference regarding the existence of the effect. There is another effect, pre-cognition (seeing into the future), which barely any psychologists believe is real. However, out of 10 published studies on pre-cognition, 9 of those studies could make an inference regarding the existence of the effect. Now, if you hypocrites believe in replicability so much, why do you not believe in pre-cognition?

Well, the issue here is that we have no idea how many null results went unpublished in either case, so we must look at the size of the effect for more information. The effect size of the pre-cognition effect is tiny, and out of 10 studies, we would only predict that 5 of them would show significant results with the sample sizes used. Seeing 9 is far more than expected, so we can at least say that there is something fishy about the data. In contrast, the effect size of the bystander effect is small, and out of 10 studies, we would predict that 6 would find evidence for the effect. That is exactly what is seen in the literature, so it’s more believable.
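The “something fishy” argument can be put into numbers with a short Python sketch. It assumes, as a simplification, that the 10 studies in each set are independent and all have the same chance of reaching significance: 0.5 for pre-cognition and 0.6 for the bystander effect, read off the “5 out of 10” and “6 out of 10” predictions above. The helper `prob_at_least` is a hypothetical name, and this is only in the spirit of the argument, not necessarily the exact calculation from the talk.

```python
from math import comb


def prob_at_least(k: int, n: int, power: float) -> float:
    """P(at least k of n studies are significant) if each succeeds with probability `power`.

    Assumption: studies are independent and share the same power.
    """
    if not 0.0 <= power <= 1.0:
        raise ValueError("power must be between 0 and 1")
    if not 0 <= k <= n:
        raise ValueError("k must be between 0 and n")
    # Sum the binomial probabilities for k, k+1, ..., n successes.
    return sum(
        comb(n, i) * power**i * (1.0 - power) ** (n - i)
        for i in range(k, n + 1)
    )


if __name__ == "__main__":
    # Pre-cognition: 9 of 10 significant, where about 5 were expected.
    print(f"pre-cognition: {prob_at_least(9, 10, 0.5):.4f}")
    # Bystander effect: 6 of 10 significant, where about 6 were expected.
    print(f"bystander:     {prob_at_least(6, 10, 0.6):.4f}")
```

Under these assumptions, a run of 9 or more significant results out of 10 would turn up only about 1% of the time for pre-cognition, while 6 or more out of 10 would turn up about 63% of the time for the bystander effect. The first pattern looks too good to be true; the second looks ordinary.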

Many journals are adopting policies where rather than focusing on statistical thresholds for inferences, authors are encouraged to focus on the size of the effects that they saw.

Journal requirements

Publication standards are also changing a lot to prevent the bad practices that are induced by the incentives to publish more. First, certain journals are simply accepting more replication studies. Other journals have sprouted up for the sole purpose of publishing null results.

In addition to this, many journals are requiring that authors provide access to their data. This is important because it makes it easy for other researchers and reviewers to check the analyses for themselves and help point out any mistakes or catch foul play.

To prevent scientists from fiddling with the statistics, many journals are offering the option to pre-register a study. Pre-registration means that you explicitly state the hypothesis you are going to test, the sample size, and the statistics that you are going to use before the study begins.

Conclusion

If there’s anything that I want anyone to take from this, it’s that the institutions of science are trying to evolve and self-correct, largely because of passionate people who are curious enough about the world to demand that we learn about it as efficiently as possible. Some of the problems of science need to be corrected from inside the scientific institutions, including the political institutions that provide funding. Some of the problems need to be corrected on the outside, by the public demanding a more careful analysis of how science is presented. Together, we can improve the efficiency of publicly funded research. Find your Action Potential!

Great stuff and well explained.

What do you think about a problem I've been alert to: the design and sample size of many studies suffer due to insufficient funding. So we end up with many poorly funded little studies. If the authors had more funding, they would probably conduct very long-term studies with thousands of subjects, multiple metrics, more rigorous procedures, etc. The quality of many studies seems to me to be severely limited because of the limited funding.

Yes, you're right and good point. Many grants require a power analysis now, which helps you decide on the sample size you need if you have a hypothesis about effect size. Many studies are still underpowered, but I think that there is a growing understanding of the problem.
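For anyone curious what a power analysis looks like, here is a rough Python sketch for the simplest case: comparing the means of two equal-sized groups. It uses a normal approximation and takes the hypothesized effect size as a standardized mean difference (Cohen’s d). The defaults of 0.05 for the threshold and 0.80 for power are common conventions that I am assuming here, and `n_per_group` is a made-up name. Dedicated power-analysis tools use the exact t distribution and give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate subjects needed per group for a two-sided, two-group comparison.

    Assumption: normal approximation, equal group sizes, effect size given as Cohen's d.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1.0 - alpha / 2.0)  # two-sided threshold
    z_power = standard_normal.inv_cdf(power)              # desired power
    return ceil(2.0 * ((z_alpha + z_power) / effect_size) ** 2)


if __name__ == "__main__":
    for d in (0.8, 0.5, 0.2):
        print(f"effect size {d}: about {n_per_group(d)} subjects per group")
```

With these assumptions, a medium effect (d = 0.5) needs about 63 subjects per group, while a small one (d = 0.2) needs about 393. That is why small, cheaply funded studies of small effects so often end up underpowered.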

Nice write up