- “Trinity,” the Cray supercomputer at Los Alamos National Laboratory that serves the Advanced Simulation and Computing (ASC) program, is one of the most powerful computers ever designed.

- In an age of supercomputers, scientists and physicists are tackling and solving problems that were never before within reach.

As a technology writer, I'm always looking for an exciting topic to research and share with my readers. Sometimes it can be quite difficult to find technology news worth exploring and explaining in detail.

On a recent hunt for a new topic to write about as the @ADSactly technology writer, I came across a few articles about the supercomputers currently being developed and used around the world. In this article I'll discuss a few of the world's greatest supercomputers and what they are being used for.

First up is the Cray XC50 supercomputer being installed in Japan. It was chosen for a specific purpose: nuclear fusion research. It is due to go into production this year. Although the Cray XC50 isn't the most powerful supercomputer on the planet, it will make history regardless, because it is the most powerful one dedicated to nuclear fusion research.

The National Institutes for Quantum and Radiological Science and Technology picked out the computer for its research, and the computer will be installed at the Rokkasho Fusion Institute, one of the institutes’ centers for nuclear research.

It will be used for local nuclear fusion science experiments, and it’ll also play a role in supporting ITER, a massive multinational fusion project based in France that’s halfway to completion. Over a thousand researchers from Japan and other countries will be able to use the system, mainly for plasma physics and fusion energy calculations.

It is interesting that scientists are still pushing ahead with this new and undeveloped technology and now have access to supercomputers like the Cray XC50 to conduct their research. New technology applied to research and development gives humanity the chance to make monumental changes, and this case is a good example of that idea in use.


Another interesting thing to note about this new development is that Japan has decided to decommission its previous, older system, called Helios. A little fun fact about Helios is that it was ranked the 15th most powerful supercomputer in the world in 2012. What we are seeing is rapid advancement in this new and exciting field of supercomputer design.

Fusion energy is still a while away, as ITER’s first plasma reactor is slated to become operational in 2035 and will cost billions of dollars in investment. Still, advocates say that once it’s here, nuclear fusion could cover the world’s need for energy for at least a thousand years, without the climate change side effects of fossil fuels or the radioactive threat of nuclear fission.

Some readers may not be familiar with the difference between nuclear fission (the method currently used for power generation in the world) and humanity's goal of using nuclear fusion in the future. Basically, it is the difference between splitting heavy atomic nuclei to release energy (fission) and merging light nuclei to release energy (fusion). It is believed that fusion could be far more effective at producing energy.

In regard to the topic of supercomputers, I'd like to make the point that although it does seem like Japan may be a few laps ahead in the race to become the top supercomputer producer on planet Earth, the US isn't sitting on its hands here! It has also made incredibly large investments in the supercomputer arms race. In fact, it pumped in $258 million last year alone in funding for companies that include but are not limited to Cray, AMD, Intel, and Nvidia. The US fully intends to be a major power and assert its dominance in the ownership and use of large exascale computers that can perform a billion billion calculations per second.
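To put "a billion billion calculations per second" in perspective, here is a rough back-of-the-envelope sketch in Python. The laptop speed of 100 gigaflops is purely my own assumption for illustration, not a measured benchmark.

```python
# Back-of-the-envelope scale comparison.
# The laptop speed is an assumed, illustrative figure, not a benchmark result.
PETAFLOP = 10**15  # floating-point operations per second
EXAFLOP = 10**18   # "a billion billion" operations per second

ASSUMED_LAPTOP_FLOPS = 100 * 10**9  # hypothetical 100-gigaflop laptop


def seconds_to_match(target_flops: int, machine_flops: int) -> float:
    """Seconds a machine needs to do what the target system does in one second."""
    return target_flops / machine_flops


laptop_seconds = seconds_to_match(EXAFLOP, ASSUMED_LAPTOP_FLOPS)
laptop_days = laptop_seconds / 86_400  # seconds in a day

print(f"One exaflop is {EXAFLOP // PETAFLOP} petaflops")
print(f"Assumed laptop needs about {laptop_days:.0f} days per exascale-second")
```

Under that assumption, one second of work on an exascale machine would keep the laptop busy for several months.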

So far I've established that Cray is a major player in this new and exciting field of supercomputers. I've also demonstrated the fact that both Japan and the USA are heavily involved in a sort of supercomputing arms race. But one thing I haven't mentioned yet is some of the stiff competition that Cray may face moving forward.

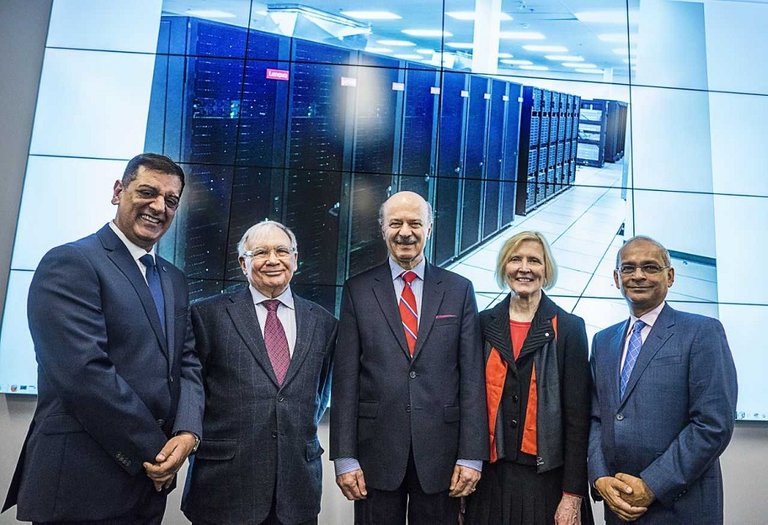

SciNet and the University of Toronto recently unveiled the “Niagara,” Canada’s most powerful supercomputer. As you can see above, it is a massive system that utilizes 1,500 dense Lenovo ThinkSystem SD530 high-performance compute nodes.

Now I'm going to get even more techy in saying that the Niagara is a beast that delivers 3 petaflops of Linpack performance. Basically, it's a processing powerhouse capable of performing calculations beyond the wildest imagination of PC users trying to render their latest YouTube video.

According to a recent article published by HPC Wire, the Niagara serves researchers across Canada. It assists researchers in fields that include artificial intelligence, astrophysics, high-energy physics, climate change, oceanic research and other disciplines that require the use of big data.

“There isn’t a single field of research that can happen without high-performance computing,” said SciNet’s CTO Daniel Gruner in a video presentation. “All Canadians will benefit from Niagara because basic research, fundamental research, is so important to the development of what we now call applied research. Without the basic research nothing can happen.”

Niagara is one of four new systems being deployed to Compute Canada host sites. The others are Arbutus, a Lenovo-built OpenStack cloud system being deployed at the University of Victoria; Cedar, a 3.7-petaflop (peak) Dell system installed at Simon Fraser University, and Graham, a 2.6-petaflop (peak) Huawei-made cluster, located at the University of Waterloo.

As far as I understand, vendors such as Cray and Lenovo (which built Niagara for Compute Canada) are in direct competition, creating supercomputers that blow the standard PC out of the water! Competition is good, as it encourages speedier development and reduces the overall cost of deployment in fields involving technology.

There's a large demand for supercomputers, primarily coming from governments and giant multinational corporations, so there's plenty of investment to go around in this field as well.

With a peak theoretical speed of 4.61 petaflops and a Linpack Rmax of 3 petaflops, Niagara ranks among the top 10 percent of fastest publicly benchmarked computers in the world today. System maker Lenovo has made both HPC and supercomputing a priority since it acquired IBM’s x86 business for $2.1 billion in 2014 and its ambitions aren’t stopping.
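For readers wondering how those two numbers relate, a quick sketch: dividing the measured Linpack result (Rmax) by the theoretical peak gives a rough efficiency figure. The function and variable names below are my own, chosen just for illustration; the two petaflop values are the ones quoted above.

```python
# Figures quoted in the article, in petaflops.
NIAGARA_RPEAK_PFLOPS = 4.61  # peak theoretical speed
NIAGARA_RMAX_PFLOPS = 3.0    # measured Linpack performance


def linpack_efficiency(rmax: float, rpeak: float) -> float:
    """Fraction of the theoretical peak achieved on the Linpack benchmark."""
    if rpeak <= 0:
        raise ValueError("rpeak must be positive")
    return rmax / rpeak


efficiency = linpack_efficiency(NIAGARA_RMAX_PFLOPS, NIAGARA_RPEAK_PFLOPS)
print(f"Niagara Linpack efficiency: {efficiency:.1%}")
```

In other words, on this benchmark Niagara sustains roughly two-thirds of what its hardware could deliver on paper.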

It’s deployed the fastest systems in Spain, Italy, Denmark, Norway, Australia, Canada, and soon in Germany with Leibniz Supercomputing Center, and it counts 87 Top500 systems (inclusive of all Top500 Lenovo classifications, i.e., Lenovo (81), Lenovo/IBM (3), IBM/Lenovo (2) and this collaboration). This puts it in second place by system share, behind HPE, and in third place after Cray and HPE based on aggregate list performance.

As you can see, the stakes are huge and there are many big players, including Lenovo, Cray and HPE. All of these corporations have huge budgets, and the scale of their research and development departments is incredible. In the world of supercomputing it's all about the petaflops!

What do you think about this supercomputing arms race? Do nations need to come together in terms of pooling their resources in an effort to improve co-operation? Do you think this new craze to create the most powerful supercomputer in the world will end well for humanity? What sort of use cases can you see being assisted through the use of these amazing supercomputers?

Thanks for reading.

Authored by: @techblogger

In-text citation sources:

'Japan is commissioning the world’s most powerful nuclear fusion research supercomputer' - The Verge

'SciNet Launches Niagara, Canada’s Fastest Supercomputer' - HPC Wire

Image Sources:

The Verge, HPC Wire, Pexels

I know that in order to unlock the supercomputer potential of the ARM architecture, Cray had to develop appropriate software, including ARMv8 compilers and other development tools, as well as runtime libraries for mathematical, scientific, and communication applications. According to Cray, in two-thirds of 135 tests, its ARM compiler provides at least 20% better performance than the open LLVM and GNU compilers. Servers based on ThunderX2 can be mixed with servers based on Intel Xeon-SP, Intel Xeon Phi and Nvidia Tesla. So I'm very interested in what exactly Cray has developed for ARM. How is software coordinated across the platforms of different firms and manufacturers? What about GNU?

In such a cutting-edge field I would imagine each use case regarding 'the coordination of different units of software across platforms of different firms and manufacturers' is going to be different and require a unique approach until a protocol is developed that all software companies, firms and manufacturers can agree upon. In regard to GNU, please clarify your question for me and I'll give it a shot! Thanks for your thoughts.

About GNU, the question was rhetorical. If Cray supercomputers have decided to use their ARM compiler, why compare it to the GNU compiler?

First of all, thank you for sharing such valuable information. Secondly, answering your questions: 1.- I think it is a great technological advance for humanity, and people from all nations should participate in it. 2.- However, the excessive competition for leadership in the supercomputing arms race is a sign of the struggle for total power in the world, and that power can lead to unreasonable conflicts. Regarding the last question: the most impressive case for me would be to materialize neural ideas in a matter of seconds through a direct connection between our brain and these supercomputers. Of course, these ideas must be evaluated by the supercomputer and carried out only if they correspond to the values, morality and natural course of life. For this we need not only a supercomputer, but also a super desire to do good for all humanity. Regards!

By the looks and sound of it, it must be really expensive!

Technological progress cannot be avoided. Big countries are racing to create new resources or highly sophisticated weapons. That's a positive thing! But sometimes I feel afraid of excessive technological advances, because they will have many negative impacts if they fall into the wrong hands. I hope that never happens. Amen.

Try to stay away from CAPS in your comments. It can come across as unfriendly. In regard to your ideas about technological progress I completely agree. Is it a positive thing that governments are racing to create bigger and more deadly weapons... I'm not quite sure how that could be viewed as a positive but I'll accept that it is just the way things are and will continue to be. Good effort in trying to add to this discussion.

Very good post, thanks for sharing the information.

It is amazing to see how new technologies are developed, strengthening existing principles and taking them to a new level

Nice post, I like it, good job.

Your posts are always different. I follow your blog all the time, and your posts are so helpful. They always inspire my work on Steem. Thank you for sharing, @techblogger

Follow my blog @powerupme