TML will be a major breakthrough in computer science, not just in crypto. Explaining it is the challenge, because apparently even people with PhDs have difficulty grasping its potential. The same group had difficulty grasping the potential of blockchains and smart contracts as well.

I will try to give a brief description of what TML will do.

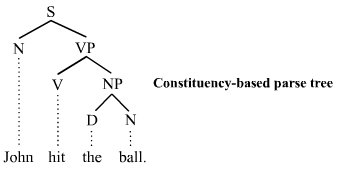

The first major breakthrough we will see is self-interpretation. When we look at TML we are seeing a compiler-compiler: a technology that takes two input documents. The first is the grammar, which defines the syntax of a programming language. This is typically a context-free grammar, but it can be any sort of grammar, since a programming language is really just a formal language. The second is the source code. The parser uses both to create a parse tree, and this parse tree is then translated into an abstract syntax tree.

By Tjo3ya (Own work) [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons

To explain the importance of parsing, we have to understand "strings", which programmers will probably know already. Strings let you define the words that are used in the source code of a programming language. In C, for example, we have common keywords like bool, return, or int, which all have meaning for people who know C, while in Python we may see def. These all have to be defined in a document, and then the parser creates a kind of tree based on the keywords in the source code document.
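To make the keyword idea concrete, here is a minimal sketch of a scanner in Python. The keyword set, the token categories, and the `scan` function are all my own illustrative assumptions for a tiny C-like language; none of this is TML code.

```python
import re

# Hypothetical keyword set for a tiny C-like language (illustration only).
KEYWORDS = {"bool", "int", "return"}

# One named group per token category; exactly one group matches at a time.
TOKEN_RE = re.compile(r"""
    (?P<NUMBER>\d+)
  | (?P<NAME>[A-Za-z_]\w*)
  | (?P<PUNCT>[{}()=;+\-*/])
  | (?P<SKIP>\s+)
""", re.VERBOSE)

def scan(source):
    """Turn a stream of characters into a list of (kind, text) tokens."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = TOKEN_RE.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at {pos}")
        pos = match.end()
        kind, text = match.lastgroup, match.group()
        if kind == "SKIP":
            continue  # whitespace carries no meaning in this toy language
        if kind == "NAME" and text in KEYWORDS:
            kind = "KEYWORD"  # reserved words are names with a fixed meaning
        tokens.append((kind, text))
    return tokens

example_tokens = scan("int x = 42; return x;")
```

Here `int` and `return` come out tagged as `KEYWORD` while `x` stays a plain `NAME`. That distinction is what the parser relies on in the next step.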

Source code scanner

In the analysis phase we have what is called syntax analysis. This is where the source code document is analyzed and passed through the parser. The parser takes an input and breaks it down into its components, processing the input so it can be passed to the next step. In the case of TML this will be the Earley parser, which is one of the most powerful kinds of parser (and certainly the best fit for TML).
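For readers who want to see what an Earley parser does, here is a small sketch of an Earley recognizer in Python. It is a textbook version that only answers "does this token list belong to the grammar?" and it assumes the grammar has no empty productions. The function name, the grammar encoding, and the example grammar are my own assumptions, not TML's implementation.

```python
def earley_recognize(grammar, start, tokens):
    """Return True if `tokens` can be derived from `start`.

    `grammar` maps each nonterminal to a list of productions (tuples of
    symbols). Any symbol that is not a key of `grammar` is a terminal.
    Assumes no empty productions.
    """
    # An item is (head, body, dot position, origin chart index).
    chart = [set() for _ in range(len(tokens) + 1)]
    for body in grammar[start]:
        chart[0].add((start, body, 0, 0))

    for i in range(len(tokens) + 1):
        worklist = list(chart[i])
        while worklist:
            head, body, dot, origin = worklist.pop()
            if dot < len(body):
                symbol = body[dot]
                if symbol in grammar:
                    # Predict: expand the nonterminal after the dot.
                    for production in grammar[symbol]:
                        item = (symbol, production, 0, i)
                        if item not in chart[i]:
                            chart[i].add(item)
                            worklist.append(item)
                elif i < len(tokens) and tokens[i] == symbol:
                    # Scan: the next token matches the expected terminal.
                    chart[i + 1].add((head, body, dot + 1, origin))
            else:
                # Complete: advance every item that was waiting for `head`.
                for h2, b2, d2, o2 in list(chart[origin]):
                    if d2 < len(b2) and b2[d2] == head:
                        item = (h2, b2, d2 + 1, o2)
                        if item not in chart[i]:
                            chart[i].add(item)
                            worklist.append(item)

    return any(
        head == start and dot == len(body) and origin == 0
        for head, body, dot, origin in chart[len(tokens)]
    )

# A left-recursive arithmetic grammar; "n" stands for any number token.
GRAMMAR = {
    "E": [("E", "+", "T"), ("T",)],
    "T": [("T", "*", "F"), ("F",)],
    "F": [("(", "E", ")"), ("n",)],
}
```

Calling `earley_recognize(GRAMMAR, "E", "n + n * n".split())` accepts the input, while `"n + * n"` is rejected. Note that the grammar is left-recursive, which many simpler parsing techniques cannot handle directly.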

So in the analysis phase the source code document is put through syntax analysis, based on a symbol table which represents what gets manipulated by the logic component. Partial fixed point is the logic component of TML, which manipulates the symbols according to the rules of the language. Source code is essentially just a stream of characters: a bunch of symbols and strings that get processed.
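Partial fixed point is easier to grasp with a small example. The sketch below follows the textbook definition from finite model theory: start from the empty set, apply a rule over and over, and if the result stops changing, that is the answer; if it loops forever without settling, the answer is the empty set. The function name and both example rules are my own assumptions and say nothing about how TML implements this internally.

```python
def partial_fixed_point(step, start=frozenset()):
    """Iterate `step` from `start` until the state stops changing.

    Returns the fixed point if the sequence converges, and the empty set
    if the sequence falls into a cycle instead. Terminates whenever the
    set of reachable states is finite.
    """
    seen = set()
    current = frozenset(start)
    while current not in seen:
        seen.add(current)
        following = frozenset(step(current))
        if following == current:
            return current  # converged
        current = following
    return frozenset()  # revisited an earlier state: no fixed point

# Example 1: transitive closure of a small graph (converges).
EDGES = frozenset({(1, 2), (2, 3)})

def closure_step(relation):
    joined = {(a, d) for (a, b) in relation for (c, d) in relation if b == c}
    return EDGES | joined

# Example 2: a rule that flips a fact on and off (never converges).
def flip_step(facts):
    return frozenset() if facts else frozenset({"p"})

closure = partial_fixed_point(closure_step)
flipped = partial_fixed_point(flip_step)
```

The first example settles on the relation containing (1, 2), (2, 3) and (1, 3). The second oscillates between two states, so its partial fixed point is defined to be empty.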

Semantic analysis

This happens after the parse tree is generated. It checks that the parse tree follows the rules of the language as defined. TML allows you to define the rules of any language, and the Earley parser can parse any context-free language. So when the Earley parser outputs a parse tree, the semantic phase is where it gets checked; this is usually where type checking takes place, for instance. This is where the logic becomes important.
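As a rough illustration of what a semantic check looks like, here is a tiny type checker in Python. The node shapes (`"num"`, `"bool"`, `"add"`, `"if"`) and the two types are hypothetical and chosen only for this example; a real language would define its own.

```python
def type_of(node):
    """Return "int" or "bool" for a well-typed tree, else raise TypeError.

    Trees are nested tuples whose first element names the node kind.
    """
    kind = node[0]
    if kind == "num":
        return "int"
    if kind == "bool":
        return "bool"
    if kind == "add":
        if type_of(node[1]) == "int" and type_of(node[2]) == "int":
            return "int"
        raise TypeError("'+' needs two int operands")
    if kind == "if":
        _, condition, then_branch, else_branch = node
        if type_of(condition) != "bool":
            raise TypeError("condition must be bool")
        branch_type = type_of(then_branch)
        if branch_type != type_of(else_branch):
            raise TypeError("both branches must have the same type")
        return branch_type
    raise ValueError(f"unknown node kind: {kind!r}")

well_typed = ("if", ("bool", True), ("add", ("num", 1), ("num", 2)), ("num", 0))
ill_typed = ("add", ("num", 1), ("bool", False))
```

Both trees are syntactically fine, but only `well_typed` passes: `type_of(ill_typed)` raises a `TypeError`, because adding a number to a boolean breaks the rules of this toy language even though the parser accepted it.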

The symbol manipulation phase

This is where partial fixed point logic comes in. The parse tree is manipulated using the logic of partial fixed point, and the result is called an abstract syntax tree. The parse tree is the concrete representation of the input (the source code), while the abstract syntax tree is its abstract representation. The abstract syntax tree contains only the essence of the source code: its main symbols.

By Dcoetzee (Own work) [CC0], via Wikimedia Commons
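To show the difference between the two trees, here is a small sketch that turns a parse tree for `2 * (3 + 4)` into an abstract syntax tree. I am using plain recursion in Python purely as an illustration (it is not the partial fixed point mechanism described above), and the tuple encoding and the `to_ast` function are my own assumptions.

```python
def to_ast(node):
    """Strip a concrete parse tree down to operators and operands.

    A parse tree node is (label, child, child, ...); leaves are strings.
    """
    if isinstance(node, str):
        return node
    _label, *children = node
    # Parentheses only guide the parser; the tree shape already encodes them.
    children = [child for child in children if child not in ("(", ")")]
    if len(children) == 1:
        return to_ast(children[0])  # collapse chains like E -> T -> F -> 2
    left, operator, right = children
    return (operator, to_ast(left), to_ast(right))

# Concrete parse tree for "2 * ( 3 + 4 )" under a standard E/T/F grammar.
PARSE_TREE = (
    "E",
    ("T",
        ("T", ("F", "2")),
        "*",
        ("F",
            "(",
            ("E", ("E", ("T", ("F", "3"))), "+", ("T", ("F", "4"))),
            ")")),
)

ast = to_ast(PARSE_TREE)
```

The parse tree records every grammar rule that was used, while the result `("*", "2", ("+", "3", "4"))` keeps only what matters for meaning.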

Self interpretation of TML

The breakthrough is when TML itself is used to interpret itself. This means the language of TML will be defined through TML itself.

A self-interpreter is a programming language interpreter written in a programming language which can interpret itself; an example is a BASIC interpreter written in BASIC. Self-interpreters are related to self-hosting compilers.

If no compiler exists for the language to be interpreted, creating a self-interpreter requires the implementation of the language in a host language (which may be another programming language or assembler). By having a first interpreter such as this, the system is bootstrapped and new versions of the interpreter can be developed in the language itself. It was in this way that Donald Knuth developed the TANGLE interpreter for the language WEB of the industrial standard TeX typesetting system.

Think of the interpreter in TML as another input. The document representing the interpreter is supposed to be fed into the partial evaluator along with the source document to output a program. So for instance an abstract syntax tree could be the source document and the other document could be the interpreter for TML.
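The idea of feeding an interpreter and a source document into a partial evaluator can be sketched in a few lines of Python. I am assuming a toy stack language and using `functools.partial` as the most trivial possible "partial evaluator": it only fixes an argument and does no optimization, whereas a real partial evaluator would generate specialized code. Everything here is illustrative and is not TML.

```python
from functools import partial

def interpret(program, x):
    """Run a toy stack program on input x and return the top of the stack."""
    stack = [x]
    for op, *args in program:
        if op == "push":
            stack.append(args[0])
        elif op == "add":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "mul":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown instruction: {op!r}")
    return stack.pop()

# Source document: computes 3 * x + 1.
PROGRAM = [("push", 3), ("mul",), ("push", 1), ("add",)]

# First projection: interpreter + program -> a runnable "compiled" program.
target = partial(interpret, PROGRAM)

# Second projection: specializer + interpreter -> a "compiler".
compiler = partial(partial, interpret)

# Third projection: specializer + specializer -> a "compiler generator".
compiler_generator = partial(partial, partial)

direct = interpret(PROGRAM, 5)
via_target = target(5)
via_compiler = compiler(PROGRAM)(5)
via_generator = compiler_generator(interpret)(PROGRAM)(5)
```

All four routes give the same answer for the same input, which is the whole point: specializing the interpreter to a program behaves like a compiled version of that program.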

I may have left some stuff out, but I'm trying to outline the phases. Syntax analysis and semantic analysis are the two main phases. The goal is to achieve self-interpretation, as this would be the defining breakthrough and proof of success.

Way too confusing....😽 Can I get some chicken with it? I'm just a cat! Meow Brrrrrrrr.....

Times like this I wish Steem had a laughter upvote for jokes.

Thanks for putting this together @dana-edwards. While I'm still cracking my head over the self-interpreting concept on a dynamic, changing language, I understand that TML is made to be agnostic (if that's the right word for it) and usable regardless of whatever timeline we find ourselves in. I've been writing a draft about the abstract idea - would it be right to say that the protocol is made to be both ephemeral and eternal? Big words, but that's the gist that I'm grokking from the project.

I put together this for even more info for those who want a bit of direction on research: https://steemit.com/tauchain/@dana-edwards/for-all-who-are-researching-tauchain-tml-to-understand-how-it-works-a-nice-video

An interpreter is what tells a program how to run.

Yup, I get that, having worked with them before. I just don't fully comprehend it in TML's context.

It has to do with Futamura projection and the self defining language component of TML. If I think of Futamura projection as transforming an interpreter into a compiler, and then we see TML has that capability of partial evaluation with Futamura projection, then it would seem it allows TML to compile itself in a way, and that is I think what self interpretation does for Tau.

But I think Ohad might be best to explain it. I try to but there are so many details and concepts involved. If I cannot explain it precisely then it probably means I don't have a good enough understanding of it.

Ah, you got that right. Think I've seen this before, but it's much clearer now reading it again: http://blog.sigfpe.com/2009/05/three-projections-of-doctor-futamura.html

To add, the benefit of Futamura's third projection is two-fold: high-level language expressiveness and low-level language speed. So that's the compiler-compiler(?). Kinda amazing this hasn't been applied in any blockchains afaik, although most haven't gone to this level of sophistication. This information is just out there in the open.

Hey @kevinwong and @dana-edwards! I've been following your posts regarding this coin and I'm having trouble understanding if AGRS is the same as TML. If I buy this coin, will it be the coin you are both discussing? https://wallet.bitshares.org/#/market/OPEN.AGRS_BTS

Sadly, I've already missed that $0.9 price but am interested in picking some up if you can please confirm.

Yes, it is. AGRS is going to be built using TML.

I'm not sure if open.agrs is the real deal on bts?

In what way is that a breakthrough?

The concept was researched by Alan Turing in the 1930s (UTM).

John McCarthy published his LISP paper in 1960, where he described, among other things, LISP interpretation logic encoded in LISP itself.

Metacircular interpretation used to be part of the intro programming course at MIT. It's not very complex, and pretty much every person who is good at CS should be able to write a self-interpreter or something like it.

Fair question and I'll try to address your concerns below.

You are correct. Self-interpretation is not a new concept and I never implied that. The major breakthrough in computer science comes from the context in which this concept is being applied. If we look at hash tables, or SHA-256, or HashCash, these concepts existed prior to blockchain. Can we still agree blockchain was a breakthrough in computer science?

Universal Turing Machine is not the right concept for TML. In fact, not every language from TML will even be Turing complete. So this really has very little to do with UTM unless you're aware of something specifically.

TML stands for Tau-Meta-Language. It's best described not as a single language but as a means of creating languages. Of course I may have to find a better description, but this is just to say it's possible to support Turing complete languages (perhaps not using partial fixed point, as this may require some other underlying logic). The Earley parser can parse all CFGs, and it can handle natural language.

Lisp can do self interpretation and I never said the concept of self interpretation by itself is the breakthrough. It's a breakthrough in how TML will be applying it. TML is the breakthrough.

This is beside the point. Being able to code a self-interpreter or even understand the concept does not mean you understand how it's being used in TML. It's TML which is unique, while the milestone "self interpretation" is the last major milestone, similar to when Bitcoin achieved its genesis block. The ability to actually begin using TML to do something practical begins after this milestone.

TML having self-interpretation is a milestone because it will allow collaborative development to take place over social media (like Steemit or Facebook). Languages are the key to scaling discussion: discussion which can take what we say in informal natural language, LIKE THIS DISCUSSION, and turn it into models structured in such a way that they can become a formal specification, and eventually allow program synthesis.

Stuff like this exists only in academia, and was never before done collaboratively. Never before has there been a way to scale both discussion and collaborative development. If you look at TML right now, the code base looks like a compiler-compiler which uses partial evaluation (PE) and Futamura projection. The Earley parser was strategically chosen for Tau. I can understand how, just looking at it, it is going to appear to be another YACC, but I can assure you there is nothing else like TML out there.

Go and check, I challenge you to find something like it. If you can, I'll bring it to Ohad myself. I think once TML can be used by developers then it will quickly become obvious the advantages it offers. I also accept I might not be the best at explaining this stuff, but I can tell you to keep your eye on it.

I've been wondering about this part: whether or not it can be used on any platform!