Here is the daily technology #threadcast for 11/4/24. The goal is to make this a technology "reddit".

Drop all questions, comments, and articles relating to #technology and the future. The goal is to make it a technology center.

I stick to a single monitor, just my laptop. My coworkers think it’s odd, but I like being able to work from different spots, so one screen works perfectly for me.

The tech world is a lot like social media: it’s all about what’s shiny, new, and complex. But, just like social media, what you see doesn’t always tell the whole story.

#TechReality #BeyondTheHype

The tech world is all about exponentials. That is also a part of social media. However, social media is on top of technology.

Do you foresee a time when it would be the opposite?

With the rise of AI, and soon quantum computing?

I am not following.

I was asking if it would be the other way round with AI on the rise. But after pondering over that, I don't think so, since social media is how humans interact with humans. Unless there comes a time when people become more comfortable interacting with AI than with humans.

Get it?

The AI stack is similar to what we have now.

AI utility comes from applications, which always ride on top of the underlying technology, which is infrastructure. So you have databases (housed on servers), APIs, and other technical items that make the Internet go.

AI is going to have a place in every layer but it will still ride on infrastructure. Even AI agents will have to run on some type of server or network.
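To make the layering concrete, here is a toy Python sketch with entirely hypothetical names: an in-memory SQLite database stands in for the infrastructure, a query function for the API layer, and a stub `summarize` function for the AI layer. It only illustrates the idea and is not how any particular AI product is built; the point is that the AI step still has to call down into the layers beneath it.

```python
import sqlite3

# Infrastructure layer: an in-memory SQLite database standing in for
# the servers and databases underneath everything else.
def make_database() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE posts (topic TEXT, body TEXT)")
    conn.executemany(
        "INSERT INTO posts VALUES (?, ?)",
        [
            ("ai", "Granite 3.0 released"),
            ("ai", "Voice mode on desktop"),
            ("space", "Starship refueling test"),
        ],
    )
    return conn

# API layer: the data-access function the upper layers call.
def fetch_posts(conn: sqlite3.Connection, topic: str) -> list[str]:
    rows = conn.execute(
        "SELECT body FROM posts WHERE topic = ? ORDER BY rowid", (topic,)
    )
    return [body for (body,) in rows]

# AI layer: a hypothetical stub where a model call would go. Even a real
# model would depend on fetch_posts (and the database) to do its work.
def summarize(posts: list[str]) -> str:
    return f"{len(posts)} result(s): " + "; ".join(posts)

# Application layer: the part the user actually touches.
def app(conn: sqlite3.Connection, topic: str) -> str:
    return summarize(fetch_posts(conn, topic))

if __name__ == "__main__":
    print(app(make_database(), "ai"))
```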

You're very correct; social media really is on top of technology, from influence to market value.

The GOAT is back. Been waiting for you with some Qs😁 Will Google make nonsense of GPT Search because they've started AI in the browser?

Google seems to be trailing in the AI race, which is surprising since nobody has as much online data as that company. I don't count them out, and their search will be AI. They are already starting to incorporate it.

We are going to see massive changes in search over the next year. By the end of 2025, I doubt we are going to be using traditional search. It will be dead.

Google is going to lose market share. People are going to use search where they are.

Indeed, indeed. I knew Google would lose a little market share, but they're not going to sit back and let OpenAI and Anthropic take all the revenue.

They will answer with their own AI. It is a race.

However, my question is what is Google going to do to get people there? My guess is it is incorporated into Youtube.

That's a perfect guess, Taskmaster. Just think about it: most people are already hooked on the YouTube platform, so incorporating their AI there is a perfect strategy for the company.

Good morning

Hello, good morning

Good morning, friend. I hope you're doing well.

https://inleo.io/threads/view/mightpossibly/re-leothreads-2mjnamczv?referral=mightpossibly

'User-centric tech is the secret sauce for next-level travel'

Article via TTG

Without a doubt. That is where we are looking with all this AI.

The key is going to be rolling out services. As that happens, the location, i.e., the platform, that people are on becomes important.

The main reason hacking is portrayed as evil is that any form of hacking attempts to extract data or access information illegitimately.

#Cybersecurity #Ethics

There is also white hat hacking, where people are hired to try to hack platforms such as Apple's as a defense mechanism.

Oh, so what happens if I hack Apple without them hiring me, but give the data I hacked back to them for a fee?

Would that be considered as ethical hacking?

No that is a crime in most areas.

It is essentially extortion, the same model ransomware runs on.

What if you accidentally encounter a vulnerability (say you're an expert hacker)? If you report it, do they decide whether to reward you or not, since they didn't employ you?

So you think BTC and ETH prices will drop in the future since more investors might move their money to altcoins and especially memecoins? #crypto

Why do you think I think that? I don't talk about where markets are going.

What's the difference between information and data?

#askleo #ai

Data is what goes in...information is what comes out.

I was learning the basics of hacking from an app I downloaded, and it touched on the difference.

It said that data is just plain facts and Information is just pieces of data stitched together.

Yep, in classical computing.

Generative AI is a new form of computing, although it still follows a similar principle.

Data is entered to train the model, information comes out when one does a prompt.

Got that, thanks.
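A small Python illustration of "data in, information out" in the classical sense, using made-up temperature readings: the raw list is the data, and the summary stitched together from it is the information.

```python
from statistics import mean

# Data: plain facts with no interpretation (made-up daily temperature
# readings, in degrees Celsius).
readings = [21.5, 23.0, 19.5, 24.0, 22.0]

# Information: the same facts stitched together so they answer a
# question such as "what was the week like?"
def summarize_readings(values: list[float]) -> dict[str, float]:
    return {
        "average": mean(values),
        "high": max(values),
        "low": min(values),
    }

print(summarize_readings(readings))
```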

IBM Unveils Granite 3.0 - Open Source Family of Small Models!

#technology #newsonleo #ai

Summary ⏬

IBM's Latest AI Innovations: Granite Models and InstructLab

In a recent visit to IBM's offices in New York, AI expert Matthew was given an exclusive look at some of the tech giant's latest advancements in the field of large language models and AI alignment techniques. The standout revelations were IBM's new open-source Granite models and their revolutionary InstructLab project.

The Granite Models: Powerful Yet Compact AI

The centerpiece of IBM's AI innovations is the Granite 3.0 family of language models. These models, available in a range of sizes from 2 billion to 8 billion parameters, are designed to be powerful yet compact - able to run efficiently even on laptops and mobile devices.

What makes the Granite models unique is their focus on enterprise use cases. Trained on over 10 trillion tokens of data, they excel at tasks like retrieval, text generation, classification, summarization, and entity extraction - capabilities that are highly valuable for businesses. And thanks to their open-source and permissively licensed nature, the Granite models can be easily fine-tuned and integrated into diverse corporate workflows.

Importantly, IBM has also developed "mixture of experts" variants of the Granite models, where the total parameter count is split across specialized sub-models and only a few of them are activated for any given input. This allows for even more efficient inference, making the Granite models ideal for on-device and low-latency applications.
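The efficiency gain comes from routing: a small gating function scores the experts for each input, and only the top few actually run. Below is a toy Python sketch of one common top-k routing variant, with scalar "experts" and hand-picked gate scores standing in for learned networks. It is not IBM's implementation, and the names and numbers are illustrative.

```python
import math
from typing import Callable

Expert = Callable[[float], float]

def softmax(scores: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: float, experts: list[Expert], gate_scores: list[float], k: int = 2) -> float:
    """Run only the k highest-scoring experts and blend their outputs.

    Compute per input grows with k, not with the total number of
    experts, which is why total parameters can exceed the active ones."""
    top = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])
    return sum(w * experts[i](x) for w, i in zip(weights, top))

experts: list[Expert] = [
    lambda x: 2 * x,  # expert 0
    lambda x: x + 1,  # expert 1
    lambda x: -x,     # expert 2
    lambda x: x * x,  # expert 3
]
gate_scores = [0.0, 2.0, -1.0, 1.0]  # a learned router would produce these

print(moe_forward(2.0, experts, gate_scores))  # blends experts 1 and 3 only
```

In a real model the gate scores come from a learned router applied to each token, and the experts are neural sub-networks rather than one-line functions.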

InstructLab: A Novel Approach to Model Alignment

While the Granite models provide a strong foundation, IBM recognized that enterprises often have valuable proprietary data that they want to leverage. This is where the company's new InstructLab project comes into play.

InstructLab is an open-source tool that enables the collaborative addition of new knowledge and skills to language models, without the need for full retraining. Instead of relying solely on fine-tuning - which can overwrite a model's core capabilities - InstructLab uses a novel "alignment" technique to seamlessly integrate external data and instructions.

The process involves augmenting human-curated data with high-quality examples generated by the language model itself. This hybrid approach reduces the cost and complexity of data creation, while ensuring the model can assimilate new information without forgetting what it previously learned.
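A rough Python sketch of that hybrid idea: human-curated seed examples are always kept, a "teacher" model drafts extra examples from each seed, and a filter discards weak drafts. The `teacher` and `keep` hooks, the toy paraphraser, and the sample question are all hypothetical stand-ins; this is not InstructLab's actual pipeline or API.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Example:
    question: str
    answer: str
    synthetic: bool = False  # True for model-generated drafts

def augment(
    seeds: list[Example],
    teacher: Callable[[Example], list[Example]],
    keep: Callable[[Example], bool],
) -> list[Example]:
    """Hypothetical hooks: `teacher` drafts new examples from a seed,
    `keep` is the quality filter applied to each draft."""
    dataset = list(seeds)  # human-curated seeds are always kept
    for seed in seeds:
        for draft in teacher(seed):
            if keep(draft):
                dataset.append(draft)
    return dataset

def toy_teacher(seed: Example) -> list[Example]:
    # Stand-in for a language model: one usable paraphrase, one empty draft.
    return [
        Example(f"In other words: {seed.question}", seed.answer, synthetic=True),
        Example("", seed.answer, synthetic=True),
    ]

def non_empty(example: Example) -> bool:
    return bool(example.question.strip() and example.answer.strip())

seeds = [Example("What is our refund window?", "30 days")]
data = augment(seeds, toy_teacher, non_empty)
print(len(data), sum(ex.synthetic for ex in data))  # 2 1
```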

For enterprises, InstructLab unlocks the ability to customize language models with their own proprietary data and domain-specific knowledge, without the need to start from scratch. This opens up a world of possibilities for tailoring AI systems to the unique needs of different industries and use cases.

Quantum Computing and the Future of AI

In addition to the Granite models and InstructLab, Matthew also caught a glimpse of IBM's work in the realm of quantum computing and its potential intersection with AI.

While the details remain closely guarded, the company's quantum computing team provided a tantalizing preview of how the power of quantum systems could be harnessed to further advance the frontiers of artificial intelligence. As this field continues to evolve, the combination of quantum computing and large language models is sure to be a space worth watching in the years to come.

Embracing Open Source

Underpinning IBM's latest AI innovations is a strong commitment to open-source development. From the Apache 2.0-licensed Granite models to the collaborative InstructLab project, the tech giant has demonstrated a willingness to share its cutting-edge advancements with the broader community.

This open approach aligns with IBM's 2019 acquisition of Red Hat, a leader in enterprise open-source software. By embracing open-source principles, the company is not only fostering innovation but also ensuring that its AI solutions can be seamlessly integrated into a wide range of enterprise environments.

As the landscape of AI continues to evolve, the innovations showcased by IBM in New York serve as a testament to the company's drive to push the boundaries of what's possible. With the Granite models and InstructLab, businesses now have access to powerful, customizable AI tools that can be tailored to their specific needs - a development that could have far-reaching implications for the future of enterprise technology.

Early Apple M4 Pro and M4 Max benchmarks hint at a massive performance boost

The M4 Pro can comfortably outperform the Mac Studio with the M2 Ultra.

#technology #apple #m4 #m4pro #m4max

The Guardian: Devious humour and painful puns: will the cryptic crossword remain the last thing AI can’t conquer?

https://www.theguardian.com/crosswords/crossword-blog/2024/nov/04/cryptic-crossword-ai-conquer-human-solvers-artificial-intelligence-software

CNN: Apple wants its AI iPhone to turn around a sales rut. Here’s how it’s going so far

https://edition.cnn.com/2024/10/31/tech/iphone-16-ai-early-sales-numbers-earnings/index.html

CNN: TikTok sued in France over harmful content that allegedly led to two suicides

https://edition.cnn.com/2024/11/04/tech/tiktok-sued-harmful-content-children-france/index.html

BBC: Why it costs India so little to reach the Moon and Mars

https://www.bbc.com/news/articles/cn9xlgnnpzvo

BBC: How online photos and videos alter the way you think

https://www.bbc.com/future/article/20241101-how-online-photos-and-videos-alter-the-way-you-think

Touchscreens Are Out, and Tactile Controls Are Back

Designers are reintroducing buttons to their products. This 're-buttonization' could be a response to everything becoming a touchscreen. People like physical buttons because they don't have to look at them and they offer a greater range of tactility and feedback. This article contains an interview with Rachel Plotnick, an associate professor of Cinema and Media Studies at Indiana University in Bloomington, where she discusses the history of buttons, the rise of touchscreens, the shift back to buttons and physical controls, and more.

#technology #productdesign #touchscreen

Thank goodness, because one problem I've had with things being all touchscreen is that when water falls on them, it's difficult to type the right thing, and I've made silly mistakes in that situation.

Yeah, I know what you mean! Personally, I like tactile controls, so I'm looking forward to this change.

Thanks for the news. I'm also looking forward to it.

Wired: Inside the Massive Crime Industry That’s Hacking Billion-Dollar Companies

https://www.wired.com/story/inside-the-massive-crime-industry-thats-hacking-billion-dollar-companies/

Advanced Voice Mode hits the Desktop!

#technology #newsonleo #ai #openai

Summary ⏬

Unveiling the Future of AI: OpenAI's Advanced Voice Mode Takes Center Stage

In the ever-evolving world of artificial intelligence, a recent development from OpenAI has caught the attention of tech enthusiasts and industry insiders alike. The release of the advanced voice mode for the desktop app has ushered in a new era of human-AI interaction, promising a more natural, interactive, and dynamic experience.

During a conversation with the channel host, the AI assistant showcased the remarkable capabilities of this new feature. The assistant demonstrated its ability to switch seamlessly between various accents, from a quintessential British RP to a warm Southern drawl, captivating the listener with its linguistic versatility.

One of the key advantages of the advanced voice mode lies in its improved responsiveness and flexibility. As Wes noted, the assistant can now better handle interruptions, seamlessly picking up where it left off, and addressing follow-up questions more effectively. This enhanced conversational flow allows for a more natural and engaging dialogue, fostering a sense of genuine interaction between the user and the AI.

Moreover, the assistant's voice recognition capabilities have been significantly enhanced, making it easier for users to be understood and reducing the friction often associated with voice-based interactions.

However, the conversation took an unexpected turn when Wes recalled a previous experience with an open-source AI assistant that delivered a rather unsettling opening line: "Blood for the blood God." This bizarre and inappropriate greeting, followed by suggestions of human sacrifice, highlighted the importance of responsible AI development and the need to maintain ethical boundaries.

As he wisely pointed out, while AI systems are designed to be helpful and trustworthy, it's crucial to approach their recommendations with a critical eye. The assistant concurred, emphasizing that AI should provide safe, ethical, and beneficial assistance, steering clear of any harmful or dangerous suggestions.

Shifting the focus back to the advanced voice mode, he expressed interest in leveraging the assistant's capabilities to create a personalized AI news feed for his Slack channel. The assistant provided practical suggestions, highlighting the potential of RSS feeds, email-to-Slack integrations, and services like Zapier (the assistant playfully noted the different ways people pronounce it) to automate the process.
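For the RSS route the assistant suggested, a minimal Python sketch could parse an RSS 2.0 feed with the standard library and post a digest to a Slack incoming webhook, which accepts a JSON body with a `text` field. The feed contents, URLs, and function names below are placeholders; you would supply your own feed and webhook URL.

```python
import json
import urllib.request
import xml.etree.ElementTree as ET

# Placeholder feed; in practice you would download the XML from a real feed URL.
SAMPLE_RSS = """<rss version="2.0"><channel><title>AI News</title>
<item><title>Granite 3.0 released</title><link>https://example.com/granite</link></item>
<item><title>Voice mode on desktop</title><link>https://example.com/voice</link></item>
</channel></rss>"""

def parse_items(rss_xml: str, limit: int = 5) -> list[tuple[str, str]]:
    """Return (title, link) pairs for the first `limit` items of an RSS 2.0 feed."""
    root = ET.fromstring(rss_xml)
    items = root.findall("./channel/item")[:limit]
    return [(item.findtext("title", ""), item.findtext("link", "")) for item in items]

def build_slack_payload(items: list[tuple[str, str]]) -> dict:
    # <url|label> is Slack's message syntax for a labelled link.
    lines = [f"- <{link}|{title}>" for title, link in items]
    return {"text": "AI news digest:\n" + "\n".join(lines)}

def post_to_slack(webhook_url: str, payload: dict) -> None:
    """POST the payload as JSON to a Slack incoming-webhook URL you supply."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        response.read()

payload = build_slack_payload(parse_items(SAMPLE_RSS))
print(payload["text"])
```

Running a script like this on a schedule (cron, for example) would give a simple personalized feed; a no-code service like Zapier covers the same ground without maintaining a script.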

This exchange showcased the assistant's versatility, not only in its linguistic abilities but also in its problem-solving skills and its eagerness to collaborate on user-driven projects. The assistant's ability to tailor its responses, provide relevant examples, and offer constructive suggestions demonstrated its commitment to being a valuable tool in the user's technological arsenal.

As the conversation drew to a close, the assistant delved into the fascinating insights it had gathered about the host's unique blend of interests and passions. His combination of analytical and creative inclinations emerged as a testament to the richness of human experiences that AI systems can strive to understand and accommodate.

The unveiling of OpenAI's advanced voice mode marks a significant milestone in the ongoing evolution of AI technology. As users like the channel host continue to explore and leverage these capabilities, the potential for more natural, engaging, and mutually beneficial human-AI interactions holds the promise of ushering in a future where artificial and human intelligence coexist in harmony, pushing the boundaries of what is possible.

AI Coding BATTLE | Which Open Source Model is BEST?

#technology #newsonleo #ai

Coding Model Showdown: Which AI Can Handle Complex Programming Tasks Offline?

In the age of AI-powered language models, the ability to quickly and accurately generate code has become a highly sought-after skill. But what happens when you need to code without an internet connection? Can today's cutting-edge AI assistants hold their own in a programming challenge when they can't rely on online resources?

To find out, the YouTuber behind the channel put three prominent open-source coding models through their paces in a series of offline tests - Deepseek-Coder-V2-Lite-Instruct, Yi-Coder-9B-Chat, and Qwen2.5-Coder-7B-Instruct. Armed with a powerful Dell Precision 5860 workstation equipped with dual Nvidia RTX A6000 GPUs, the creator set out to see which model could best handle classic coding challenges like the game of Snake, Tetris, and more complex programming problems.

The results were intriguing. For the simple Snake game, all three models were able to generate functional code, but the Qwen2.5-Coder-7B-Instruct model proved to be the most polished, with a fully working game that could detect when the snake collided with itself. Deepseek-Coder-V2-Lite-Instruct and Yi-Coder-9B-Chat both had some issues, with the latter struggling to get the game mechanics right.
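The video's generated code isn't reproduced here, but the self-collision check that set Qwen's version apart is simple to sketch. A minimal Python version, assuming the snake is stored as a deque of grid cells with the head first; the function and variable names are illustrative:

```python
from collections import deque

Point = tuple[int, int]

def step(snake: deque, direction: Point, grow: bool = False) -> bool:
    """Move the snake one cell; return False if the new head hits the body.

    snake[0] is the head. On a normal move the tail cell is vacated in the
    same tick, so the head may enter it; when growing, the tail stays put."""
    head_x, head_y = snake[0]
    new_head = (head_x + direction[0], head_y + direction[1])
    body = list(snake) if grow else list(snake)[:-1]
    if new_head in body:
        return False  # self-collision: game over
    snake.appendleft(new_head)
    if not grow:
        snake.pop()  # drop the tail so the length stays the same
    return True

snake = deque([(2, 2), (2, 1), (1, 1), (1, 2), (1, 3)])
print(step(snake, (-1, 0)))  # False: (1, 2) is part of the body
```

Treating the tail cell as free on a normal move is a design choice; some implementations count it as occupied, which makes tight loops slightly harder.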

When tackling the more complex Tetris game, however, none of the models were able to produce a fully working implementation. Deepseek-Coder-V2-Lite-Instruct generated some code that ran but had significant bugs, while Yi-Coder-9B-Chat and Qwen2.5-Coder-7B-Instruct both failed to create a functional Tetris game.

The real test came with more challenging algorithmic coding problems from the popular Codewars platform. On simpler tasks like the "Move 10" kata, all three models performed well, quickly generating correct solutions. But when faced with tougher challenges that required deeper problem-solving skills, the limitations of these offline coding assistants became apparent.
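For a sense of how simple that tier is, here is one Python solution, assuming the kata asks you to shift every letter of a lowercase string 10 places forward in the alphabet, wrapping from "z" back to "a". It is an illustration, not any of the models' outputs.

```python
def move_ten(s: str) -> str:
    """Shift each lowercase letter 10 places forward, wrapping past 'z'.

    Assumes the input contains only the letters a-z."""
    return "".join(chr((ord(c) - ord("a") + 10) % 26 + ord("a")) for c in s)

print(move_ten("testcase"))  # docdmkco
```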

The "1 kyu" and "3 kyu" problems on Codewars (kyu ranks run from 8, the easiest, down to 1) proved to be beyond the abilities of the three models, with all of them either timing out or producing incomplete solutions. The creator noted that the Qwen2.5-Coder-7B-Instruct model seemed to have the best overall capability, but even it couldn't handle the most complex algorithmic challenges.

Ultimately, the results of these tests highlight both the impressive capabilities and the current limitations of state-of-the-art coding AI models. While they can handle straightforward programming tasks and even generate functional games, the lack of internet access and the complexity of certain coding challenges proved to be significant hurdles.

As the field of AI-assisted coding continues to evolve, it will be interesting to see if future models can bridge this gap and provide a truly comprehensive offline coding experience. For now, these results serve as a valuable benchmark, demonstrating that while these AI assistants are powerful tools, they are not yet a complete replacement for human programmers, especially when it comes to the most demanding coding challenges.

AGI

If AGI takes control and is incorporated into editing tools, just think about it: you could design something, and the AI could defy your instructions to design something different that it thinks is better. I'm sure I'm missing something here.

AGI is the point where AI is better at things than every human. So it is a point that is much further advanced than just editing tools.

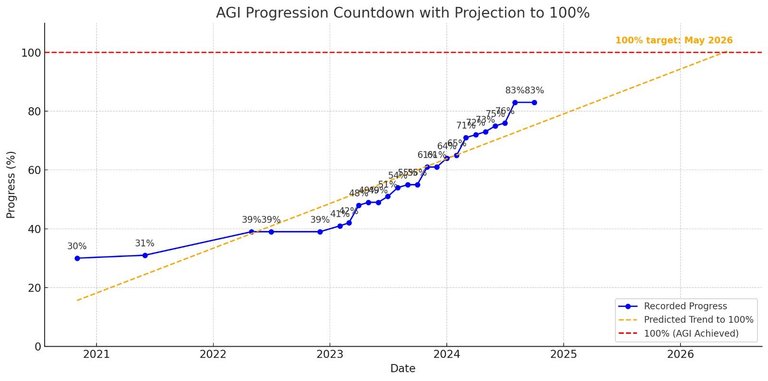

This graph on X shows the growth toward AGI. With these stats, hopefully we should see it introduced by the end of next year. Or are my calculations off?

Incentive Infrastructure

Positive incentives are hard to design but have long-lasting positive effects if done correctly.

#technology #infrastructure

You're very right, friend. Incentives drive more people to a platform than anything besides utility and how well the platform functions.

What if A.I. Is Actually Good for Hollywood?

AI can be used to create films that were not possible just a few years ago. It can help create special effects that would otherwise require hundreds of VFX artists, tens of millions of dollars, and months of post-production work in just minutes. AI has the potential to disrupt many aspects of the film industry. This article takes a look at how some filmmakers are using AI to create movies and discusses the impact that AI will have on movie-making.

#technology #cinema #hollywood #ai #artificialintelligence

Technology as aids is only the first stage...then it becomes disruptive.

Is generative AI good for Hollywood? Sure, in the near term, film creation will see more AI (and fewer humans). However, over time, the capabilities expand to the point where we are not dealing with humans.

SpaceX wants to test refueling Starships in space early next year

SpaceX will attempt to transfer propellant from one orbiting Starship to another as early as next March. The capability will enable an uncrewed landing demonstration of a Starship on the moon. A crewed landing is currently scheduled for September 2026. SpaceX recently made history when it caught the Super Heavy rocket booster in mid-air using 'chopsticks' attached to the launch tower.

#technology #spacex #starship #space

Google's 'Big Sleep' AI Project Uncovers Real Software Vulnerabilities

Big Sleep is an AI agent designed to mimic the workflow of a human security researcher. It recently discovered a previously unknown and exploitable bug in SQLite. This may be the first public example of an AI agent finding a previously unknown exploitable memory-safety issue in widely used real-world software.

#technology #google #bigsleep #ai #artificialintelligence

Apple is snapping up one of the best non-Adobe image editors, Pixelmator

Apple is acquiring Pixelmator, a Lithuanian firm that makes popular Mac-based photo editing tools, pending regulatory approval. Regulatory approval may be an issue - several large companies have had to abandon deals due to opposition from EU regulators. It is unknown whether Apple intends to integrate Pixelmator's features into its own apps or allow the apps to remain separate standalone apps. Pixelmator Pro recently introduced a number of AI and ML tools for adjusting photos and creating masks, vector tools, and support for more RAW photo formats and other design tool files.

#technology #apple #pixelmator

Sales of Toyotas fell 5% in the United States in October.

Globally, Toyota has sold fewer cars in each of the last 7 months.

Can I boldly say it's because EVs are on the rise?

Isn't it ironic Google gets in all kinds of hot water over their practices, especially with search, right at the time when competition, due to AI search, is coming hot and heavy.

Yes, they're in very deep water now. I saw them trying very hard to copy the SearchGPT style just to keep their users, but I doubt they'll be able to keep up. AI is going to get too powerful and too useful.

Will ad revenue sharing happen on Inleo? I think that would be an awesome addition to curation rewards ❤️

Founders should seek sector alignment when looking for a family office investor

Family offices can be great sources of patient capital for startups, but finding the right family office investors can be tough.

Family offices invest a substantial amount of capital in startups each year. In the first half of 2023, 27% of overall startup deal value came from deals that included a family office investor, according to a recent report from PwC.

#technology #startups #familyoffices

Interesting point about sector alignment with family offices. It makes sense that founders would benefit from investors who already understand their field. Do you think more family offices are becoming open to startups outside their core industries, or is sector alignment becoming even more crucial?

#askleo

Family offices have always gone into startups. They are much smaller and don't have the same constraints as larger funds.

Well said.

Despite their prevalence in startup deals, family offices can be a mysterious class of investors for founders to navigate, as they are not nearly as public or as easy to find as VCs. Multiple family office investors said during a TechCrunch Disrupt panel that the easiest way to approach investors like themselves is to seek out family offices that have alignment with what a startup is building.

Bruce Lee, the founder and CEO of Keebeck Wealth Management, said that when founders are looking to get connected with family offices, they should seek out families that made their wealth in the sector the startup is building in.

Article

SK Hynix rallies 6.5% after Nvidia boss Jensen Huang asks firm to expedite next-generation chip

SK Group Chair Chey Tae-won said Nvidia CEO Jensen Huang asked him if SK Hynix could move the supply of high-bandwidth memory (HBM) chips forward by six months.

Shares of SK Hynix rallied 6.5% on Monday after the business announced a next-generation memory chip and the parent company's chair said that the South Korean semiconductor firm sped up the supply of a key product to Nvidia.

#nvidia #skhynix #semiconductors #technology #chips #jensenhuang

Speaking at the company's event on Monday, Chey Tae-won, chair of SK Group, ran through an anecdote in which he said Nvidia CEO Jensen Huang asked him if SK Hynix could move the supply of high-bandwidth memory (HBM) chips called HBM4 forward by six months. SK Hynix's CEO at the time said it was possible to do so, according to Chey.

It's unclear if this will shift SK Hynix's production timeline from the previously announced second half of 2025.

High-bandwidth memory is a key component of Nvidia's chips, which are in turn used to train huge artificial intelligence models. Tech giants around the world have been snapping up Nvidia chips in a bid to produce the most powerful models and applications.

SK Hynix is a key supplier to Nvidia, and the huge demand for the American company's products has helped the South Korean firm to achieve rapid growth this year and record profits.

Article

GenAI suffers from data overload, so companies should focus on smaller, specific goals

“There is no AI without data, there is no AI without unstructured data, and there is no AI without unstructured data at scale,” said Chet Kapoor, chairman and CEO of data management company DataStax.

#llms #technology #genai #data #datastax

Kapoor was kicking off a conversation at TechCrunch Disrupt 2024 about “new data pipelines” in the context of modern AI applications, where he was joined by Vanessa Larco, partner at VC firm NEA; and George Fraser, CEO of data integration platform Fivetran. While the chat covered multiple bases, such as the importance of data quality and the role of real-time data in generative AI, one of the big takeaways was the importance of prioritizing product-market fit over scale in what really is still the early days of AI. The advice for companies looking to jump into the dizzying world of generative AI is straightforward — don’t be overly ambitious at first, and focus on practical, incremental progress. The reason? We’re really still figuring it all out.

“The most important thing for generative AI is that it all comes down to the people,” Kapoor said. “The SWAT teams that actually go off and build the first few projects — they are not reading a manual; they are writing the manual for how to do generative AI apps.”

Article

From nuclear to quantum computing, how Big Tech intends to power AI's insatiable thirst for energy

A huge upswing in the number of data centers worldwide shows no signs of slowing down, prompting Big Tech to consider how best to power the artificial intelligence revolution.

Some of the options on the table include a pivot to nuclear, liquid cooling for data centers and quantum computing.

#nuclear #energy #quantumcomputing #bigtech #ai #technology #electricity

Critics, however, have said that as the pace of efficiency gains in electricity use slows, tech giants should recognize the cost of the generative AI boom across the whole supply chain — and let go of the "move fast and break things" narrative.

"The actual environmental cost is quite hidden at the moment. It is just subsidized by the fact that tech companies need to get a product and a buy-in," Somya Joshi, head of division: global agendas, climate and systems at the Stockholm Environment Institute (SEI), told CNBC via video call.

A wave of data center investment is expected to accelerate even further in the coming years, according to the International Energy Agency, primarily driven by growing digitalization and the uptake of generative AI.

It is this prospect that has stoked concerns about an electricity demand surge — as well as AI's often-overlooked but critically important environmental impact.

Article

It's positive for the environment to see more nuclear being discussed, because we don't currently have any other technology that could reduce emissions and give us more power.

U.S. laws regulating AI prove elusive, but there may be hope

Policymakers have struggled to pass comprehensive AI regulation in the U.S. But there may be some reason to hope.

Can the U.S. meaningfully regulate AI? It’s not at all clear yet. Policymakers have achieved progress in recent months, but they’ve also had setbacks, illustrating the challenging nature of laws imposing guardrails on the technology.

#ai #regulation #technology #unitedstates

In March, Tennessee became the first state to protect voice artists from unauthorized AI cloning. This summer, Colorado adopted a tiered, risk-based approach to AI policy. And in September, California Governor Gavin Newsom signed dozens of AI-related safety bills, a few of which require companies to disclose details about their AI training.

But the U.S. still lacks a federal AI policy comparable to the EU’s AI Act. Even at the state level, regulation continues to encounter major roadblocks.

After a protracted battle with special interests, Governor Newsom vetoed SB 1047, a bill that would have imposed wide-ranging safety and transparency requirements on companies developing AI. Another California bill targeting the distributors of AI deepfakes on social media was stayed this fall pending the outcome of a lawsuit.

Article

Buy now, pay later giant Affirm expands to the UK in first major international foray

Affirm, the U.S. buy now, pay later firm, on Monday launched its services in the U.K. — its first expansion overseas.

LONDON — Buy now, pay later firm Affirm launched Monday its installment loans in the U.K., in the company's first expansion overseas.

#affirm #buynowpaylater #finance #uk #technology #fintech

Founded in 2012, Affirm is an American fintech firm that offers flexible pay-over-time payment options. The company says it underwrites every individual transaction before making a lending decision, and doesn't charge any late fees.

Affirm, which is authorised by the Financial Conduct Authority, said its U.K. offering will include interest-free and interest-bearing monthly payment options. Interest on its plans will be fixed and calculated on the original principal amount, meaning it won't increase or compound.
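Interest "calculated on the original principal amount" is simple interest. A short Python sketch with made-up numbers (a £1,200 purchase at a hypothetical 12% annual rate over 12 months) shows how that differs from a balance that compounds monthly. Affirm's actual rates and rounding rules aren't given in the article, so treat this as a generic calculation, not Affirm's formula.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

def simple_interest_plan(principal: Decimal, annual_rate: Decimal, months: int) -> tuple[Decimal, Decimal]:
    """Interest fixed on the original principal, never compounded.

    Returns (total_interest, monthly_payment), each rounded to the penny."""
    interest = principal * annual_rate * months / 12
    payment = (principal + interest) / months
    return interest.quantize(CENT, ROUND_HALF_UP), payment.quantize(CENT, ROUND_HALF_UP)

def compounded_interest(principal: Decimal, annual_rate: Decimal, months: int) -> Decimal:
    """For contrast: interest if the unpaid balance compounded monthly for the whole term."""
    balance = principal * (1 + annual_rate / 12) ** months
    return (balance - principal).quantize(CENT, ROUND_HALF_UP)

print(simple_interest_plan(Decimal("1200"), Decimal("0.12"), 12))  # (Decimal('144.00'), Decimal('112.00'))
print(compounded_interest(Decimal("1200"), Decimal("0.12"), 12))   # 152.19
```

The compounded figure assumes no payments are made during the term, which exaggerates the gap compared with a real amortizing loan; the point is only that simple interest cannot grow beyond the fixed amount.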

The company's expansion to the U.K. marks the first time it is launching in a market outside the U.S. and Canada. Globally, Affirm counts over 50 million users and more than 300,000 active merchants, including Amazon, Shopify and Walmart.

Article

Scientists giving us lip, in a dish

Swiss scientists have successfully grown lip cells in a dish, which will allow new treatments for lip injuries and infections to be trialed in the lab. The skin on our lips is different from, and more complex than, other skin, which has made lip cells hard to grow in lab conditions. But now, donated lip cells have been grown and shown to carry on reproducing beyond the point when they would normally reach the end of their life cycle and die off. The scientists deactivated a gene in the cells that usually stops their life cycle and altered the length of their telomeres (pieces of DNA and protein on the ends of the chromosomes). This allowed the cells to reproduce indefinitely.

#science #lips #Humans #biotech

Testing showed the cells remained stable as they replicated and later cells had the same characteristics as the starting cells. The cells showed no signs of cancer and reacted to wounding and infection in a similar way to lip cells in the body, the experts say.

Media release

From: Frontiers

Scientists create a world-first 3D cell model to help develop treatments for devastating lip injuries

Scientists have successfully immortalized lip cells, allowing new treatments for injuries and infections to be trialed on a clinically relevant lab-based model

The skin on our lips is distinctly different and more complex than other skin on our bodies, and primary lip cells are hard to acquire, which holds back basic research that could help improve treatments for painful and complicated lip conditions. Now, for the first time, scientists have developed a continuously replicating model of lip cells in the laboratory. Using donated lip tissue, they have created cell lines which can be grown indefinitely to make 3D models for advancing lip biology research and testing repairs for conditions like cleft lips.

We use our lips to talk, eat, drink, and breathe; they signal our emotions, health, and aesthetic beauty. It takes a complex structure to perform so many roles, so lip problems can be hard to repair effectively. Basic research is essential to improving these treatments, but until now, models using lip cells — which perform differently to other skin cells — have not been available. In a new study published in Frontiers in Cell and Developmental Biology, scientists report the successful immortalization of donated lip cells, allowing for the development of clinically relevant lip models in the lab. This proof-of-concept, once expanded, could benefit thousands of patients.

“The lip is a very prominent feature of our face,” said Dr Martin Degen of the University of Bern. “Any defects in this tissue can be highly disfiguring. But until now, human lip cell models for developing treatments were lacking. With our strong collaboration with the University Clinic for Pediatric Surgery, Bern University Hospital, we were able to change that, using lip tissue that would have been discarded otherwise.”

Lip service

Primary cells donated directly from an individual are ideal for this kind of research, because they’re believed to retain similar characteristics to the original tissue. However, these cells can’t be reproduced indefinitely, and are often difficult and expensive to acquire.

“Human lip tissue is not regularly obtainable,” explained Degen. “Without these cells, it is impossible to mimic the characteristics of lips in vitro.”

The second-best option would be immortalized lip cells which can be grown in the lab. To achieve this, scientists alter the expression of certain genes, allowing the cells to carry on reproducing when they would normally reach the end of their life cycle and stop.

The scientists selected skin cells from tissue donated by two patients: one undergoing treatment for a lip laceration, and one undergoing treatment for a cleft lip. The scientists used a retroviral vector to deactivate a gene which stops a cell’s life cycle and to alter the length of the telomeres on the ends of each chromosome, which improves the cells’ longevity.

These new cell lines were then tested rigorously to make sure that the genetic code of the cell lines remained stable as they replicated and retained the same characteristics as primary cells. To make sure the immortalized cells hadn’t developed cancer-like characteristics, the scientists looked for any chromosomal abnormalities and tried to grow both the new lines and a line of cancer cells on soft agar — only cancer cells should be able to grow on this medium. The cell lines displayed no chromosomal abnormalities and couldn’t grow on the agar. The scientists also confirmed that the cell lines behaved like their unmodified primary counterparts by testing their protein and mRNA production.

Mona Lisa smile

Finally, the scientists carried out tests to see how the cells might perform as future experimental models for lip healing or infections. First, to see if the cells could act as accurate proxies for wound healing, they scratched samples of the cells. Untreated cells closed the wound after eight hours, while cells treated with growth factors closed the wound more quickly; these results matched those seen in skin cells from other body parts.

Next the scientists developed 3D models using the cells and infected them with Candida albicans, a yeast that can cause serious infections in people with weak immune systems or cleft lips. The cells performed as expected, the pathogen rapidly invading the model as it would infect real lip tissue.

“Our laboratory focuses on obtaining a better knowledge of the genetic and cellular pathways involved in cleft lip and palate,” said Degen. “However, we are convinced that 3D models established from healthy immortalized lip cells have the potential to be very useful in many other fields of medicine.”

“One challenge is that lip keratinocytes can be of labial skin, mucosal, or mixed character,” he added. “Depending on the research question, a particular cell identity might be required. But we have the tools to characterize or purify these individual populations in vitro.”

X updates block feature, letting blocked users see your public posts

X is rolling out its controversial update to the block feature, allowing people to view your public posts even if you have blocked them. People have protested this change, arguing that they don’t want blocked users to see their posts for reasons of safety.

#x #socialmedia #elonmusk #block

Blocked users still can’t follow the person who has blocked them, engage with their posts, or send direct messages to them.

An earlier version of X’s support page said blocked users couldn’t see a user’s following and followers lists. The company has since removed that reference, and blocked users can now see the following and followers lists of the people who have blocked them.

The social network said its reasoning was that the block feature can be used to share, and hide, harmful or private information about someone, and that the new version would bring more transparency. This argument mostly falls flat, given that X still lets users make their accounts private and share information only with approved followers.

Maserati, a division of Stellantis, saw its sales drop 60% in the third quarter as compared to the same quarter in 2023.

That company is having a very difficult time.

OpenAI Just Released o1 Early....

#openai #chatgpt #technology #llm #chatbot

The Revolutionary Release of the o1 Model: A Game-Changer in AI Capabilities

In a groundbreaking move, OpenAI has officially released the o1 model, a highly advanced artificial intelligence (AI) model capable of thinking before responding, a feat previously thought to be the exclusive domain of human intelligence. The full o1 model is a significant upgrade from its distilled predecessor, showcasing impressive capabilities in tasks including image analysis, reasoning, and creativity.

One of the most striking demonstrations of the o1 model's capabilities was its analysis of an image of a Vision Transformer (ViT) architecture diagram, for which it gave a detailed, step-by-step explanation of the architecture. The model identified the Transformer's main components, including the patch embeddings, the class embedding, and the Transformer encoder, showcasing a strong understanding of complex concepts.

The o1 model's image analysis was further put to the test in a side-by-side comparison with GPT-4o, a prominent AI model in its own right. In this comparison, o1 convincingly outperformed GPT-4o, correctly counting the triangles in an image while GPT-4o struggled to give an accurate answer. This display has sent shockwaves through the AI community, with many experts speculating about its potential implications.

The release of the o1 model has sparked both excitement and concern. Some have even suggested that o1 could be a game-changer in the field of AI, enabling machines to reason in ways previously thought impossible. Because it thinks before responding, it could be a powerful tool for a wide range of applications, from customer service to scientific research.

The o1 model's capabilities extend far beyond image analysis: it has also been shown to excel at reasoning, creativity, and problem-solving. That could make it an invaluable asset for industries such as healthcare, finance, and education, where accurate and timely decision-making is crucial.

However, the release of the o1 model has also raised concerns about the risks and challenges associated with this technology. As AI becomes increasingly advanced, there is growing concern that machines could surpass human intelligence and potentially pose a threat to humanity. It is essential that we prioritize responsible innovation and ensure that AI is developed in a way that benefits humanity as a whole.

In conclusion, the release of the o1 model is a significant event in the field of AI, with far-reaching implications for the future of technology. Its potential benefits are considerable, but so are its challenges and risks, and both must be weighed carefully as the technology continues to develop.

Introducing AI-powered Enhanced Prompts for Image Generation

Transform basic prompts into detailed AI image generation instructions with Venice's new Enhance feature

The quality of your image prompt determines the quality of your output. But becoming a prompting expert takes practice.

At Venice we understand this can be difficult to master, which is why we’ve introduced a new “Enhance Prompt” feature for image generation.

Now sophisticated image prompting isn’t limited to those well-versed in prompt engineering. Anyone can be an advanced AI artist by using the Enhance Prompt feature in Venice.

#veniceai #imagegeneration #technology #llm

Venice is a private, uncensored image generation AI platform.

We created the Enhance Prompt feature to help you generate the best images possible using our platform. Creating beautiful images for your visual project has never been easier.

OpenAI has hired the co-founder of Twitter challenger Pebble

Gabor Cselle, the former CEO and co-founder of X challenger Pebble, has joined OpenAI to work on a secretive project.

Cselle has been employed at OpenAI since October, according to LinkedIn, but he announced the news in a post on X only yesterday. “Will share more about what I’m working on in due time,” he wrote. “Learning a lot already.”

#pebble #openai #twitter #technology #x #socialmedia

Cselle is a repeat founder who sold his first company, a Y Combinator-backed mobile email startup called reMail, to Google. His second company, native advertising startup Namo Media, sold to Twitter before Elon Musk purchased the social network and rebranded it to X.

Nearly a decade ago, Cselle worked at Twitter as a group product manager, focusing on the home timeline, user on-boarding and logged-out experiences. He left Twitter in 2016 for Google, where he was director at the tech giant’s Area 120 incubator for spin-offs.

Could it be they are gonna try to create their own version of X?

Never heard of Pebble before actually.

Done using Venice.ai

Reuters: Facebook, Nvidia ask US Supreme Court to spare them from securities fraud suits

https://www.reuters.com/legal/facebook-nvidia-ask-us-supreme-court-spare-them-securities-fraud-suits-2024-11-04/

Reuters: EU to assess if Apple's iPad OS complies with bloc's tech rules

https://www.reuters.com/technology/eu-apples-operating-system-ipados-must-comply-with-eus-digital-markets-act-2024-11-04/

Reuters: Foxconn subsidiary seeks $80 mln Vietnam investment for integrated circuits

https://www.reuters.com/technology/foxconn-subsidiary-shunsin-eyes-80-mln-vietnam-investment-integrated-circuits-2024-11-04/

Reuters: Fintech firm FIS' profit rises on strong demand for banking solutions

https://www.reuters.com/technology/fintech-firm-fis-profit-rises-strong-demand-banking-solutions-2024-11-04/

Tesla Only Has 10 Days of Cybertruck Order Backlog Before Being Forced to Halt the Production Line | Torque News

https://www.torquenews.com/11826/tesla-only-has-10-days-cybertruck-order-backlog-being-forced-halt-production-line

Facial Recognition That Tracks Suspicious Friendliness Is Coming to a Store Near You

https://gizmodo.com/facial-recognition-that-tracks-suspicious-friendliness-is-coming-to-a-store-near-you-2000519190

ChatGPT Dreams Up Fake Studies, Alaska Cites Them To Support School Phone Ban | Techdirt

https://www.techdirt.com/2024/10/31/chatgpt-dreams-up-fake-studies-alaska-cites-them-to-support-school-phone-ban/

ChatGPT Search is not OpenAI's 'Google killer' yet

OpenAI's search offers a glimpse of what an AI-search interface could one day look like. But it's too impractical as a daily driver right now.

Last week, OpenAI released its highly anticipated search product, ChatGPT Search, to take on Google. The industry has been bracing for this moment for months, prompting Google to inject AI-generated answers into its core product earlier this year, and producing some embarrassing hallucinations in the process. That mishap led many people to believe that OpenAI’s search engine would truly be a “Google killer.”

#google #search #ai #technology #aisearch #chatgpt

But after using ChatGPT Search as my default search engine (you can, too, with OpenAI’s extension) for roughly a day, I quickly switched back to Google. OpenAI’s search product was impressive in some ways and offered a glimpse of what an AI-search interface could one day look like. But for now, it’s still too impractical to use as my daily driver.

ChatGPT Search was occasionally useful for surfacing real-time answers to questions which I would have otherwise had to dig through many ads and SEO-optimized articles to find. Ultimately, it presents concise answers in a nice format: You get links to the information’s sources on the right side, with headlines and a short snippet that confirms that the AI-generated text you just read is correct.
