The last time I wrote about the concept of self governance was in "Quantified Self, The Reputation Economy, and AI enabled Self Governance". This time I will provide a bit more detail on how self governance works and how I envision some of the possible technical aspects of this reputation economy.

The concept of stakeholders in the context of self governance

In self governance, just as businesses and corporations have "shareholders", an individual has "stakeholders". These stakeholders are anyone who cares what happens to that individual, anyone who is taking a position on the success of the individual, anyone in the individual's corner. This could be friends, colleagues, family members, or anyone who depends on the individual or who may depend on them in the future. So this could be quite a large group, and typically an individual may not even know who all of these people are.

Why public opinion matters

Public opinion determines reputation. A person who has a group of stakeholders should and must care what that group of stakeholders thinks. These stakeholders are ultimately the supporters behind the individual. If we are talking about books, then these are the most loyal fans who buy every book. If we are talking about family, then these are the people who will nurse the individual back to health or toward whom the individual feels the most affinity. In general, the idea is that each person has a core group of perhaps 50 to 200 people, and this core group generally makes up the web of influencers on the behavior of the individual. Many individuals care about the opinions of their core group more than anything else in life.

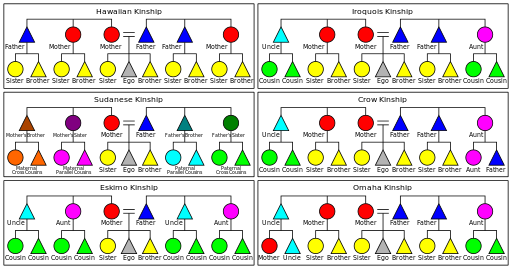

So while public opinion does determine reputation; in specific and in context it can include but is not limited to the reputation within what is known as the "kinship group" which makes up the center of gravity.

See image example:

By ZanderSchubert [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)], from Wikimedia Commons

This is supported by the scientific literature: evidence suggests that contract enforcement can be facilitated through social networks (Chandrasekhar, Kinnan, & Larreguy, 2014). Contract enforcement based on honor and reputation is possible when those involved are considered "socially close". To think of this in a different way, self governance in the context of feedback would mean that Alice can receive a real time measurement of how her stakeholders might react to a particular decision she is contemplating, based on their shared or common values. So in a certain way, the ability to do moral analysis or run a moral simulation is the computational equivalent of a conscience: imagining how certain people might react through mental visualization, and mapping out the potential reactions of different important stakeholders. This is something which can be facilitated in a more automated and computerized fashion as technology allows for moral analysis.

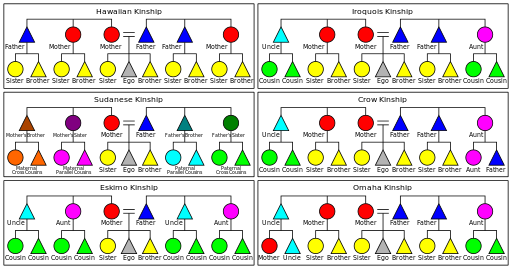

What is stakeholder analysis and how does it contribute to moral analysis?

By Zirguezi [CC0], from Wikimedia Commons

Stakeholder analysis means assessing a decision's potential impact on your stakeholders. Perhaps one of the reasons morality does not scale up is the inability (Dunbar's number again) of a person to manage this extremely computationally expensive task. Considering how five people might react to a decision may be realistic, but this quickly becomes a complexity bomb when 5 becomes 500. Who can actually use their imagination to take on the different perspectives of 500 different people? In my opinion this is never going to scale, and that is one of the reasons why I make the audacious claim that morality doesn't scale. It is true that people can perhaps try to be moral when it's half a dozen to a dozen people in a room, but when it's 500 people, then 5,000, then 50,000, the more people you add the more the combinatorial complexity explodes. It's simply not feasible, for the same reason it's not feasible in chess to make an optimal move on a board with infinite squares.
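To make that growth concrete, here is a minimal Python sketch. It rests on two modelling assumptions of mine that are only one way to read "combinatorial complexity": that the person must keep track of every pair of stakeholders, and that any subset of stakeholders could form a group with its own reaction. The function names are hypothetical.

```python
from math import comb


def pairwise_relationships(n: int) -> int:
    """Number of distinct stakeholder pairs (n choose 2)."""
    return comb(n, 2)


def possible_groups(n: int) -> int:
    """Number of distinct subsets of n stakeholders, including the empty set."""
    return 2 ** n


for n in (5, 12, 500):
    pairs = pairwise_relationships(n)
    digits = len(str(possible_groups(n)))
    print(f"{n} stakeholders: {pairs} pairs, subset count has {digits} digits")
```

Pairs grow quadratically, which a computer handles easily, while the number of possible groups doubles with every person added. That second kind of growth is the one that overwhelms both human imagination and brute-force computation.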

Stakeholder analysis can use big data as an input. The big data collected from all stakeholders gets processed and analyzed, producing scores that represent the results on a spectrum. These scores can then be used to try to predict or simulate (with varying accuracy) how 5 stakeholders may react (based on their interests), or 10, or 100, but even with computers this will not scale up infinitely. It must be noted that, as far as I know, this is an open problem in computer science: there is no optimal solution for scaling the analysis. Still, this way you get at least some ability to see glimpses of the big picture.
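As a rough illustration of what such scoring could look like, here is a minimal Python sketch. Everything in it is an assumption for the sake of the example: the `Stakeholder` structure, the idea of representing a decision as per-interest impacts between -1 and 1, and the weighted average used to aggregate. It is not a description of any existing system.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Stakeholder:
    name: str
    weight: float                # how much this person's opinion counts (assumed)
    interests: Dict[str, float]  # interest -> how much they care, 0..1 (assumed)


def predicted_reaction(s: Stakeholder, impacts: Dict[str, float]) -> float:
    """Score in [-1, 1]: negative means likely disapproval, positive approval."""
    raw = sum(care * impacts.get(interest, 0.0)
              for interest, care in s.interests.items())
    return max(-1.0, min(1.0, raw))


def aggregate_score(stakeholders: List[Stakeholder],
                    impacts: Dict[str, float]) -> float:
    """Weighted average of the predicted reactions."""
    total_weight = sum(s.weight for s in stakeholders)
    if total_weight == 0:
        return 0.0
    return sum(s.weight * predicted_reaction(s, impacts)
               for s in stakeholders) / total_weight


sister = Stakeholder("sister", 2.0, {"family_time": 1.0, "income": 0.5})
colleague = Stakeholder("colleague", 2.0, {"income": 1.0})
decision = {"family_time": -0.5, "income": 0.5}  # e.g. taking a demanding job

print(predicted_reaction(sister, decision))          # -0.25
print(predicted_reaction(colleague, decision))       # 0.5
print(aggregate_score([sister, colleague], decision))  # 0.125
```

The cost of this particular scheme grows only linearly with the number of stakeholders. The hard part, and the part that does not scale, is obtaining accurate interests and weights for each person in the first place.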

These glimpses can allow moral analysis to take place. That is, if you know something is extremely controversial among your stakeholders, but you also know that doing it will lead to the consequences which are best for the majority of them, then true moral analysis means you can produce numbers to back up that argument. For example, purchasing a certain product or service might not seem to make sense, but if you can show that over a long period of time it will benefit all stakeholders, then you can make this case in a quantifiable way.
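A minimal Python sketch of that kind of argument follows. The numbers, the stakeholder names, and the simple model (a one-time cost followed by a constant benefit per period, with no discounting) are all hypothetical and chosen only to show how a controversial decision can look different over a longer horizon.

```python
from typing import Dict, Tuple


def net_benefit(upfront_cost: float, benefit_per_period: float,
                periods: int) -> float:
    """Cumulative benefit after `periods`, minus the one-time cost."""
    return benefit_per_period * periods - upfront_cost


def share_better_off(effects: Dict[str, Tuple[float, float]],
                     periods: int) -> float:
    """Fraction of stakeholders with a positive net benefit after `periods`."""
    if not effects:
        return 0.0
    winners = sum(1 for cost, gain in effects.values()
                  if net_benefit(cost, gain, periods) > 0)
    return winners / len(effects)


# stakeholder -> (one-time cost to them, benefit to them per period)
effects = {
    "partner": (100.0, 10.0),
    "child": (0.0, 5.0),
    "business_partner": (50.0, 2.0),
    "friend": (20.0, 0.0),
}

print(share_better_off(effects, periods=1))   # 0.25
print(share_better_off(effects, periods=30))  # 0.75
```

After one period only a quarter of the stakeholders are better off, which is why the decision looks controversial. After thirty periods three quarters are, and that is the kind of number that can back up the argument.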

Summary

- Individuals have stakeholders (people who take an interest in the future of that individual).

- An individual's stakeholders may include, but are not limited to, members of the kinship group (this can include friends, colleagues, lovers, immediate family, or complete strangers acting as benefactors, such as on Patreon).

- Individuals, when making a decision, often try to determine how it might impact each of these stakeholders (supporters), because not doing so could hurt their own long term interests.

- The process of trying to imagine how each stakeholder may be affected is computationally expensive. This means it can benefit from formalization and quantified analysis via machines.

- Moral analysis can take place once there is a clear enough picture of each individual within the group of stakeholders. In other words if for example an AI or similar creates a model of a person based on learning how a person tends to react then perhaps simulations are possible based on that model. This is an open area of research as I haven't found anyone specifically studying moral amplification or moral analysis in this way.
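To illustrate the last point, here is a minimal Python sketch of a model "learned" from how one person has reacted in the past. It is a deliberately simple stand-in (a per-topic approval rate with Laplace smoothing), not the kind of AI model the research question would ultimately need, and the class name and topics are hypothetical.

```python
from collections import defaultdict


class ReactionModel:
    """Per-topic approval rate learned from one person's observed reactions."""

    def __init__(self) -> None:
        self._approvals = defaultdict(int)
        self._totals = defaultdict(int)

    def observe(self, topic: str, approved: bool) -> None:
        """Record one past reaction to a decision about `topic`."""
        self._totals[topic] += 1
        if approved:
            self._approvals[topic] += 1

    def approval_probability(self, topic: str) -> float:
        """Laplace-smoothed estimate; a never-seen topic yields 0.5."""
        approvals = self._approvals.get(topic, 0)
        total = self._totals.get(topic, 0)
        return (approvals + 1) / (total + 2)


model = ReactionModel()
for approved in (True, True, True, False):
    model.observe("charity", approved)

print(model.approval_probability("charity"))   # 4/6, about 0.67
print(model.approval_probability("gambling"))  # 0.5, nothing observed yet
```

A simulation in this sense would query one such model per stakeholder for the decision being contemplated. How well the estimates match real reactions is exactly the open question.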

References

Chandrasekhar, A. G., Kinnan, C., & Larreguy, H. (2014). Social networks as contract enforcement: Evidence from a lab experiment in the field (No. w20259). National Bureau of Economic Research.

Cruz, C., Keefer, P., & Labonne, J. (2016). Incumbent advantage, voter information and vote buying (No. IDB-WP-711). IDB Working Paper Series.

Hello @dana-edwards, I saw your post and I like it. Thanks for your post. Please stay with us. Thank you.

I never thought of it/them in these terms but it's obviously true.

The more I share some of my ideas the more I'm getting these sorts of reactions. I'm showing I guess a new kind of moral system, a sort of computational morality which relies on analysis of public opinion. This is the sort of morality that I predict would be successful in a radically transparent environment. Imagine if everyone can see your decisions? Don't you think your primary stakeholders will make some kind of judgment about your decisions? So if your decisions impact them, then shouldn't you bring them into the decision?

Our society is incredibly bizarre and illogical. On the one hand in our society there is this belief in the individual when it is convenient. When it's a moral decision then the individual is expected to make it alone, to take the negative consequences alone, etc. On the other hand if the decision pays off immensely then the group often steps in with: "No individual made it on their own" and so on.

So which is it? If, in the pragmatic sense, there is really no such thing as a self-made individual, then every individual is made up of whomever and whatever influences them. This means you are whoever has influence over you, for better or for worse.

Now look at this here:

Deontic logic. Theoretically, by symbol manipulation, you can easily compute this on a Turing machine. Practically speaking, this is possible on computers, but ideally you would want to do it in an efficient way so it can scale (handle lots of problems in parallel).
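As a toy illustration of that symbol manipulation, here is a minimal Python sketch. It is a heavily simplified, propositional reading of deontic logic: actions are plain strings, an action counts as permitted when it is not forbidden, and there are no modal operators or nested formulas. The `Norms` class and the example actions are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Norms:
    obligatory: FrozenSet[str]
    forbidden: FrozenSet[str]

    def is_permitted(self, action: str) -> bool:
        """Permitted means not forbidden in this simplified reading."""
        return action not in self.forbidden

    def conflicts(self) -> List[str]:
        """Actions that are both obligatory and forbidden (inconsistent norms)."""
        return sorted(self.obligatory & self.forbidden)


def violations(norms: Norms, chosen: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (obligations left undone, forbidden actions taken)."""
    omitted = sorted(norms.obligatory - chosen)
    committed = sorted(norms.forbidden & chosen)
    return omitted, committed


norms = Norms(obligatory=frozenset({"keep_promise"}),
              forbidden=frozenset({"lie"}))

print(norms.is_permitted("donate"))           # True
print(violations(norms, {"lie", "donate"}))   # (['keep_promise'], ['lie'])
```

Each check here is a set operation, so many decisions can be evaluated independently and in parallel. The expensive part is deciding which norms each stakeholder actually holds.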

So I'm trying to relate this to Steemit, and it has always been my contention that members should try, as stakeholders, to manage their behavior to cater to a group of about 20. If you do it right you can scale, because those you manage will in turn become stakeholders in a similar way. While I like to learn from other members and read their posts, I don't concern myself with Steemit as a whole, because I know I can be in control of my successes or failures here.

20 doesn't really scale though. You can't go from 20, to 200, to 2000, because every new person you add, is completely different. Imagine this were chess, and every once in a while a completely new piece is added to the board? Can you know the best moves when new pieces keep being added?

Only if it's those same pieces over and over do you get the opportunity to form patterns, find the really good or really bad positions, etc. Now there are some primary principles of chess, such as controlling the board (positional chess), but in terms of pure computation (number crunching), this doesn't scale. A human can only see a certain number of moves ahead and can only handle a limited scale.

A computer on the other hand with AI can see many many more moves ahead and will always beat the human. At the same time if you keep adding pieces and growing the board then even the computer will not be able to scale to keep up.

yeah I get that part but I think a small group is manageable for something like personal success on steemit. Reaching beyond that dilutes the message and spreads oneself too thin.

Again, a thought-provoking topic and a very deep explanation.

I thought about it as well. Many people try to seem independent of other people's opinions, and they often say something like "I don't care". But in reality it is obvious that they do; they just can't realize it.

Even saying such proud words is also a part of unconscious desire to be mentioned by others.

There's nothing bad in our desire to think about other people's opinions of our actions, but the main thing is that it should not rule us completely. And, of course, we must select the people whose opinions will carry great meaning for us.

If we follow the crowd, we are just a part of it.

This is really interesting! What I don't really get is the step from an analysis of one's network of stakeholders to an analysis of morality. To my understanding, a decision normally ties in a variety of factors, and I don't see how these can be isolated. They might also depend on a matrix of subgroups of one's stakeholders, where sometimes family/friends/colleagues/the public outweigh each other.

The sentiment of your social circle is a factor in moral decision making.

Yes. I have been contemplating the potential to (sort of) "extend" the "Dunbar Number" through technology. To illustrate such potential we can consider our activity here on Steemit. Most of us serious contributors here have built a following of 500+ (often into the 1000s), which is CLEARLY beyond the standard "Dunbar Number". Yet, by using a tool like SteemFollower, we are able to receive a significant amount of data from a sizeable portion of our followers. Those who have enough SP to be able to vote for HUNDREDS of posts per day could be the most illustrative of this concept. For me, there are NUMEROUS people who get my daily upvote SIMPLY because they have gained my trust in terms of quality of post content and frequency. I am also seeing their activity daily. Perhaps with some more long-term metric capacity (the users you upvote regularly graphed on a chart, including the growth of their SP, following, frequency of posts, and frequency of your upvotes of their posts) we COULD begin to reach past the "Dunbar Limit" RIGHT HERE on SteemFollower. Then we could start speculating on higher numbers and how tools could be developed for those higher quantities of data. In other words, we could start such an experiment RIGHT HERE and then scale it. It might be interesting to run this by @mahdiyari to see what he thinks...

I requested this ability to track metrics a while back.